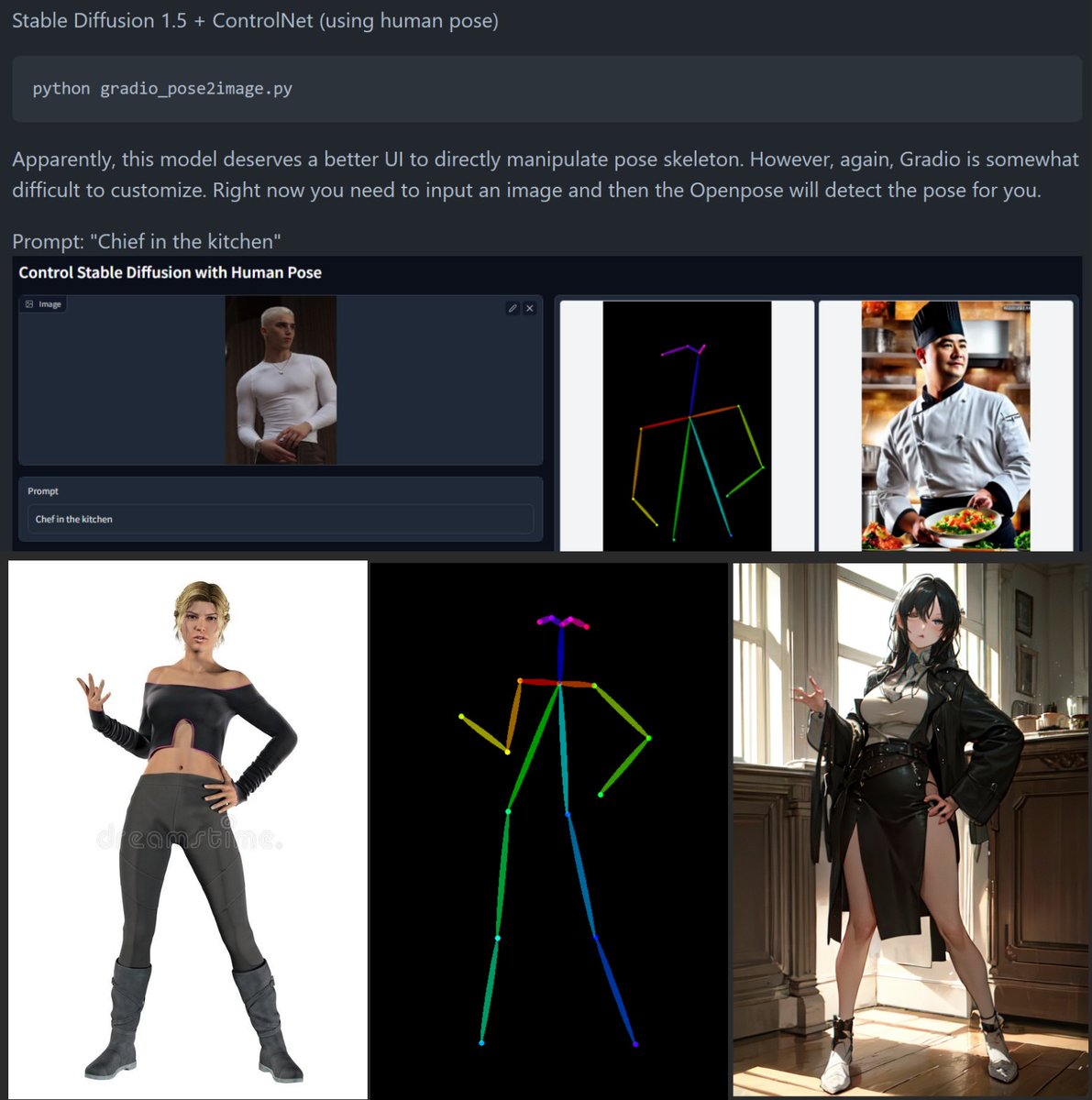

SparseCtrl is a feature added in AnimateDiff v3 that is useful for creating natural motion with a small number of inputs. Let's take a quick look at how to use it!

There are currently two types: RGB and Scribble. If you use a single image, it works like img2vid.

The workflow for SparseCtrl RGB is as follows. For Scribble, change the preprocessor to scribble or line art.

You can also use multiple images to generate the frames between them. In general, Scribble motion is a bit more natural than RGB. You can also use both at the same time.

If you want to use multiple images, you can enter them as a batch. For more than two images, it's convenient to use a custom node like VideoHelperSuite's Load Images. The images are applied in order, spaced evenly from the first frame to the last.
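To get a feel for where each image lands, here is a minimal sketch of even spacing. The function name `spread_uniform` and the rounding rule are assumptions for illustration, not the node's actual code, so the real placement may differ by a frame.

```python
def spread_uniform(num_images: int, num_frames: int) -> list[int]:
    """Return one 0-based frame index per input image, spaced evenly.

    Hypothetical helper: the first image goes on the first frame and the
    last image on the last frame. The real node may round differently.
    """
    if num_images < 1 or num_frames < num_images:
        raise ValueError("need at least one image and at least as many frames as images")
    if num_images == 1:
        return [0]  # a single image sits on the first frame (img2vid-like)
    last = num_frames - 1
    gaps = num_images - 1
    # Integer arithmetic that rounds halves up, avoiding float rounding surprises.
    return [(2 * i * last + gaps) // (2 * gaps) for i in range(num_images)]


if __name__ == "__main__":
    print(spread_uniform(3, 16))  # three images over a 16-frame clip
```

With two images the result is simply the first and last frame, which is the frame-interpolation case.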

This can also be used as a kind of frame interpolation. However, the style will change depending on the model you use, so make sure your input images are generated with the same model to get the desired results.

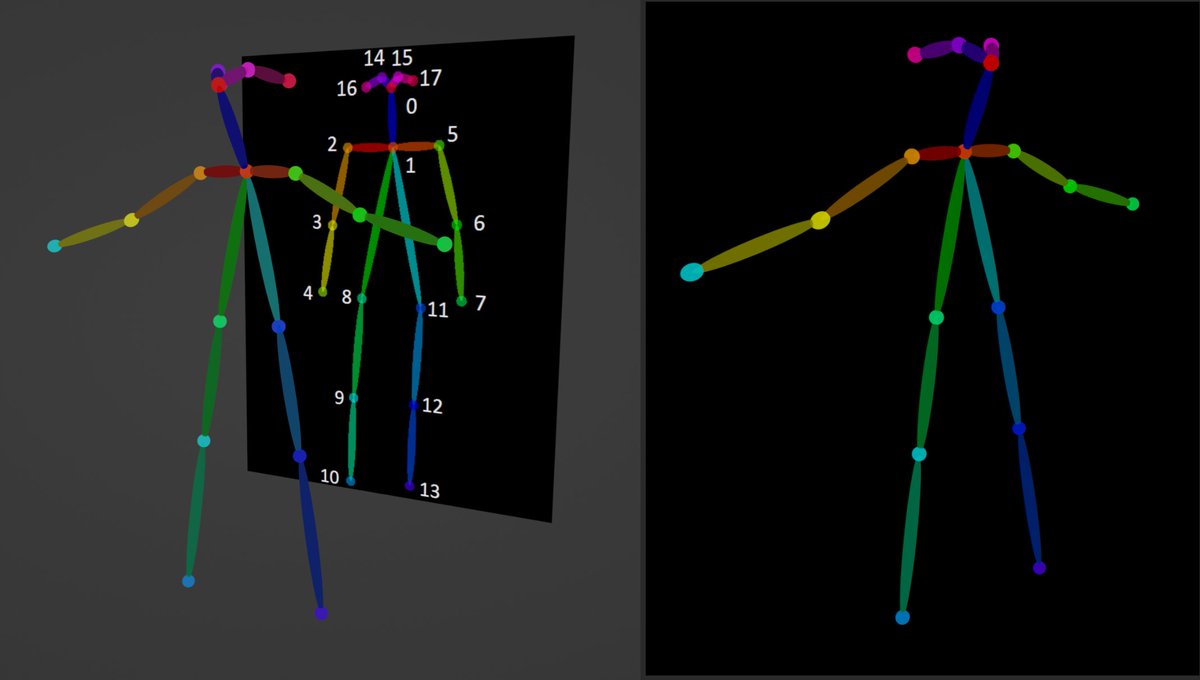

For example, Starting is 1 to 0, Ending is 0 to 1, and so on. Note when the pose in the video matches the input image.

With the Index Method, you specify the frame yourself. If you enter 8, frame 8 will be the most strongly affected.
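As a rough illustration of pinning images to chosen frames, the sketch below pairs a comma-separated list of frame numbers with a batch of images. The helper `assign_frames`, the comma-separated input format, and the 0-based numbering are all assumptions made for this example, not the node's confirmed behavior.

```python
def assign_frames(index_text: str, num_images: int, num_frames: int) -> dict[int, int]:
    """Map frame index -> image number for text such as "0,8,15".

    Hypothetical helper: assumes 0-based frame indices and one index
    per input image, given in the same order as the image batch.
    """
    indexes = [int(part) for part in index_text.split(",") if part.strip()]
    if len(indexes) != num_images:
        raise ValueError("need exactly one frame index per image")
    if len(set(indexes)) != len(indexes):
        raise ValueError("frame indices must be unique")
    for idx in indexes:
        if not 0 <= idx < num_frames:
            raise ValueError(f"frame index {idx} is outside the clip")
    # Image 0 goes to the first listed frame, image 1 to the second, and so on.
    return dict(zip(indexes, range(num_images)))


if __name__ == "__main__":
    print(assign_frames("0, 8, 15", num_images=3, num_frames=16))
```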
