Direct or explicit instruction seems to be widely misunderstood. It's often characterised as boring lecturing with little interaction that fails to cater to the needs of all students. Nothing could be further from the truth. A short thread 🧵⬇️

Direct Instruction (DI) as a formal method was designed by Siegfried Engelmann and Wesley Becker in the 1960s for teaching core academic skills. It is a structured, systematic approach that emphasises carefully sequenced materials delivered in clear, unambiguous language with examples.

It's designed to leave little room for misinterpretation and to ensure that all students, regardless of background or ability, can learn effectively.

It's also anything but boring. Here is a video from the 1960s of Engelmann teaching Maths. Notice how interactive and fast-paced the teaching is:

In the 1970s, Barak Rosenshine researched what makes for high-quality teaching. He found that really effective teachers use direct instruction (di) as a core part of their practice, and that it's about a lot more than merely explaining things ⬇️

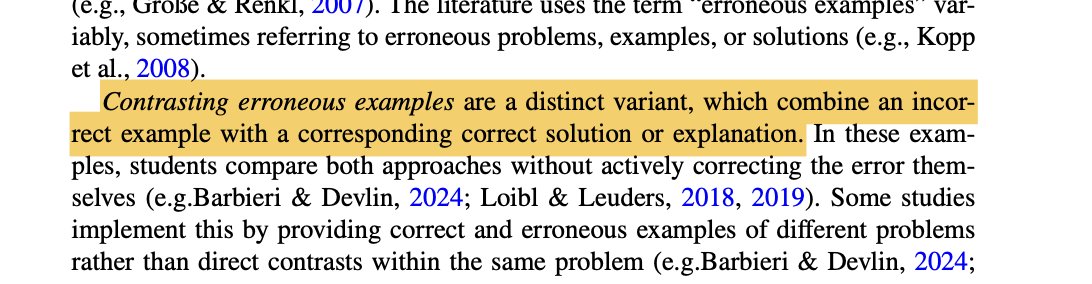

In the 1980s, Brophy and Good looked at the relationship between teacher behaviours and student achievement. They found that explicit instruction was an integral part of effective teaching and that it was, in fact, a form of active teaching. They write that although there is a lot of teacher talk, most of it is "academic rather than procedural or managerial and much of it involves asking questions and giving feedback rather than extended lecturing." edwp.educ.msu.edu/research/wp-co…

In the early 2000s, Explicit Direct Instruction (EDI) was developed by Silvia Ybarra and John Hollingsworth and, despite its harsh-sounding name, is very interactive.

Something which will probably shock most teachers is that Explicit Direct Instruction suggests that teachers talk for a maximum of two minutes before engaging students in some way ⬇️

One major misconception is the claim that "direct or explicit instruction marginalises SEN pupils." This is completely untrue; in fact, the opposite is probably closer to the truth. The Education Endowment Foundation (EEF) recommended explicit instruction as a core part of its ‘Special Educational Needs in Mainstream Schools’ guidance report.

What is the evidence base for direct or explicit instruction?

Well, there's a lot, but let's take the unfortunately named Project Follow Through (initiated in 1968 and extended right through to 1977), which was the largest and most comprehensive educational experiment ever conducted in the US. Its primary goal was to determine the most effective ways of teaching at-risk children in kindergarten through third grade.

The results indicated that Direct Instruction was the most effective model across a range of measures, including basic skills, cognitive skills, and affective outcomes.

Two things I find astounding about Project Follow Through:

1. Not only did these students (mostly disadvantaged and at-risk) do better on what were termed 'basic skills', such as reading and maths, but they also felt better about themselves.

2. Many educationalists and academics not only ignored these results but actually encouraged schools to use the least effective methods from this study. As Cathy Watkins puts it: "The majority of schools today use methods that are not unlike the Follow Through models that were least effective (and in some cases were most detrimental)."

nifdi.org/research/esp-a…