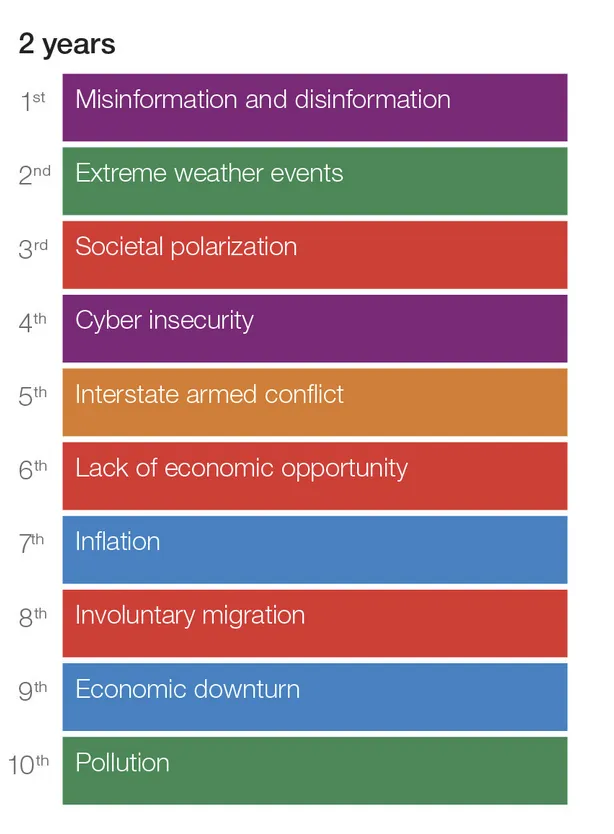

Last week the World Economic Forum published its 'Global Risk Report' identifying misinformation and disinformation as the *top global threats over the next two years*. In this essay I argue its ranking is either wrong or so confused it's not even wrong: conspicuouscognition.com/p/misinformati…

Some background: Since 2016 (Brexit and the election of Trump), policy makers, experts, and social scientists have been gripped by panic about the political harms of disinformation and misinformation. Against this backdrop, the World Economic Forum's ranking is not surprising.

Responses to the ranking were polarised. It's fair to say that @NateSilver538 👇, who linked my essay in support of his view, was not a fan. conspicuouscognition.com/p/misinformati…

Understandably, many leading misinformation researchers disagreed with Silver's assessment. They argued that misinformation *is* the top global threat because it causes or exacerbates all other threats, including war.

In my essay, I argue that on a narrow, technical understanding of dis/misinformation, the ranking seems completely wrong, even bizarre. There is simply no good evidence that dis/misinformation, so understood, is a great threat, let alone the greatest.

In response to this, one might argue that this conclusion only follows from an overly strict definition of dis/misinformation. There is no doubt that many of humanity's problems arise in large part because people don't have good, accurate models of reality.

Maybe, then, we can just use the term 'misinformation' to refer to whatever factors cause people - whether ordinary people or those in positions of power - to have bad beliefs and make bad decisions.

As attractive as this line of reasoning is, I argue that it is ultimately confused. It is, in the famous put-down of physicist Wolfgang Pauli, "not even wrong". There are three basic reasons for this:

1. Once we understand 'misinformation' to include subtle ways in which narratives, ideologies, and systems bias people's priorities and perceptions of reality, experts and elites at Davos are definitely not in a privileged position to identify misinformation.

2. Using labels like 'dis/misinformation' to refer to the extremely complex set of psychological, social, political, and institutional factors that cause people to hold bad beliefs and make bad decisions does not help us to understand the world or address global threats.

3. On extremely expansive understandings of the terms 'dis/misinformation', they are chronic features of the human condition and politics, and there is no reason to think they have gotten worse in recent years as a result of social media or AI. In some ways, they've improved!

That's all from me. Thanks for reading this far. You can subscribe to my weekly essays here: conspicuouscognition.com
