Philosopher, University of Sussex. Tweets in personal capacity. Interested in: Philosophy, Psychology, Society. Writes at: https://t.co/MniDhzFnow

Why does it matter if claims about a "censorship industrial complex" are true or false? Because these accusations are being used to justify Trump's Big Lie about 2020 election fraud and paint Democrats as the "real threat to democracy." (2/8)

https://twitter.com/TheAtlantic/status/1844531480522788992

All supported by a mix of anecdata, baseless speculation, alarmism, and the implicit assumption that exposure to misinfo = belief. Also, as part of its supporting evidence, it links to a tweet with ... 5 likes, where most of the comments are telling the person what an idiot they are.

https://twitter.com/DrJBhattacharya/status/1841309400813985815

I've written multiple pieces criticising censorship, as well as bad content moderation policies by social media companies (which is really a different thing). And I agree Dems are naive and often bad on these issues.

#1: 'Strange Bedfellows: The Alliance Theory of Political Belief Systems' by @DavidPinsof, David Sears, and @haselton. A brilliant, evolutionarily plausible, parsimonious (and deeply cynical) theory of political belief systems. 2/6

tandfonline.com/doi/full/10.10…

First, some context. A few weeks ago I published an essay 👇 arguing that misinformation research confronts a dilemma when it comes to answering its most basic definitional question: What *is* misinformation? 2/15

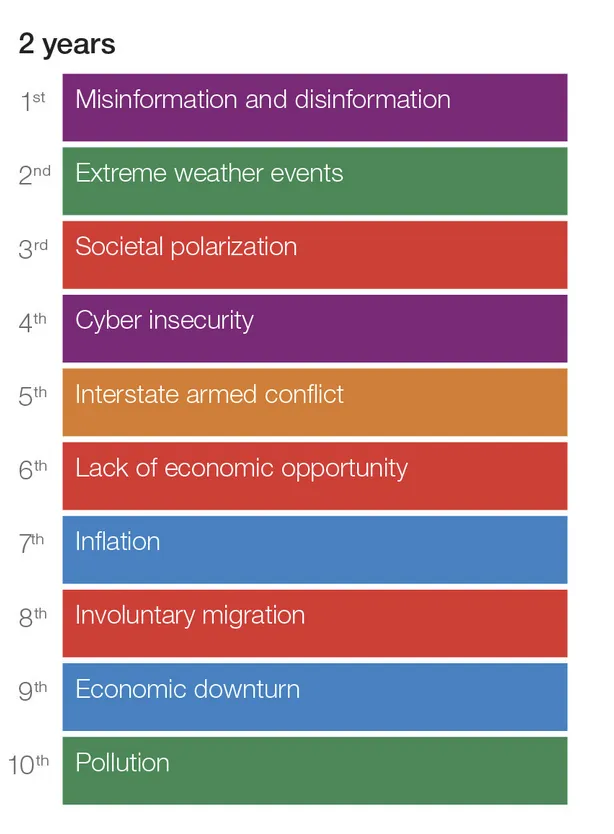

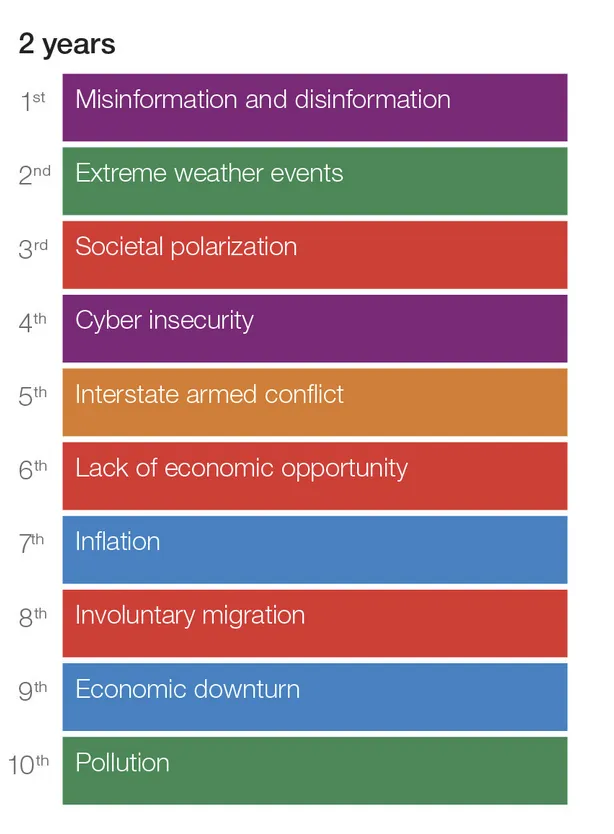

Some background: Since 2016 (Brexit and the election of Trump), policy makers, experts, and social scientists have been gripped by panic about the political harms of disinformation and misinformation. Against this backdrop, the World Economic Forum's ranking is not surprising.

The blog is an experiment. I like writing, I like publicly engaging with ideas and debates, and now that I have a permanent academic job I can express and argue for controversial takes (which I will be doing) with less fear of the consequences. 2/7

https://twitter.com/Sander_vdLinden/status/1698059698514092391

First, it's important to be clear about which claim is in dispute. If emotionality is a fingerprint of misinformation, then misinformation must, on average, exhibit higher rates of emotionality than reliable information. That's a striking claim, which, if true, has important implications.

https://twitter.com/TuckerCarlson/status/1656037032538390530

This information isn't difficult to find. A quick Google search would suffice. In ordinary life, if you discovered that someone (a friend, a colleague) had engaged in that level of extreme, psychopathic, self-interested deception, you would lose all trust in them.

And I think the more likely explanation for why some people are optimistic about AI is that they are persuaded by its potential. I am also sceptical that training AI on existing human knowledge constitutes "the most consequential theft in human history."

https://twitter.com/danwilliamsphil/status/1568956062543265792

I *don't* think most people are ignorant when it comes to answering these questions, or that only an elite minority knows (e.g.) how many moons our planet has. As I said (in fact, the *first* thing I said) in the thread: I think these answers are *obviously cherry-picked*. (2/7)

https://twitter.com/BomsteinRick/status/1568613300610662401

First, being informed about pointless abstract facts about the world is a status symbol among highly-educated exam takers who constitute society's elite. Most people don't care, and have way more important things going on in their lives to bother acquiring this knowledge. (2/5)