In today's #vatniksoup, I'll talk briefly about the Community Notes system and why it doesn't work. I've previously stated that the Community Notes mechanism operates on "mob rule" and can easily be gamed by big accounts and troll farms.

1/15

Community Notes is a community-driven content moderation program, intended to provide informative context based on a crowd-sourced voting system. As of Nov 2023, this system had over 130 000 contributors.

2/15

The idea of a crowd-sourced moderation tool did not come from Elon: it had already been announced back in 2020, when it was called Birdwatch. Musk later rebranded the system as Community Notes and marketed it as something new.

3/15

Vitalik Buterin (@VitalikButerin) has written a very extensive (and technical) analysis of the tool and of the Community Notes algorithm as a whole.

I disagree with him on some points, but I strongly recommend that everyone read it:

vitalik.eth.limo/general/2023/0…

4/15

Also, focusing the analysis only on the algorithm and its technical aspects oversimplifies the concept, as it leaves out the most important variable: the human factor.

People are prone to bias, and disinformation tends to spread much more aggressively than the truth.

5/15

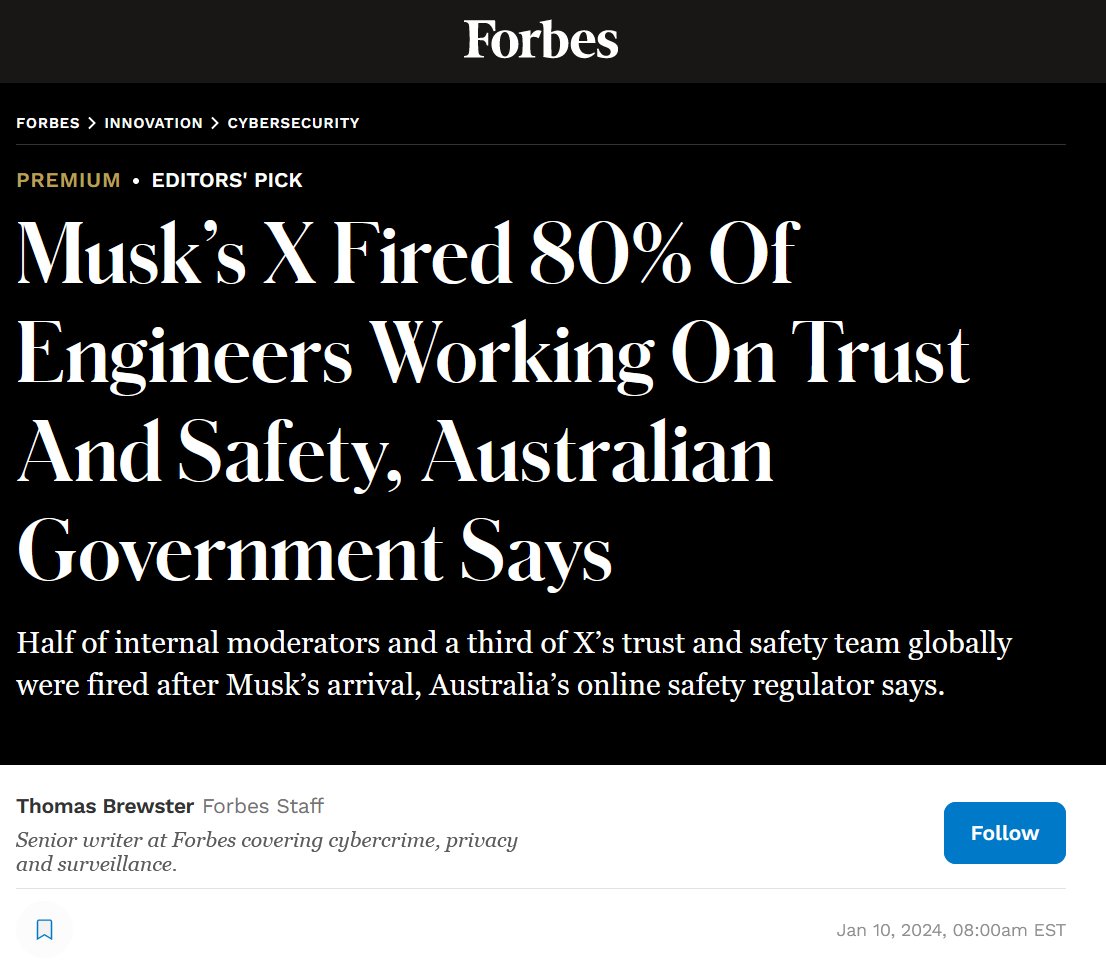

Twitter's former head of safety, Yoel Roth, has stated that the system was never intended to replace the curation team, only to complement it. But all of this, of course, changed after Elon sacked everyone from Twitter's Trust and Safety team to save money.

6/15

These layoffs have resulted in long response times to hate speech reports: X's handling of hateful direct messages has slowed down by 70%.

As of today, the company doesn't have any full-time staff solely dedicated to hateful conduct issues globally.

7/15

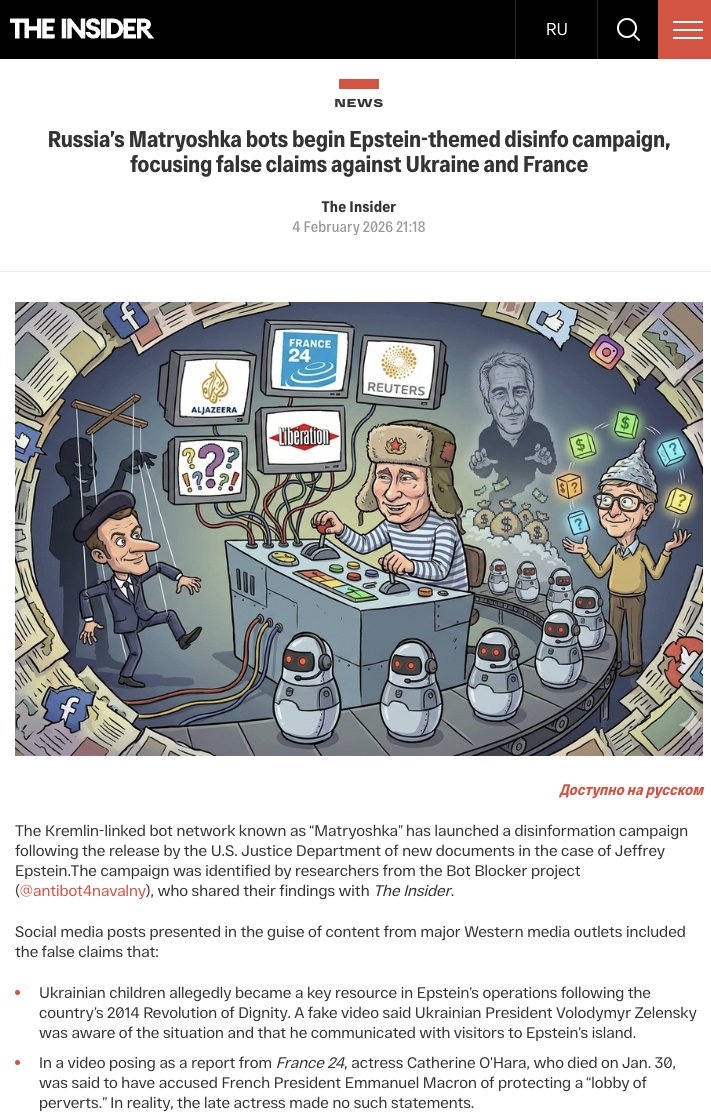

Some Community Notes contributors (who are also NAFO activists) have claimed that the system is riddled with coordinated manipulation, infighting and a lack of oversight from the platform. Also, many contributors engage in conspiracy-fueled discussions.

8/15

The Notes system also has a huge scalability problem. During events like the 7 Oct 2023 Hamas terrorist attack, the amount of disinformation grows so large that it's simply impossible for the small community of contributors to keep up and check the factuality of that content.

9/15

Analysis by NewsGuard showed that the most popular disinformation posts related to the Israel-Hamas war (not so surprisingly originating from serial liars like @jacksonhinklle, @drloupis and @ShaykhSulaiman) failed to receive Community Notes 68% of the time.

10/15

These big accounts can also fight the Notes they receive by mobilizing people who support their views. In the most tragicomic instance, @elonmusk claimed, without any evidence, that a Community Note on his post was "gamed by state actors".

11/15

Other than being humiliated and ridiculed, getting Community Noted doesn't really have any major downsides. Noted posts don't earn you revenue, and advertisers can decide whether they want to show ads on accounts like @dom_lucre's, but most of these...

12/15

...so-called superspreader accounts make most of their income through other means, namely through X's subscription system. Also, many of them, including @stillgray and (allegedly) @jacksonhinklle, are employed by state actors like Russia and the CCP.

13/15

With accounts that post tens or hundreds of posts a day, Notes are also ineffective: while the community is trying to put a note on a post that's clearly disinformation, there are already 10 or 20 new ones replacing it in the algorithm.

14/15

To conclude, Community Notes is a slow, non-functional mechanism that's desperately trying to replace the Trust and Safety team. It works on a "mob rule" basis, and big enough accounts (including the owner of the platform) can game the system.

15/15

All soups:

Find us also on other socials:

vatniksoup.com

instagram.com/vatniksoup/

youtube.com/@TheSoupCentra…