In today's #vatniksoup, I'll talk about the current state of generative AI (GAI) and how it will affect propaganda & disinformation in the near future. GAI is developing so fast that it's very difficult to keep track of what's going on, but here's the latest.

1/18

Generative AI is a technology capable of generating text, images, videos, or other data using generative models, often in response to prompts. Examples of GAI include platforms like Midjourney, DALL-E, and ChatGPT, as well as all those chatbots you find on every service desk these days.

2/18

In the last two years, GAI platforms, especially those that generate images, have become much more powerful. Below, for example, is a comparison of content generated with different versions of Midjourney: v1 was released in Feb 2022, and v6 came out in Dec 2023.

3/18

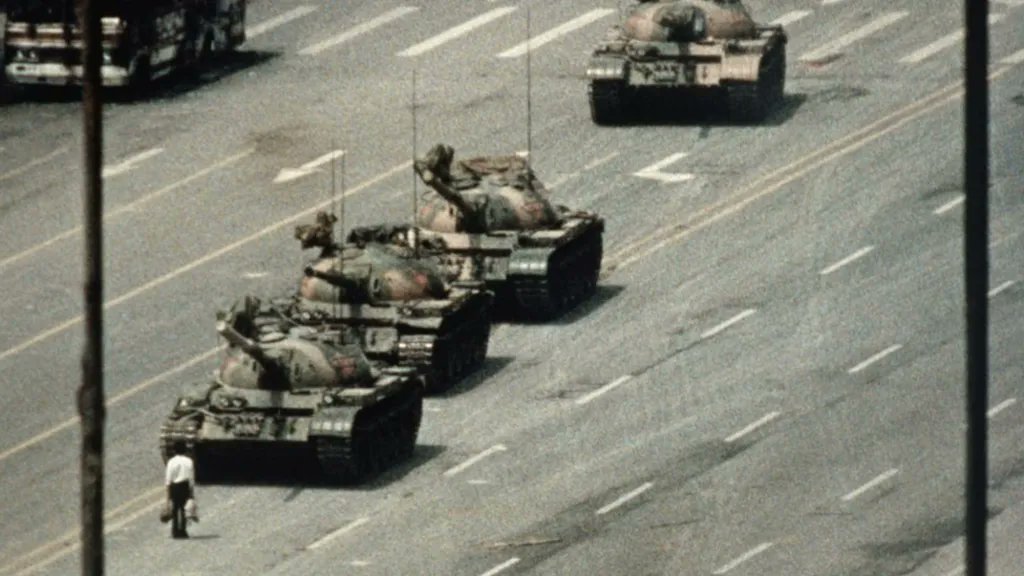

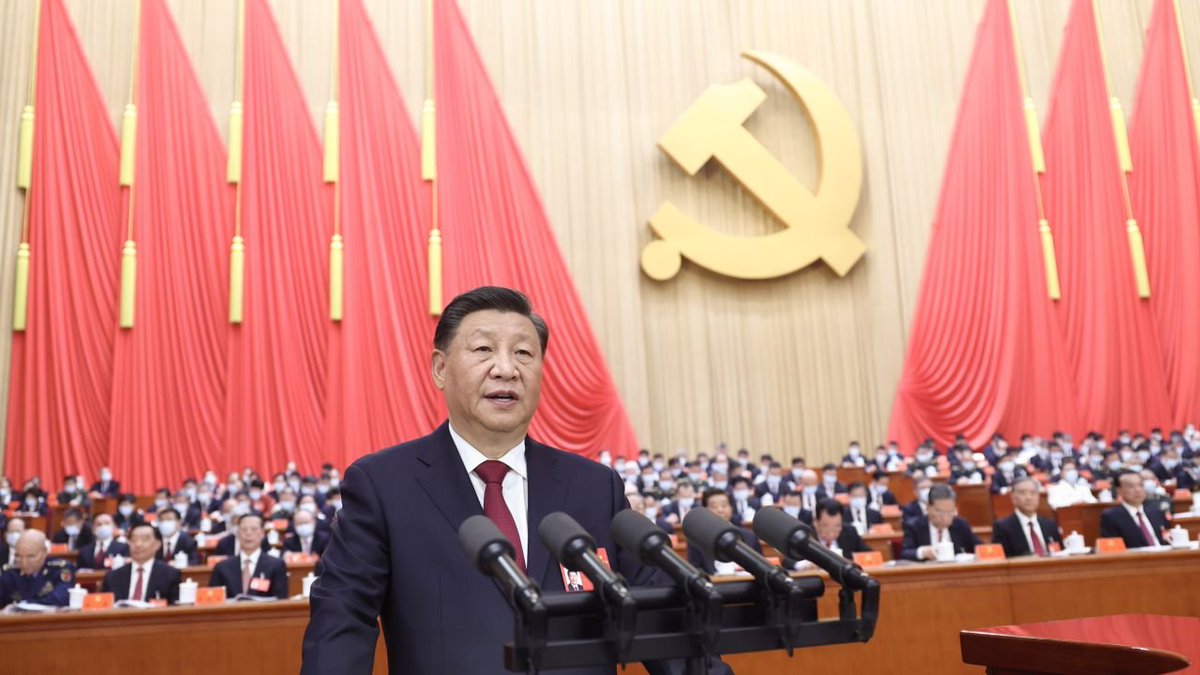

In the context of the Russo-Ukrainian War, there have been multiple examples of GAI content produced as anti-Ukrainian propaganda.

A few weeks after the full-scale war started, a crude and badly made Zelenskyy deepfake surfaced on social media.

4/18

There was also a big push to portray Zelenskyy as a cocaine addict. This was done by cutting and splicing parts of his interviews, but a video was also published that showed "white powder" on his desk. The video, of course, was edited and fake.

5/18

In Nov 2023, a deepfake video depicting then Commander-in-Chief Valerii Zaluzhnyi made the rounds on Telegram. In the fake video, he declared Zelenskyy an "enemy of the state" and called on people to "deploy their weapons and enter Kyiv".

6/18

Another deepfake video was published in Feb 2024. In it, deepfake audio of Texas governor Greg Abbott claims that Joe Biden should "deal with real internal problems" and not "play with Putin, from whom he needs to learn how to work for national interests".

7/18

A more recent example was published in Mar 2024, shortly after the Crocus City Hall terrorist attack. This deepfake video combined two interviews with Oleksiy Danilov and used deepfake audio dubbed over the footage.

8/18

At the beginning of the war, when only a tiny amount of information and media was coming from the front lines, people shared a lot of video game footage and videos from previous conflicts. The same phenomenon was evident after the 7 October terrorist attack.

9/18

Deepfake content is also used to sway voters at the polls. In the 2023 Slovak parliamentary election, deepfake audio was used to fabricate a phone call about "rigging the elections" and raising the price of beer. The latter may have even swung the elections!

10/18

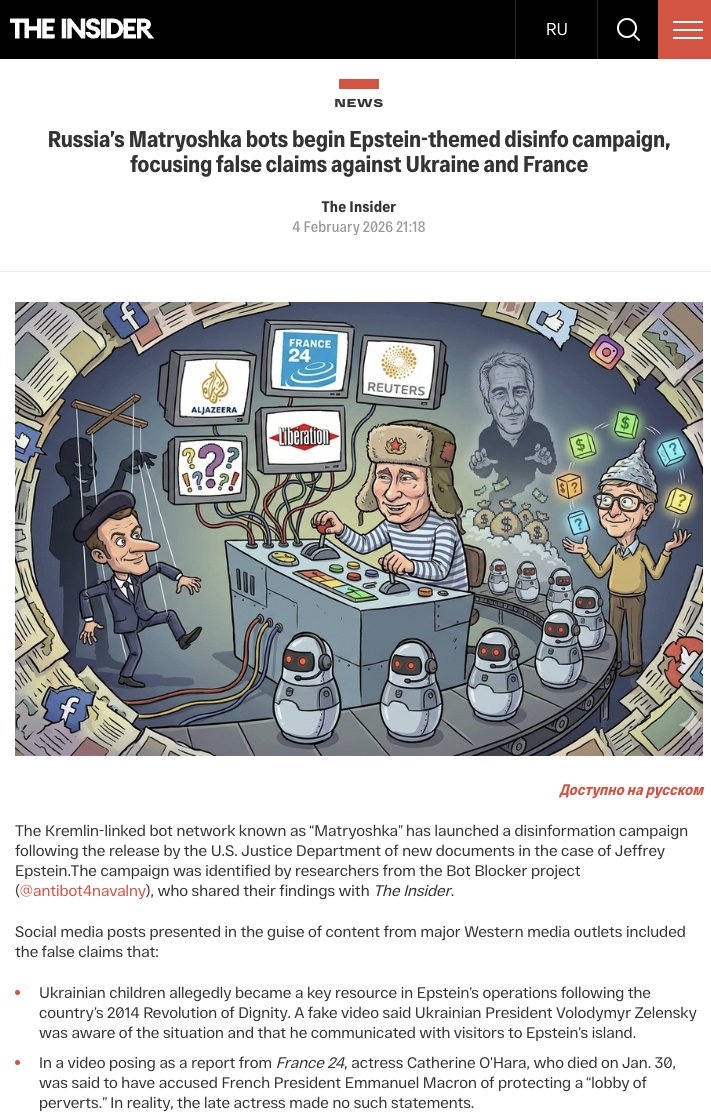

GAI is also used to generate text. One example is the recently launched fake news blog London Crier. According to @eliothiggins, most of the content on this site is stolen from other sites & then rewritten by AI, with occasional hand-written fake news thrown into the mix.

11/18

Most of the examples above are crude and not very convincing. Next, let's look at what can be done today with state-of-the-art GAI technologies. For example, here's a video produced with Sora, a GAI video platform created by OpenAI.

12/18

Here's another example, made by TikTok user @tech.bible. The AI needed just 30 seconds of webcam footage and audio to create this completely fake video. It's already pretty convincing, and this is just the start.

13/18

Generative AI can also be used to create music. Here's a song titled "Diapers and Deception (Fuck Vladimir Putin)", an electronic deep house song with vocals that took me around 15 seconds to make. As you can hear, it's a banger and a certified summer hit.

14/18

Below are photorealistic examples made with the latest version of Midjourney (v6). I, for one, have a hard time telling that these images were generated by AI. Many platforms are also figuring out how to overcome the trickier parts, like generating human hands.

15/18

Deepfakes, robocalls, and other techniques are already being used for nefarious purposes. For example, fake robocalls impersonating Joe Biden were used to sway voters in New Hampshire, and a finance worker transferred 25 million USD after a video call with a deepfaked boss.

16/18

To conclude, GAI is developing FAST, and it will change our attitude towards information & disinformation. In the near future, it will be extremely difficult to tell real content from fake. People will also have a hard time believing anything at all.

17/18

Finally, I recommend that everyone follow @Shayan86 and @O_Rob1nson, as they do the best fact-checking and debunking of fake content.

18/18