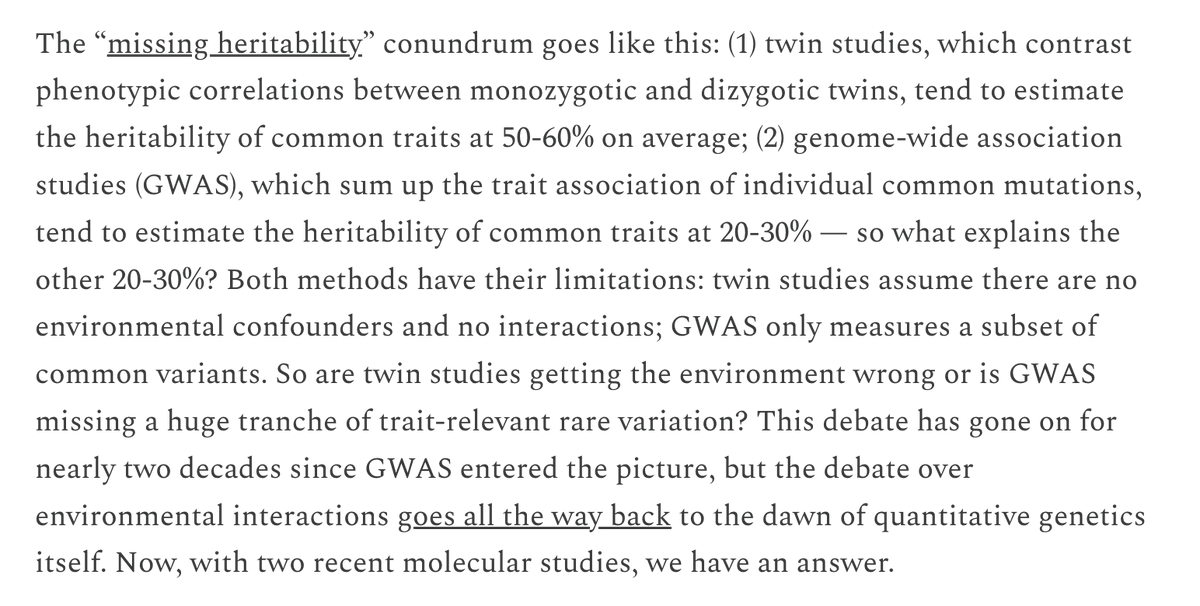

I've written the first part of a chapter on the heritability of IQ scores, focusing on what IQ is attempting to measure. I highlight multiple paradoxical findings demonstrating that IQ is not just "one innate thing".

I'll summarize the key points here. 🧵 gusevlab.org/projects/hsq/#…

First, a few reasons to write this. 1) The online IQ discourse is completely deranged. 2) IQists regularly invoke molecular heritability as evidence for classic behavioral genetics findings while ignoring the glaring differences (ex: from books by Ritchie and Haier/Colom/Hunt).

Thus, molecular geneticists have been unwittingly drafted into reifying IQ even though we know that every trait is heritable and behavior is highly environmentally confounded. 3) IQ GWAS have focused on crude factor models that perpetuate the "one intelligence" misconception.

So what is an IQ test and the "g" factor? In short, test takers are asked questions related to pattern matching, memory, verbal/numeric reasoning, and general knowledge (some examples below). The weighted average of their scores is then the IQ score.

It turns out people who do poorly on one test tend to do poorly on other tests, which produces weak correlations known as the "positive manifold". These correlations can be summarized with factor analysis, producing a "general" factor (g) that explains 25-45% of total variance.

There's nothing special about the g score: it's just the IQ score computed with a different weighting (the "g loadings", more on these later).
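To make the "different weighting" point concrete, here is a minimal sketch on toy data. Everything in it is an assumption for illustration: the simulated subtest scores come from an arbitrary generator chosen only to yield positively correlated columns, and the first principal component of the correlation matrix stands in for a fitted factor model's g loadings.

```python
import numpy as np

rng = np.random.default_rng(0)
n_people, n_tests = 5000, 6

# Toy data (an assumption): nonnegative mixing weights plus noise,
# chosen only so that the subtests end up positively correlated.
mixing = rng.uniform(0.0, 1.0, size=(n_tests, 3))
scores = rng.standard_normal((n_people, 3)) @ mixing.T
scores += rng.standard_normal((n_people, n_tests))

# Standardize each subtest to mean 0, standard deviation 1.
z = (scores - scores.mean(axis=0)) / scores.std(axis=0)

# Leading eigenvector of the correlation matrix as a stand-in for g loadings.
corr = np.corrcoef(z, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(corr)      # eigenvalues in ascending order
loadings = eigvecs[:, -1]
loadings = loadings * np.sign(loadings.sum())  # eigenvector sign is arbitrary
variance_share = eigvals[-1] / eigvals.sum()

iq_like = z.mean(axis=1)  # equal weights on every subtest
g_like = z @ loadings     # same subtests, loading-based weights

agreement = np.corrcoef(iq_like, g_like)[0, 1]
print(f"variance share of leading component: {variance_share:.2f}")
print(f"correlation between the two composites: {agreement:.3f}")
```

Both composites are weighted sums of the same standardized subtests, so in this toy setup they rank people almost identically; only the weights differ.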

But what could explain these correlations? In fact, many different theories can produce the same exact positive manifold and g patterns:

For example, Thomson's "sampling" theory, where IQ subtests sample from a large number of partially overlapping processes, can produce exactly the pattern of test correlations, leading factor, and factor loadings observed in the UK Biobank. Even though no actual g exists!
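A minimal simulation of the sampling idea, as a sketch under stated assumptions: the number of processes, the 30% sampling rate, and the unit weights are all hypothetical choices, and this is not the UK Biobank analysis. Each simulated person has many statistically independent processes, and each subtest simply sums a random subset of them.

```python
import numpy as np

rng = np.random.default_rng(1)
n_people, n_processes, n_tests = 5000, 200, 8

# Independent elementary processes: no common cause is built in.
processes = rng.standard_normal((n_people, n_processes))

# Each subtest taps a random ~30% of the processes (hypothetical rate),
# so any two subtests share some processes by chance.
taps = rng.random((n_tests, n_processes)) < 0.3
test_scores = processes @ taps.T.astype(float)

test_corr = np.corrcoef(test_scores, rowvar=False)
off_diagonal = test_corr[~np.eye(n_tests, dtype=bool)]

# Share of total variance captured by the leading eigenvalue
# (the trace of a correlation matrix equals the number of tests).
top_share = np.linalg.eigvalsh(test_corr)[-1] / n_tests

print(f"smallest between-test correlation: {off_diagonal.min():.2f}")
print(f"variance share of leading component: {top_share:.2f}")
```

All between-test correlations come out positive and a sizeable leading component appears, purely because the subtests overlap in which processes they sample.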

Or mutualism theory, where underlying processes interact dynamically over time, together with environmental inputs, to produce an apparent positive manifold. Again, g is not a causal variable, but an emergent statistical byproduct of these mutualistic relationships.
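The mutualism idea can also be sketched in a few lines. The specific form below (coupled logistic growth with a uniform positive coupling matrix, integrated with simple Euler steps) and every parameter value are assumptions for illustration, not a model taken from the chapter. Each ability grows toward its own independently drawn capacity while being boosted by the current level of the other abilities.

```python
import numpy as np

rng = np.random.default_rng(2)
n_people, n_abilities = 2000, 8

# Independent capacity limits per person and ability (no shared cause).
capacity = np.clip(rng.normal(3.0, 0.5, size=(n_people, n_abilities)), 0.5, None)

growth_rate = 1.0                                       # hypothetical
coupling = np.full((n_abilities, n_abilities), 0.08)    # hypothetical strength
np.fill_diagonal(coupling, 0.0)                         # no self-coupling

ability = np.full((n_people, n_abilities), 0.05)  # everyone starts low
dt = 0.05
for _ in range(2000):
    # Logistic growth toward each ability's own capacity.
    logistic = growth_rate * ability * (1.0 - ability / capacity)
    # Mutualistic boost from the current level of the other abilities.
    boost = growth_rate * ability * (ability @ coupling.T) / capacity
    ability = ability + dt * (logistic + boost)

mask = ~np.eye(n_abilities, dtype=bool)
capacity_corr = np.corrcoef(capacity, rowvar=False)[mask]
ability_corr = np.corrcoef(ability, rowvar=False)[mask]

print(f"largest |correlation| among capacities: {np.abs(capacity_corr).max():.2f}")
print(f"smallest correlation among final abilities: {ability_corr.min():.2f}")
```

The capacities are uncorrelated by construction, yet the grown abilities are all positively correlated: the positive manifold emerges from the interactions rather than from any shared underlying variable.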

Finally, g/factor theory, where we treat the latent factors as measures of the true causal process itself. One factor doesn't fit the data well, so all sorts of more complex factor models have been proposed, including a synthesis of sampling + factors in Process Overlap Theory.

I'm stressing the fact that many different theories fit the data in part because a causal/biological "g" is often taken as a given. But also because getting the theory right is critical to understanding what is actually being measured, test bias, and effective tests.

Ok, I promised paradoxical findings so here are five:

1) What are the weights used to compute g? They are highly correlated with how culturally specific a given subtest is (e.g. high for vocab, low for digit memory). So g is just IQ rescaled to emphasize cultural knowledge.

2) Ability + Age differentiation: The *highest* test correlations are among individuals with the *lowest* IQ, yet test correlations also increase with age. So IQ is measuring something different at the low/high ends and is also dynamic through development.

3) There is no "Matthew effect": In study after study, individuals with higher starting IQ do not acquire knowledge/skills faster -- in fact they converge! IQ also can't predict cognitive decline. This means IQ is not a measure of "processing speed" but of baseline knowledge.

4) Socioeconomic status (SES), in contrast, *is* associated with divergence: No SES/IQ differences are observed in kids at 10 months, yet low SES kids were 6 pts behind at age 2, and 15-17 pts (>1SD) behind by age 16. Thus IQ is confounded by SES from the start and throughout.

5) The Flynn Effect: Mean IQ in the population has been increasing over generations, with some studies showing the increase happening on more g/culture loaded subtests and among lower scorers and even within families. Environmental/cultural factors thus reshape IQ over time.

Clearly multiple dynamic phenomena are at play, so how does this fit with theory? In fact, longitudinal data strongly support mutualism, with gains in one cognitive domain translating into gains in other domains. This has recently even been shown in RCTs [Stine-Morrow et al. 2024].

The same is observed in cross-sectional analyses, where mutualist/network models consistently fit IQ data better than factor models. [Knyspel + Plomin 2024] even applied networks to twin data and showed reversed relationships between twin "heritability" and g loading!

Finally, while neuroscience suffers from the same construct validity issues as IQ research, the one consistent finding is that there is no "neuro g": g correlates with many different structural/functional patterns, is better explained by network models, and does not replicate.

In short, IQ is indexing a bundle of different and often confounded processes, including individual and cultural shifts. IQ/g scores should be modeled as a dynamic network with environmental interactions, and there's absolutely no reason to treat them as "real" or "innate".

Now that we have a handle on what we are/aren't estimating with IQ, next time I will discuss the heritability and molecular genetic findings. My previous thread on the heritability of Educational Attainment is linked below. /x

https://twitter.com/SashaGusevPosts/status/1740840759279095908
