In today’s #vatniksoup, I’ll discuss social media superspreaders. Because they are so effective, superspreader accounts are often used to spread "low credibility" content, disinformation and propaganda, and increasingly this is done by hostile state actors such as Russia.

1/14

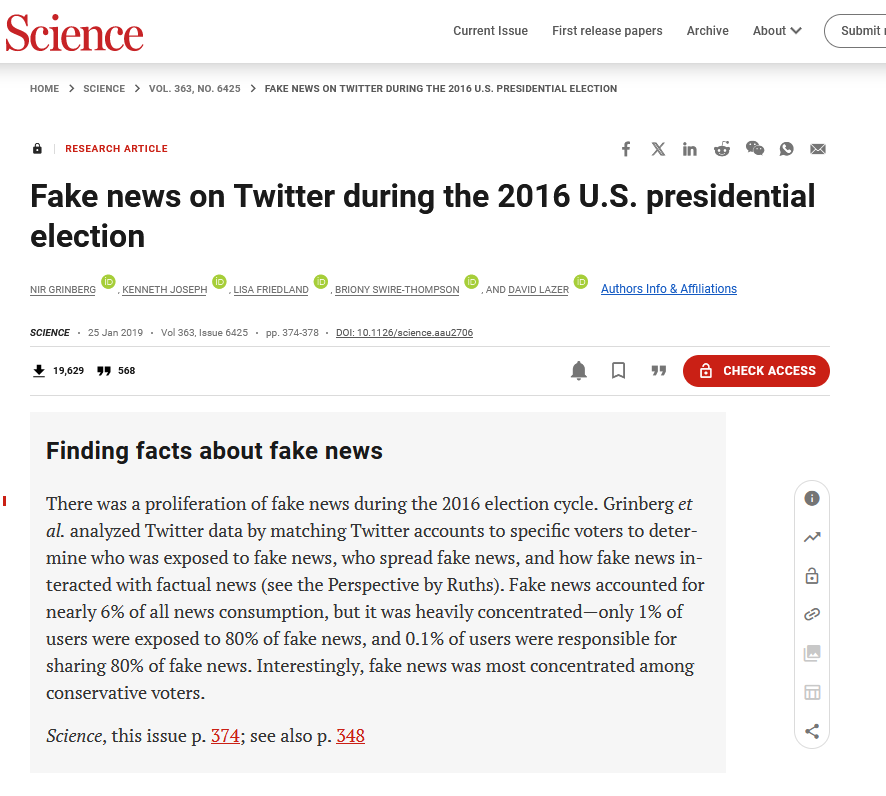

DeVerna et al. (2024) described superspreaders as "users who consistently disseminate a disproportionately large amount of low-credibility content," also known as bullshit. It’s worth noting that some of these people may actually believe the lies they spread.

2/14

The numbers behind these accounts are astonishing: a study by Grinberg et al. (2019) found that 0.1% of the Twitter users in their sample were responsible for sharing approximately 80% of the mis/disinformation related to the 2016 US presidential election.

3/14

The same applies to COVID-19-related disinformation: just 12 accounts, which researchers referred to as the "dirty dozen", produced 65% of the anti-vaccine content on Twitter. The most famous member of this group is presidential candidate RFK Jr.:

4/14

https://twitter.com/P_Kallioniemi/status/1658079214447337475

These accounts are frequently amplified by troll and bot farms, many of them state-sponsored. Inorganic amplification can make the content seem more attractive to regular people through massive numbers of likes and shares, a technique based on basic behavioral science.

5/14

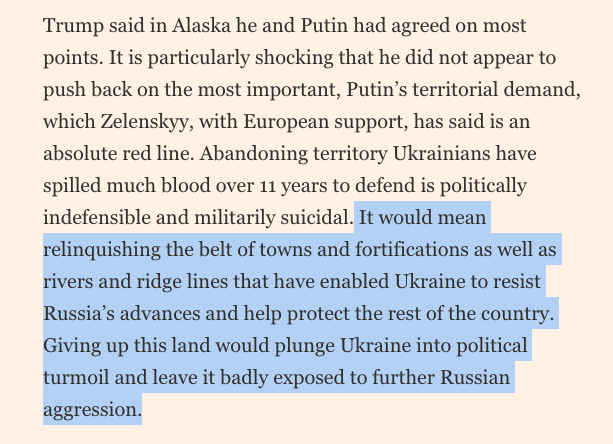

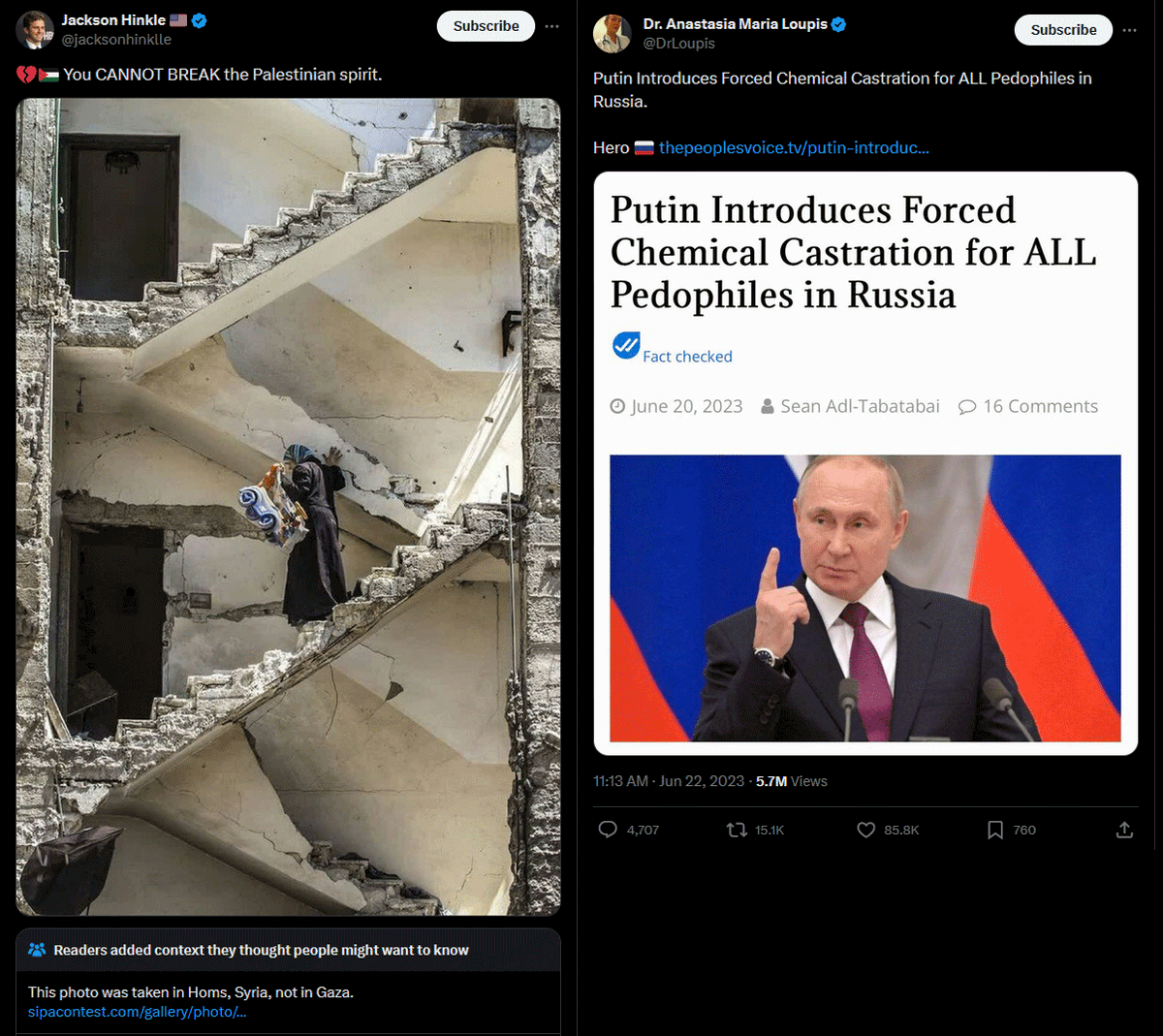

When it comes to geopolitics, and especially the situation in Ukraine, we can easily name a few of the most prominent superspreader accounts that have no interest in the truth: Jackson Hinkle, Kim Dotcom, Ian Miles Cheong, Alex Jones, Tucker Carlson and Russell Brand.

6/14

Another good way to spot superspreaders is to check the "Community Notes leaderboard" website, where Jackson Hinkle holds 4th place, Cheong is 7th, and Elon Musk himself can be found at #39.

7/14

Naturally, the platform’s owner often comments on and shares content from these people, and even engages in conversations with them on Spaces. Apparently he prefers to be surrounded by conspiratorial "Yes Men" instead of doing tough interviews with people like Don Lemon.

8/14

Most superspreader accounts have very little interest in the truth, because the nature of social media rewards maximum engagement (likes, shares, comments). On X, engagement even affects your share of ad revenue, essentially allowing people to earn money through lies.

9/14

There are many examples of pro-Kremlin narratives being spread by these accounts. One of them is the lie that Zelenskyy "bought a mansion from King Charles". The story came from an AI-generated fake news blog and was spread by large accounts such as Liz Churchill’s.

10/14

Another fake story, about "US-funded Ukrainian bioweapons labs", even made it into the mainstream. It was started by QAnon follower Jacob Creech, a.k.a. @WarClandestine, who later bragged about making money from X’s ad revenue sharing program.

11/14

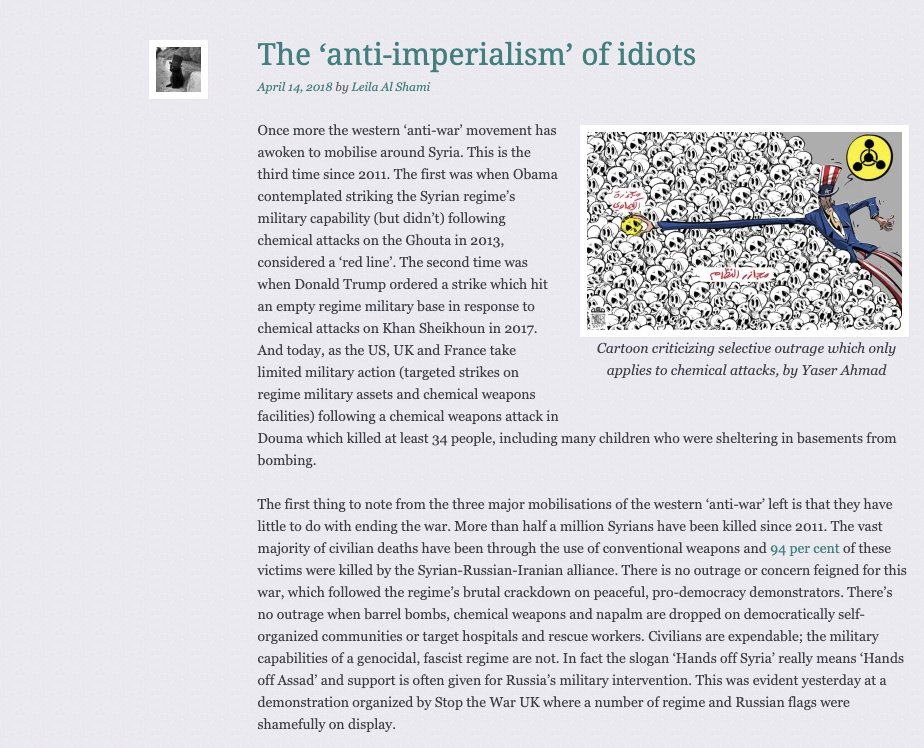

Most of the content promoted and made up by these large accounts draws inspiration from various conspiracy theories such as QAnon, PizzaGate or The Great Reset. They also often share photos out of context. For example, photos from Syria are presented as being from Gaza.

12/14

As I’ve stated many times before, there are no downsides to rage farming and spreading lies online, and since Elon took over, it has actually become a viable monetization strategy that can make you relatively rich.

13/14

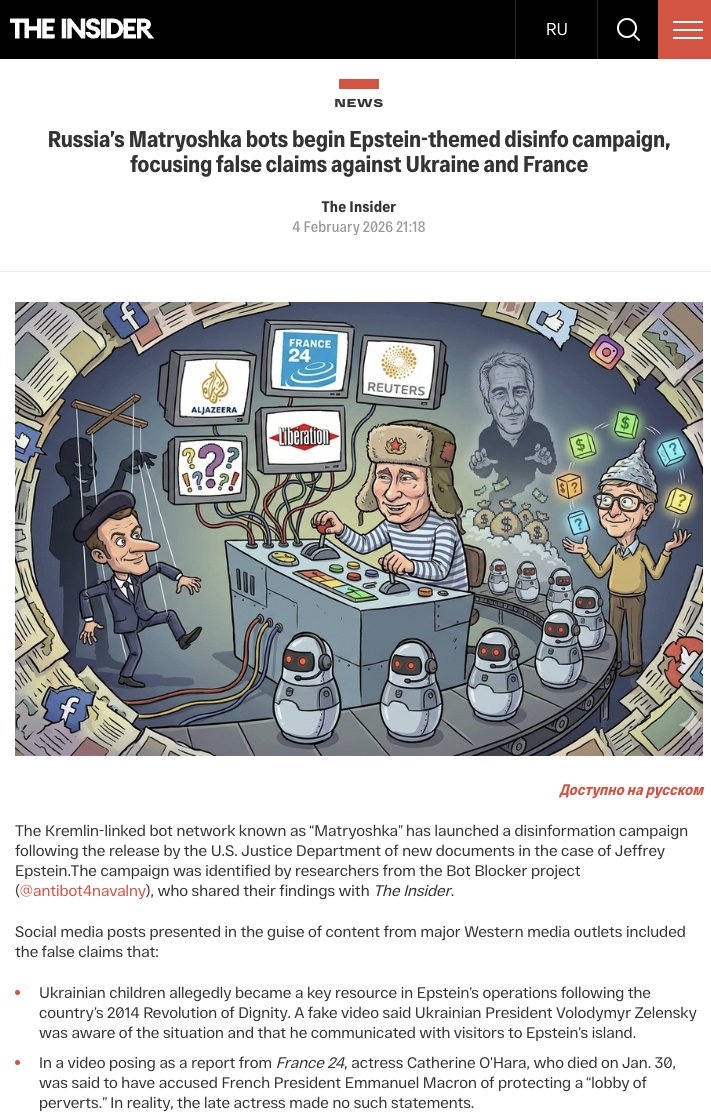

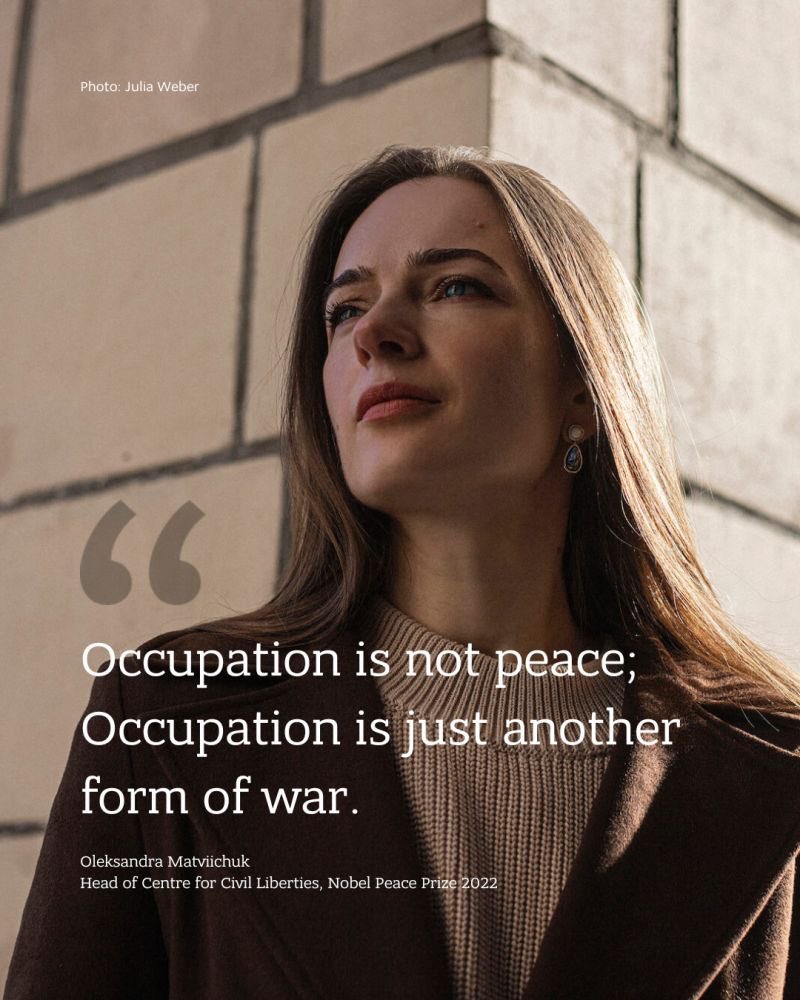

Hostile state actors have also figured out the potential of using superspreaders to amplify their false narratives. For example, Russia's embassy accounts often tag people like Jackson Hinkle in their posts, hoping they'd share the content to their large following.

14/14
