MONEY AFTER AI IS CRYPTO

What is money after generative AI and robotics? Essentially, crypto. Money itself becomes cryptocurrency, just as much of intelligence becomes electricity.

Here’s why:

1) First, cryptocurrency is what’s provably scarce in the age of AI abundance. That isn’t a bad thing. For example, you need scarce crypto assets to prove you’re a human when AI tools for faking humanity are abundant.

2) Second, money is a bridge across economically distinct actors. You don’t need to pay your hand to move, nor do you need to pay a robot you own to move. But you will still need money to rent a robot owned by another economic actor. So: send the coin to unlock that drone.

3) Basically, scarcity at a high level doesn’t go away, so money doesn’t go away. But many forms of scarcity may go away. Washing machines are stationary household robots and may be replaced by walking machines that are mobile household robots.

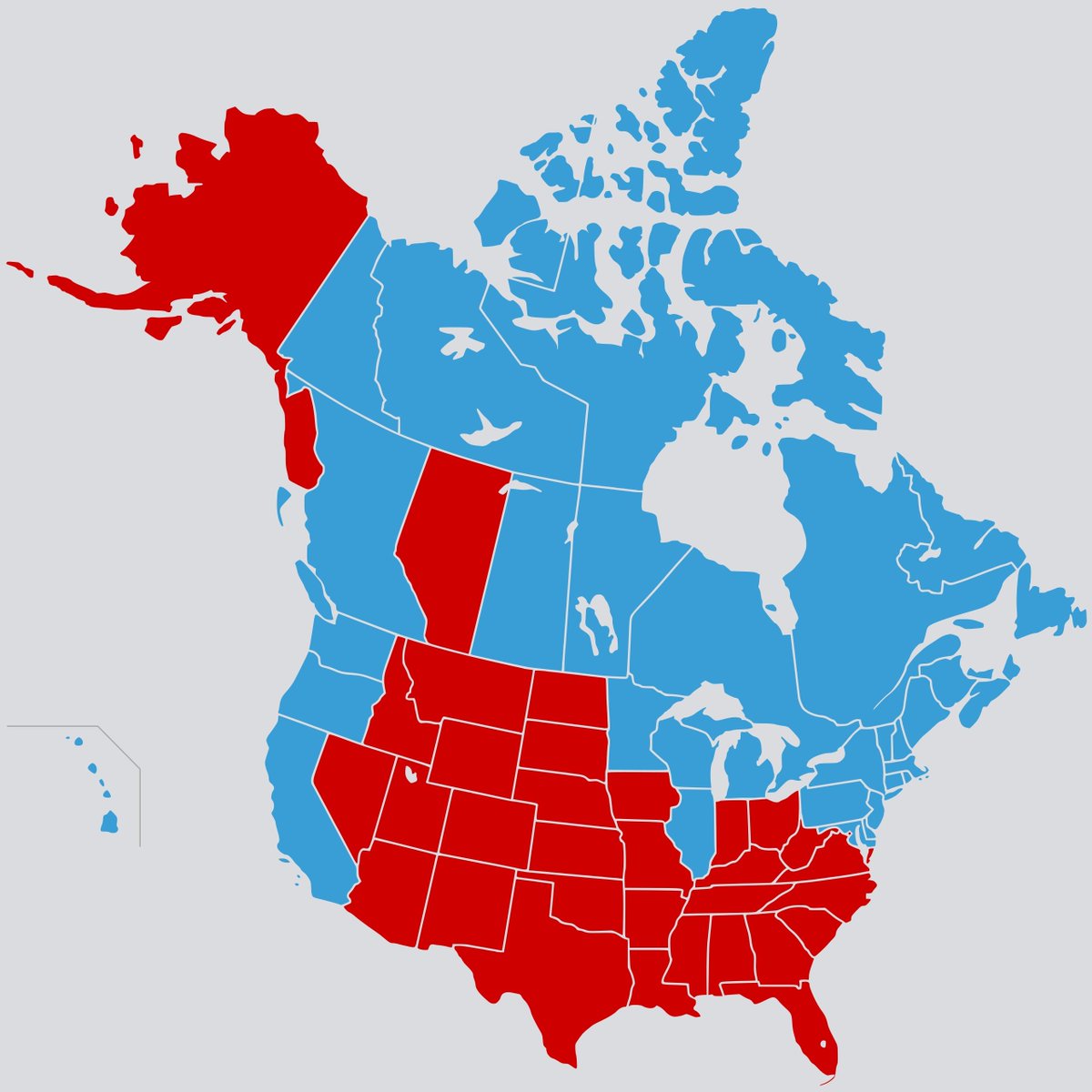

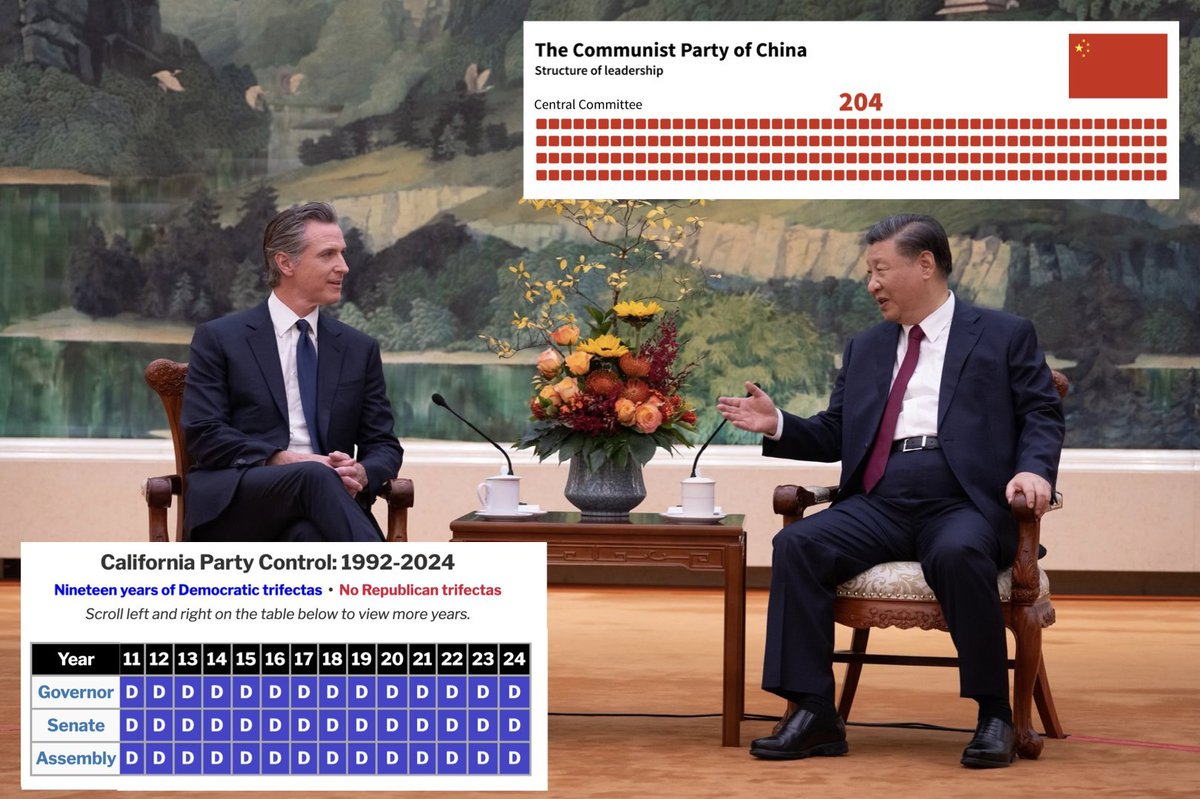

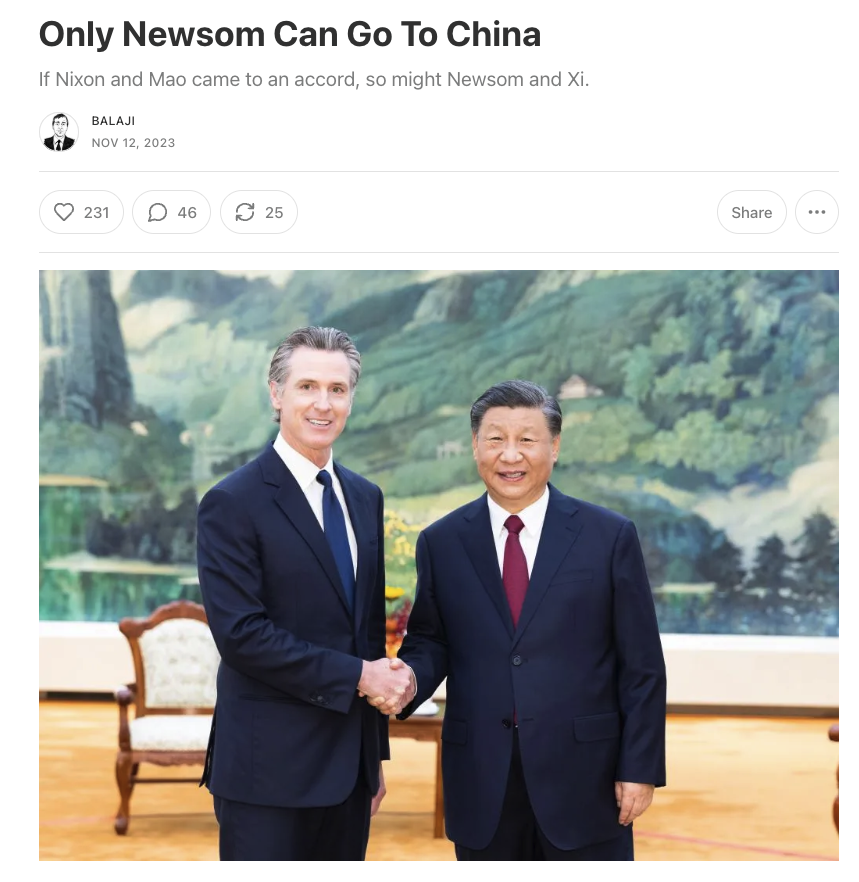

4) The supply chains to build the robots and the nuclear power plants to run the AI datacenters will remain scarce. And those are largely in China, and more generally in Asia. So money remains valuable there.

5) The most important form of scarcity in the AI age is the private keys that control the robots. Those too will be crypto, because web3 backends like Bitcoin and Ethereum have far higher levels of security than any web2 system.

In short: AI is digital abundance, but it doesn’t make everything abundant. Crypto is digital scarcity and complements AI’s abundance. So money after AI is crypto.
