Good morning. At some point this summer, perhaps quite soon, @AIatMeta will be releasing a LLaMA-3 model with 400B parameters. It will likely be the strongest open-source LLM ever released by a wide margin.

This is a thread about how to run it locally. 🧵

This is a thread about how to run it locally. 🧵

First up, the basics: You can quantize models to about ~6bits per parameter before performance degrades. We don't want performance to degrade, so 6 bits it is. This means the model will be (6/8) * 400B = 300GB.

For every token generated by a language model, each weight must be read from memory once. Immediately, this makes it clear that the weights must stay in memory. Even offloading them to an M.2 drive (read speed <10GB/sec) will mean waiting 300/10 = 30 seconds per token.

In fact, reading the weights once per token turns out to be the bottleneck for generating text from an LLM - to get good speed, you need surprisingly little FLOPs, and surprisingly huge amounts of memory bandwidth.

So, if we don't really need massive compute power, we have two choices for where to put the weights: CPU memory or GPU memory.

GPU memory has higher bandwidth than CPU memory, but it's more expensive. How much more expensive?

GPU memory has higher bandwidth than CPU memory, but it's more expensive. How much more expensive?

Let's work it out: The highest-memory consumer GPUs (3090/4090) are 24GB. We would need about 16 of them for this. This would cost >$30,000, and you wouldn't even be able to fit them in a single case anyway.

The highest-memory datacenter GPUs (A100/H100) are 80GB. We would need 4-5 of these. Although we can fit that in a single case, the cost at current prices will probably be >$50,000.

What about CPU RAM? Consumer RAM is quite cheap, but to fit 300+GB in a single motherboard, we're probably looking at a server board, which means we need RDIMM server memory. Right now, you can get 64GB of this for about $300-400, so the total for 384GB would be ~$2,100.

You'll notice this number is a lot lower than the ones above! But how much bandwidth do we actually get? Here's the tricky bit: It depends on how many memory "channels" your motherboard has. This is not the same as memory slots!

Most consumer motherboards are "dual-channel", they have 2 memory channels, even if they have 4 slots. A single stick of DDR5 RAM has a bandwidth of about 40GB/sec, so with 2 channels, this becomes 80GB/sec. 300GB/80GB = theoretical minimum of 4 seconds per token. Still slow!

Server motherboards, on the other hand, have a lot more. Intel Xeon has 8 channels per CPU, AMD EPYC has 12. Let's go with AMD - we want those extra channels.

1 EPYC CPU = 12 RAM channels = 480GB/sec

2 EPYC CPUs = 24 RAM channels = 960GB/sec!

Now we're getting somewhere! But what's the total system cost? Let's add it up:

2 EPYC CPUs = 24 RAM channels = 960GB/sec!

Now we're getting somewhere! But what's the total system cost? Let's add it up:

1 socket system:

Motherboard: ~$800

CPU: ~$1,100

Memory: ~$2,100

Case/PSU/SSD: ~$400

Total: $4,500

2 socket system:

Motherboard: ~$1,000

CPU: ~$2,200

Memory: ~$2,100

Case/PSU/SSD: ~$400

Total: $5,700

Motherboard: ~$800

CPU: ~$1,100

Memory: ~$2,100

Case/PSU/SSD: ~$400

Total: $4,500

2 socket system:

Motherboard: ~$1,000

CPU: ~$2,200

Memory: ~$2,100

Case/PSU/SSD: ~$400

Total: $5,700

These aren't cheap systems, but you're serving an enormous (and enormously capable) open LLM at 1-2tok/sec, similar speeds to a fast human typist at a fraction of the cost of doing it on GPU!

If you want to serve a 400B LLM locally, DDR5 servers are the way to go.

If you want to serve a 400B LLM locally, DDR5 servers are the way to go.

Sample 2-socket build:

Motherboard: Gigabyte MZ73-LM1

CPU: 2x AMD EPYC 9124

RAM: 24x 4800mhz+ 16GB DDR5 RDIMM

PSU: Corsair HX1000i (You don't need all that power, you just need lots of CPU power cables for 2 sockets!)

Case: PHANTEKS Enthoo Pro 2 Server

Motherboard: Gigabyte MZ73-LM1

CPU: 2x AMD EPYC 9124

RAM: 24x 4800mhz+ 16GB DDR5 RDIMM

PSU: Corsair HX1000i (You don't need all that power, you just need lots of CPU power cables for 2 sockets!)

Case: PHANTEKS Enthoo Pro 2 Server

You'll also need heatsinks - 4U/tower heatsinks for Socket SP5 EPYC processors are hard to find, but some lunatic is making them in China and selling on Ebay/Aliexpress. Buy two.

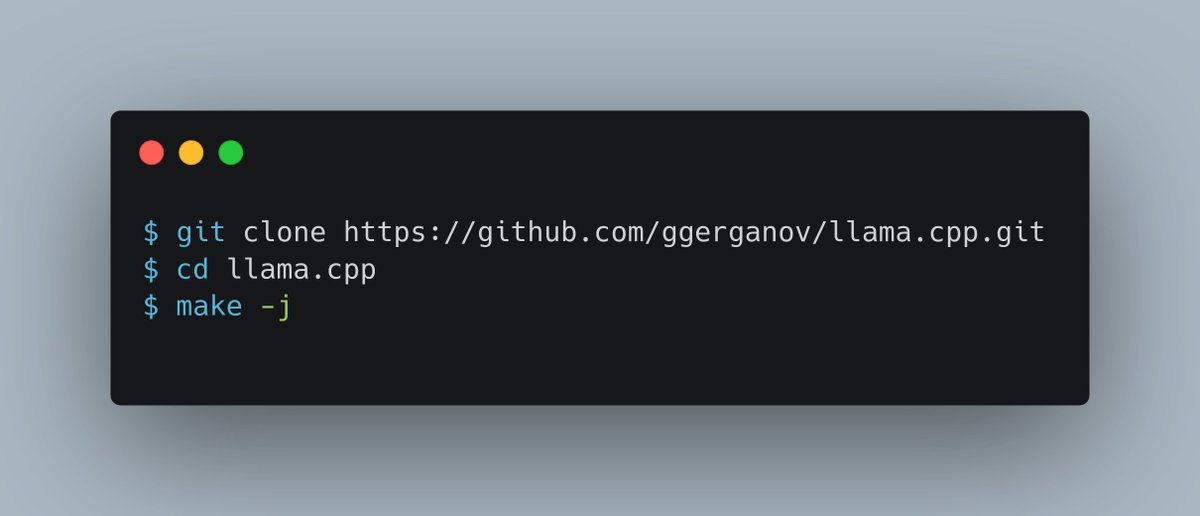

Once you actually have the hardware, it's super-easy: Just run llama.cpp or llama-cpp-python and pass in your Q6 GGUF. One trick, though: On a two-socket motherboard, you need to interleave the weights across both processors' RAM. Do this:

numactl --interleave=0-1 [your_script]

numactl --interleave=0-1 [your_script]

And that's it! A single no-GPU server tower pulling maybe 400W in the corner of your room will be running an LLM far more powerful than GPT-4.

Even a year ago, I would have dismissed this as completely impossible, and yet the future came at us fast. Good luck!

Even a year ago, I would have dismissed this as completely impossible, and yet the future came at us fast. Good luck!

@byjlw_ @AIatMeta It is possible to permanently put some layers on the GPU and others on the CPU - this is natively supported in llama.cpp. However, you can't really move layers around during the computation, it's just going to be much slower than leaving them where they are.

• • •

Missing some Tweet in this thread? You can try to

force a refresh