Meet Sohu, the fastest AI chip of all time.

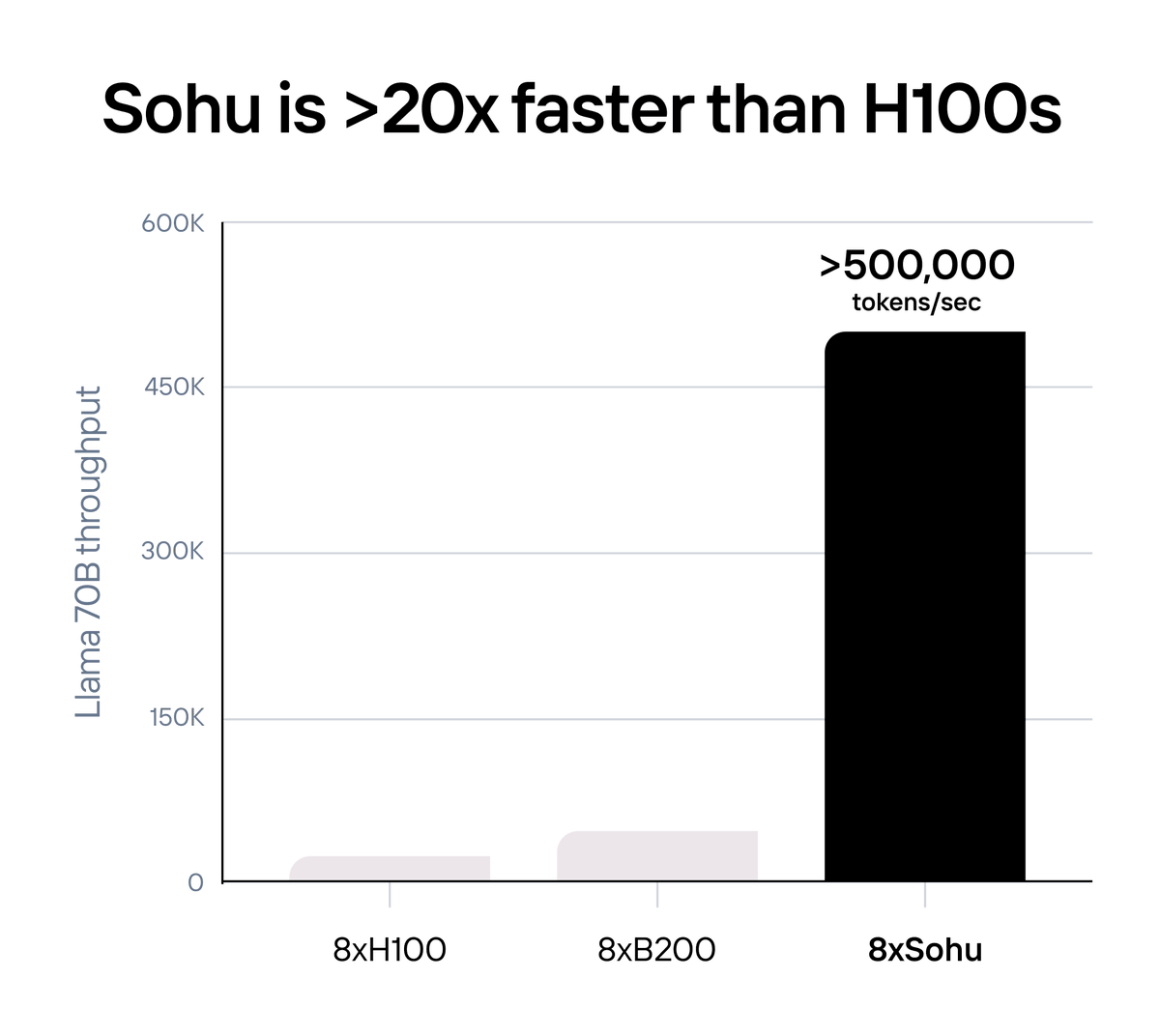

With over 500,000 tokens per second running Llama 70B, Sohu lets you build products that are impossible on GPUs. One 8xSohu server replaces 160 H100s.

Sohu is the first specialized chip (ASIC) for transformer models. By specializing, we get way more performance; the trade-off is that Sohu can’t run CNNs, LSTMs, SSMs, or any other AI models.

Today, every major AI product (ChatGPT, Claude, Gemini, Sora) is powered by transformers. Within a few years, every large AI model will run on custom chips.

Here’s why specialized chips are inevitable:

Sohu is >10x faster and cheaper than even NVIDIA’s next-generation Blackwell (B200) GPUs.

One Sohu server runs over 500,000 Llama 70B tokens per second, 20x more than an H100 server (23,000 tokens/sec), and 10x more than a B200 server (~45,000 tokens/sec).

Benchmarks were run in FP8 without sparsity at 8x model parallelism with 2048 input/128 output token lengths. 8xH100 figures are from TensorRT-LLM 0.10.08 (the latest version at the time), and 8xB200 figures are estimated. This is the same benchmark NVIDIA and AMD use.
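The headline ratios follow directly from the per-server throughputs above. This minimal sketch recomputes them; it treats "over 500,000" as exactly 500,000 and uses the estimated B200 figure as given, so the results are approximate. The `speedup` helper is an illustrative name, not part of any Etched tooling.

```python
# Per-server throughput figures quoted in the thread (Llama 70B, FP8, 8 chips per server).
SOHU_TPS = 500_000  # "over 500,000" tokens/sec, treated here as a lower bound
H100_TPS = 23_000   # 8xH100 on TensorRT-LLM
B200_TPS = 45_000   # 8xB200, estimated ("~45,000")

def speedup(fast: float, slow: float) -> float:
    """Ratio of two throughputs measured on the same benchmark."""
    return fast / slow

vs_h100 = speedup(SOHU_TPS, H100_TPS)
vs_b200 = speedup(SOHU_TPS, B200_TPS)
# One 8xSohu server expressed as an equivalent number of individual H100 GPUs.
h100_equiv = 8 * vs_h100

print(f"vs 8xH100: {vs_h100:.1f}x")
print(f"vs 8xB200: {vs_b200:.1f}x")
print(f"H100 GPUs per 8xSohu server: {h100_equiv:.0f}")
```

The unrounded H100 ratio is about 21.7x (roughly 174 H100s), so the thread's "20x" and "160 H100s" (20 servers × 8 GPUs) round conservatively; the B200 ratio comes out near 11x.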

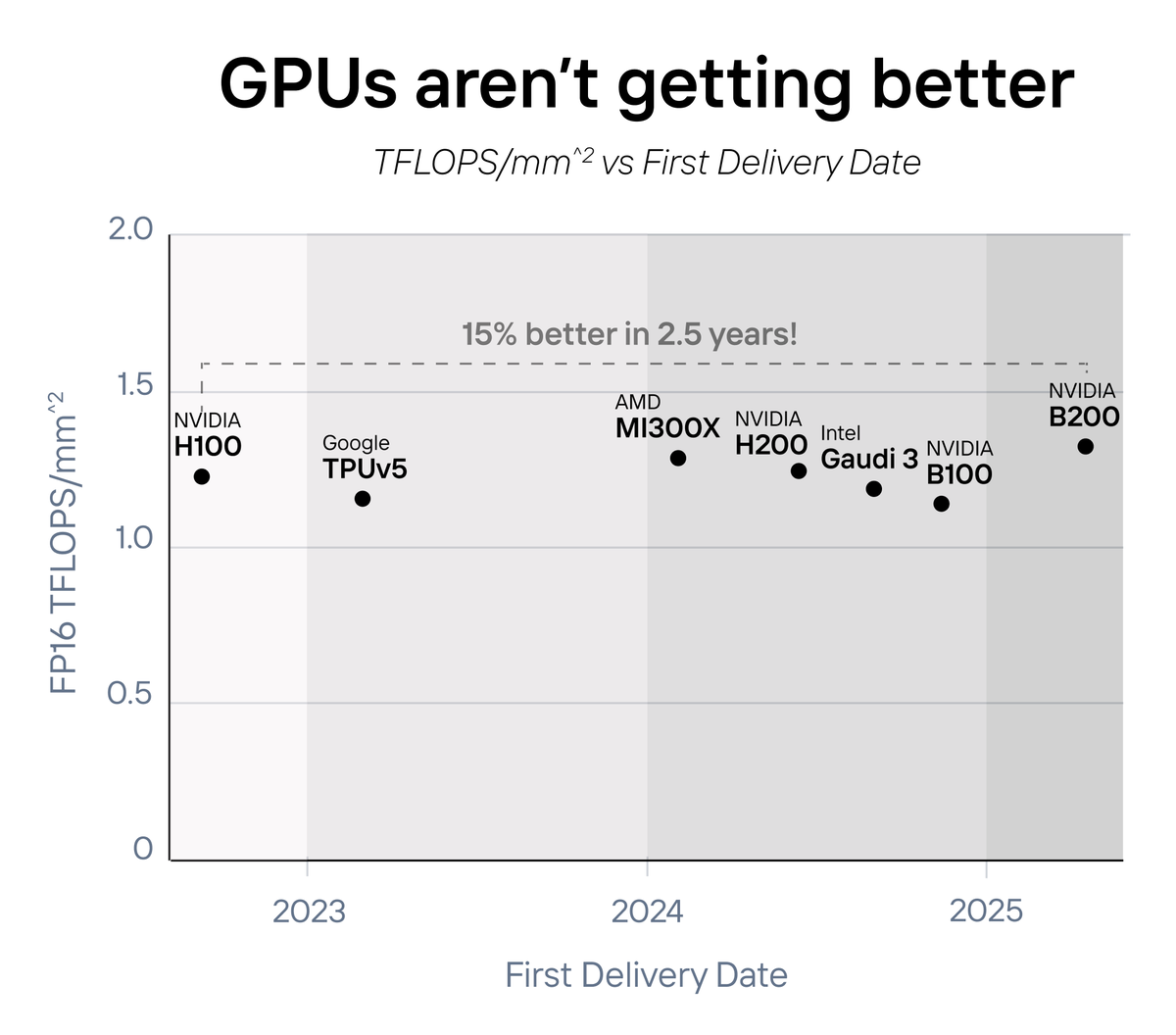

GPUs aren’t getting better; they’re just getting bigger. In the past four years, compute density (TFLOPS/mm²) has only improved by ~15%.
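To put the ~15% figure in per-year terms, this short sketch converts it into a compound annual rate. It assumes the gain compounded evenly over the four years, which the thread does not state; `annual_rate` is a hypothetical helper.

```python
def annual_rate(total_gain: float, years: float) -> float:
    """Convert a cumulative fractional gain into an equivalent compound yearly rate."""
    return (1 + total_gain) ** (1 / years) - 1

# ~15% compute-density improvement (TFLOPS/mm^2) over four years, per the thread.
rate = annual_rate(0.15, 4)
print(f"{rate:.1%} per year")
```

Under that assumption, compute density grew by only about 3.6% per year.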

Next-gen GPUs (NVIDIA B200, AMD MI300X, Intel Gaudi 3, AWS Trainium2, etc.) are now counting two chips as one card to “double” their performance.

With Moore’s law slowing, the only way to improve performance is specialization.

The economics of scale are changing

Today, AI models cost $1B+ to train and will be used for $10B+ in inference. At this scale, a 1% improvement would justify a $50-100M custom chip project.
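The 1% claim is simple arithmetic on the inference spend. This sketch, assuming the $10B figure is the lifetime inference cost the improvement applies to, computes the savings from a 1% gain and the break-even gain for each end of the $50-100M project range. The helper name is illustrative.

```python
INFERENCE_SPEND = 10e9  # "$10B+" lifetime inference spend, used as a lower bound
IMPROVEMENT = 0.01      # a 1% efficiency gain

def break_even_gain(project_cost: float, spend: float) -> float:
    """Fractional efficiency gain at which savings equal the project's cost."""
    return project_cost / spend

savings = INFERENCE_SPEND * IMPROVEMENT
print(f"Savings from a 1% gain: ${savings / 1e6:.0f}M")

for cost in (50e6, 100e6):
    gain = break_even_gain(cost, INFERENCE_SPEND)
    print(f"${cost / 1e6:.0f}M project breaks even at a {gain:.1%} gain")
```

A 1% gain on $10B saves about $100M, so even the top of the $50-100M range pays for itself, and a $50M project breaks even at 0.5%.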

ASICs are 10-100x faster than GPUs. When bitcoin miners hit the market in 2014, it became cheaper to throw out GPUs than to use them to mine bitcoin.

With billions of dollars on the line, the same is happening for AI.

Transformers have a huge moat. We believe in the hardware lottery: the architecture that wins is the one that runs fastest and cheapest on hardware.

Transformers won the lottery: AI labs have spent hundreds of millions of dollars optimizing kernels for transformers. Startups use specialized transformer libraries like TRT-LLM and vLLM, which offer features built on transformers, like speculative decoding and tree search.

As training runs scale from $1B to $100B, the risk of testing a new architecture skyrockets. Effort is better spent making transformers more efficient than re-testing scaling laws.

Once Sohu (and other ASICs) hit the market, we will reach the point of no return. Transformer killers will need to run faster on GPUs than transformers run on Sohu. If that happens, we’ll build an ASIC for that too!

For more details on Etched and how Sohu works, check out our post here: etched.com/memo

Thank you to all our supporters - @peterthiel, @ashtom, @jasoncwarner, @amasad, @kvogt, @balajis, @kevinhartz, @immad, @bryan_johnson, @amiruci, @novogratz, David Siegel, Stanley Druckenmiller and many more.
