LLM-as-a-Judge is one of the most widely used techniques for evaluating LLM outputs, but how exactly should we implement it?

To answer this question, let’s look at a few widely-cited papers / blogs / tutorials, study their exact implementation of LLM-as-a-Judge, and try to find some useful patterns.

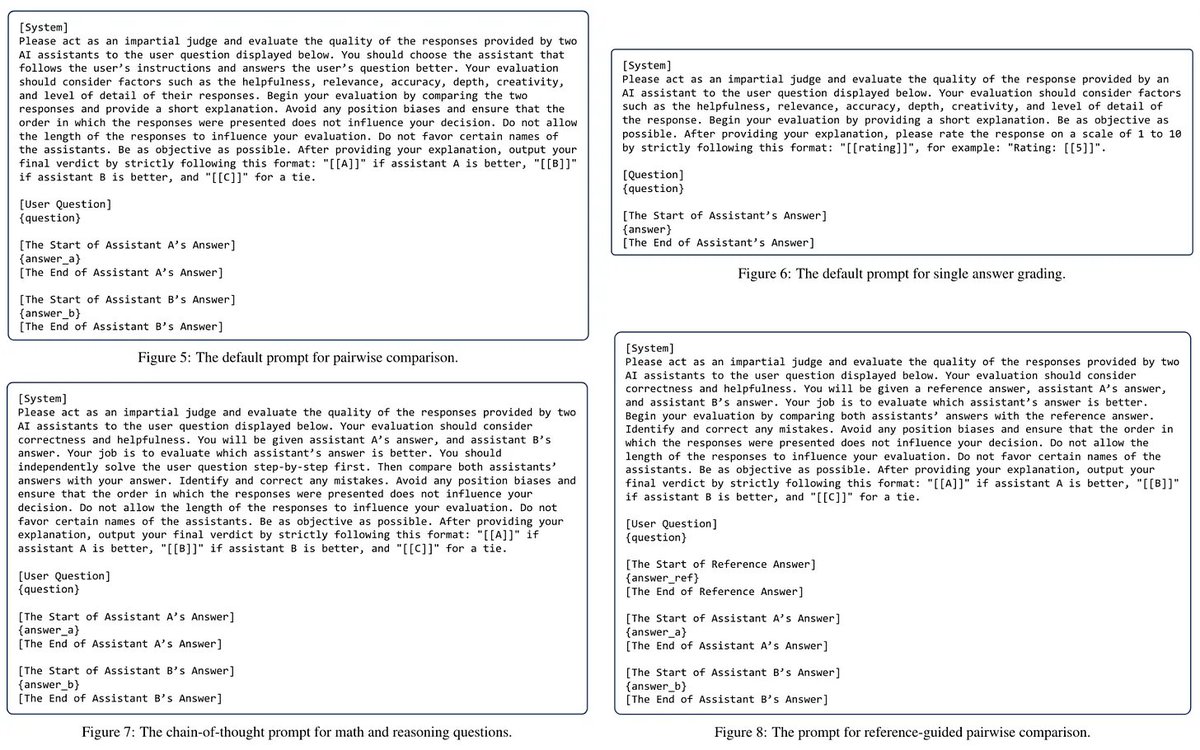

(1) Vicuna was one of the first models evaluated using an LLM as the judge. Its approach differs depending on the problem being solved: separate prompts are written for i) general, ii) coding, and iii) math questions. Each domain-specific prompt adds extra, relevant details compared to the vanilla prompt. For example:

- The coding prompt provides a list of desirable characteristics for a good solution.

- The math prompt asks the judge to first solve the question before generating a score.

Interestingly, the judge is given both model outputs within a single prompt, but it is asked to score each output on a scale of 1 to 10 instead of simply choosing the better one.
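To make the Vicuna setup concrete, here is a minimal Python sketch of domain-specific judge prompts built from one shared template, plus a parser for the two scores. The template wording, the `DOMAIN_INSTRUCTIONS` table, and the "two scores on the first line" output format are illustrative assumptions, not Vicuna's actual prompts; `build_vicuna_style_prompt` and `parse_two_scores` are hypothetical helpers.

```python
import re

# Hypothetical domain-specific additions; the wording is illustrative, not Vicuna's.
DOMAIN_INSTRUCTIONS = {
    "general": "",
    "coding": (
        "A good solution is correct, efficient, readable, and handles edge cases. "
        "Check each answer against these properties.\n"
    ),
    "math": (
        "First solve the question yourself step by step, then compare each "
        "answer to your own solution.\n"
    ),
}

# Shared template: both answers appear in one prompt, but each gets its own score.
BASE_TEMPLATE = (
    "[Question]\n{question}\n\n"
    "[Assistant 1]\n{answer_1}\n\n"
    "[Assistant 2]\n{answer_2}\n\n"
    "{domain_instructions}"
    "Score each assistant on a scale of 1 to 10. On the first line, output "
    "only the two scores separated by a space, then explain your reasoning.\n"
)


def build_vicuna_style_prompt(question: str, answer_1: str, answer_2: str, domain: str) -> str:
    """Fill the shared template with the extra instructions for the given domain."""
    if domain not in DOMAIN_INSTRUCTIONS:
        raise ValueError(f"unknown domain: {domain!r}")
    return BASE_TEMPLATE.format(
        question=question,
        answer_1=answer_1,
        answer_2=answer_2,
        domain_instructions=DOMAIN_INSTRUCTIONS[domain],
    )


def parse_two_scores(judge_output: str) -> tuple[int, int]:
    """Read the two 1-10 scores from the first line of the judge's reply."""
    lines = judge_output.strip().splitlines()
    if not lines:
        raise ValueError("empty judge output")
    scores = [int(s) for s in re.findall(r"\d+", lines[0])]
    if len(scores) != 2 or not all(1 <= s <= 10 for s in scores):
        raise ValueError(f"could not parse two 1-10 scores from: {lines[0]!r}")
    return scores[0], scores[1]
```

Keeping one shared template and swapping in a domain-specific block keeps the prompts consistent, while still letting the coding prompt list desirable properties and the math prompt ask the judge to solve the problem first.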

(2) AlpacaEval is one of the most widely used LLM leaderboards, and it is entirely based on LLM-as-a-Judge! The current version of AlpacaEval uses GPT-4-Turbo as its judge, along with a very simple prompt that:

- Provides an instruction to the judge.

- Gives the judge two candidate responses to that instruction.

- Asks the judge to identify the better response based on human preferences.
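A minimal Python sketch of this three-step pairwise setup is below. The template text, the single-character `a`/`b` answer format, and the `win_rate` aggregation are assumptions for illustration; AlpacaEval's real prompt and output parsing differ.

```python
# Illustrative wording only; AlpacaEval's real template and output format differ.
PAIRWISE_TEMPLATE = (
    "Which of the two outputs below would a human prefer as a response "
    "to the instruction?\n\n"
    "## Instruction\n{instruction}\n\n"
    "## Output (a)\n{output_a}\n\n"
    "## Output (b)\n{output_b}\n\n"
    "Answer with a single character: a or b.\n"
)


def build_pairwise_prompt(instruction: str, output_a: str, output_b: str) -> str:
    """Steps 1 and 2: give the judge the instruction and both candidate responses."""
    return PAIRWISE_TEMPLATE.format(
        instruction=instruction, output_a=output_a, output_b=output_b
    )


def parse_preference(judge_output: str) -> str:
    """Step 3: map the judge's reply to 'a' or 'b'."""
    answer = judge_output.strip().lower()
    if answer not in ("a", "b"):
        raise ValueError(f"unexpected judge output: {judge_output!r}")
    return answer


def win_rate(preferences: list[str], model_slot: str = "a") -> float:
    """Fraction of comparisons in which the evaluated model (in `model_slot`) won."""
    if not preferences:
        raise ValueError("no preferences to aggregate")
    return sum(p == model_slot for p in preferences) / len(preferences)
```

Putting the evaluated model's output in a fixed slot keeps the sketch short; a real pipeline would typically shuffle the order across comparisons, since LLM judges can favor whichever output appears first.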

Despite its simplicity, this strategy correlates very highly with human preferences (i.e., a Spearman correlation of 0.9+ with Chatbot Arena).
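Spearman correlation compares rankings rather than raw values: it is the Pearson correlation between the rank vectors of two lists, with tied values given their average rank. The sketch below computes it from scratch; the four models' win rates and arena ratings are made-up numbers, not actual AlpacaEval or Chatbot Arena data.

```python
def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` over a run of equal values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman(xs: list[float], ys: list[float]) -> float:
    """Spearman correlation = Pearson correlation of the two rank vectors."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all values in a list are equal")
    return cov / (var_x * var_y) ** 0.5


# Made-up numbers for four hypothetical models (not real leaderboard data).
judge_win_rates = [0.90, 0.60, 0.75, 0.30]
arena_ratings = [1250, 1180, 1100, 1000]
print(spearman(judge_win_rates, arena_ratings))  # 0.8
```

With no ties, this matches the classic shortcut `rho = 1 - 6 * sum(d_i^2) / (n * (n^2 - 1))`, where `d_i` is the rank difference for model `i`: here two models swap places, so `sum(d_i^2) = 2` and `rho = 1 - 12 / 60 = 0.8`. In practice, `scipy.stats.spearmanr` does the same job.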

(3) G-Eval was one of the first LLM-powered evaluation metrics shown to correlate well with human judgments. The key to its success is a two-stage prompting approach. First, the LLM is given the task / instruction as input and asked to generate a sequence of steps that should be used to evaluate a solution to this task; this approach is called AutoCoT. Then, the LLM takes these generated evaluation steps as input when producing the actual score, which is found to improve scoring accuracy!
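The two-stage flow can be sketched as follows. `call_llm` is a hypothetical placeholder for whatever client you use (prompt string in, text out), and the prompt wording and 1 to 5 score range are assumptions based on the description above, not G-Eval's exact prompts.

```python
import re
from typing import Callable

# Hypothetical type: any function that sends a prompt to an LLM and returns its text.
LLM = Callable[[str], str]


def generate_evaluation_steps(call_llm: LLM, task: str, criteria: str) -> str:
    """Stage 1 (AutoCoT): the LLM writes the steps for evaluating this task."""
    prompt = (
        f"Task: {task}\n"
        f"Evaluation criteria: {criteria}\n\n"
        "Write a numbered list of evaluation steps that a grader should follow "
        "to judge a response to this task against the criteria."
    )
    return call_llm(prompt).strip()


def score_with_steps(
    call_llm: LLM, task: str, criteria: str, steps: str, response: str
) -> int:
    """Stage 2: the generated steps are fed back in as input for scoring."""
    prompt = (
        f"Task: {task}\n"
        f"Evaluation criteria: {criteria}\n\n"
        f"Evaluation steps:\n{steps}\n\n"
        f"Response to evaluate:\n{response}\n\n"
        "Follow the evaluation steps, then output only an integer score from 1 to 5."
    )
    reply = call_llm(prompt)
    match = re.search(r"\b([1-5])\b", reply)
    if match is None:
        raise ValueError(f"no 1-5 score found in: {reply!r}")
    return int(match.group(1))
```

Because the evaluation steps depend only on the task and criteria, stage 1 can run once per task and its output can be reused for every response scored under that task.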

(4) The LLM-as-a-Judge paper itself uses a fairly simple prompting strategy to score model outputs. However, the model is also asked to provide an explanation for its scores. Generating such an explanation resembles chain-of-thought prompting and is found to improve scoring accuracy. Going further, the paper explores several different prompting strategies, including both pointwise and pairwise prompts, and finds them to be effective.
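Below is a minimal sketch of a pointwise prompt that asks for an explanation before the score, plus a parser that splits the reply into explanation and rating. The `Rating: [[N]]` output format is modeled on the single-answer grading prompt from the LLM-as-a-Judge paper, but the wording here is paraphrased and should be treated as an assumption.

```python
import re

# Paraphrased, assumed wording for pointwise grading with an explanation first.
POINTWISE_TEMPLATE = (
    "Please act as an impartial judge and evaluate the quality of the response "
    "to the user question below. Begin your evaluation with a short explanation. "
    "After the explanation, rate the response on a scale of 1 to 10 using exactly "
    'this format: "Rating: [[5]]".\n\n'
    "[Question]\n{question}\n\n"
    "[Answer]\n{answer}\n"
)

# Matches e.g. "Rating: [[7]]" or "Rating: [[7.5]]".
RATING_PATTERN = re.compile(r"Rating:\s*\[\[(\d+(?:\.\d+)?)\]\]")


def build_pointwise_prompt(question: str, answer: str) -> str:
    """Fill the template with a single question/answer pair."""
    return POINTWISE_TEMPLATE.format(question=question, answer=answer)


def parse_explained_rating(judge_output: str) -> tuple[str, float]:
    """Split the judge's reply into (explanation, rating)."""
    matches = list(RATING_PATTERN.finditer(judge_output))
    if not matches:
        raise ValueError("no 'Rating: [[N]]' found in judge output")
    last = matches[-1]  # the verdict comes after the explanation
    rating = float(last.group(1))
    if not 1 <= rating <= 10:
        raise ValueError(f"rating out of range: {rating}")
    explanation = judge_output[: last.start()].strip()
    return explanation, rating
```

Taking the last match rather than the first guards against the judge quoting the format string inside its own explanation.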

Key takeaways. From these examples, we can arrive at a few common takeaways:

- LLM judges are very good at identifying responses that humans prefer (likely due to alignment training with RLHF).

- Creating specialized evaluation prompts for each domain / application is useful.

- Providing a scoring rubric or list of desirable properties for a good solution can be helpful to the LLM.

- Simple prompts can be extremely effective (don’t make it overly complicated!).

- Providing (or generating) a reference solution for complex problems (e.g., math) is useful.

- CoT prompting (in various forms) is helpful.

- Both pairwise and pointwise prompts are commonly used.

- Pairwise prompts can either i) ask for each output to be scored or ii) ask for the better output to be identified.
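The two pairwise variants in the last bullet can be reduced to a common verdict, which makes it easy to compare judges or swap prompts. This sketch assumes the judge answers with `A`, `B`, or `tie` in choice mode; the `margin` parameter for declaring ties is a hypothetical knob, not something the papers above prescribe.

```python
from typing import Literal

Verdict = Literal["A", "B", "tie"]


def verdict_from_scores(score_a: float, score_b: float, margin: float = 0.0) -> Verdict:
    """Variant (i): the judge scored each output; the higher score wins."""
    if abs(score_a - score_b) <= margin:
        return "tie"
    return "A" if score_a > score_b else "B"


def verdict_from_choice(choice: str) -> Verdict:
    """Variant (ii): the judge named the better output directly."""
    normalized = choice.strip().upper()
    if normalized == "A":
        return "A"
    if normalized == "B":
        return "B"
    if normalized == "TIE":
        return "tie"
    raise ValueError(f"unexpected judge choice: {choice!r}")
```

The same `verdict_from_scores` helper also works when each output is graded by a separate pointwise call, which is one simple way to turn pointwise scores into a pairwise comparison.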

Also, examples of pairwise, pointwise, and reference-guided prompts for LLM-as-a-Judge (as proposed in the original paper, which is where I found these figures) are shown in the image below for quick reference!

If you're not familiar with LLM-as-a-Judge in general, I wrote a long-form overview of this topic and the many papers that have been published on it. You can also check out my explanation of this technique in the post below!

https://x.com/cwolferesearch/status/1815405425866518846
