🚨New WP: Can LLMs predict results of social science experiments?🚨

Prior work uses LLMs to simulate survey responses, but can they predict results of social science experiments?

Across 70 studies, we find striking alignment (r = .85) between simulated and observed effects 🧵👇

To evaluate the predictive accuracy of LLMs for social science experiments, we used #GPT4 to predict 476 effects from 70 well-powered experiments, comprising:

➡️50 survey experiments conducted through NSF-funded TESS program

➡️20 additional replication studies (Coppock et al. 2018)

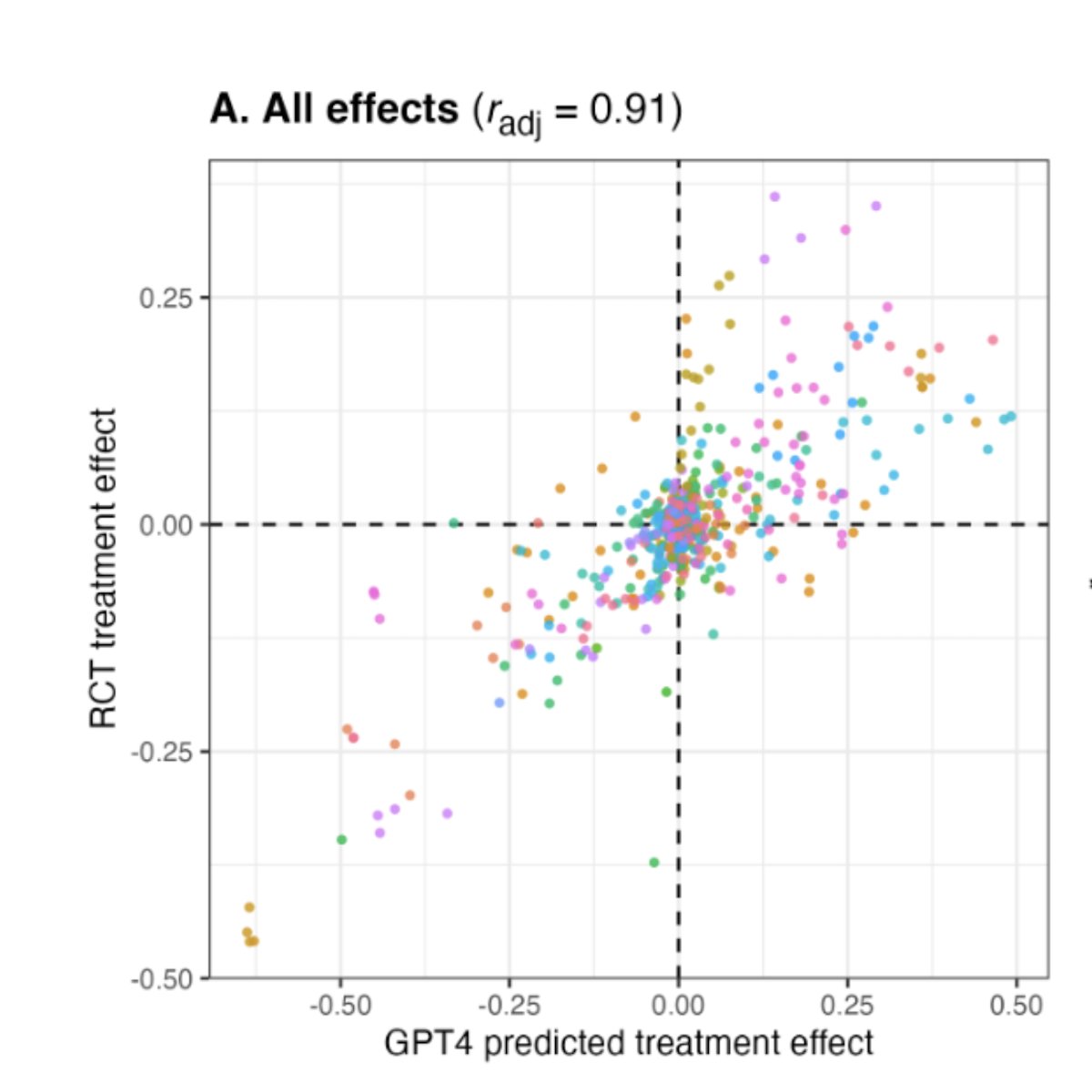

We prompted the model with (a) demographic profiles drawn from a representative dataset of Americans, and (b) experimental stimuli. The effects estimated by pooling these responses were strongly correlated with the actual experimental effects (r = .85; adj. r = .91)!

We also find that predictive accuracy improved across generations of LLMs, with GPT4's predictions surpassing those elicited from an online sample (N = 2,659) of Americans.

Paper 👉 treatmenteffect.app/paper.pdf

But what if LLMs are simply retrieving & reproducing known experimental results from training data?

We find evidence against this: analyzing only studies *unpublished* at the time of GPT4's training data cut-off, predictive accuracy remains high (r = .90, adj. r = .94).

Important prior work finds biases in LLM responses that stem from inequalities in training data. Do these biases impair accurate prediction of experimental results?

To assess, we compare predictive accuracy for:

➡️women & men

➡️Black & white participants

➡️Democrats & Republicans

Despite known training data inequalities, LLM-derived predictive accuracy was comparable across subgroups.

However, there was little heterogeneity in experimental effects we studied, so more research is needed to assess if/how LLM predictions of experimental results are biased.

We also evaluated predictive accuracy for “megastudies,” studies comparing the impact of a large number of interventions. Across nine survey and field megastudies, LLM-derived predictions were modestly accurate.

(Notably, this accuracy matched or surpassed that of expert forecasters.)

Finally, we find LLMs can accurately predict effects on socially harmful outcomes, such as the impact of antivax FB posts on vax intentions (@_JenAllen et al., 2024). This capacity may have positive uses, such as content moderation, though it also highlights risks of misuse.

Overall, our results show that LLM-derived predictions for experiments with human participants are highly accurate, and generally more accurate than predictions from samples of lay and expert humans.

Paper👉 treatmenteffect.app/paper.pdf

This capacity has several applications for science and practice – e.g., running low-cost pilots to identify promising interventions, or simulating experiments that may be harmful to participants – but also limitations and risks, including concerns about bias, overuse, & misuse.

To explore further, try this demo, which uses LLM-simulated participants to generate predicted experimental effects!

👇

treatmenteffect.app

*Major* kudos to @lukebeehewitt and @AshuAshok (who co-led the research), and to @ghezae_isaias.

And thanks to @pascl_stanford and @StanfordPACS for generously supporting this project.

H/t also to some of the many scholars whose work we drew on:

@JEichstaedt @danicajdillion @kurtjgray @chris_bail @lpargyle @johnjhorton @mcxfrank @joon_s_pk @msbernst @percyliang @kerstingAIML @davidwingate @lltjuatja @gneubig @nikbpetrov @SchoeneggerPhil @molly_crockett

@MortezDehghani @baixx062 @JamesBisbee @joshclinton @MohammadAtari90 @JoHenrich @lmesseri @SuhaibAbdurahm1
