Did you ever take a photo & wish you'd zoomed in more or framed better? When this happens, we just crop.

Now there's a better way: Zoom Enhance, a new feature my team just shipped on Pixel. Available in Google Photos under Tools, it enhances both zoomed & un-zoomed images.

1/n

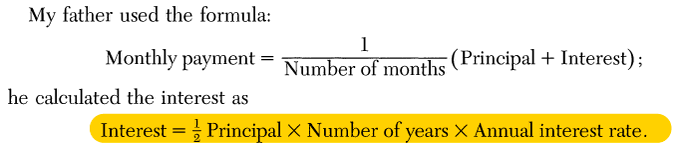

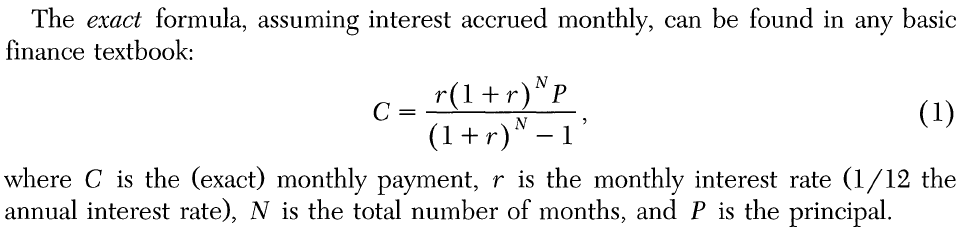

Zoom Enhance is our first image-to-image diffusion model designed & optimized to run fully on-device. It allows you to crop or frame the shot you wanted, and enhance it, after capture. The input can be from any device, Pixel or not, old or new. Below are some examples & use cases.

2/n

Let's say you've zoomed to the max on your Pixel 8/9 Pro and got your shot, but you wish you could get a little closer. Now you can zoom in more, and enhance.

3/n

A bridge too far to see the details? A simple crop may not give the quality you want. Zoom Enhance can come in handy.

4/n

If you've been to the Louvre you know how hard it is to get close to the most famous painting of all time.

Next time you could shoot with the best optical quality you have (5x in this case), then zoom in after the fact.

5/n

Like most people, I have lots of nice shots that could have been even nicer if I'd framed them better. Rather than just cropping, you can now frame the shot you wanted, after the fact, and without losing out on quality.

7/n

Is the subject small and the field of view large? Zoom Enhance can help to isolate and enhance the region of interest.

8/n

Sometimes there are one or more better shots hiding within the just-average shot you took. Compose your best shot and enhance.

9/n

There are a lot of gems hidden in older, lower-quality photos that you can now isolate and enhance. Like this one from some 20 years ago.

10/n

Pictures you get on social media or on the web (or even your own older photos) may not always be high quality/resolution. If they're small enough (~1MP), you can enhance them with or without cropping.

11/12

So Zoom Enhance gives you the freedom to capture the details within your photos, allowing you to highlight specific elements and focus on what matters to you.

It's a first step toward powerful editing tools for consumer images that harness on-device diffusion models.

12/12

Bonus use case worth mentioning:

Using your favorite text-to-image generator you typically get a result at ~1 MP resolution (left image is 1280 × 720). If you want higher resolution, you can directly upscale on-device (right, 2048 × 1152) with Zoom Enhance.

13/12
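
For anyone curious about the numbers in that example, here is a quick back-of-the-envelope check of the two sizes quoted above. It only does arithmetic on 1280 × 720 and 2048 × 1152; the helper name `megapixels` is made up for illustration, and the resulting scale factor describes this one example, not necessarily how Zoom Enhance picks its output size in general.

```python
def megapixels(width: int, height: int) -> float:
    """Pixel count in megapixels (1 MP = 1,000,000 pixels)."""
    return width * height / 1_000_000


# Sizes quoted in the thread; everything else here is derived from them.
src = (1280, 720)    # text-to-image output (left image)
dst = (2048, 1152)   # upscaled result (right image)

src_mp = megapixels(*src)
dst_mp = megapixels(*dst)
linear_scale = dst[0] / src[0]   # per-side scale factor
pixel_ratio = dst_mp / src_mp    # how many times more pixels

print(f"source: {src_mp:.2f} MP")               # source: 0.92 MP
print(f"upscaled: {dst_mp:.2f} MP")             # upscaled: 2.36 MP
print(f"per-side scale: {linear_scale:.1f}x")   # per-side scale: 1.6x
print(f"pixel count: {pixel_ratio:.2f}x")       # pixel count: 2.56x
```

So the ~1 MP input ends up at roughly 2.4 MP, with the same 16:9 aspect ratio on both sides.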
