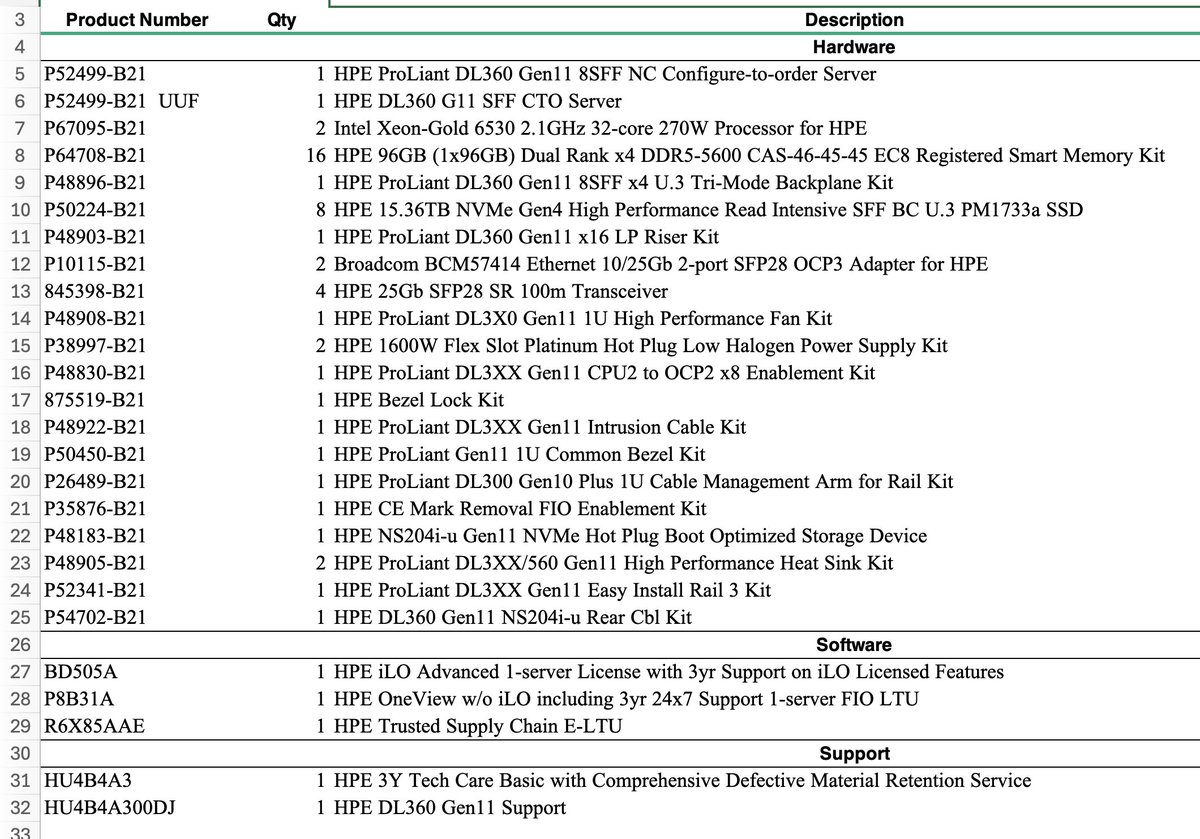

First off, the key things we want to focus on are:

What's on the BOM:

"HPE ProLiant DL360 Gen11 8SFF x4 U.3 Tri-Mode Backplane Kit"

"HPE 15.36TB NVMe Gen4 High Performance Read Intensive SFF BC U.3 PM1733a SSD"

What's not on the BOM:

SmartArray/RAID controller

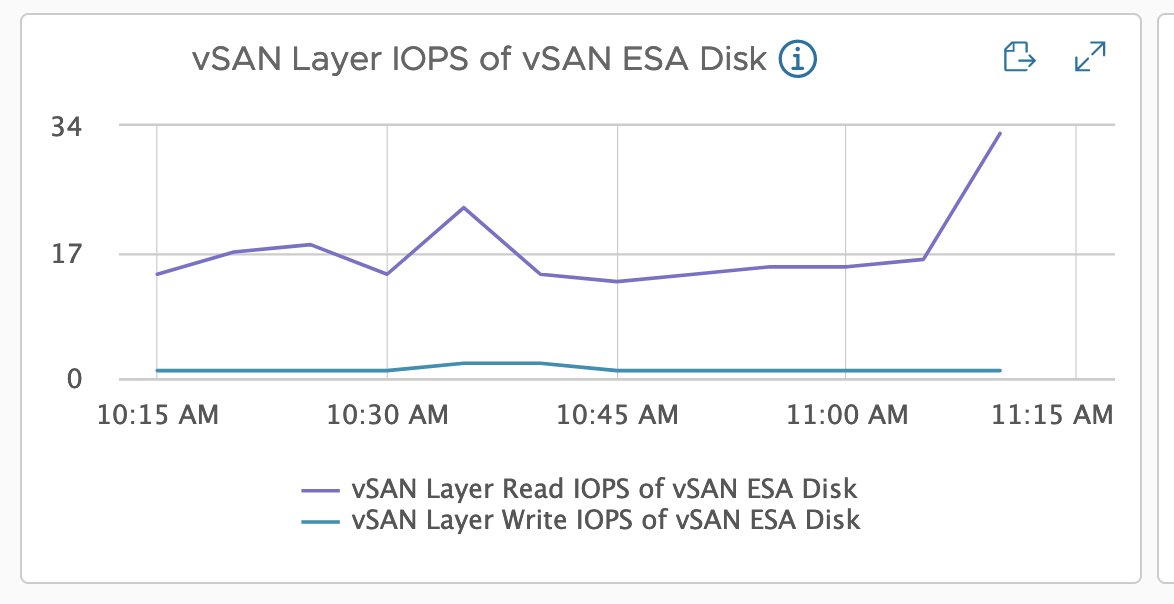

First off: HPE 15.36TB NVMe Gen4 High Performance Read Intensive SFF BC U.3 PM1733a SSD

Here is a search for all HPE drives on the vSAN VCG: vmware.com/resources/comp…

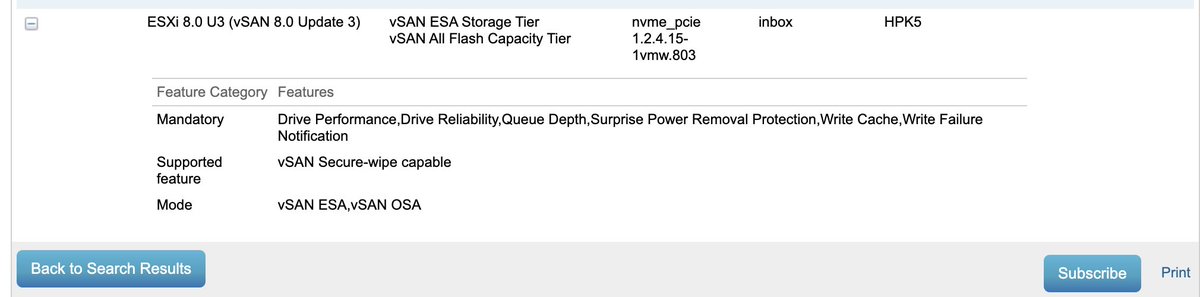

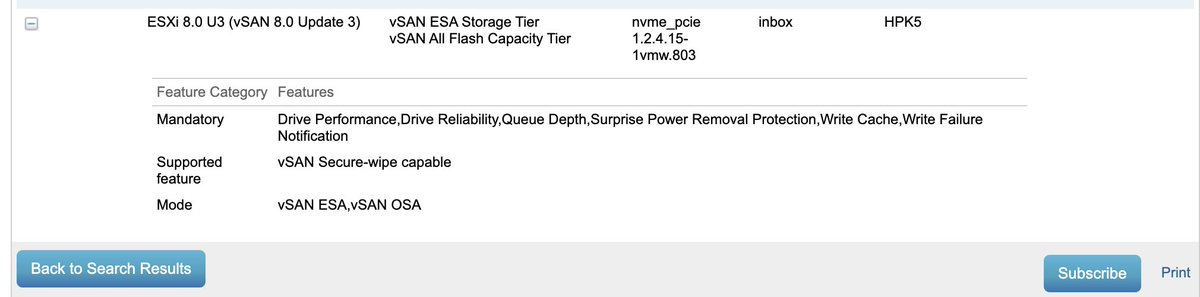

Here is the drive in question:

Note: while you're here, click the "Subscribe" button in the bottom corner to get updates on changes to the VCG. Also note that this drive uses the inbox driver, the newest supported firmware is HPK5, and it is certified for ESA.

vmware.com/resources/comp…

While I'm here, I'll check what that firmware version fixed. It looks fairly serious from a stability standpoint, so I'll make sure to use HPE's HSM + vLCM to patch this drive to the current firmware. support.hpe.com/hpesc/public/d…
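If you want a quick way to spot stragglers across a cluster before and after remediation, something like this sketch flags any drive not reporting the VCG-listed HPK5 firmware. The inventory dict, the host/bay names and the older "HPK3" value are hypothetical sample data. How you collect the real firmware strings (HSM, vLCM or otherwise) is outside the scope of this sketch.

```python
# Flag drives whose reported firmware differs from the newest VCG-listed version.
# Assumptions: the inventory below is hypothetical sample data, and an exact
# (case/whitespace-normalized) string match is all we need, since the question
# is simply "is this drive on HPK5 or not".
VCG_FIRMWARE = "HPK5"


def drives_needing_update(inventory: dict[str, str], target: str = VCG_FIRMWARE) -> list[str]:
    """Return sorted drive IDs whose firmware string is not the target version."""
    return sorted(
        drive for drive, fw in inventory.items() if fw.strip().upper() != target.upper()
    )


if __name__ == "__main__":
    # Hypothetical host:bay -> firmware mapping.
    inventory = {"esx01:bay1": "HPK5", "esx01:bay2": "HPK3", "esx02:bay1": "hpk5"}
    print(drives_needing_update(inventory))  # ['esx01:bay2']
```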

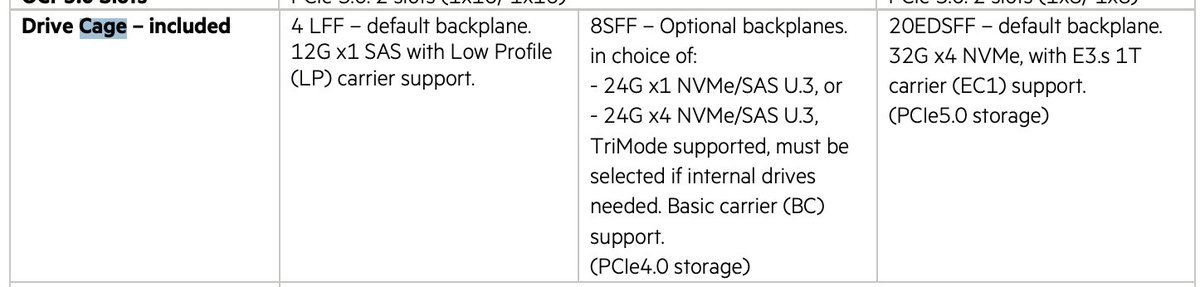

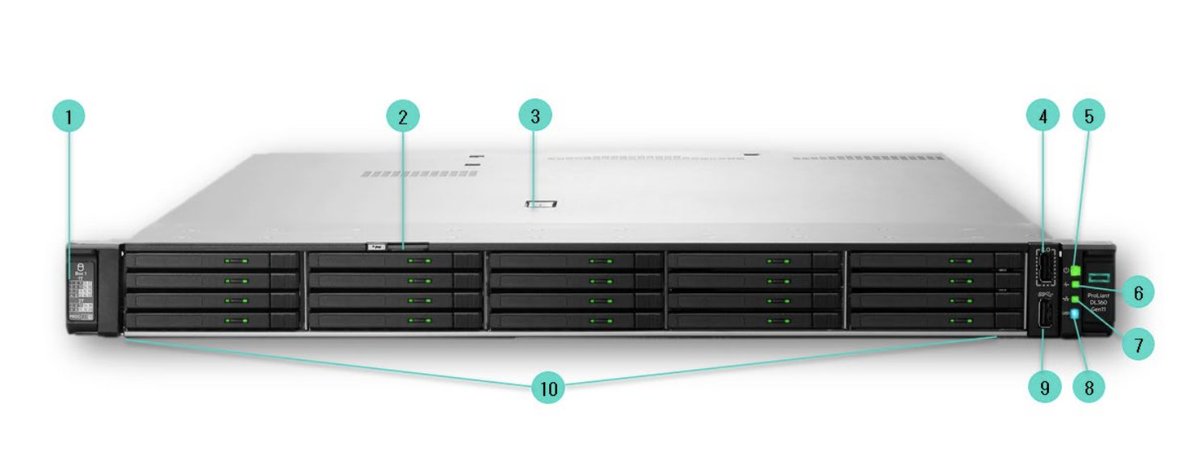

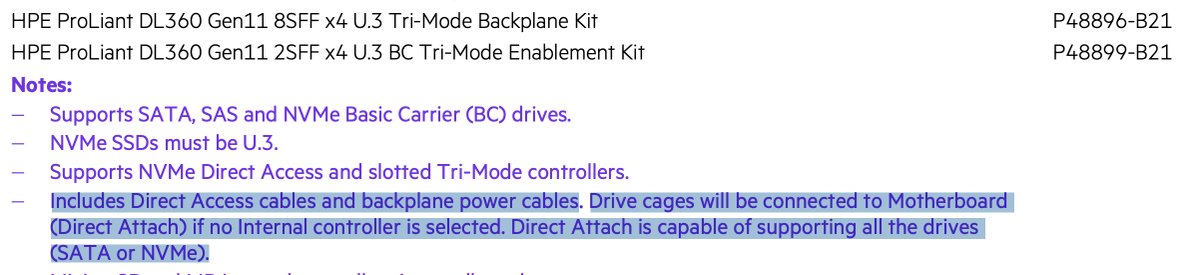

Next up, let's look at P48896-B21 (qty 1): HPE ProLiant DL360 Gen11 8SFF x4 U.3 Tri-Mode Backplane Kit

So this is the drive cage you NEED for NVMe in a DL360, as it gives 4 PCIe lanes to each drive (vs. the cheaper basic one, which is only x1 and only supports SATA in pass-through).
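To put rough numbers on the x4 vs x1 difference, here's a back-of-the-envelope sketch of per-drive PCIe Gen4 link bandwidth. It assumes Gen4 signalling (16 GT/s per lane with 128b/130b encoding) and ignores packet/protocol overhead, so treat the results as an upper bound on the link, not what a given drive will actually deliver.

```python
# Rough per-direction PCIe link bandwidth, before packet/protocol overhead.
# Assumption: PCIe Gen4 signalling at 16 GT/s per lane with 128b/130b encoding.
GEN4_TRANSFERS_PER_S = 16e9
ENCODING_EFFICIENCY = 128 / 130


def link_gb_per_s(lanes: int) -> float:
    """Approximate usable GB/s (decimal) in one direction for a Gen4 link."""
    bits_per_s = GEN4_TRANSFERS_PER_S * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    for lanes in (1, 4):
        print(f"Gen4 x{lanes}: ~{link_gb_per_s(lanes):.2f} GB/s")
    # Gen4 x1: ~1.97 GB/s
    # Gen4 x4: ~7.88 GB/s
```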

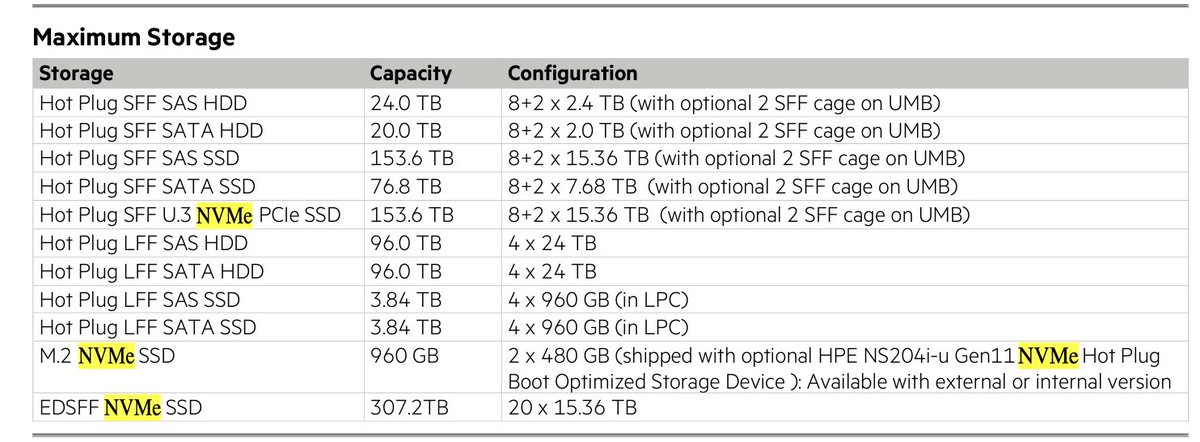

Looking at the QuickSpecs, there are 3 other options to consider for vSAN ESA:

LFF 3.5'': Can't do NVMe pass-through.

24G x1 NVMe/SAS U.3: Can't do NVMe pass-through, and frankly will underperform with NVMe drives even if used for RAID.

20 EDSFF: Supported for ESA.

Rambling out loud, I think E3 form factor stuff is a better long-term play, as it allows more density. 2.5'' SFF really is going to end up legacy, and for greenfield builds it shouldn't be needed.

Note the E3 config will support 300TB, 2x the SFF ones.
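One way to arrive at roughly that 300TB figure, assuming every bay holds the same 15.36TB drive from this BOM: the SFF chassis tops out at 10 bays (8 plus the 2SFF enablement kit), while the E3 layout has 20 EDSFF bays. These are raw numbers before any vSAN overhead, and the E3 drive size is an assumption, not something from the quote.

```python
# Raw (pre-vSAN-overhead) capacity per host for the two chassis layouts.
# Assumptions: every bay holds a 15.36TB drive, the SFF chassis is maxed at
# 8 + 2 bays (with the 2SFF enablement kit), and the E3 chassis has 20 bays.
DRIVE_TB = 15.36


def raw_capacity_tb(bays: int, drive_tb: float = DRIVE_TB) -> float:
    """Raw capacity in TB, rounded to hide floating-point noise."""
    return round(bays * drive_tb, 2)


if __name__ == "__main__":
    sff_max = raw_capacity_tb(8 + 2)  # SFF with the 2SFF enablement kit
    e3_max = raw_capacity_tb(20)      # 20 EDSFF bays
    print(f"SFF: {sff_max} TB, E3: {e3_max} TB, ratio {e3_max / sff_max:.1f}x")
    # SFF: 153.6 TB, E3: 307.2 TB, ratio 2.0x
```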

(Please go 100Gbps networking if you're doing something that dense!)

We do have an NS204i-u, but that is only for a pair of M.2 boot devices (and a GREAT idea for boot; stop doing SD cards and weird boot-from-SAN stuff!). It WILL NOT and cannot be used with the larger SFF or E3 format drives (and that's a good thing!).

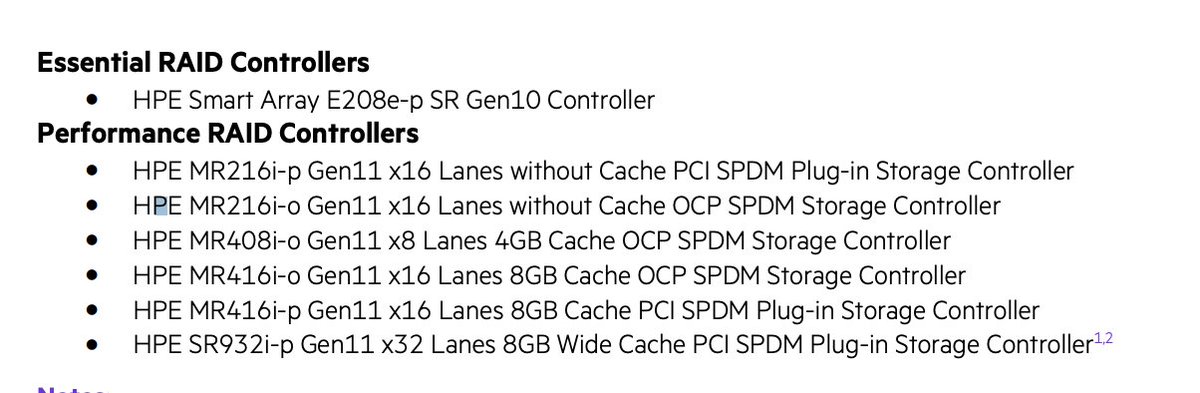

Next up: what's missing. There is NOT a RAID controller (these generally start with MR or SR). If there's one of these, the NVMe drives will potentially be cabled to it (and that's bad, and not supported by vSAN ESA).
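If you're reviewing a lot of quotes, the RAID controller check above, plus the x4 Tri-Mode cage check from earlier, can be sketched as a simple lint over the BOM description lines. This is a hypothetical helper: the MR/SR regex is my own approximation of HPE's controller naming, and it only looks at description text, so it's no substitute for the quoting tools or the QuickSpecs.

```python
import re

# Assumption: HPE RAID controllers show up as "MR" or "SR" followed by three
# digits and an "i" (an approximation of the naming pattern described above).
# The NS204i-u boot device deliberately does NOT match this pattern.
RAID_CONTROLLER = re.compile(r"\b(?:MR|SR)\d{3}i\b")
X4_TRIMODE_CAGE = "x4 U.3 Tri-Mode"


def review_bom(lines: list[str]) -> list[str]:
    """Return warnings for a vSAN ESA NVMe build; an empty list means it looks OK."""
    warnings = []
    if any(RAID_CONTROLLER.search(line) for line in lines):
        warnings.append("RAID controller on BOM: NVMe drives may be cabled to it (not supported for ESA)")
    if not any(X4_TRIMODE_CAGE in line for line in lines):
        warnings.append("No x4 U.3 Tri-Mode cage: NVMe direct attach not available")
    return warnings


if __name__ == "__main__":
    bom = [
        "HPE ProLiant DL360 Gen11 8SFF x4 U.3 Tri-Mode Backplane Kit",
        "HPE 15.36TB NVMe Gen4 High Performance Read Intensive SFF BC U.3 PM1733a SSD",
        "NS204i-u",  # boot device, shortened description
    ]
    print(review_bom(bom))  # []
```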

Per the QuickSpecs:

"Includes Direct Access cables and backplane power cables. Drive cages will be connected to Motherboard (Direct Attach) if no Internal controller is selected. Direct Attach is capable of supporting all the drives (SATA or NVMe)."

Now, I'll note this BOM only supports 8 drives, but if you're willing to not have an optical drive or a front USB/DisplayPort, there is a way to get 2 more cabled in:

HPE ProLiant DL360 Gen11 2SFF x4 U.3 BC Tri-Mode Enablement Kit P48899-B21

One other BOM review item: they went 4 x 25Gbps. If you don't already have 25Gbps ToR switches, I would honestly go 2 x 100Gbps. It's about 20% more cost all-in with cables and optics, but it's 2x the bandwidth, and the rack will look prettier.
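The bandwidth math is simple, but it's worth folding in the ~20% cost figure: 2x the bandwidth for ~1.2x the cost works out to roughly 1.67x the bandwidth per dollar. This sketch assumes every port is counted at line rate; usable throughput depends on your teaming/failover setup.

```python
# Compare aggregate per-host uplink bandwidth for the quoted and suggested NIC layouts.
# Assumptions: all ports counted at line rate, and the ~20% extra cost covers
# NICs, cables and optics as described above.
def aggregate_gbps(ports: int, gbps_per_port: int) -> int:
    """Total line-rate bandwidth across all ports, in Gbps."""
    return ports * gbps_per_port


def bandwidth_per_cost_gain(new_gbps: int, old_gbps: int, cost_ratio: float) -> float:
    """How much more bandwidth per unit of spend the new layout gives."""
    return (new_gbps / old_gbps) / cost_ratio


if __name__ == "__main__":
    quoted = aggregate_gbps(4, 25)      # 4 x 25Gbps as quoted
    suggested = aggregate_gbps(2, 100)  # 2 x 100Gbps suggested
    gain = bandwidth_per_cost_gain(suggested, quoted, cost_ratio=1.20)
    print(f"{quoted} Gbps -> {suggested} Gbps, ~{gain:.2f}x bandwidth per dollar")
    # 100 Gbps -> 200 Gbps, ~1.67x bandwidth per dollar
```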

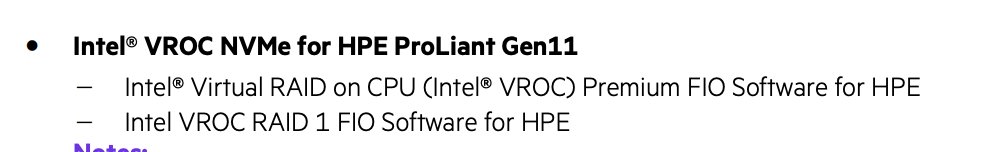

There's also not an Intel VROC license/config item on here. This is a "software(ish)" RAID option for NVMe. We don't need/want this for vSAN ESA. In theory there might be a way to use it for a boot device, but use the NS controller instead for now.

In general, talk to your HPE solution architects; their quoting tools should be able to help (HPE has always had really good channel tools). If possible, start with a ReadyNode/vSAN ESA option to lock out bad choices.

Thanks to Dan R for providing me some insight into this.

I'm sure @plankers already noticed the lack of a TPM.

It's now embedded, and disabled if your server is going to China.

I'm glad HPE stopped making this a removable option.

@plankers Another point: for anyone playing with the new memory tiering, you're also going to want the NVMe devices cabled this way, as that feature is not supported through a RAID controller either.