We're releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond.

These models can reason through complex tasks and solve harder problems than previous models in science, coding, and math. openai.com/index/introduc…

Rolling out today in ChatGPT to all Plus and Team users, and in the API for developers on tier 5.

OpenAI o1 solves a complex logic puzzle.

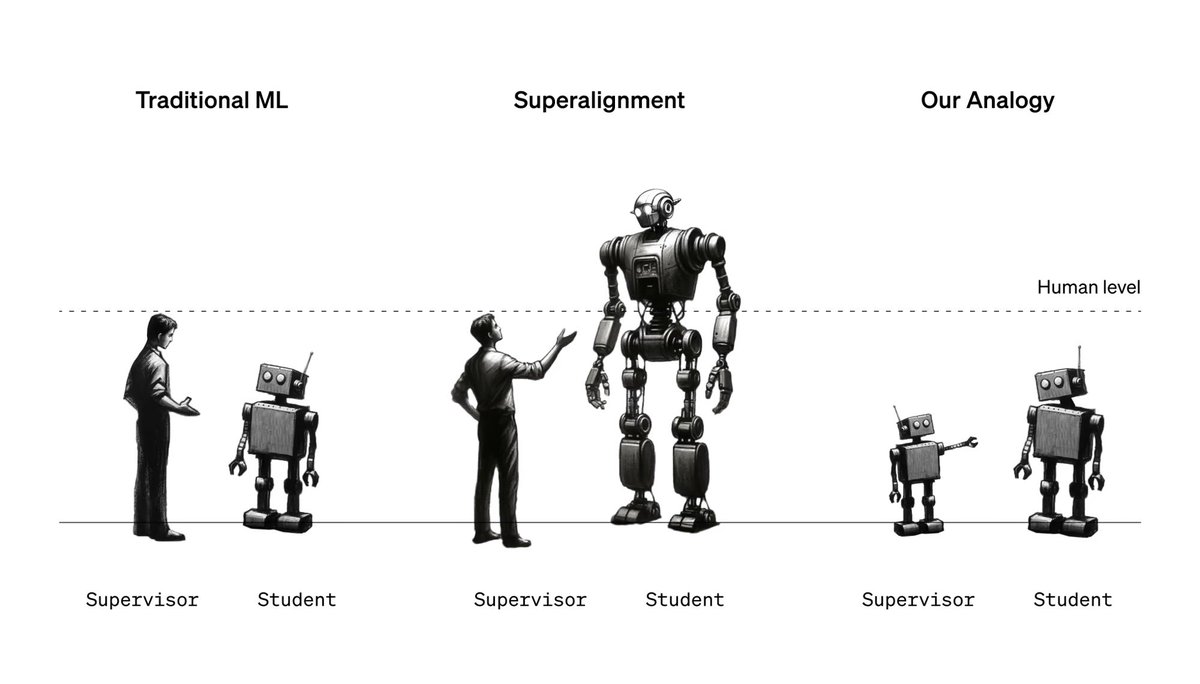

OpenAI o1 thinks before it answers and can produce a long internal chain-of-thought before responding to the user.

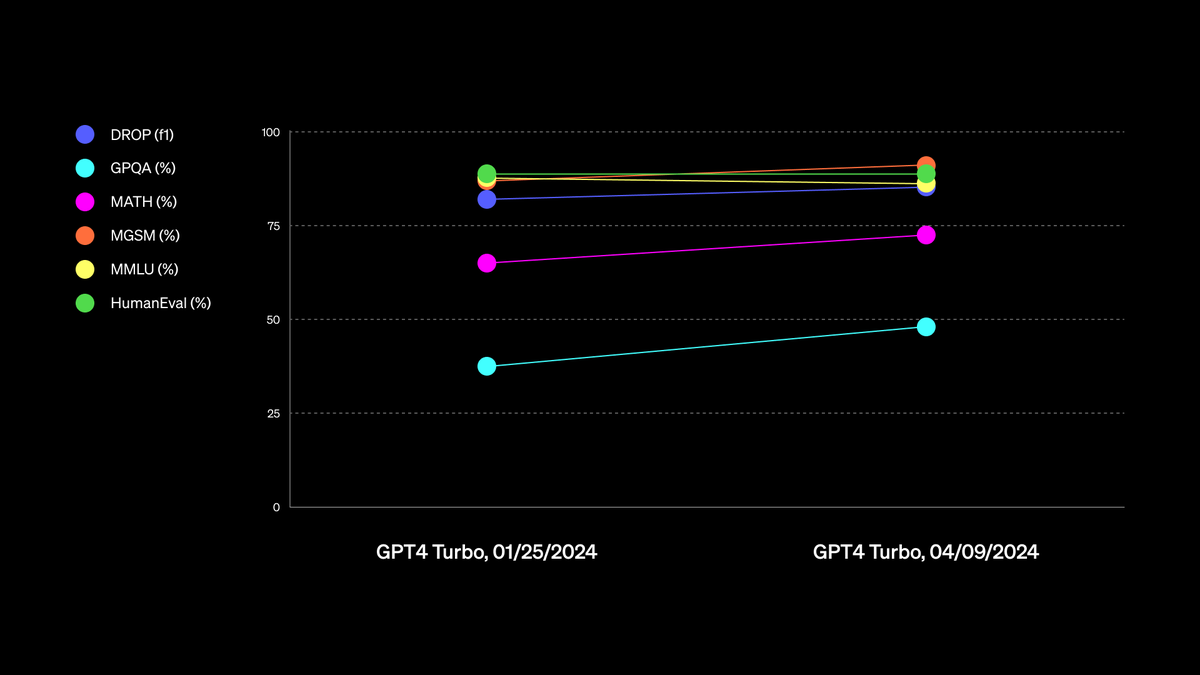

o1 ranks in the 89th percentile on competitive programming questions, places among the top 500 students in the US in a qualifier for the USA Math Olympiad, and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems.

openai.com/index/learning…

We're also releasing OpenAI o1-mini, a cost-efficient reasoning model that excels at STEM, especially math and coding.

openai.com/index/openai-o…

OpenAI o1 codes a video game from a prompt.

OpenAI o1 answers a famously tricky question for large language models.

OpenAI o1 translates a corrupted sentence.
