How to get a post's URL on the X (Twitter) app

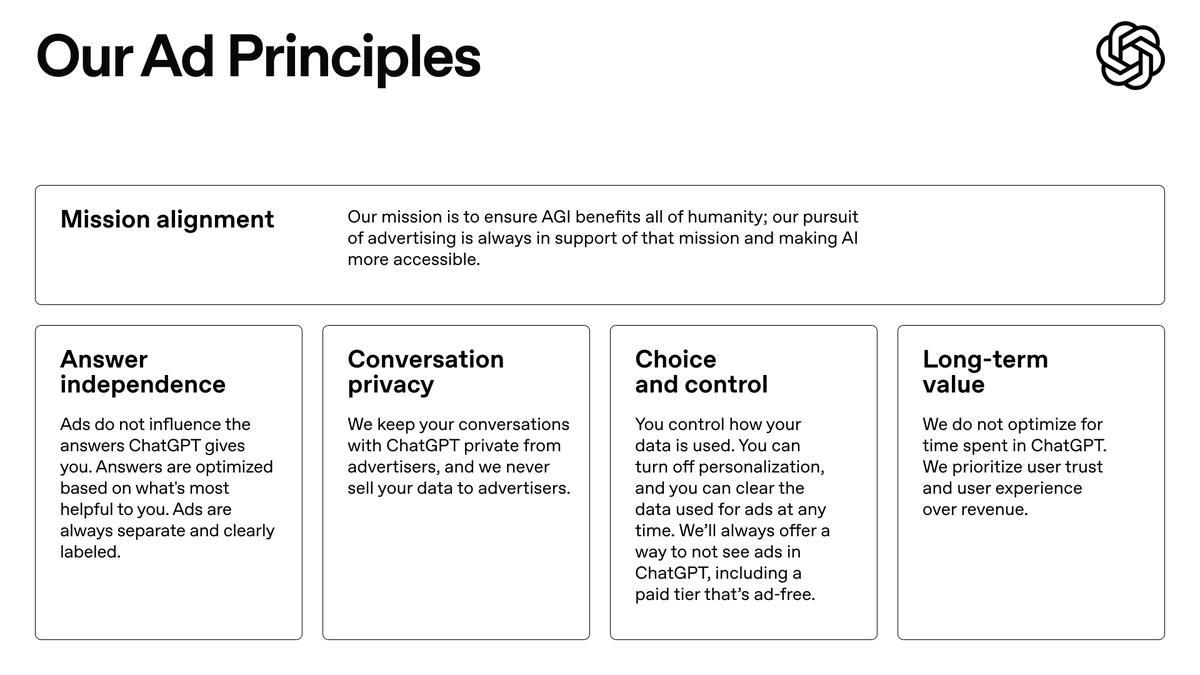

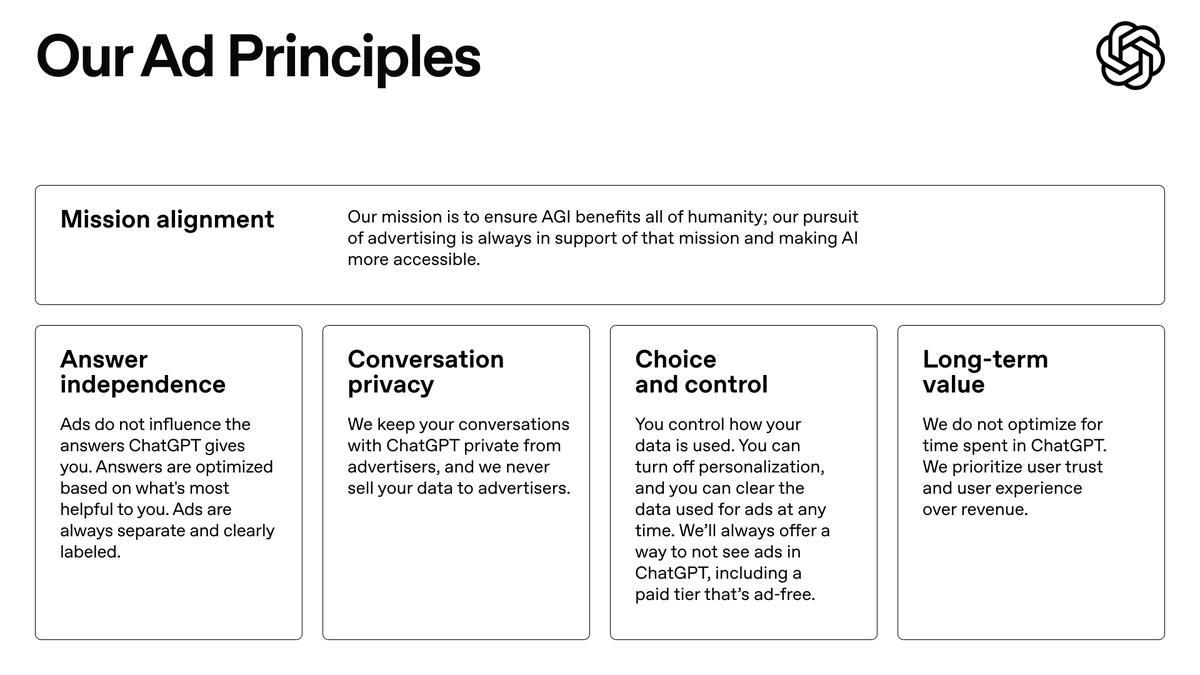

Here's an example of what the first ad formats we plan to test could look like.

https://x.com/OpenAI/status/1975261587280961675