A Kafka cluster in the cloud doing 30MB/s can cost more than $110,000 a year.

A $1,000 laptop can do 10x that.

Where did we go wrong? 👇

The Cloud. Namely - its absurd networking charges 👎

Let’s break it down simply:

• AWS charges you $0.01/GB for data crossing AZs (within the same region).

• They charge on every GB both in and out. Each time a GB crosses, you pay twice: once on the sender's side (outgoing) and once on the receiver's side (incoming).

• For a normal Kafka cluster with a replication factor of 3 and a read fanout of 3x, you are going to be charged:

• 2x on 2/3 of the produce throughput (producing it)

• 4x on 100% of the produce throughput (replicating it)

• 6x on 2/3 of the produce throughput (consuming it)

(but it can get a lot worse - read until the end to see)
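Those multipliers fit in a tiny function. This is a sketch under a few assumptions: clients are spread evenly across the AZs, everyone talks to the partition leader, and each follower sits in its own AZ. `billed_multiplier` and its parameters are made-up names for illustration.

```python
from fractions import Fraction


def billed_multiplier(replication_factor: int, read_fanout: int, azs: int) -> Fraction:
    """Cross-AZ GB billed per GB produced, counting both the OUT and IN side.

    Assumes clients are spread evenly across `azs` zones, all clients talk to
    the partition leader, and each follower lives in a different zone.
    """
    cross_az_share = Fraction(azs - 1, azs)  # clients outside the leader's AZ
    both_sides = 2                           # sender pays OUT, receiver pays IN

    produce = both_sides * cross_az_share                      # 2x on 2/3
    replication = both_sides * (replication_factor - 1)        # 4x on 100%
    consume = both_sides * read_fanout * cross_az_share        # 6x on 2/3
    return produce + replication + consume


print(billed_multiplier(replication_factor=3, read_fanout=3, azs=3))  # 28/3
```

At 30MB/s produced, 28/3 × 30 = 280MB/s of billed transfer. That is 140MB/s crossing AZs, paid on both ends.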

Simple example:

• 3-broker cluster, each in a separate AZ

• 3 producers, each in a separate AZ

• 3 consumer groups with 3 consumers each, with each group having one consumer per AZ

The producers are producing 30MB/s in total to the same leader.

2 of the 3 producers are in a different AZ from the leader, so 20MB/s of produce traffic is charged at cross-zone rates. 👌

It’s charged both on the OUT (producer’s side) and IN (broker’s side).

The leader is replicating the full 30MB/s to both of its replicas.

This is again being charged both on the OUT (leader’s side) and IN (follower’s side), for both replication links. (60MB/s)

Then, each of the 3 consumer groups has 3 consumers.

All consumers read from the leader, and 2 of every 3 sit in a different zone.

This results in 20MB/s of consume traffic charged at cross-zone rates PER GROUP. (60MB/s total)

Again charged both on the OUT (broker’s side) and IN (consumer’s side).

The total amounts to 140MB/s (20 + 60 + 60) of cross-AZ traffic, charged both ways.
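The same 140MB/s can be counted flow by flow. This sketch of the example above assumes the leader is in AZ "a", each producer sends an equal 10MB/s, and each group's consumers split the full 30MB/s evenly. The zone names and the helper are hypothetical.

```python
LEADER_AZ = "a"
AZS = ["a", "b", "c"]
PRODUCE_MBPS = 30.0


def cross_az_mbps() -> dict[str, float]:
    """Sum the MB/s that cross an AZ boundary in the 3-AZ example."""
    flows = {"produce": 0.0, "replication": 0.0, "consume": 0.0}

    # One producer per AZ, each sending an equal share to the leader.
    for az in AZS:
        if az != LEADER_AZ:
            flows["produce"] += PRODUCE_MBPS / len(AZS)

    # The leader ships the full stream to a follower in each other AZ.
    for az in AZS:
        if az != LEADER_AZ:
            flows["replication"] += PRODUCE_MBPS

    # 3 consumer groups, one consumer per AZ, each group reading the full stream.
    for _group in range(3):
        for az in AZS:
            if az != LEADER_AZ:
                flows["consume"] += PRODUCE_MBPS / len(AZS)

    return flows


flows = cross_az_mbps()
print(flows)                # {'produce': 20.0, 'replication': 60.0, 'consume': 60.0}
print(sum(flows.values()))  # 140.0
```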

At $0.01/GB, one MB costs $0.00001. Charged both ways, 140MB/s becomes 280MB/s of billed traffic, so we're paying $0.0028/s. 🤔

That’s:

• $241 a day 😕

• ~$7,360 a month 😥

• $88,300 a year 🤯

It all goes down the drain on network traffic ALONE. 🔥
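Here is the MB/s-to-dollars conversion as code. This sketch assumes decimal units (1GB = 1,000MB), a 365-day year, and that each cross-AZ MB is billed on both the OUT and IN side. `network_cost` is a hypothetical helper.

```python
PRICE_PER_GB = 0.01      # USD per GB, charged on each side of the transfer
MB_PER_GB = 1_000        # decimal units, as in the napkin math above
SECONDS_PER_DAY = 86_400


def network_cost(cross_az_mbps: float, days: float) -> float:
    """USD spent on cross-AZ transfer over `days`, billing both OUT and IN."""
    billed_mbps = 2 * cross_az_mbps  # OUT on the sender + IN on the receiver
    usd_per_second = billed_mbps * PRICE_PER_GB / MB_PER_GB
    return usd_per_second * SECONDS_PER_DAY * days


print(round(network_cost(140, 1), 2))  # 241.92
print(round(network_cost(140, 365)))   # 88301
```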

What about the hardware?

Quick napkin math assuming:

• 7 day retention

• all of the data is on EBS (not using tiered storage since it's not GA yet)

• keeping 50% of the disk free for operational purposes (don't ask me what happens if we run out of disk)

• the 3 brokers are running modest r4.xlarge instances (kinda overkill but hey, why not)

We'd pay:

• $19,440/yr for the EBS storage

• $6,990/yr for the EC2 instances

That’s right - you’re paying just $26.4k/yr for the hardware and $88.3k/yr for the network (3.3x the hardware).

For a total of $115k/yr. 💸

I’m not even counting load balancer costs, which could add another ~$12k/yr by some quick napkin math.
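Here is the hardware napkin math, sketched. The r4.xlarge price is an assumption: roughly $0.266/hour on-demand in us-east-1, to my knowledge, which lands right on the $6,990 figure. Storage is only sized here (30MB/s for 7 days, 3 replicas, 50% free), because the $/GB-month depends on the EBS volume type. The function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365
SECONDS_PER_DAY = 86_400


def ec2_cost_per_year(brokers: int, usd_per_hour: float) -> float:
    """On-demand instance cost for a year of 24/7 uptime."""
    return brokers * usd_per_hour * HOURS_PER_YEAR


def provisioned_gb(produce_mbps: float, retention_days: float,
                   replication_factor: int, free_fraction: float) -> float:
    """EBS capacity needed across the cluster, in decimal GB."""
    retained_gb = produce_mbps * SECONDS_PER_DAY * retention_days / 1_000
    stored_gb = retained_gb * replication_factor  # every replica keeps a full copy
    return stored_gb / (1 - free_fraction)        # keep this share of the disk empty


# Assumed price: ~$0.266/hour per r4.xlarge (on-demand, us-east-1).
print(round(ec2_cost_per_year(3, 0.266)))        # 6990
print(round(provisioned_gb(30, 7, 3, 0.5)))      # 108864
```

That's ~109TB provisioned across the cluster. At $19,440/yr, it implies roughly $0.015 per GB-month of storage.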

How ridiculous is that? 😂

Want it to get more ridiculous?

This calculation assumes you’re hosting your own Kafka cluster in the same AWS account.

💡 If you use a managed Kafka provider other than AWS, or simply run the cluster in another AWS account, you’re typically connecting to it through a public endpoint.

AWS then charges all of that traffic at the $0.01/GB internet traffic rate (the same as the cross-AZ rate), even when it stays within the same AZ.

The end result?

$113,000 a year for network costs. 💀

For 30MB/s. (!!!)

btw - 30 MB/s is absolutely nothing for Kafka... 🤡

It is most often network/disk bound.

Doing 3GB/s is not hard. 👌

The higher the throughput, the larger this absurd gap between network and hardware cost becomes.

For example - this exact setup could probably do 3x the traffic (90MB/s), assuming storage space isn't a concern.

Then you'd have:

• ~$265,000 a year at the cross-AZ rate. 🥲

• ~$341,000 a year at the internet rate. 💀
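Every flow grows with produce throughput, so the network bill grows linearly. This sketch reproduces the 30MB/s and 90MB/s numbers. It assumes $0.01/GB billed on both sides, a 365-day year, and 140MB/s of cross-AZ flow per 30MB/s produced. For the public-endpoint case it assumes 180MB/s (30 produce + 60 replication + 90 consume), which is how the $113k figure counts it.

```python
PRICE_PER_MB = 0.01 / 1_000  # $0.01/GB in decimal units
SECONDS_PER_YEAR = 86_400 * 365

# Traffic subject to the $0.01/GB charge, per MB/s produced (3-AZ example):
#   cross-AZ:        20 + 60 + 60 = 140 MB/s per 30 MB/s produced
#   public endpoint: 30 + 60 + 90 = 180 MB/s per 30 MB/s produced
FLOW_PER_PRODUCED_MB = {"cross-AZ": 140 / 30, "public endpoint": 180 / 30}


def yearly_network_cost(produce_mbps: float, scenario: str) -> float:
    """USD per year, billing every MB of the scenario's flow on OUT and IN."""
    flow_mbps = produce_mbps * FLOW_PER_PRODUCED_MB[scenario]
    return 2 * flow_mbps * PRICE_PER_MB * SECONDS_PER_YEAR


for produce in (30, 90):
    for scenario in FLOW_PER_PRODUCED_MB:
        print(f"{produce}MB/s {scenario}: ${yearly_network_cost(produce, scenario):,.0f}")
# 30MB/s cross-AZ: $88,301
# 30MB/s public endpoint: $113,530
# 90MB/s cross-AZ: $264,902
# 90MB/s public endpoint: $340,589
```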

Why do we accept this cost (more than $300k a year) and this complexity (this whole calculation) when three laptops could run the same workload practically for free?

Where did we go wrong?

Worth Noting:

There are a few optimizations that can be done here:

• consumers can use fetch-from-follower, which makes read traffic free (no cross-AZ charges) in the first example. The second example would still be charged at the internet rate. 🤝

• you can avoid internet costs by connecting the two AWS accounts via VPC peering or PrivateLink. This is largely what most managed Kafka providers do, since otherwise it becomes prohibitively expensive. It can be super complex to set up. 🔧

• AWS can give you large discounts (up to 90%+ afaict) on the list prices, depending on your usage. It’s unclear which customers get which discounts. 💰
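Here is what fetch-from-follower (KIP-392) does to the first example, as arithmetic only. This sketch assumes every consumer is served by the replica in its own AZ, so the consume flow stops crossing zones. In Kafka itself this is enabled with `broker.rack` and `replica.selector.class=org.apache.kafka.common.replica.RackAwareReplicaSelector` on the brokers and `client.rack` on the consumers. The Python below doesn't touch Kafka, and its helper names are hypothetical.

```python
PRICE_PER_MB = 0.01 / 1_000  # $0.01/GB in decimal units
SECONDS_PER_YEAR = 86_400 * 365

# Cross-AZ MB/s in the 3-AZ, 30 MB/s example, per flow, with everyone on the leader.
LEADER_ONLY = {"produce": 20, "replication": 60, "consume": 60}


def with_fetch_from_follower(flows: dict[str, float]) -> dict[str, float]:
    """Consumers read from a replica in their own AZ, so consume stays local."""
    return {**flows, "consume": 0}


def yearly_cost(flows: dict[str, float]) -> float:
    """USD per year, billing every cross-AZ MB on both the OUT and IN side."""
    return 2 * sum(flows.values()) * PRICE_PER_MB * SECONDS_PER_YEAR


print(round(yearly_cost(LEADER_ONLY)))                            # 88301
print(round(yearly_cost(with_fetch_from_follower(LEADER_ONLY))))  # 50458
```

Produce and replication traffic (80MB/s) still cross AZs. So in this example, fetch-from-follower cuts the network bill by roughly 43%, not to zero.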

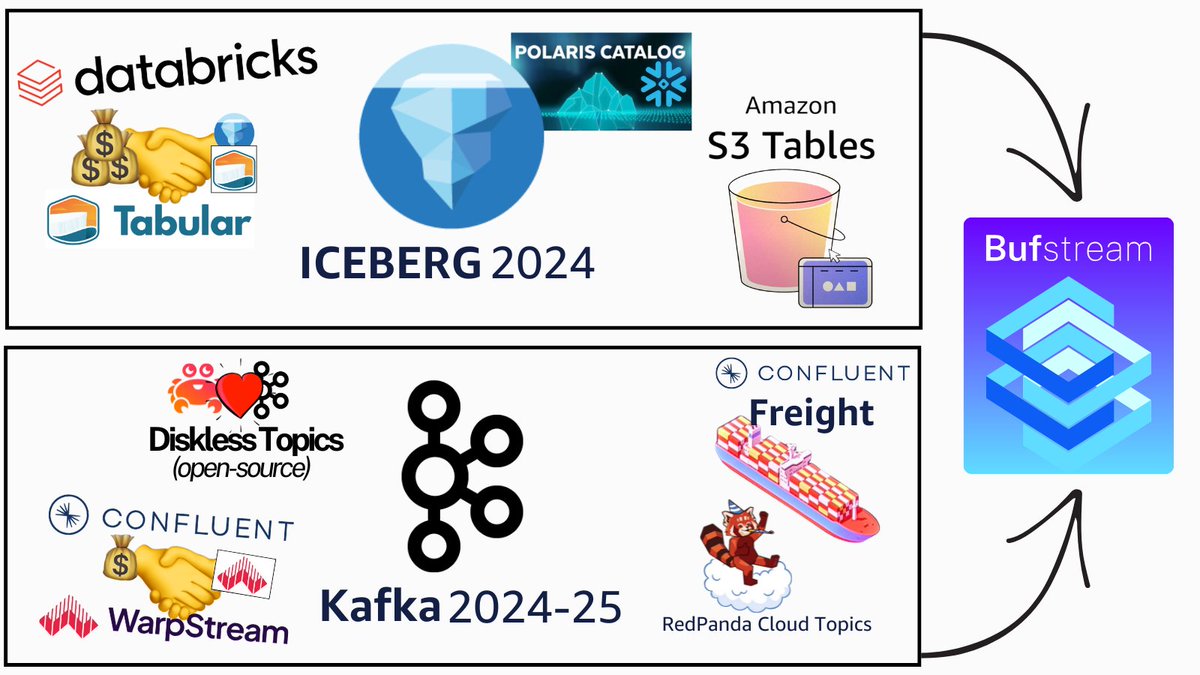

And perhaps the best option - you can use an ingeniously designed product like WarpStream that eliminates all of this complexity and cost. ⭐️

It's no wonder they got acquired after just 13 months of operation.

I had to edit this because I got the public endpoint traffic cost wrong.

There's surprisingly little information online about this, and many people seem confused about it.

After more research, I've concluded that public endpoint traffic is charged at the usual $0.01/GB rate, I believe on both sides.

It doesn't affect the first part of the calculation, but some of the later numbers were vastly inaccurate.
