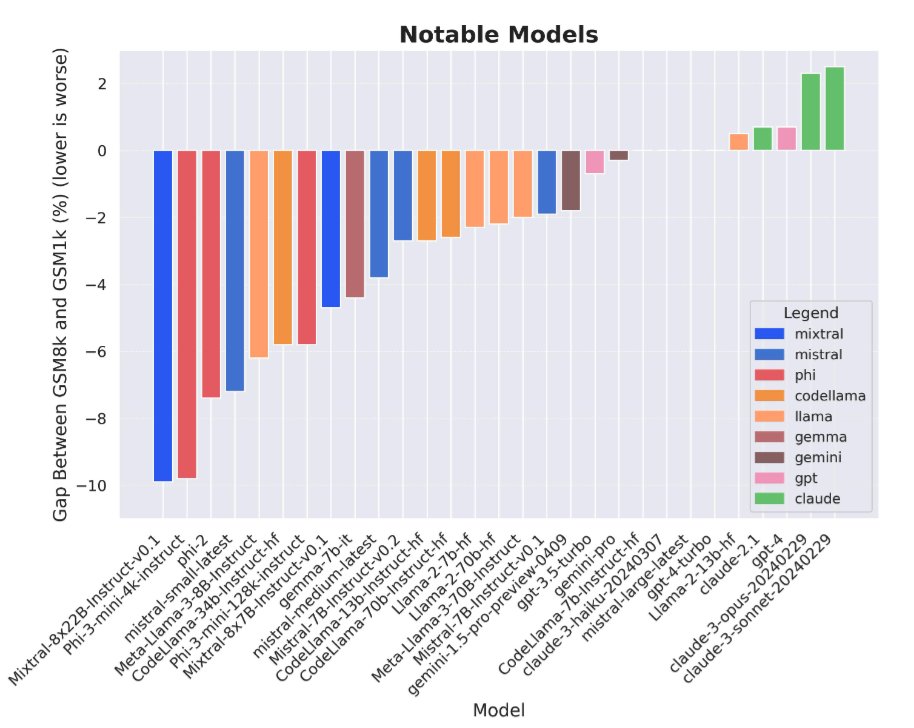

OpenAI recently released the o1 family of models and a graph showing scaling laws for test-time compute — sadly without the x-axis labeled.

Using only the public o1-mini API, I tried to reconstruct the graph as closely as possible. Original on left, my best attempt on right.

The OpenAI API does not allow you to easily control how many tokens to spend at test-time. I hack my way around this by telling o1-mini how long I want it to think for. Afterwards, I can figure out how many tokens were actually used based on how much the query cost!
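A minimal sketch of that cost-to-tokens step, not the code from the repo. The function name and the $3 / 1M input price in the usage line are my own assumptions for illustration; the $12 / 1M output price is the one quoted later in this thread.

```python
def estimate_output_tokens(cost_usd, input_tokens, input_price_per_m, output_price_per_m):
    """Back out how many output tokens a query used from what it was billed.

    Hypothetical helper: prices are USD per 1M tokens and are passed in
    explicitly because they are assumptions, not values read from the API.
    """
    # Subtract the part of the bill that paid for the prompt.
    input_cost = input_tokens * input_price_per_m / 1_000_000
    output_cost = cost_usd - input_cost
    if output_cost < 0:
        raise ValueError("cost is smaller than the input-token charge")
    # Whatever is left was spent on output tokens, hidden thinking included.
    return round(output_cost * 1_000_000 / output_price_per_m)


# Illustrative numbers only: a 100-token prompt billed at $0.0123 in total.
print(estimate_output_tokens(0.0123, 100, input_price_per_m=3.0, output_price_per_m=12.0))
```

The prompt is tiny compared to the thinking, so the input-price assumption barely moves the estimate.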

Here’s a plot of how many tokens we ask o1-mini to think for against how many it actually uses. If you request a very small token budget, it often refuses to listen, and the same goes for a very large budget. But in the region between 2^4 and 2^11, it seems to work reasonably well.

When restricting to just that region of 2^4 (16) to 2^11 (2048), we get the following curve. Note that o1-mini doesn't really "listen" to the precise number of tokens we ask it to use. In fact, in this region, it seems to consistently use ~8 times as many tokens as we ask for!
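One way to put a number on that multiplier is the geometric mean of used / requested, which is the average vertical offset on a log-log plot. This is a sketch under my own assumptions, not necessarily how the plot was analyzed. The `used` values below are synthetic placeholders built to be exactly 8x, not measurements.

```python
import math


def typical_ratio(requested, used):
    """Geometric mean of used/requested token counts (hypothetical helper)."""
    logs = [math.log2(u / r) for r, u in zip(requested, used)]
    return 2 ** (sum(logs) / len(logs))


requested = [2 ** k for k in range(4, 12)]  # budgets 16 ... 2048
used = [8 * r for r in requested]  # SYNTHETIC placeholder, not real o1-mini data
print(typical_ratio(requested, used))
```

Averaging in log space keeps the largest budgets from dominating the estimate.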

This only gets us to ~2^14 = 16K tokens spent at test-time (the 2^11 requested, times the ~8x multiplier). Despite fiddling with various prompts, I was unable to get the model to reliably "think" longer.

To scale further, I took a page from the self-consistency paper by doing repeated sampling and then taking a majority vote.
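The voting step itself is tiny. Here's a minimal sketch, assuming each sample has already been parsed down to a final answer string, with `None` for samples that failed to produce one. The function name and that parsing convention are mine, not necessarily what the repo does.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common answer among the samples, skipping failed ones (None)."""
    valid = [a for a in answers if a is not None]
    if not valid:
        return None  # every sample failed to produce an answer
    # On a tie, Counter.most_common keeps the answer that appeared first.
    return Counter(valid).most_common(1)[0][0]


# Made-up answers for one problem, purely for illustration.
print(majority_vote(["204", "204", "113", None, "204"]))  # prints 204
```

Total test-time compute for a problem is then roughly (tokens per sample) x (number of samples).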

So one natural question when seeing scaling laws graphs is: how long does this trend continue? For the original scaling laws for pre-training, each additional datapoint cost millions of dollars so it took some time to see the additional datapoints.

In this case, for scaling test-time compute, the reconstructed graph was surprisingly cheap to make. 2^17 tokens / problem * 30 AIME problems from 2024 = ~4M tokens. At $12 / 1M output tokens, the largest inference run only costs about $50. o1-mini doesn't cost that much!
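Spelling out that arithmetic as a quick sanity check. The function name is just for illustration; the numbers are the ones quoted above.

```python
def run_cost_usd(tokens_per_problem, num_problems, price_per_million_output):
    """Cost of one inference run, counting output tokens only."""
    total_tokens = tokens_per_problem * num_problems
    return total_tokens / 1_000_000 * price_per_million_output


total = 2 ** 17 * 30  # 3,932,160 tokens, i.e. ~4M
print(total, run_cost_usd(2 ** 17, 30, 12.0))  # roughly $47
```

This ignores input tokens, which are a small fraction of the bill at these thinking lengths.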

Sadly, self-consistency / majority vote doesn't seem to scale much past the initial gains. I increased the number of samples 16x beyond the most successful run, but saw no further gains past 2^17 total tokens and ~70% accuracy, which is just under what the original OpenAI graph showed.

This is consistent with past work suggesting that majority voting saturates at some point (classic statistics says something similar too). So we won't be able to hack around getting the models to think longer if we want to scale up test-time compute.
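To see the classic-statistics version of this, here's a toy model (my simplification, not the thread's analysis): each sample is independently right with probability p, and there is only one wrong answer to vote for. The chance that the majority is right is then a binomial tail.

```python
from math import comb


def p_majority_correct(p, n):
    """P(more than half of n independent samples are correct), for odd n."""
    return sum(
        comb(n, k) * p ** k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# Compare a problem the model usually gets right with one it usually gets wrong.
for n in (1, 9, 81):
    print(n, round(p_majority_correct(0.6, n), 3), round(p_majority_correct(0.4, n), 3))
```

In this model, more samples push problems with p > 0.5 toward always right and problems with p < 0.5 toward always wrong. Accuracy on the benchmark flattens out at the fraction of problems where the right answer is already the most likely one, no matter how many more samples you add. Real problems have many possible wrong answers, so the actual threshold is "most common answer" rather than 0.5, but the saturation is the same.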

I expect that forcing the model to think for longer in token space is likely more effective than repeated sampling + majority vote in terms of scaling test-time compute. Given how cheap the replication was, I'm super curious to see how this approach scales.

If you want to play with the data / code yourself, it’s all available below. All code written with help from @cursor_ai, Claude 3.5 Sonnet, and of course, o1.

github.com/hughbzhang/o1_…

Finally, wanted to give a huge shout out to @cHHillee, @jbfja, @ecjwg and Celia Chen for feedback / thoughts on initial versions of this!
