There are people who desperately want this to be untrue🧵

One example of this came up earlier this year, when a "Professor of Public Policy and Governance" accused other people of being ignorant about SAT scores because, he alleged, high schools predicted college grades better.

The thread in question was, ironically, full of irrelevant points that seemed intended to mislead, accompanied by very obvious statistical errors.

For example, one post in it received a Community Note for conditioning on a collider.
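If "conditioning on a collider" is unfamiliar, here is a minimal sketch of the general phenomenon. It is not a reconstruction of the post that got the Community Note; the variables `x`, `y`, and the selection rule are made up for illustration. Two traits are generated independently, then we only look at cases where their sum clears a cutoff (the collider), and a negative correlation appears out of nowhere.

```python
import numpy as np

# Hypothetical setup: two traits that are independent by construction.
rng = np.random.default_rng(0)
n = 100_000
x = rng.normal(size=n)
y = rng.normal(size=n)

# Selection depends on both traits, so "selected" is a collider.
# The cutoff of 1.0 is an arbitrary illustrative choice.
selected = (x + y) > 1.0

# Correlation in the full sample vs. only among the selected cases.
corr_all = np.corrcoef(x, y)[0, 1]
corr_selected = np.corrcoef(x[selected], y[selected])[0, 1]

print(f"correlation, everyone:      {corr_all:.3f}")
print(f"correlation, selected only: {corr_selected:.3f}")
```

Restricting to the selected group induces a relationship between `x` and `y` that does not exist in the full population, which is why analyses run only on admitted or enrolled students need care.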

But let's ignore the obvious things. I want to focus on this one: the idea that high schools explain more of student achievement than SAT scores do.

The evidence for this? The increase in R^2 going from a model without high school fixed effects to a model with them.

This interpretation is bad.

The R^2 of the overall model did not increase because high schools are more important determinants of student achievement. This result cannot be interpreted to mean that your zip code is more important than your gumption and effort in school.

If we open the report, we see this:

Students from elite high schools and from disadvantaged ones receive similar results when it comes to SATs predicting achievement. If high schools really explained a lot, this wouldn't be the case.

What we're seeing is a case where R^2 was misinterpreted.

The model R^2 blew up because there's a fixed effect for every high school in this national-level dataset.

That means all the little differences between high schools are absorbed (a lot of variation!), so the model is overfit, and that is what explains the high R^2.
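To see how mechanical this is, here is a small simulation sketch. It does not use the report's data; the sample sizes, the 0.5 slope, and the variable names are all assumptions chosen for illustration. College GPA is generated from SAT plus noise with no school effect at all, yet adding a dummy for every school still raises the in-sample R^2, because each extra parameter soaks up some noise.

```python
import numpy as np

def r_squared(X, y):
    """In-sample R^2 of an OLS fit of y on the columns of X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

def simulate(n_students=2000, n_schools=500, seed=0):
    rng = np.random.default_rng(seed)
    # Students are assigned to schools at random (hypothetical setup).
    school = rng.integers(0, n_schools, size=n_students)
    sat = rng.normal(size=n_students)
    # By construction, schools have NO effect on the outcome.
    gpa = 0.5 * sat + rng.normal(size=n_students)

    # Model 1: intercept + SAT.
    X_base = np.column_stack([np.ones(n_students), sat])
    # Model 2: SAT + one dummy per school (the dummies absorb the intercept).
    dummies = (school[:, None] == np.arange(n_schools)).astype(float)
    X_fe = np.column_stack([sat, dummies])

    return r_squared(X_base, gpa), r_squared(X_fe, gpa)

r2_base, r2_fe = simulate()
print(f"R^2 without school fixed effects: {r2_base:.3f}")
print(f"R^2 with school fixed effects:    {r2_fe:.3f}")
```

With only a handful of students per school, the jump in R^2 here comes entirely from fitting noise, so a jump like this on its own says nothing about how much schools matter.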

This professor should've known better for many reasons.

For example, we know there's more variation between classrooms than between school districts when it comes to student achievement.

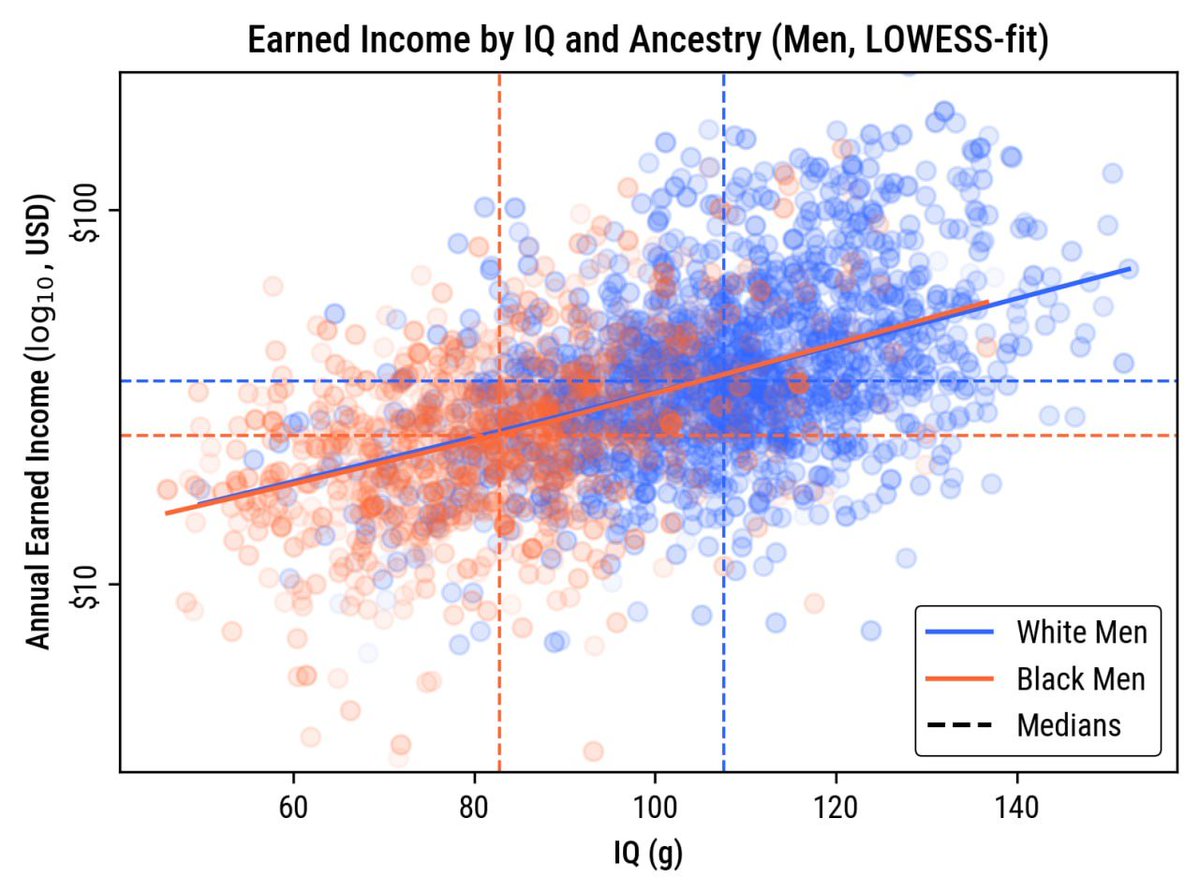

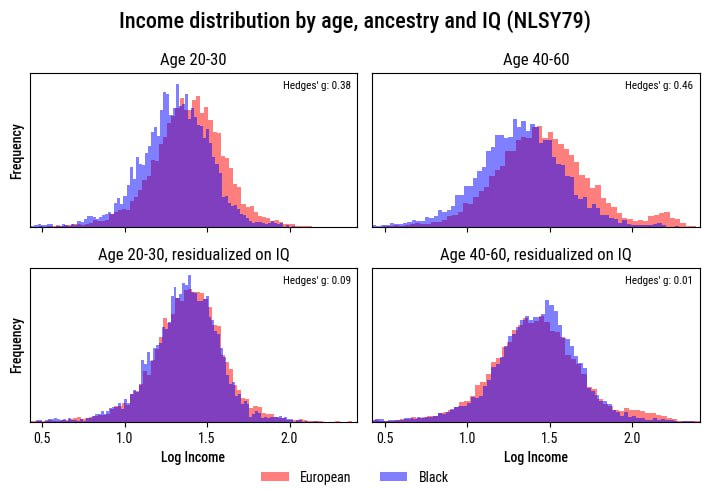

https://x.com/cremieuxrecueil/status/1744459260996489545

As another example, we know that achievement gaps exist along the whole continuum of school and district quality.

If the issue was really zip codes, high schools, and so on, this shouldn't be the case.

https://x.com/cremieuxrecueil/status/1744462509753417991

The other thing this professor should've known is that high school is biased! GPAs are biased too!

The bias in GPAs has actually been exploited: elite high schools inflate grades and don't report class ranks, so students appear better than they are.

But you know what isn't a biased tool for admissions? Just one thing: test scores.

Want to learn more? Here are some sources:

x.com/cremieuxrecuei…

cremieux.xyz/p/what-happens…

cremieux.xyz/p/bias-in-admi…