Our GPU organ plays music acoustically by controlling the RPM of each fan

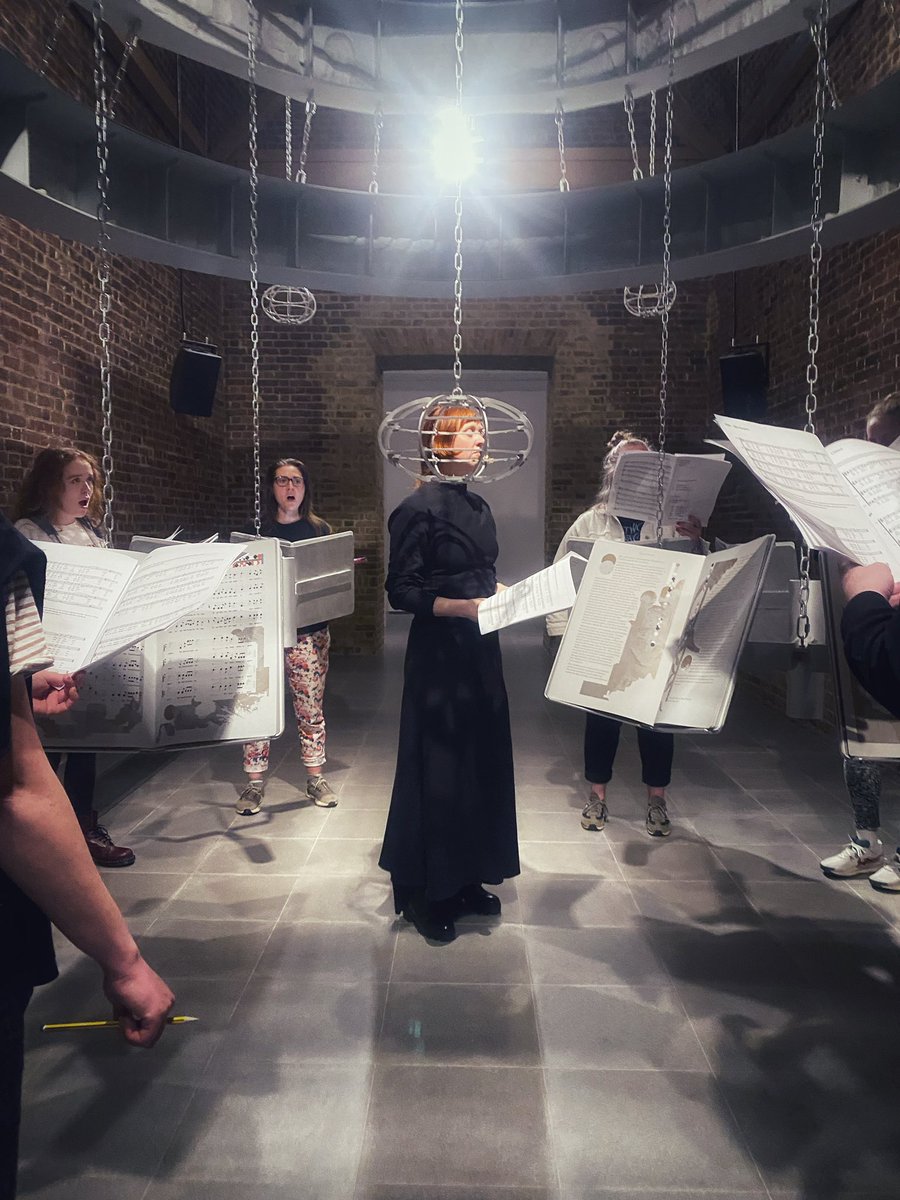
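For a sense of how fan speed can become pitch, here is a minimal sketch. It is not the installation's actual control code. It assumes the audible tone of a fan is dominated by its blade-pass frequency (blades × revolutions per second), and the 9-blade count and the function names are hypothetical choices for illustration.

```python
# Sketch only: maps a musical note to a target fan speed, assuming the fan's
# pitch is its blade-pass frequency. Blade count and names are hypothetical.

A4_HZ = 440.0   # concert pitch reference
A4_MIDI = 69    # MIDI note number of A4


def midi_to_hz(note: int) -> float:
    """Equal-temperament frequency of a MIDI note number."""
    return A4_HZ * 2.0 ** ((note - A4_MIDI) / 12.0)


def hz_to_rpm(freq_hz: float, blades: int) -> float:
    """RPM at which a fan with `blades` blades has blade-pass frequency freq_hz."""
    if blades <= 0:
        raise ValueError("blades must be positive")
    # blade-pass frequency = blades * rpm / 60, solved for rpm
    return 60.0 * freq_hz / blades


def note_to_rpm(note: int, blades: int = 9) -> float:
    """Target RPM for a MIDI note on a fan with the given (assumed) blade count."""
    return hz_to_rpm(midi_to_hz(note), blades)


if __name__ == "__main__":
    for note in (57, 60, 64, 69):  # A3, C4, E4, A4
        print(note, round(note_to_rpm(note), 1))
```

Under these assumptions a 9-blade fan would need to spin at roughly 2,933 RPM to sound A4 (440 Hz). Real fans have a limited speed range, so a real instrument would likely have to pick notes, or fans, that fit inside it.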

The Call is now open to the public until February at @SerpentineUK in Hyde Park, London

It plays along with music generated from a diffusion model that creates new songs based on the (volunteered) training data of choirs from across the UK

It holds a symbolic AI score-generating model, encased in brass, that can generate infinite scores for it to play

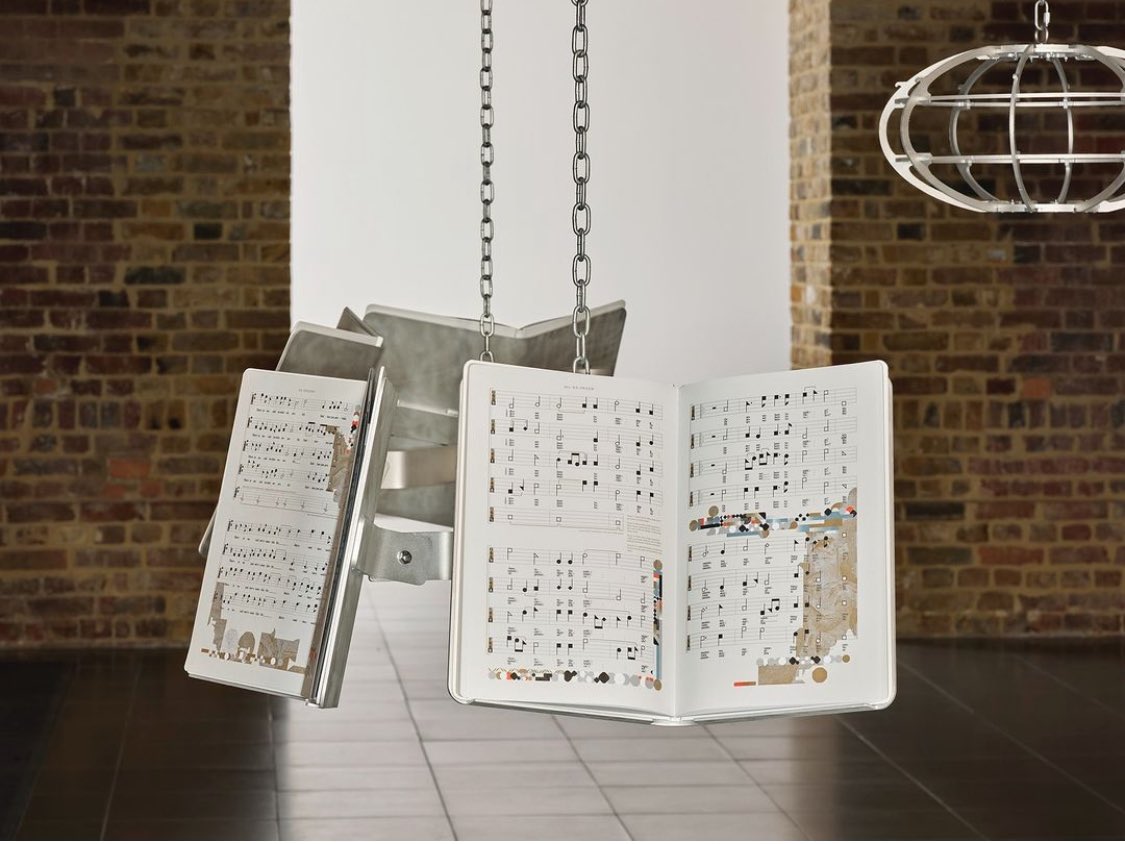

We made a songbook (designed by @_MichaelOswell_ and @casedeclined) specifically for AI training.

If you sing all the songs in it you will have fed a model every phoneme in the English language

We took this book on tour to record 15 choirs across the UK to produce the dataset

The dataset is now the largest available to researchers, and is future-proofed: each choir was recorded ambisonically to provide precise recall of the sound in each room

This dataset is owned by the choirs themselves through a new IP structure we created alongside the @SerpentineUK Future Art Ecosystems team to allow for common ownership of AI data

The dataset was used to train a new polyphonic call-and-response model, developed with @Ircam, that lets you sing to the model and receive a response back

The voices, and our own archives of recorded music, were also used to train a diffusion model to make entirely new, emergent songs that intermittently fill the space (infinite thanks to @zqevans and @cortexelation of @StabilityAI)

We fed generated songs back to the model recursively to prompt it to harmonize with itself, so the songs are played back in multichannel, like a choir 🤯

“The Call” is open to the public now in London @SerpentineUK

Infinite thanks to @eva__jaeger @Ruthywaters @kayhannahwatson Vi Trinh @HUObrist Bettina Korek, Liz Stumpf, Richard Install, Zsuzsa Benke, sub, FAE, Ian Berman, Andrew Roberts, @algomus @Ircam @zqevans @cortexelation @_MichaelOswell_ @casedeclined @1OF1_art @fellowshiptrust and the many choirs who contributed their time and voices to this effort ❤️

The guiding principle of this project was to find the beauty in contributing to something greater than the sum of its parts, and nothing truer could be said about the genesis of this show. A monumental collective effort.
