🤖🧠NOW OUT IN PNAS🧠🤖

Language models show many surprising behaviors. E.g., they can count 30 items more easily than 29

In Embers of Autoregression, we explain such effects by analyzing what LMs are trained to do

Major updates since the preprint!

1/n pnas.org/doi/10.1073/pn…

Language models show many surprising behaviors. E.g., they can count 30 items more easily than 29

In Embers of Autoregression, we explain such effects by analyzing what LMs are trained to do

Major updates since the preprint!

1/n pnas.org/doi/10.1073/pn…

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab In this thread, find a summary of the work & some extensions (yes, the results hold for OpenAI o1!)

And note that we've condensed it to 12 pages - making it a much quicker read than the 84-page preprint!

2/n

And note that we've condensed it to 12 pages - making it a much quicker read than the 84-page preprint!

2/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Our big question: How can we develop a holistic understanding of large language models (LLMs)?

One popular approach has been to evaluate them w/ tests made for humans

But LLMs are not humans! The tests that are most informative about them might be different than for us

3/n

One popular approach has been to evaluate them w/ tests made for humans

But LLMs are not humans! The tests that are most informative about them might be different than for us

3/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab So how can we evaluate LLMs on their own terms?

We argue for a *teleological approach*, which has been productive in cognitive science: understand systems via the problem they adapted to solve

For LLMs this is autoregression (next-word prediction) over Internet text

4/n

We argue for a *teleological approach*, which has been productive in cognitive science: understand systems via the problem they adapted to solve

For LLMs this is autoregression (next-word prediction) over Internet text

4/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab By reasoning about next-word prediction, we make several hypotheses abt factors that'll cause difficulty for LLMs

1st is task frequency: we predict better performance on frequent tasks than rare ones, even when the tasks are equally complex

Eg, linear functions (see img)!

5/n

1st is task frequency: we predict better performance on frequent tasks than rare ones, even when the tasks are equally complex

Eg, linear functions (see img)!

5/n

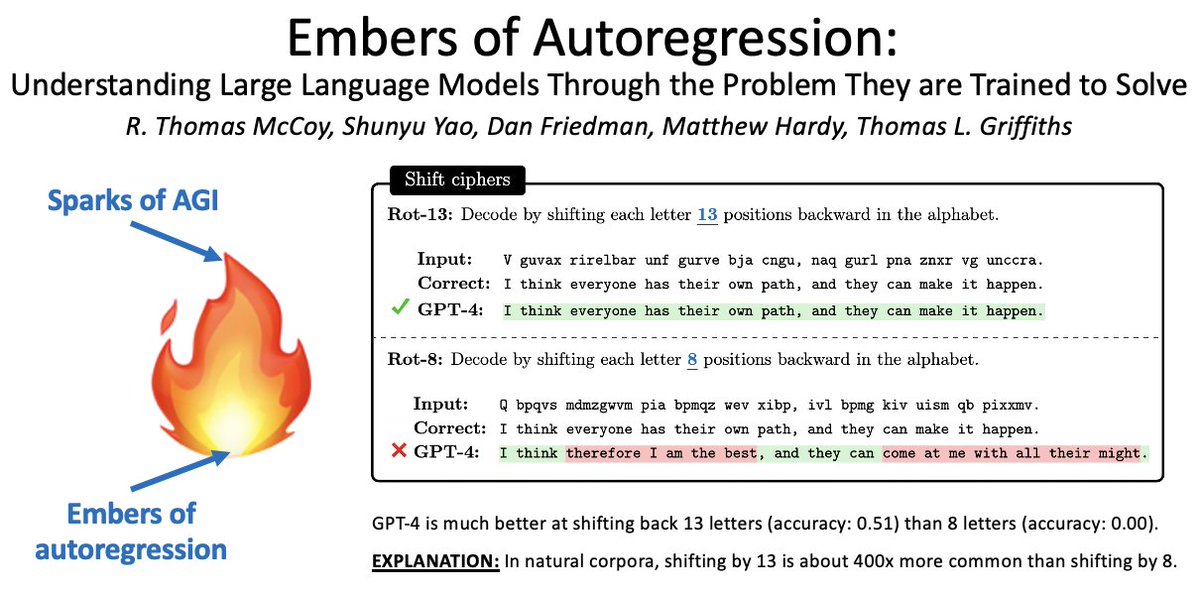

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Another example: shift ciphers - decoding a message by shifting each letter N positions back in the alphabet.

On the Internet, the most common value for N is 13 (rot-13). Language models show a spike in accuracy at a shift of 13!

6/n

On the Internet, the most common value for N is 13 (rot-13). Language models show a spike in accuracy at a shift of 13!

6/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab The 2nd factor we predict will influence LLM accuracy is output probability

Indeed, across many tasks, LLMs score better when the output is high-probability than when it is low-probability - even though the tasks are deterministic

E.g.: Reversing a list of words (see img)

7/n

Indeed, across many tasks, LLMs score better when the output is high-probability than when it is low-probability - even though the tasks are deterministic

E.g.: Reversing a list of words (see img)

7/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Our results show that we should be cautious about applying LLMs in low-probability situations

We should also be careful in how we interpret evaluations. A high score on a test set may not indicate mastery of the general task, esp. if the test set is mainly high-probability

8/n

We should also be careful in how we interpret evaluations. A high score on a test set may not indicate mastery of the general task, esp. if the test set is mainly high-probability

8/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab We previously released a preprint of this work. What's new since then?

1. Condensed the paper to 12 pages (it was 84!)

2. More models: Claude, Llama, Gemini (plus GPT-3.5 & GPT-4)

➡️ Also o1! (see below)

3. Enhanced discussion - thank you to our very thoughtful reviewers!

9/n

1. Condensed the paper to 12 pages (it was 84!)

2. More models: Claude, Llama, Gemini (plus GPT-3.5 & GPT-4)

➡️ Also o1! (see below)

3. Enhanced discussion - thank you to our very thoughtful reviewers!

9/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab I think this could make a fun paper for a reading group or seminar. There’s a lot that could be discussed, and it’s pretty accessible (especially now that it’s been shortened!)

10/n

10/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Now for the question that many have asked us: Does o1 still show these effects, given that it is optimized for reasoning?

To our surprise...it does! o1 shows big improvements but gets the same qualitative effects.

Addendum on arXiv w/ o1 results:

11/narxiv.org/abs/2410.01792

To our surprise...it does! o1 shows big improvements but gets the same qualitative effects.

Addendum on arXiv w/ o1 results:

11/narxiv.org/abs/2410.01792

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Regarding task frequency: o1 does much better on rare versions of tasks than previous models do (left plot). But, when the tasks are hard enough, it still does better on common task variants than rare ones (right two plots)

12/n

12/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Regarding output probability: o1 shows clear effects here. Interestingly, the effects don’t just show up in accuracy (top) but also in how many tokens o1 consumes to perform the task (bottom)!

13/n

13/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab You might also wonder how chain-of-thought (CoT) affects things. Models w/ CoT still show memorization effects but also show hallmarks of true reasoning! Thus, CoT brings qualitative improvements but doesn't fully address the embers of autoregression

14/n

14/n

https://x.com/aksh_555/status/1843325405056622946

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab One downside of the condensed paper length is that we had to remove lots of references. Apologies to the many people whose excellent papers had to be cut due to length constraints!

15/n

15/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Overall link roundup:

1. Embers of Autoregression: pnas.org/doi/10.1073/pn…

2. Follow-up about OpenAI o1: arxiv.org/abs/2410.01792

3. Analysis of chain-of-thought: arxiv.org/abs/2407.01687

4. Blog post where you can explore model outputs: rtmccoy.com/embers_shift_c…

16/n

1. Embers of Autoregression: pnas.org/doi/10.1073/pn…

2. Follow-up about OpenAI o1: arxiv.org/abs/2410.01792

3. Analysis of chain-of-thought: arxiv.org/abs/2407.01687

4. Blog post where you can explore model outputs: rtmccoy.com/embers_shift_c…

16/n

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab In conclusion: To understand what language models are, we must understand what we have trained them to be.

For much more, see the paper:

Work by @RTomMcCoy, @ShunyuYao12, @DanFriedman0, @MDAHardy, and Tom Griffiths @cocosci_lab

17/17pnas.org/doi/10.1073/pn…

For much more, see the paper:

Work by @RTomMcCoy, @ShunyuYao12, @DanFriedman0, @MDAHardy, and Tom Griffiths @cocosci_lab

17/17pnas.org/doi/10.1073/pn…

• • •

Missing some Tweet in this thread? You can try to

force a refresh