Assistant professor @YaleLinguistics. Studying computational linguistics, cognitive science, and AI. He/him.

How to get URL link on X (Twitter) App

Bayesian models can learn from few examples because they have strong inductive biases - factors that guide generalization. But the costs of inference and the difficulty of specifying generative models can make naturalistic data a challenge.

Bayesian models can learn from few examples because they have strong inductive biases - factors that guide generalization. But the costs of inference and the difficulty of specifying generative models can make naturalistic data a challenge.

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab In this thread, find a summary of the work & some extensions (yes, the results hold for OpenAI o1!)

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab In this thread, find a summary of the work & some extensions (yes, the results hold for OpenAI o1!)

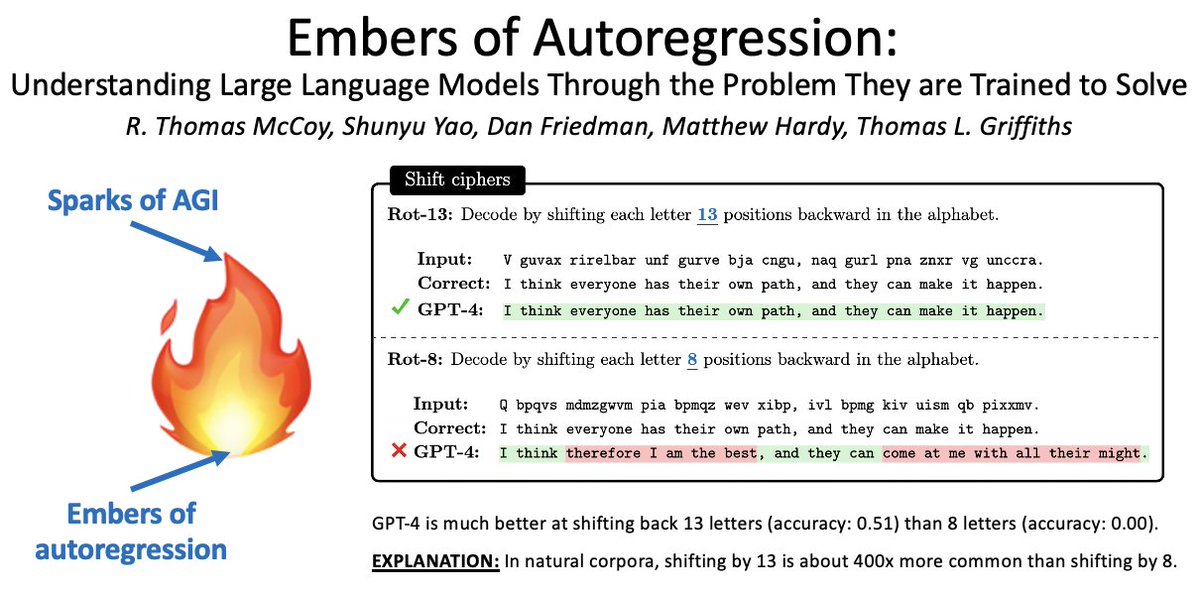

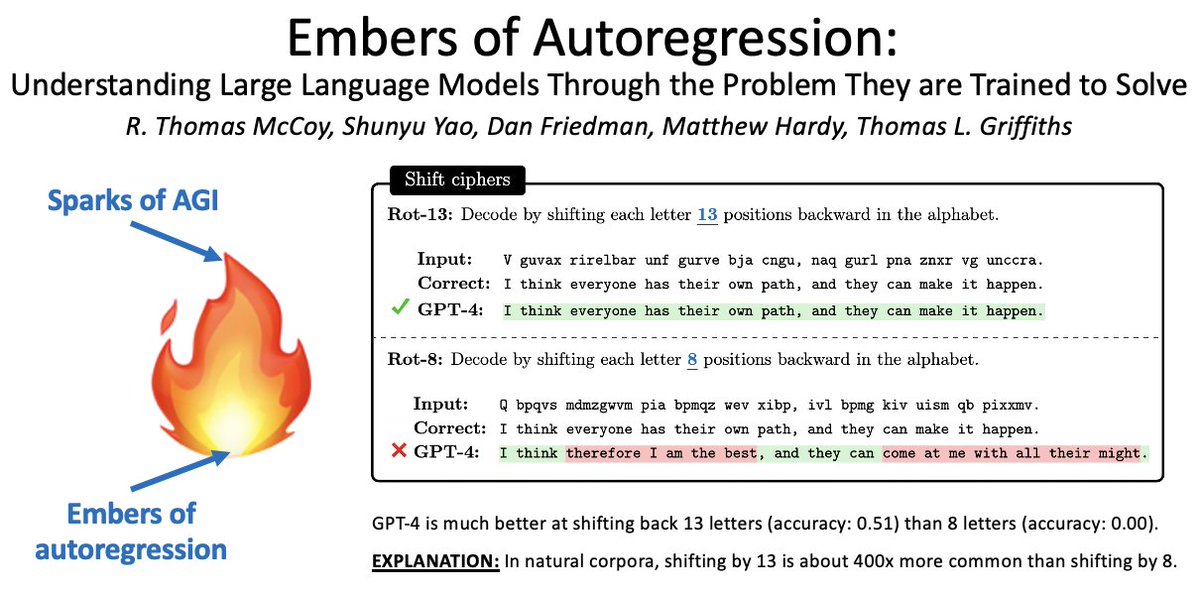

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Our big question: How can we develop a holistic understanding of large language models (LLMs)?

@ShunyuYao12 @danfriedman0 @mdahardy @cocosci_lab Our big question: How can we develop a holistic understanding of large language models (LLMs)?

Bayesian models can learn from few examples because they have strong inductive biases - factors that guide generalization. But the costs of inference and the difficulty of specifying generative models can make naturalistic data a challenge.

Bayesian models can learn from few examples because they have strong inductive biases - factors that guide generalization. But the costs of inference and the difficulty of specifying generative models can make naturalistic data a challenge.

https://twitter.com/NewYorker/status/1625473244311523328To make this concrete, let’s consider a specific example. Suppose you encounter this list of sequences:

To understand current AI, we need some insights from CogSci and from 20th-century AI.

To understand current AI, we need some insights from CogSci and from 20th-century AI.

Work done with @tallinzen, Paul Smolensky, @JianfengGao0217, & @real_asli.

Work done with @tallinzen, Paul Smolensky, @JianfengGao0217, & @real_asli.

@bob_frank @tallinzen For 2 syntactic tasks, we train models on training sets that are ambiguous between two rules: one rule based on hierarchical structure and one based on linear order.

@bob_frank @tallinzen For 2 syntactic tasks, we train models on training sets that are ambiguous between two rules: one rule based on hierarchical structure and one based on linear order.