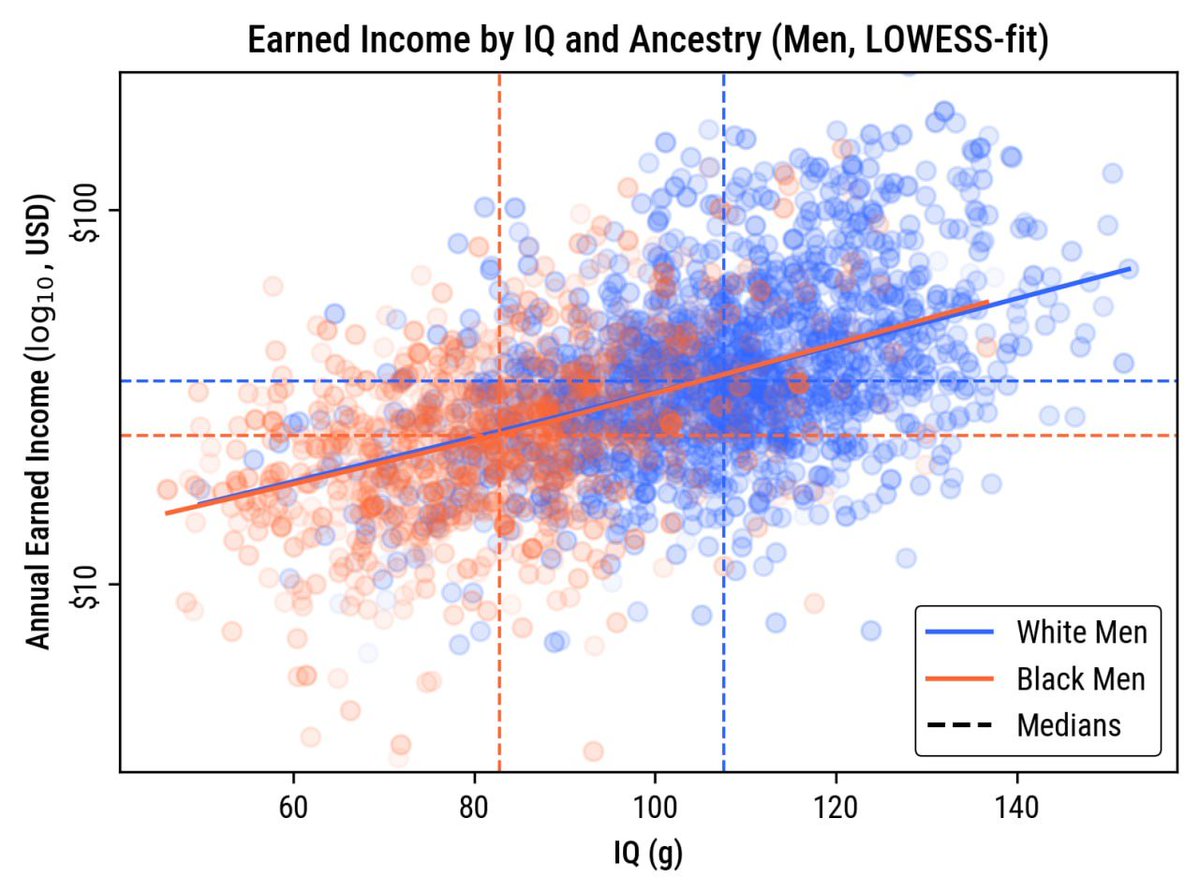

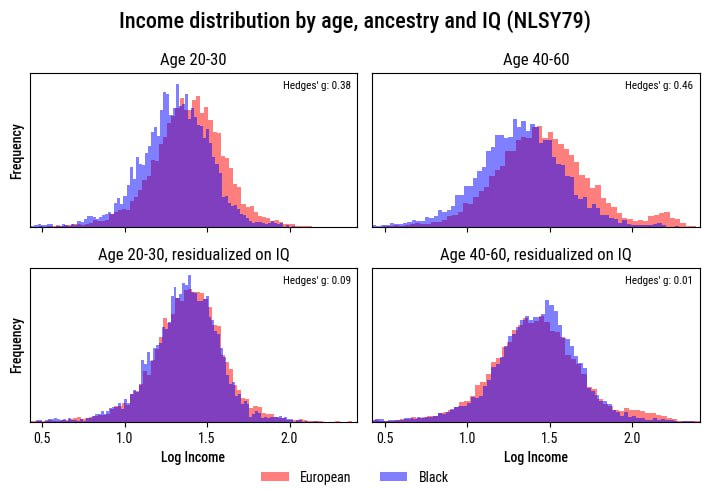

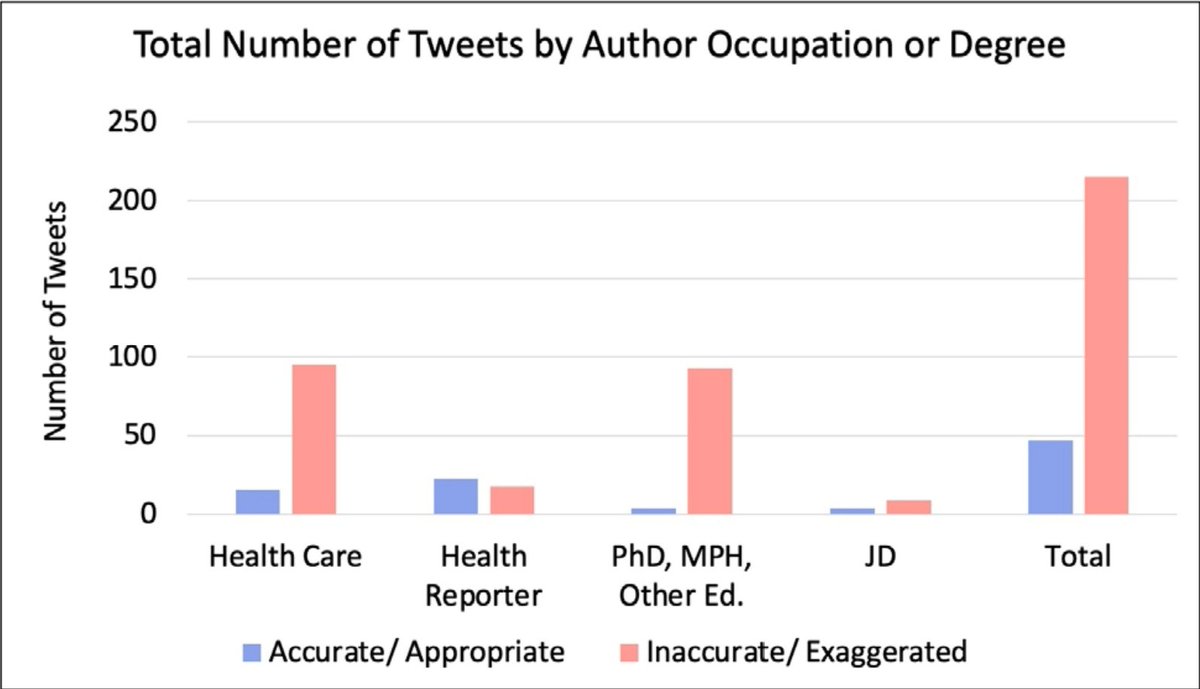

Among health "experts" who tweeted about monkeypox, there was a dramatic tendency to get basic facts wrong.

For example, many claimed risk wasn't especially heightened among gay men.

PhDs were among the worst misinformation spreaders.

Being an "expert", being "credentialed", having "studied" something, and so on, is not sufficient to make someone truly credible or to endow their words with reliability.

Being right is, and most popular "experts" were usually not right.

Source: doi.org/10.1101/2023.0…
