🚀 DeepSeek-R1-Lite-Preview is now live: unleashing supercharged reasoning power!

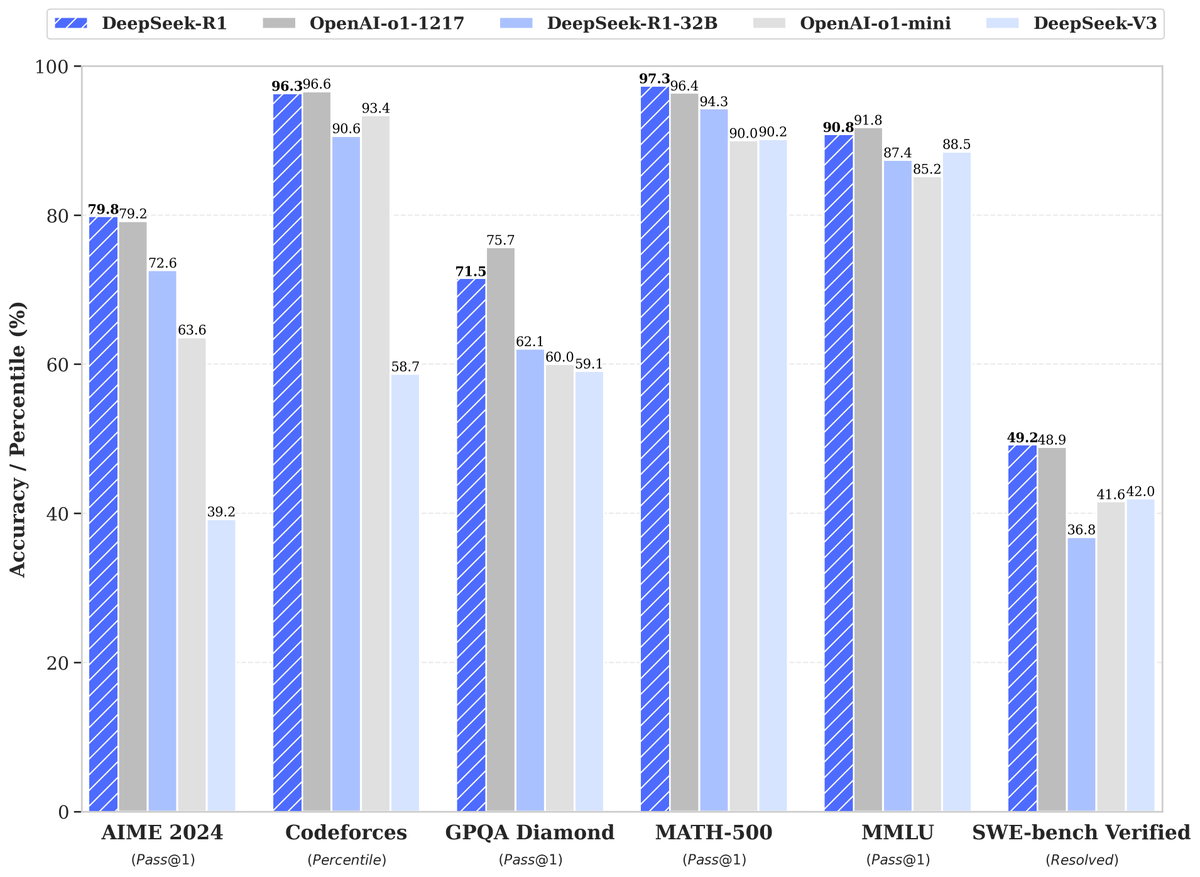

🔍 o1-preview-level performance on AIME & MATH benchmarks.

💡 Transparent thought process in real-time.

🛠️ Open-source models & API coming soon!

🌐 Try it now at chat.deepseek.com

#DeepSeek

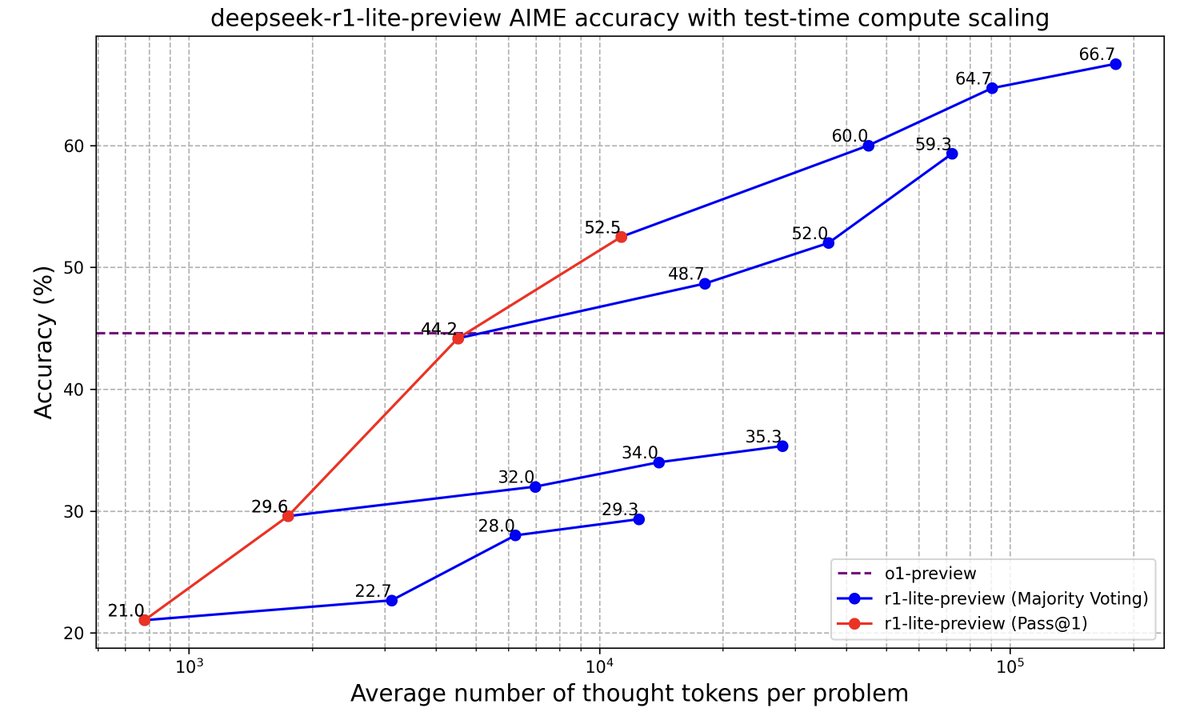

🌟 Inference Scaling Laws of DeepSeek-R1-Lite-Preview

Longer Reasoning, Better Performance. DeepSeek-R1-Lite-Preview shows steady score improvements on AIME as thought length increases.
