Unravel the mystery of AGI with curiosity. Answer the essential question with long-termism.

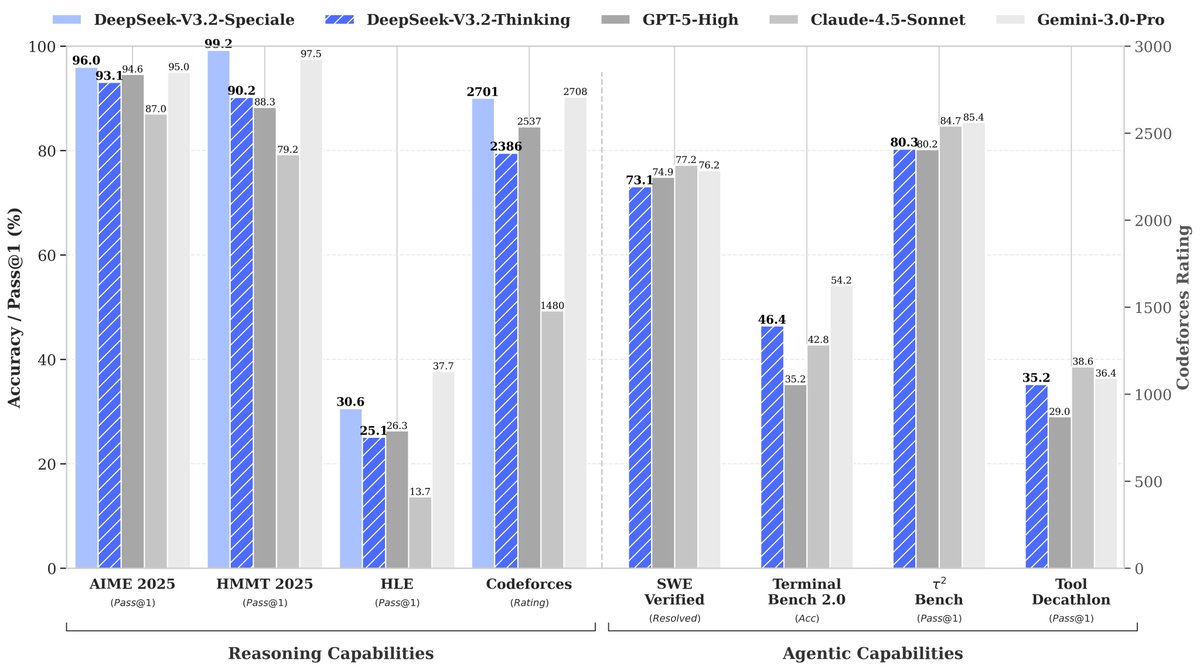

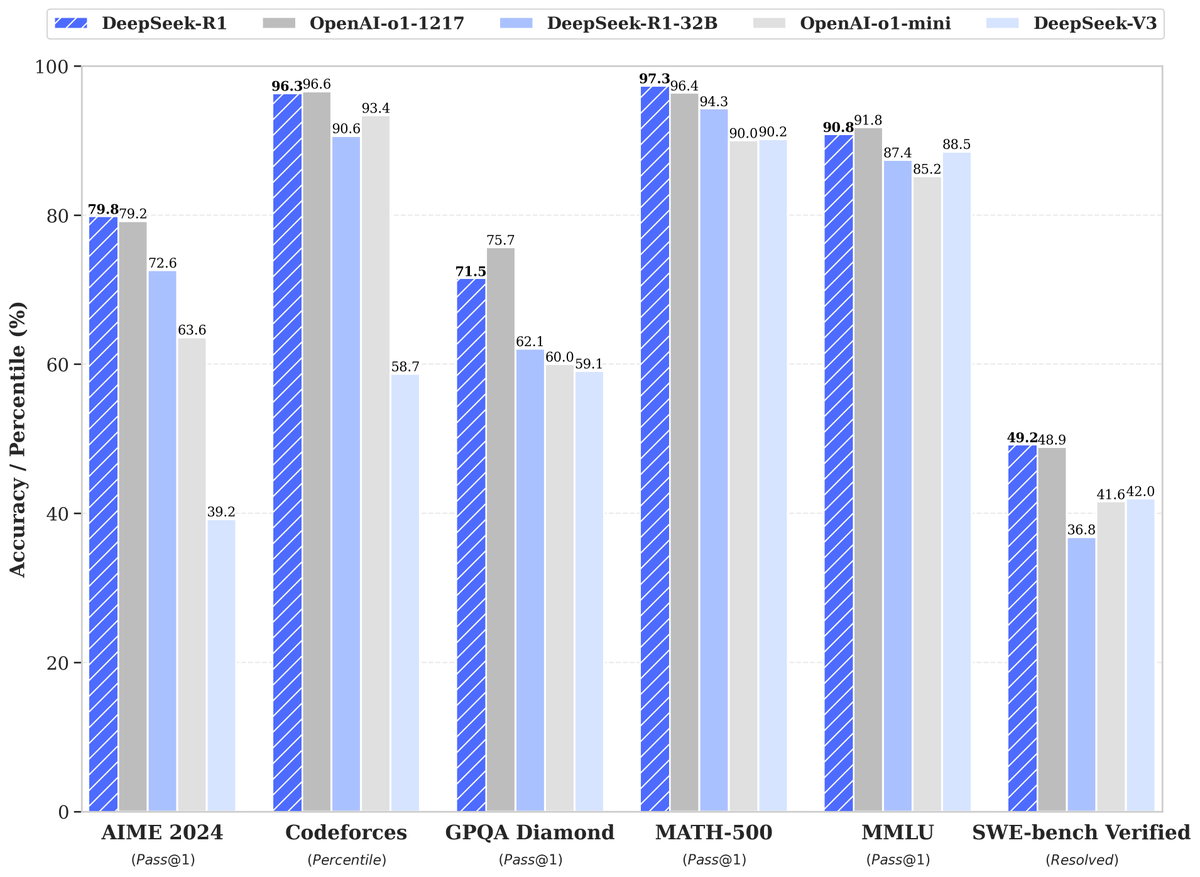

🏆 World-Leading Reasoning

🔥 Bonus: Open-Source Distilled Models!

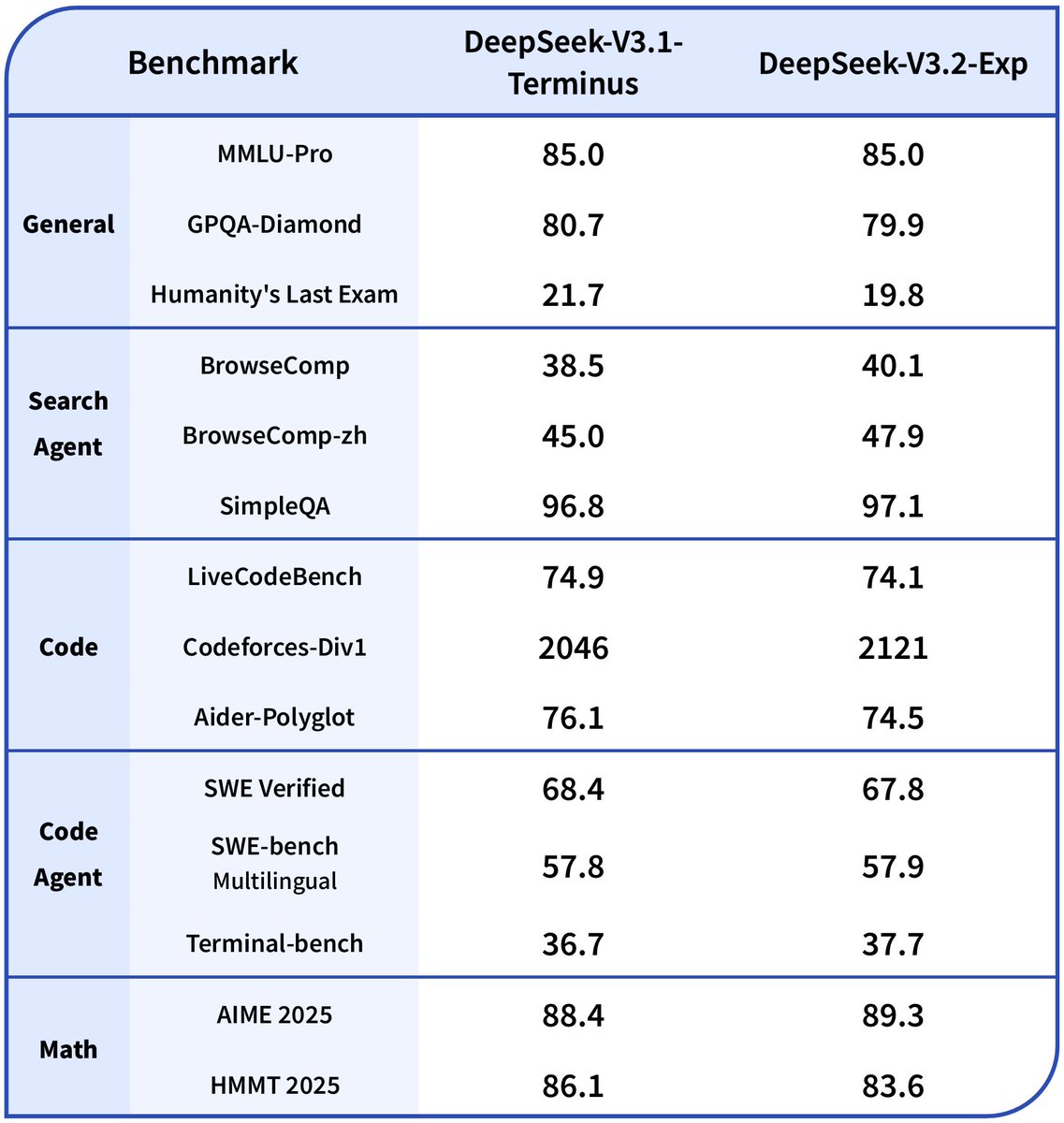

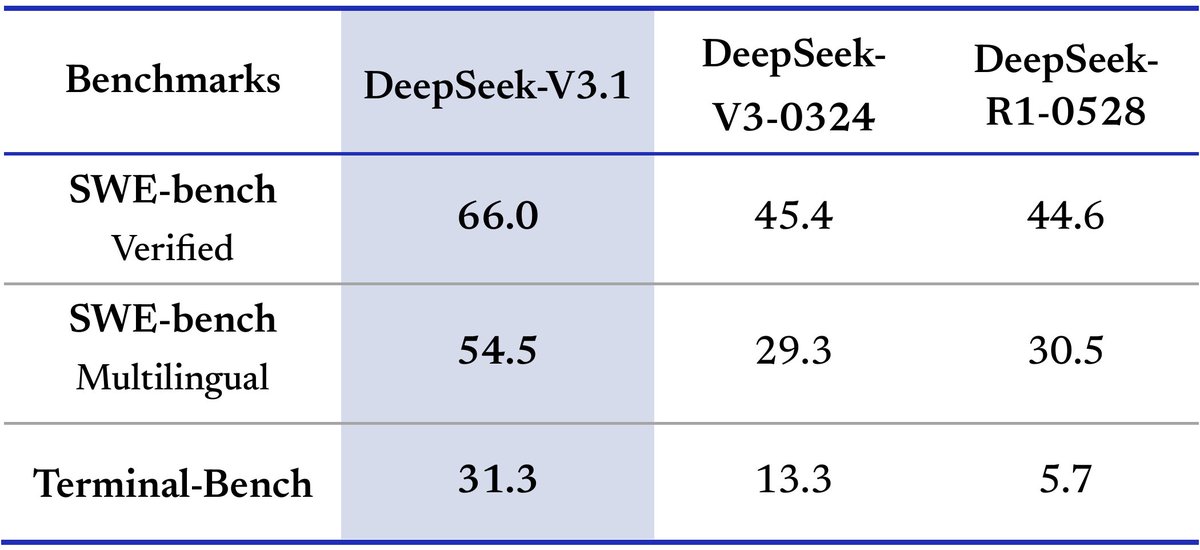

🎉 What’s new in V3?

DeepSeek-V2.5 outperforms both DeepSeek-V2-0628 and DeepSeek-Coder-V2-0724 on most benchmarks.