Shutting down your PC before 1995 was kind of brutal.

You saved your work, let the buffers flush, waited for the HDD lights to switch off, and

*yoink*

You flicked a mechanical switch that directly interrupted the flow of power.

The interesting part is when this all changed.

Two major developments had to occur.

First, a standardized physical connection linking the power supply to the motherboard. (Hardware constraint)

Second, a universal driver mechanism for requesting changes to the power state. (Software constraint)

These became known as the ATX and APM standards, respectively.

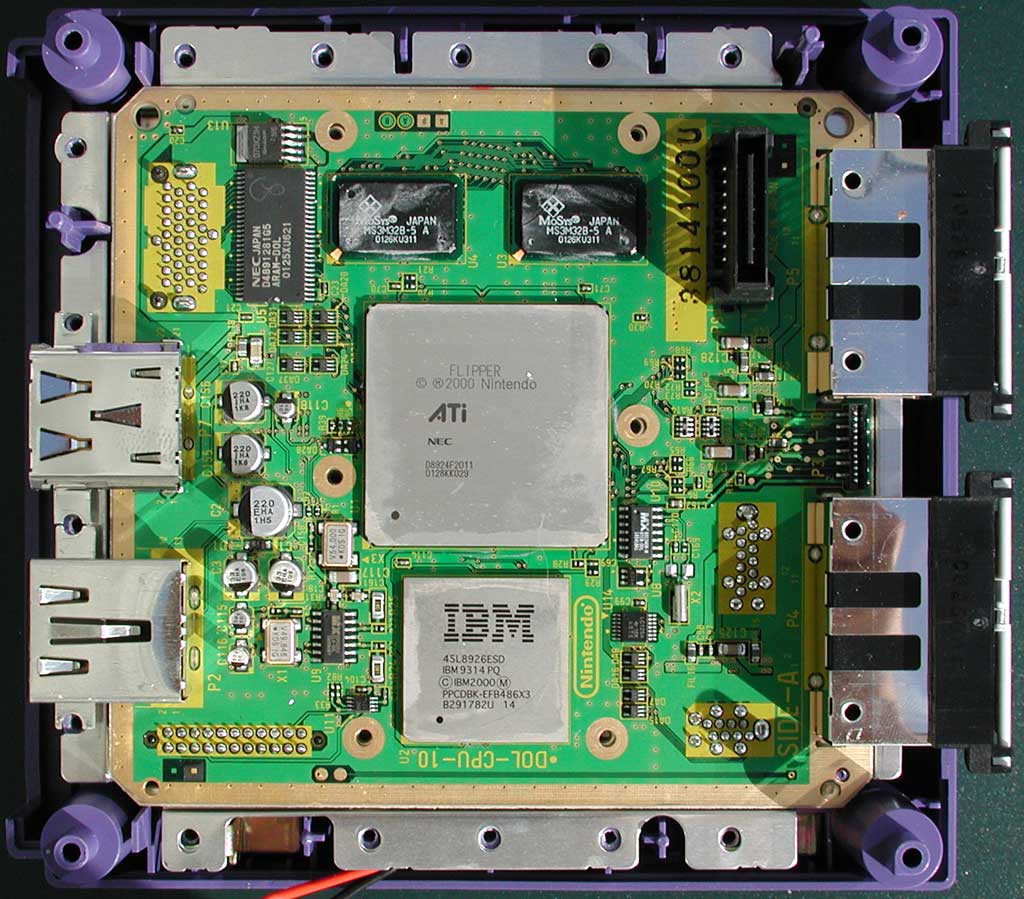

Although this would have been possible much earlier, fragmentation in the PC market between Microsoft, IBM, Intel, and others stalled progress.

By 1995, the industry had started to consolidate.

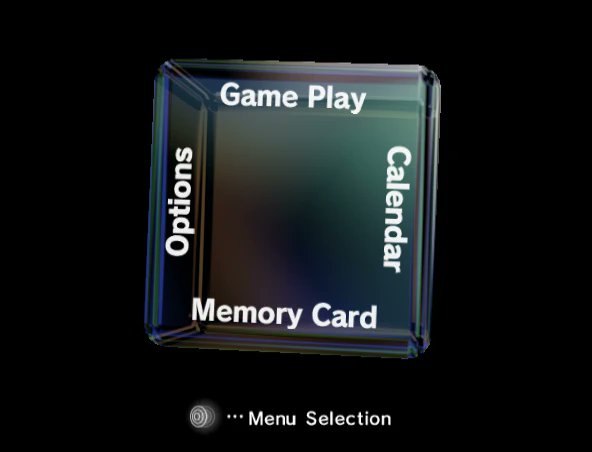

Eventually, OS control of the system's power state became widespread, and for good reason!

Caches, more complex filesystems, and multitasking all increased the risk of data corruption during an "unclean" shutdown.

APM was later replaced by ACPI, but it's an interesting tidbit of computer history nonetheless.

If you'd like to read more about the history of the APM vs. ACPI debate, check out this write-up by mjg59:

Why ACPI?

mjg59.dreamwidth.org/68350.html