researcher @google; serial complexity unpacker;

https://t.co/Vl1seeNgYK

ex @ msft & aerospace

15 subscribers

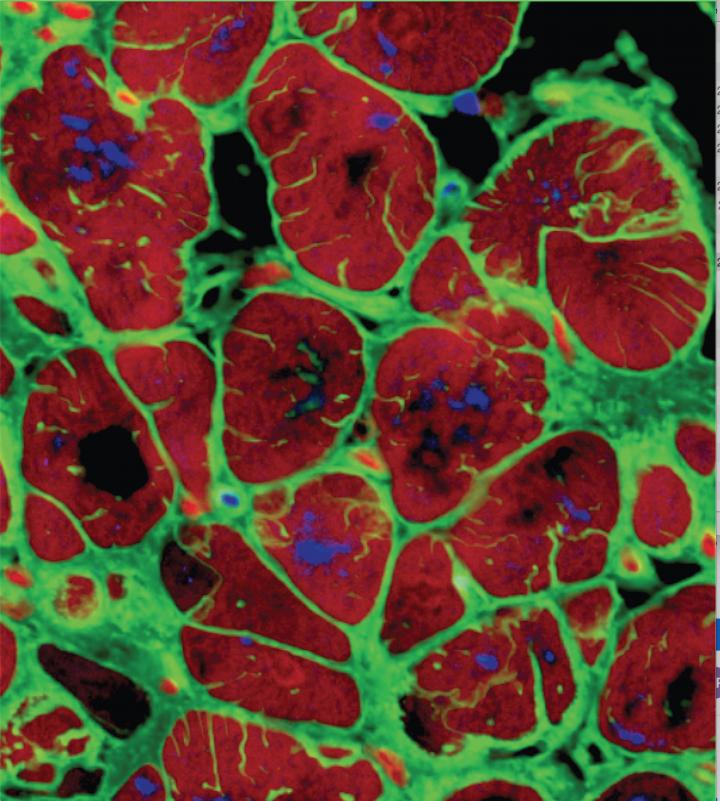

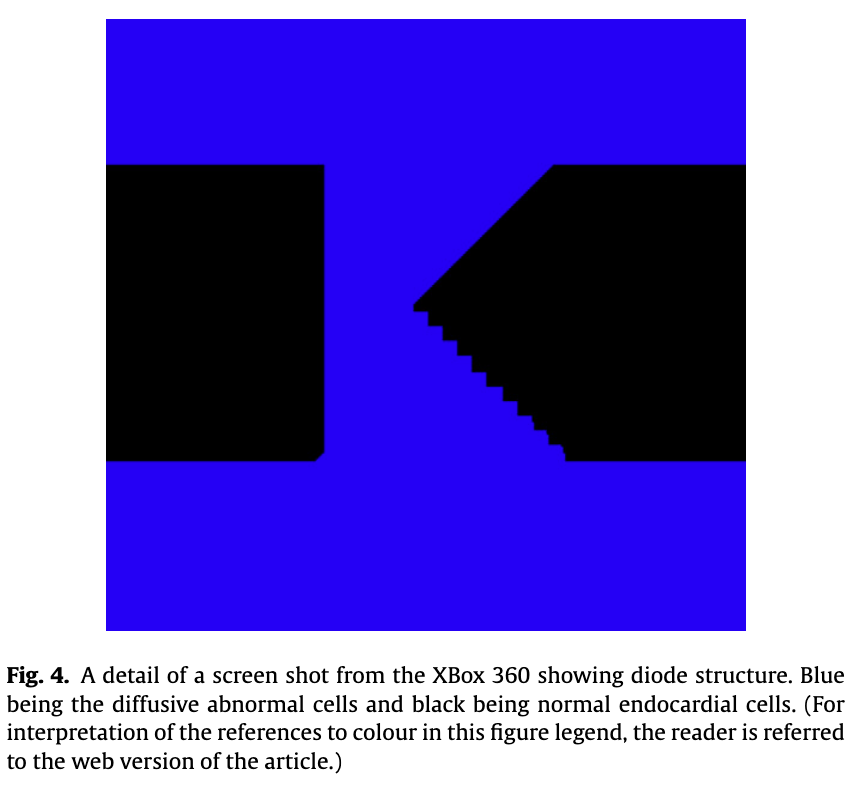

The author figured out you can build a NOR gate from heart cells.
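
Why a NOR gate in particular matters: NOR is functionally complete, so NOT, OR and AND (and from them any Boolean circuit) can be wired from NOR gates alone. A minimal Python sketch, with plain booleans standing in for whatever the physical substrate is; nothing here models the cells themselves, and the helper names are illustrative.

```python
# Sketch: NOR is functionally complete, so other gates can be
# composed from it. Booleans stand in for the physical signal.

def nor(a: bool, b: bool) -> bool:
    """The single primitive: true only when both inputs are false."""
    return not (a or b)

def not_(a: bool) -> bool:
    # Tie both inputs together: NOR(a, a) == NOT a
    return nor(a, a)

def or_(a: bool, b: bool) -> bool:
    # Invert the NOR output: NOT(NOR(a, b)) == a OR b
    return not_(nor(a, b))

def and_(a: bool, b: bool) -> bool:
    # De Morgan: a AND b == NOT(NOT a OR NOT b) == NOR(NOT a, NOT b)
    return nor(not_(a), not_(b))

# Print the truth table for every input combination
for a in (False, True):
    for b in (False, True):
        print(int(a), int(b),
              "NOR", int(nor(a, b)),
              "OR", int(or_(a, b)),
              "AND", int(and_(a, b)))
```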

Much of it comes down to company taste.

*When* exactly the problem occurred is hard to pinpoint.

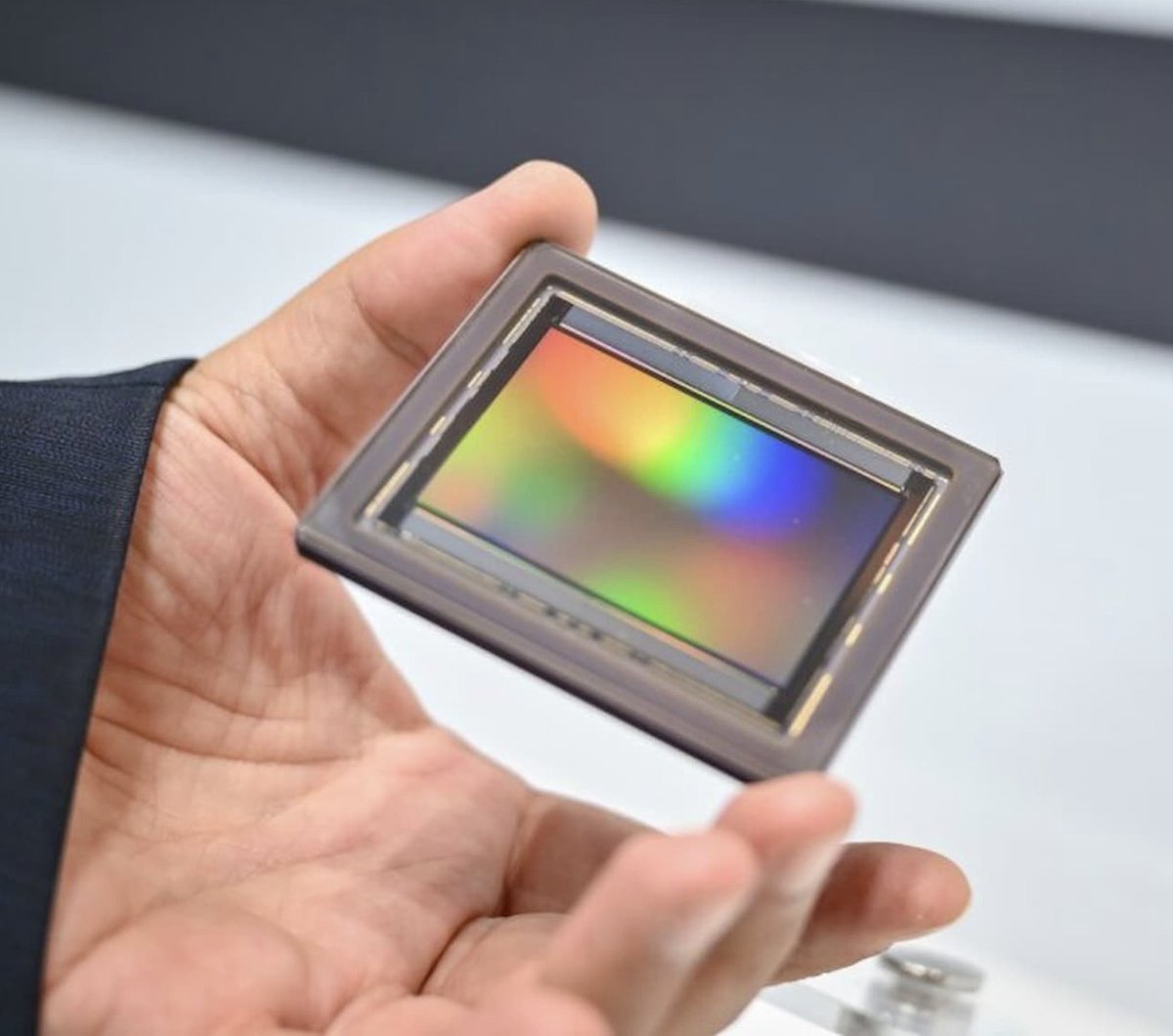

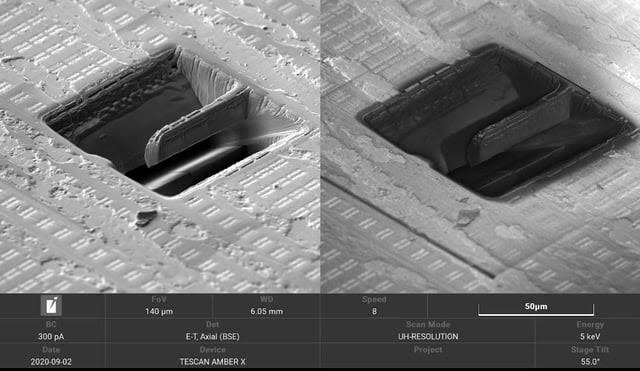

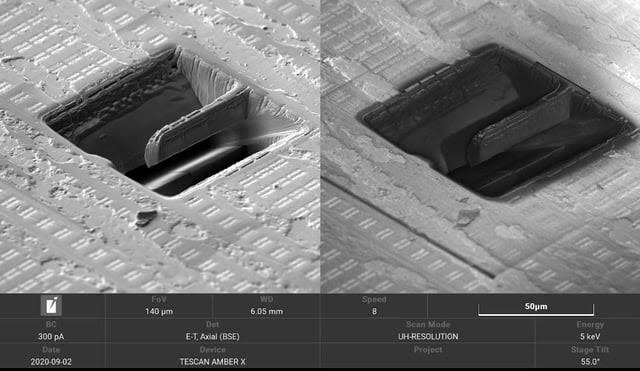

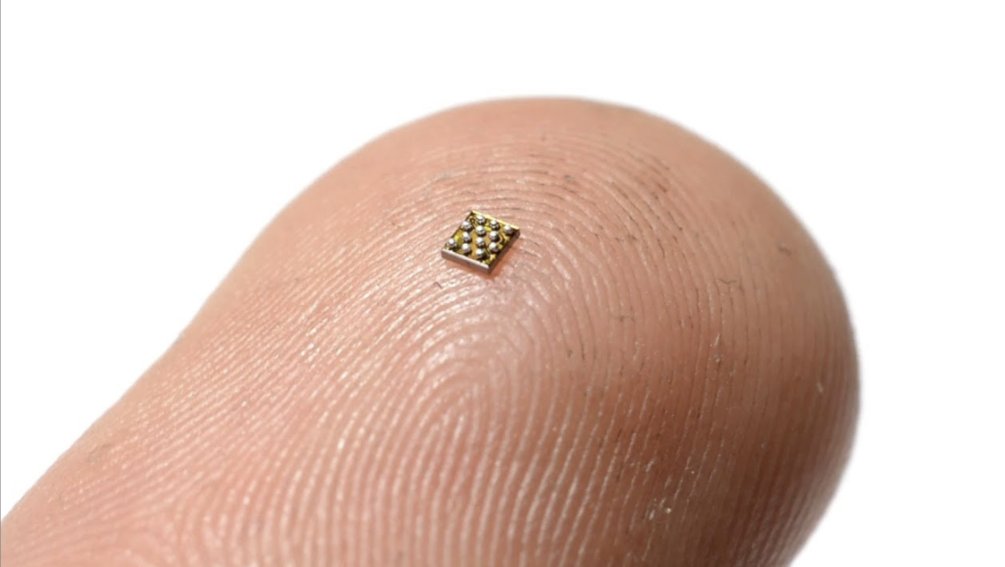

Naked silicon (specifically, WLCSP) isn’t “bad” per se; it’s heavily used in mobile phones.

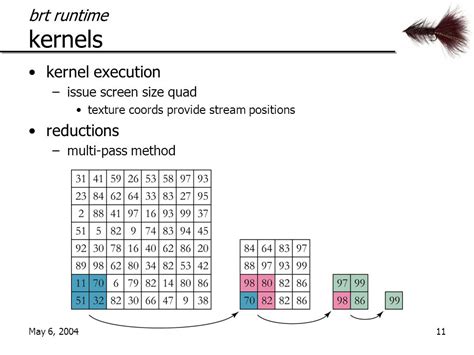

Just-In-Time compilation *sucked*.

https://twitter.com/lauriewired/status/2007178831153770599

Transparent Page Placement (TPP) is the modern Linux equivalent of a very old idea.

The F-14 used variable-sweep wings.

A single Emacs session was still open, with a root shell.

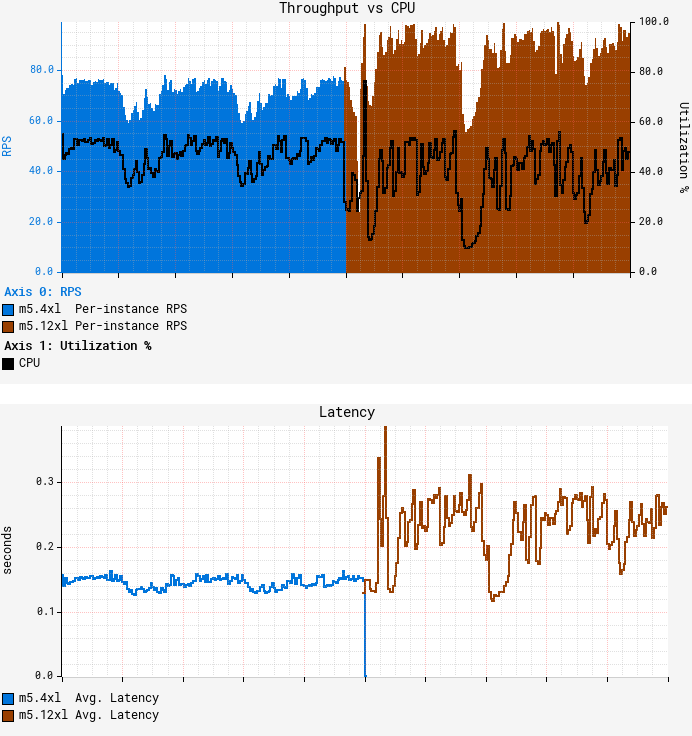

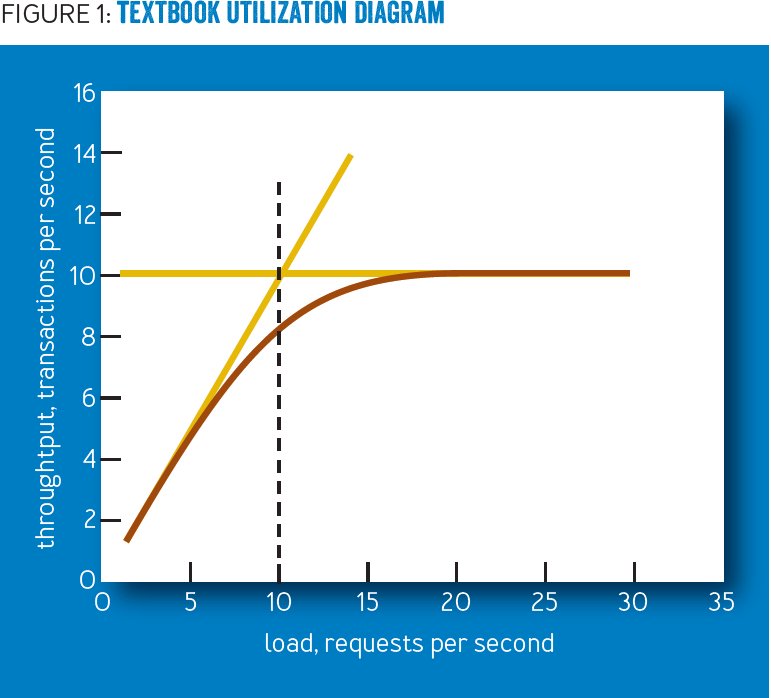

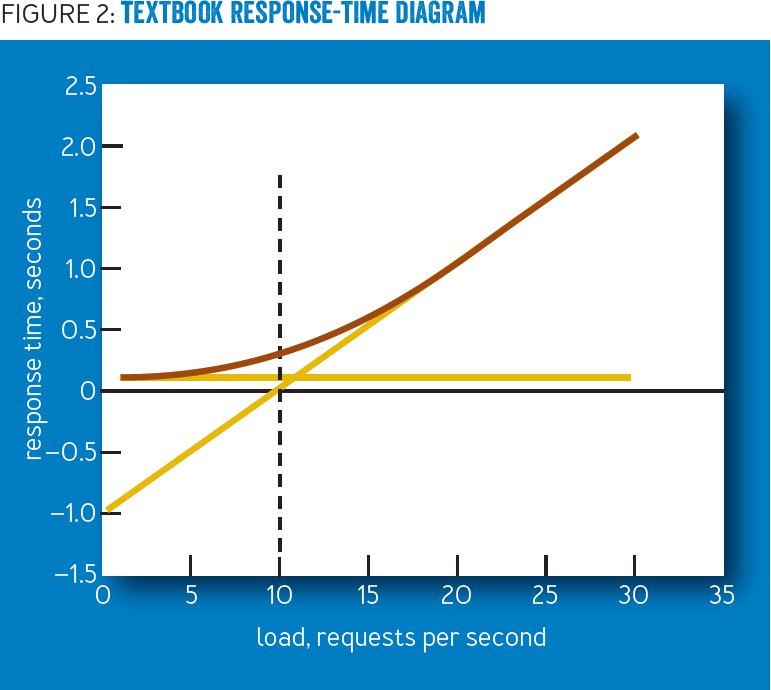

It all comes down to queueing theory.
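
The thread's specifics aren't in this excerpt, so as one classic illustration (an assumption, not necessarily the model the thread uses): in an M/M/1 queue with arrival rate λ and service rate μ, the mean time a request spends in the system is W = 1/(μ − λ), which blows up as utilization ρ = λ/μ approaches 1. A minimal Python sketch; the service rate below is a hypothetical number.

```python
# Sketch of the M/M/1 result W = 1 / (mu - lambda): mean time in system
# (queueing + service) for Poisson arrivals and exponential service times.

def mm1_time_in_system(arrival_rate: float, service_rate: float) -> float:
    """Mean time a request spends in an M/M/1 system, in the rates' time unit."""
    if arrival_rate >= service_rate:
        # With lambda >= mu the queue grows without bound: no steady state
        raise ValueError("unstable queue: arrival rate must be below service rate")
    return 1.0 / (service_rate - arrival_rate)

SERVICE_RATE = 100.0  # requests/second the server can handle (hypothetical)

# Latency is not linear in load: halving the remaining headroom doubles W
for utilization in (0.5, 0.8, 0.9, 0.99):
    w = mm1_time_in_system(utilization * SERVICE_RATE, SERVICE_RATE)
    print(f"rho={utilization:.2f}  mean time in system={w * 1000:.1f} ms")
```

Going from 50% to 90% utilization multiplies mean latency by five in this model, which is why "just add more load" stops working long before a server looks fully busy.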

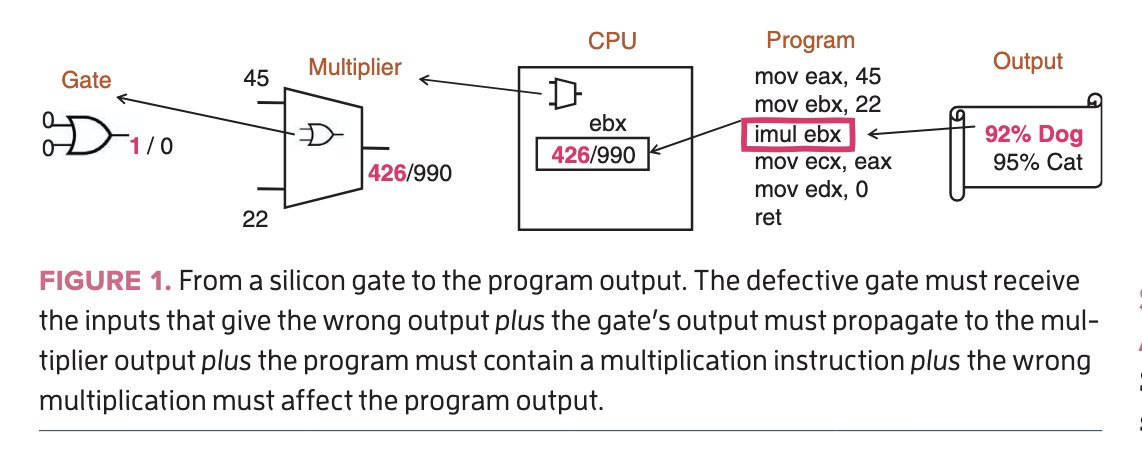

As you hit the more theoretical sides of Computer Science, you start to realize almost *anything* can produce useful compute.

Admittedly, professors are in a tough spot.

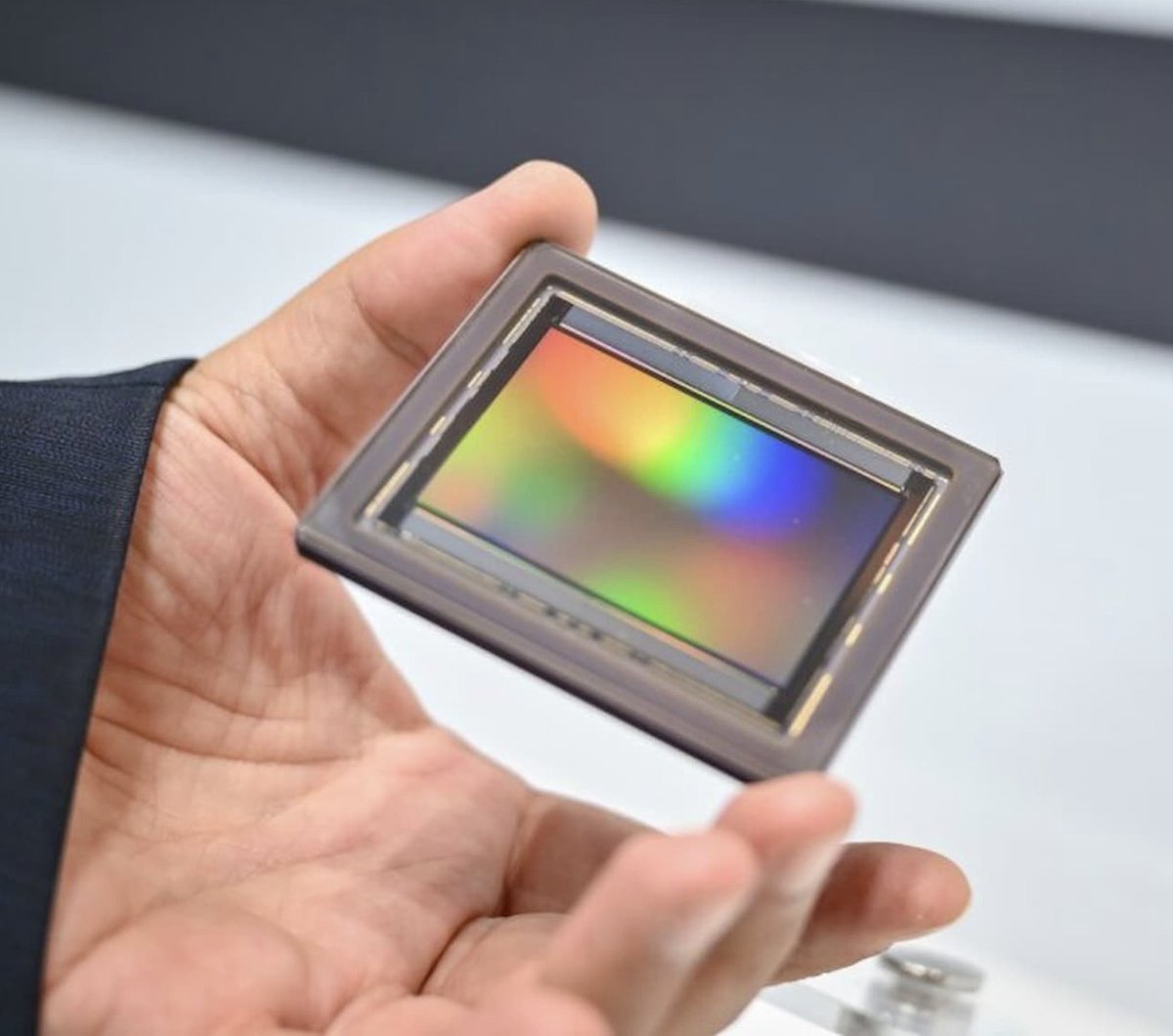

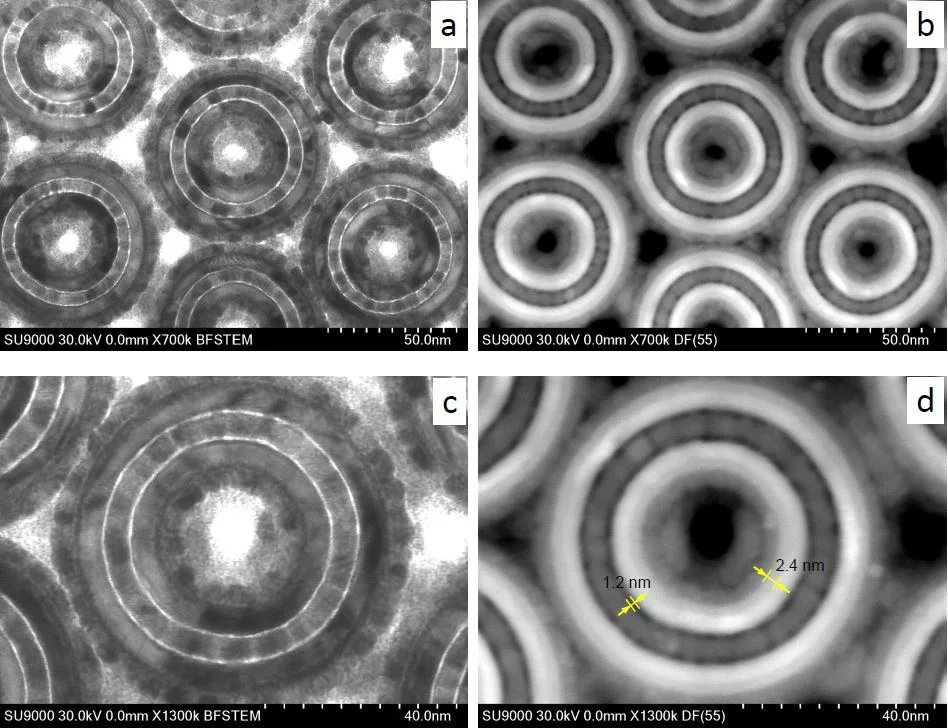

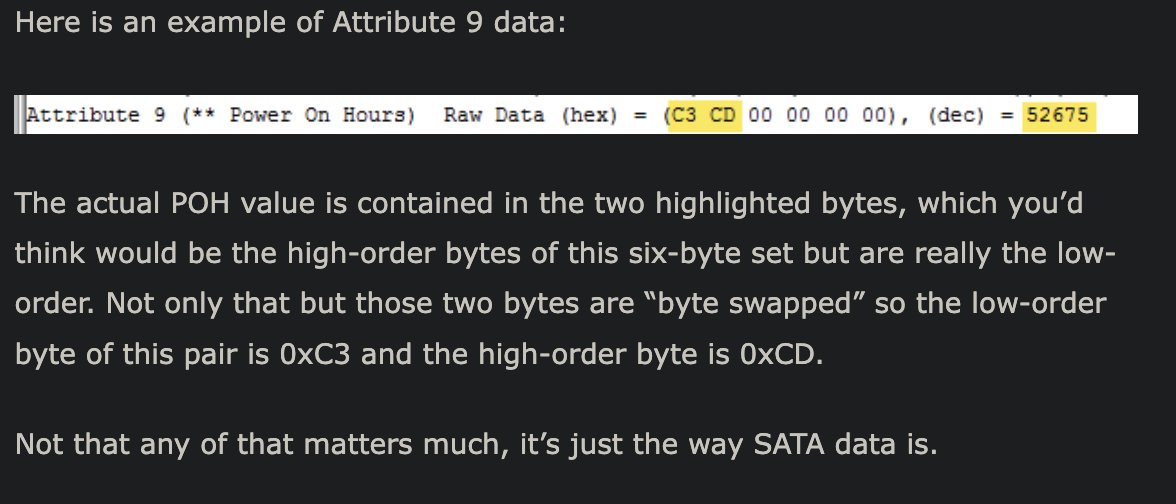

More than ever, manufacturers have been pushing memory to the absolute limits.

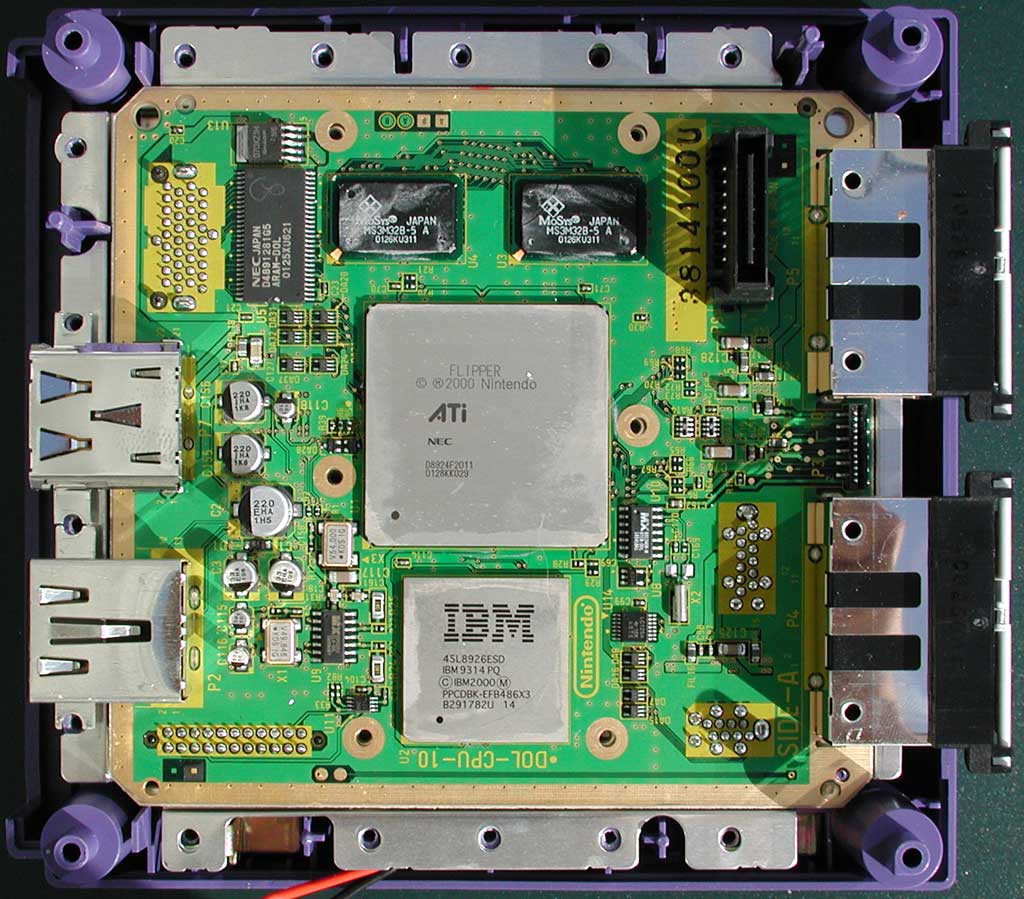

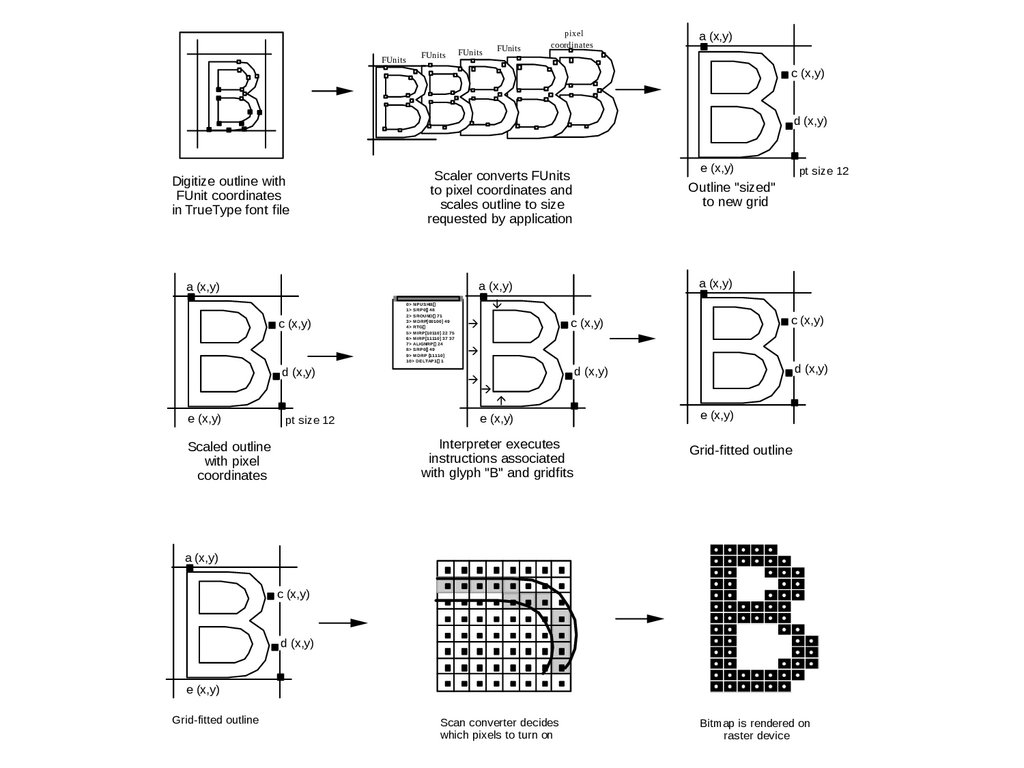

Anytime you can run code (albeit very limited code), someone will take advantage of it.

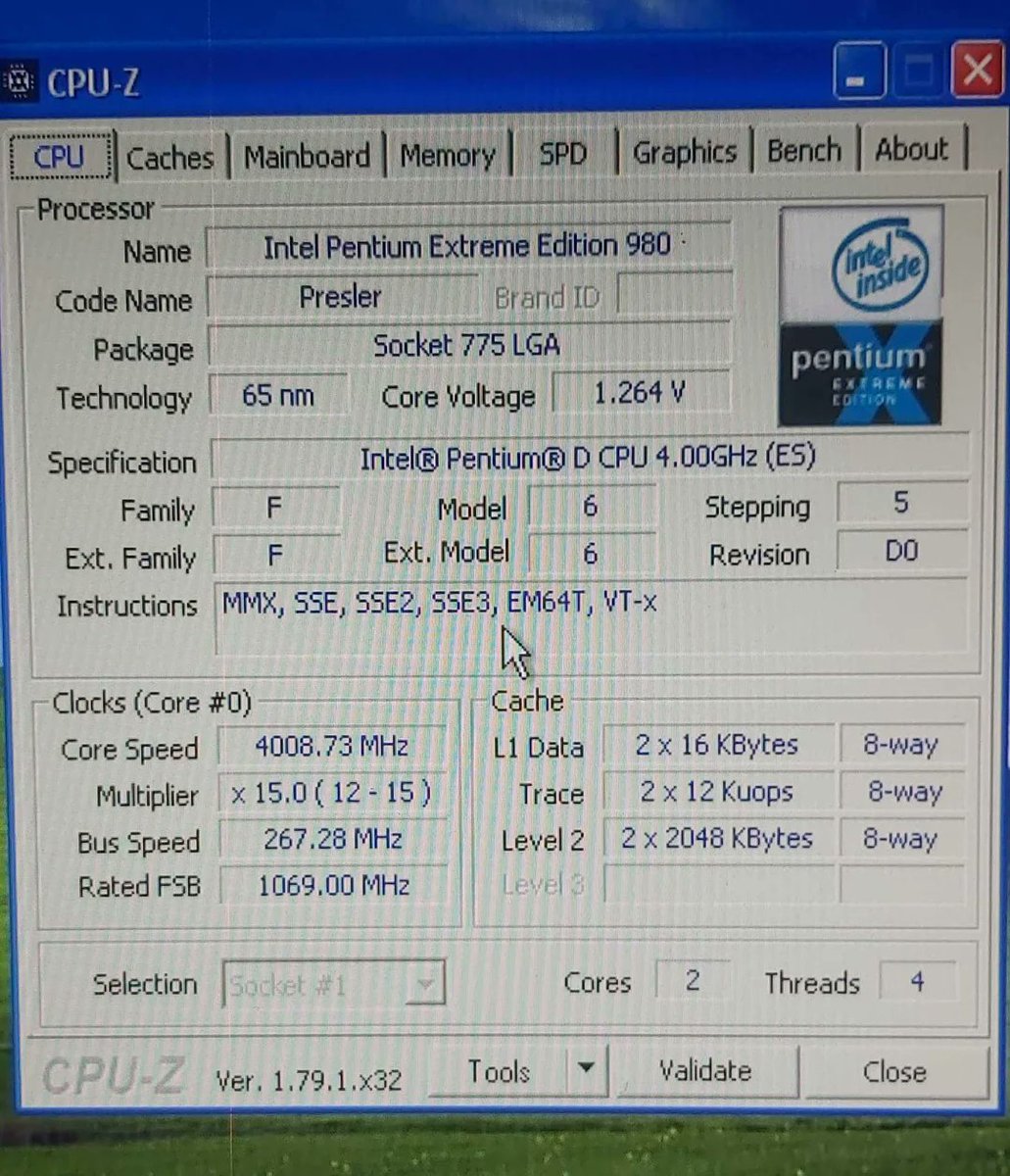

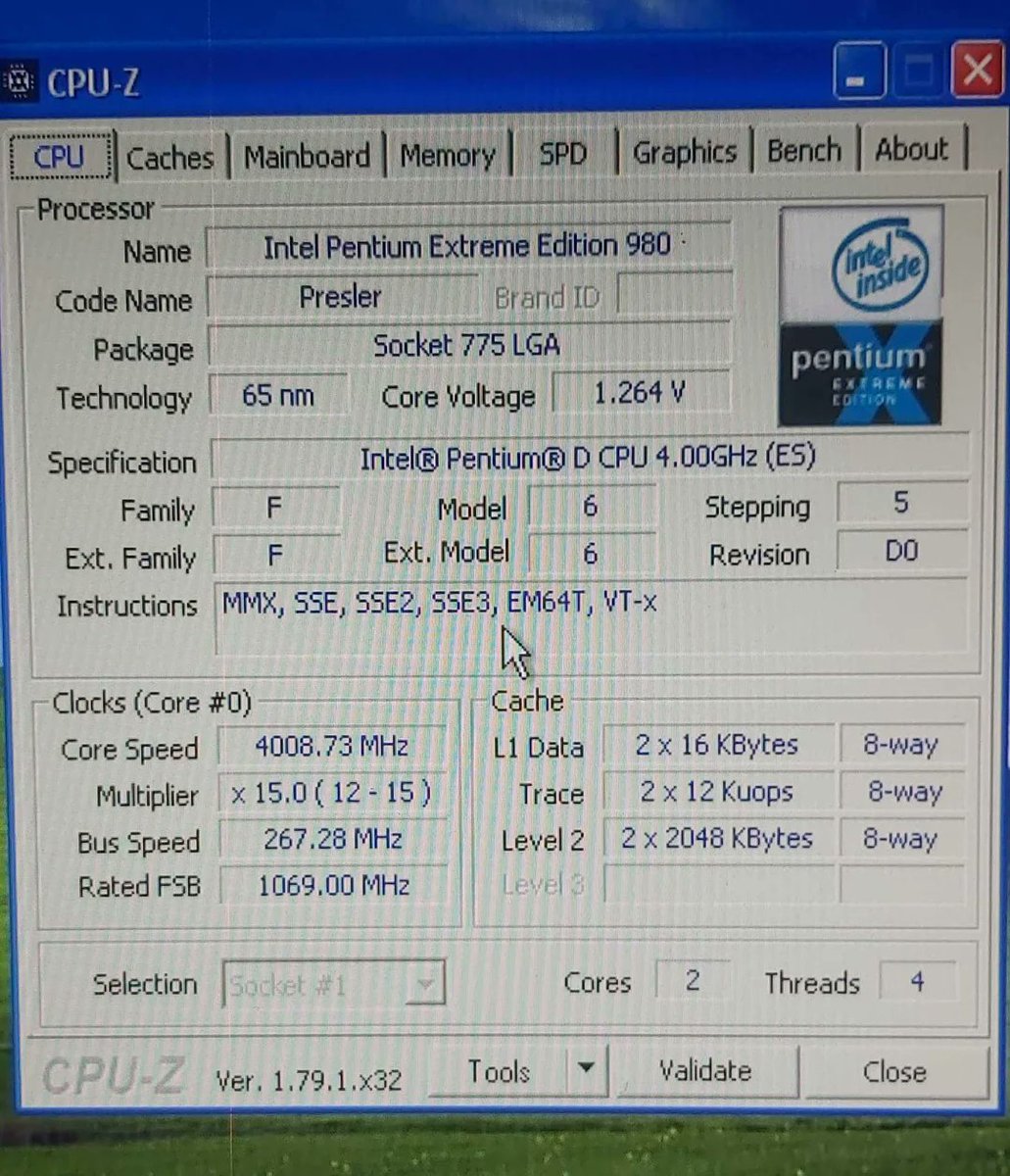

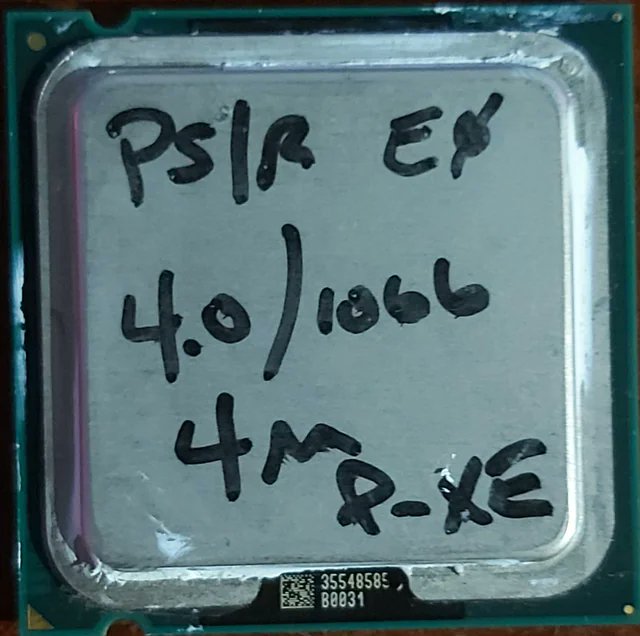

A few days ago, someone found an Intel Pentium Extreme 980.

Bruce “Tog” Tognazzini, head of UI testing at Apple, claimed their research showed:

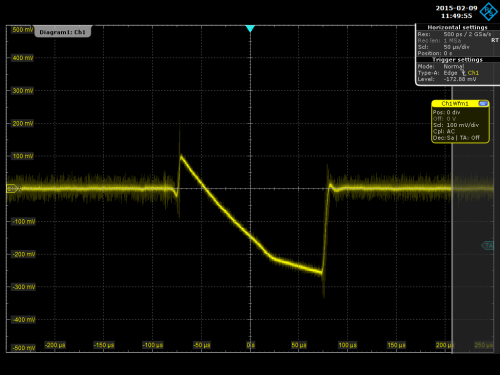

First things first, keep it cold. Crazy cold.

Mathematically, there is a solution. It’s just really, really slow.

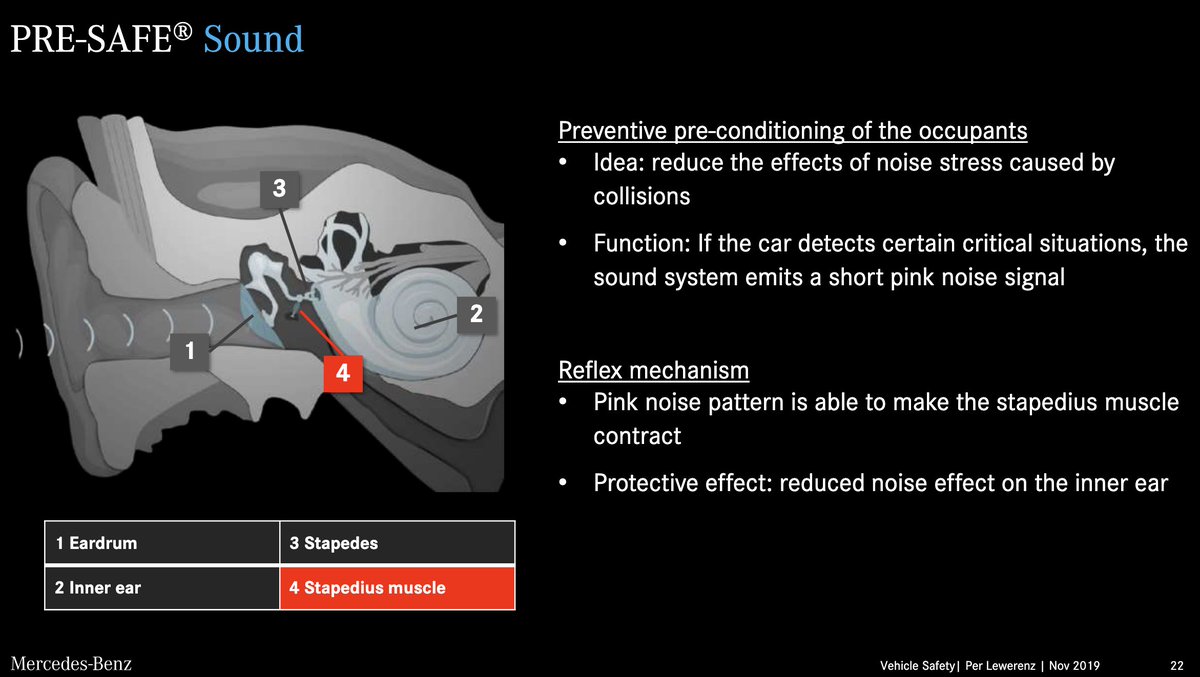

If you’re cool, you can activate the stapedius reflex voluntarily. (ear rumblers unite!)

If you’re a programmer, you might already guess what happened.

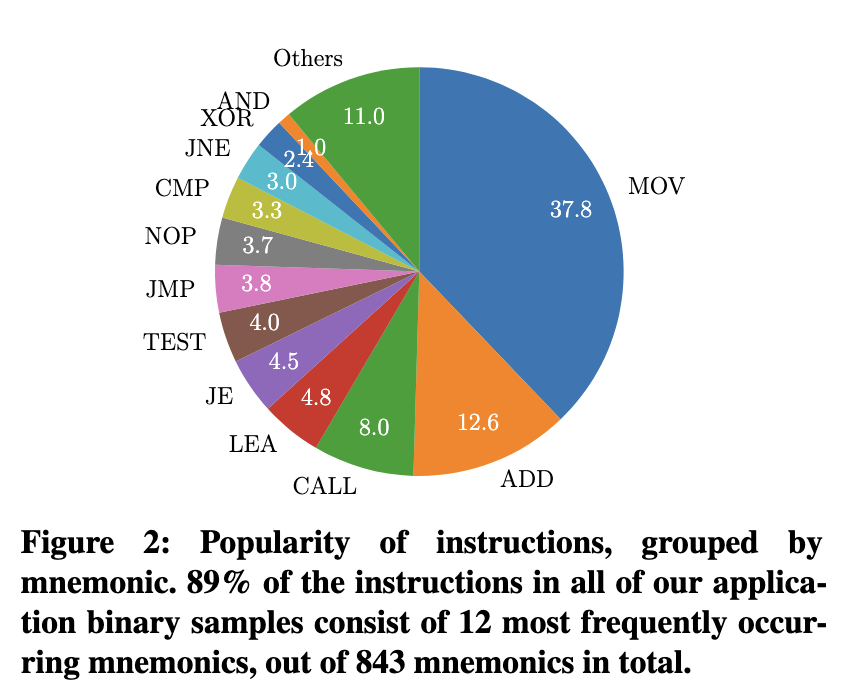

x86 suffers from what you would call “long tail syndrome”.

There is *one* glimpse of this that I know of in the wild.
