I've spent the past ~6 weeks going through the entire history of robotics and understanding all the core research breakthroughs.

It has completely changed my beliefs about the future of humanoid robotics.

My process + biggest takeaways in thread (full resource at the end) 👇

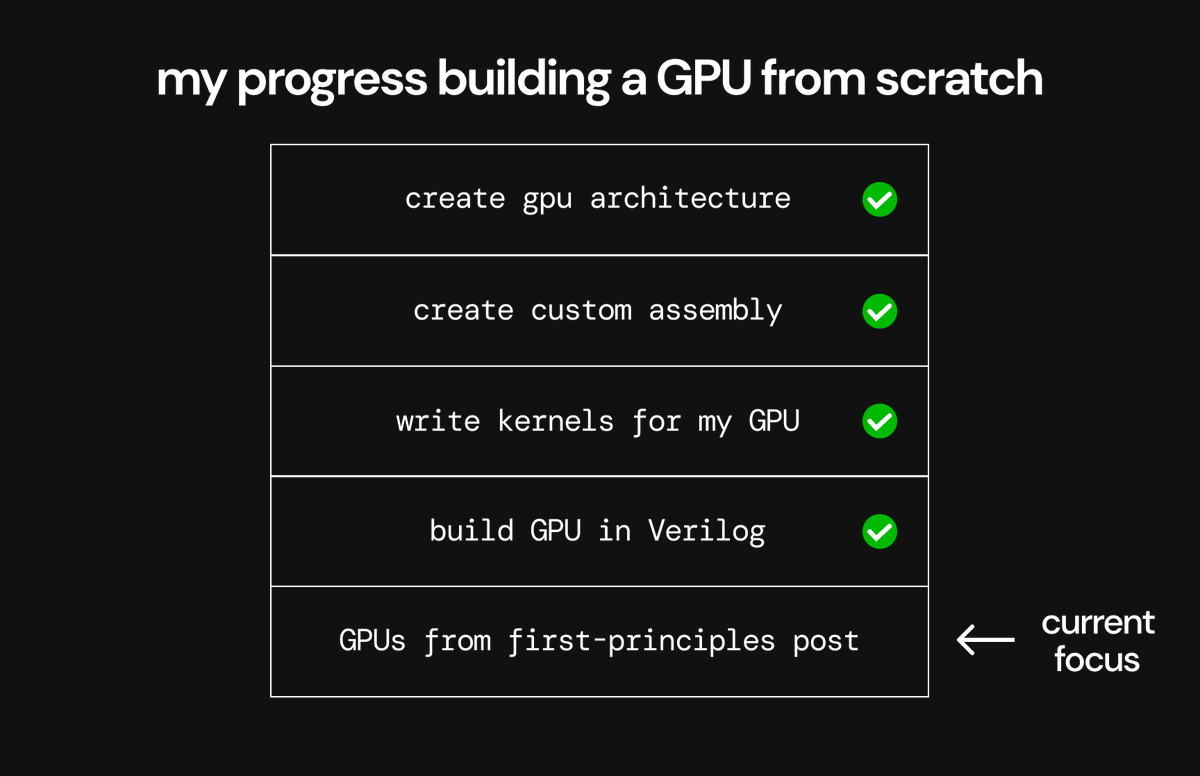

Step #1 ✅: Learning the fundamentals of robotics from papers

Over the past 2 years, hundreds of millions of dollars have been deployed into the robotics industry to fund the humanoid arms race.

From Twitter hype, public sentiment, and recent demos, it seemed to me that fully autonomous general-purpose robotics was right around the corner (~2-3 years away).

With this in mind, I decided to learn the fundamentals of robotics directly from the primary source: the series of research papers that have gotten us to the current wave of humanoid robotics.

As I got further into the research, I realized that it pointed to a very different future, with potentially much longer timelines, than current narratives suggest (discussed in detail in the full resource at the end).

I initially focused on learning about the following topics, the trail of breakthroughs that led us to where we are today:

(the repository at the end of the thread covers all of these + more in greater detail)

Classical Robotics

> SLAM - Simultaneous localization and mapping systems allow robots to convert various sensor data into a 3D map of the environment, and allow the robot to understand its place within it.

> Hierarchical Task Planning - Early robotic planning systems used hierarchical/symbolic models to organize the series of tasks to execute.

> Path Planning - Sampling-based path-finding algorithms used to find feasible (not necessarily optimal) collision-free paths in high-dimensional environments.

> Forward Kinematics/Dynamics - Using physics models to predict where the robot will move given specific joint positions (kinematics) or actuator torques (dynamics).

> Inverse Kinematics/Dynamics - Using physics models to work out the joint positions (kinematics) or actuator torques (dynamics) needed to reach a target position or motion.

> Contact Modeling - Modeling the friction forces and torques at contact points to understand when an object is fully constrained by a grasp (e.g., form closure) and how robotic movement will manipulate objects.

> Zero-Moment Point (ZMP) - The point on the ground where the contact forces under a robot's feet produce no net tipping moment, used in calculations to enable robot locomotion and balance.
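To make the forward/inverse distinction concrete, here is a minimal sketch for a hypothetical two-link planar arm (the link lengths `l1` and `l2` are assumptions, defaulting to 1). It covers kinematics only; dynamics would add masses, inertias, and torques. Forward kinematics maps joint angles to the hand position in closed form, while inverse kinematics has to solve backwards and can fail when the target is out of reach.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Joint angles (radians) -> end-effector position (x, y) of a 2-link planar arm."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Target position (x, y) -> one pair of joint angles that reaches it."""
    # Law of cosines: the elbow angle is fixed by the distance to the target.
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_t2 <= 1.0:
        raise ValueError("target is outside the arm's reachable workspace")
    theta2 = math.acos(cos_t2)
    # Shoulder angle: direction to the target, minus the offset caused by the bent elbow.
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2), l1 + l2 * math.cos(theta2))
    return theta1, theta2


# Round trip: solve for joint angles, then check where they actually put the hand.
t1, t2 = inverse_kinematics(1.2, 0.5)
print(forward_kinematics(t1, t2))  # approximately (1.2, 0.5)
```

Most reachable targets for this arm have two joint solutions (elbow bent one way or the other); the sketch returns just one. Arms with more joints often rely on numerical IK solvers rather than a closed form.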

Deep Reinforcement Learning

> Deep Q-Networks - Among the earliest successful deep RL algorithms, which rose to popularity when DQN learned to play Atari games directly from pixels.

> A3C/GAE - Asynchronous advantage actor-critic (A3C) and generalized advantage estimation (GAE) help RL systems learn from long-horizon, delayed rewards, which is critical for most robotic tasks.

> TRPO/PPO - Introduced effective methods for limiting the size of each policy update (a trust-region constraint in TRPO, a clipped objective in PPO), which keeps RL policy training stable.

> DDPG/SAC - Introduced more sample-efficient off-policy RL algorithms that can reuse past data many times (via a replay buffer), valuable for training real-world robotic control policies where data collection is expensive.

> Curiosity - Using curiosity (an intrinsic reward for reaching states the agent cannot yet predict well) as a reward signal to train RL systems in complex environments, which has proven valuable for recent robotic foundation models.
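As a rough illustration of how GAE assigns credit for delayed rewards, the sketch below computes advantages for a single finished episode. It assumes a critic has already produced value estimates `values` (one per state, plus a final entry that is 0 for a terminal state); the `gamma` and `lam` defaults are common choices, not values from this thread.

```python
def compute_gae(rewards, values, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation for one finished episode.

    rewards: r_0 .. r_{T-1}
    values:  V(s_0) .. V(s_T), one more entry than rewards (V(s_T) = 0 if terminal)
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    # Sweep backwards so each step can include the discounted errors that follow it.
    for t in reversed(range(len(rewards))):
        # One-step TD error: how much better this step went than the critic expected.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Exponentially weighted sum of future TD errors; lam trades bias for variance.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


print(compute_gae([0, 0, 1], [0, 0, 0, 0], gamma=1.0, lam=1.0))  # [1.0, 1.0, 1.0]
```

With a reward only at the last step, `lam=1` spreads credit back to the earlier actions, while `lam=0` would give an advantage only to the final step.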

Simulation & Imitation Learning

> MuJoCo - Simulation software built specifically for the needs of robotics that introduced more accurate joint and contact modeling. Enabled a series of research breakthroughs in training robots in simulation.

> Domain Randomization - Randomizing objects, textures, lighting, and other environmental conditions in simulation to get robots to generalize to the complexity of real-world environments.

> Dynamics Randomization - Randomizing the laws of physics in simulation to teach robots to treat the real world as just another random physics engine to generalize to, bridging the simulation-to-reality gap.

> Simulation Optimization - Optimizing the specific domain/dynamics randomization levels to enable robot policies to train quickly in simulation.

> Behavior Cloning - Learning control policies by cloning the behavior of a human demonstrator (supervised learning on recorded state-action pairs). This approach is the foundation of today's tele-operation-based robot training.

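> Dataset Aggregation (DAgger) - Using a data-collection loop in which the learned policy acts, an expert labels the states it visits with the correct actions, and those labels are added to the training set, creating more robust datasets for imitation learning.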

> Inverse Reinforcement Learning (IRL) - Learning policies from demonstrators by inferring their reward function (i.e., trying to understand what the demonstrator's goals are).
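A minimal sketch of the DAgger loop on a hypothetical one-dimensional task: the `expert`, the toy dynamics, and the linear learner are all illustrative assumptions. The key step is that the learner, not the expert, drives the rollouts, and the expert only labels the states the learner actually reaches. The function returns the final policy and the aggregated dataset.

```python
import random


def expert(x):
    # Hypothetical expert: always steps halfway back toward the origin.
    return -0.5 * x


def fit_linear_policy(dataset):
    # Behavior cloning as least squares: fit a = w * x to (state, action) pairs.
    w = sum(x * a for x, a in dataset) / sum(x * x for x, _ in dataset)
    return lambda x: w * x


def rollout(policy, x0, steps, rng):
    # Toy noisy dynamics: the next state is the current state plus the action.
    states, x = [], x0
    for _ in range(steps):
        states.append(x)
        x = x + policy(x) + rng.gauss(0.0, 0.1)
    return states


def dagger(iterations=5, steps=20, seed=0):
    rng = random.Random(seed)
    # Round 0: ordinary behavior cloning on the expert's own trajectory.
    dataset = [(x, expert(x)) for x in rollout(expert, 2.0, steps, rng)]
    policy = fit_linear_policy(dataset)
    for _ in range(iterations):
        # Let the *learner* act, so we see the states it actually drifts into...
        visited = rollout(policy, rng.uniform(-3.0, 3.0), steps, rng)
        # ...then have the expert label those states and aggregate everything.
        dataset += [(x, expert(x)) for x in visited]
        policy = fit_linear_policy(dataset)
    return policy, dataset


policy, data = dagger()
print(len(data), policy(1.0))  # 120 samples; the fit recovers the expert's -0.5 * x
```

Because the toy expert is exactly linear, plain behavior cloning would already recover it; the example only shows the shape of the data-collection loop, not DAgger's advantage on harder tasks.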

Generalization & Robotic Transformers

> End-to-end Learning - Researchers started to train robotic control policies as a single integrated visual and motor system, rather than as separate components. This started the end-to-end integration trend that continues today.

> Tele-operation (BC-Z, ALOHA) - Cheaper tele-operation hardware and better training methods enabled the first capable robots trained on large volumes of data from human demonstrators operating robots. This is now the most widely used method for training frontier robotic control systems.

> Robotic Transformer (RT1) - The first successful transformer-based robotics model: it passed a sequence of images and a text instruction to a transformer, which output discretized action tokens that control the robot's actuators.

> Grounding Language Models (SayCan) - Combined an LLM's task planning with learned value functions that estimate which skills the robot can actually perform in its current scene, grounding the plan in the robot's real capabilities and environment.

> Action Chunking Transformer (ACT) - A transformer-based robotic architecture that introduced "action chunking", allowing the model to predict the next sequence of actions instead of a single time step. This enabled far smoother motor control.

> Vision-Language-Action Models (RT2) - Used modern vision-language models (VLMs) pre-trained on internet-scale data and fine-tuned them with robotic action data to achieve state-of-the-art results. Arguably the most impactful milestone in recent robotics research.

> Cross-embodiment (PI0) - Training robotics models that work across a variety of hardware systems, allowing the model to generalize beyond an individual robot to broad manipulation skills. Combined ACT-style action chunking + VLA + diffusion-style (flow-matching) action generation into a single SOTA model.
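Action chunking can be sketched as follows, assuming a hypothetical `policy(t, k)` that returns the next `k` scalar actions. Following the temporal ensembling described for ACT, the policy is queried every step and the overlapping predictions for the current step are averaged with weights `exp(-m * i)`, where `i = 0` is the oldest prediction; `m = 0.1` here is an arbitrary choice.

```python
import math


def temporal_ensemble(chunks, t, m=0.1):
    """Combine every stored chunk's prediction for timestep t.

    chunks maps the step s at which a chunk was predicted to its actions for
    steps s, s+1, ..., s+k-1. Weights are exp(-m * i), with i = 0 for the
    oldest prediction.
    """
    preds = [acts[t - s] for s, acts in sorted(chunks.items()) if 0 <= t - s < len(acts)]
    weights = [math.exp(-m * i) for i in range(len(preds))]
    return sum(w * a for w, a in zip(weights, preds)) / sum(weights)


def run_with_chunking(policy, steps, k=4, m=0.1):
    chunks, executed = {}, []
    for t in range(steps):
        chunks[t] = policy(t, k)  # predict the next k actions at every step
        executed.append(temporal_ensemble(chunks, t, m))
        chunks.pop(t - k + 1, None)  # this chunk has no predictions left for later steps
    return executed


# Hypothetical policy whose chunks all agree on a simple ramp of actions.
ramp = run_with_chunking(lambda t, k: [float(t + i) for i in range(k)], steps=6)
print(ramp)  # about [0.0, 1.0, 2.0, 3.0, 4.0, 5.0], since every chunk agrees
```

Averaging overlapping chunks smooths out jumps between consecutive predictions. The simpler alternative is to execute each chunk open-loop for `k` steps before querying the policy again.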

Note that I didn't focus on robotic hardware in this tweet because most recent progress in robotics has been on the software side; the hardware aspects are covered in the full repository at the end of the thread.

Step #2 ✅: Building core intuitions from each paper

I initially focused on building the core intuitions for the innovations introduced by each paper.

First, I tried to build an intuition for the fundamental goals and challenges of the robotics problem.

I saw that at the simplest level, robots convert ideas into actions.

In order to accomplish this, robotic systems need to:

1. Observe and understand the state of their environment

2. Plan what actions they need to take to accomplish their goals

3. Know how to physically execute these actions with their hardware

These requirements cover the 3 essential functions of all robotic systems:

1. Perception

2. Planning

3. Control
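The three functions can be sketched as one loop for a hypothetical robot moving along a line. Everything here (the sensor noise, the step size, and the gain) is an illustrative assumption. Note that the control step assumes an ideal actuator whose command moves the robot exactly; in the real world, that line is where most of the difficulty lives.

```python
import random


def perceive(true_pos, rng):
    # Perception: estimate the state from a noisy sensor (noise level is assumed).
    return true_pos + rng.gauss(0.0, 0.01)


def plan(estimate, goal, max_step=0.5):
    # Planning: choose the next waypoint, moving at most max_step toward the goal.
    return estimate + max(-max_step, min(max_step, goal - estimate))


def control(estimate, waypoint, gain=0.8):
    # Control: a proportional command toward the waypoint.
    return gain * (waypoint - estimate)


def run_loop(goal=3.0, steps=50, seed=0):
    rng = random.Random(seed)
    pos = 0.0
    for _ in range(steps):
        estimate = perceive(pos, rng)
        waypoint = plan(estimate, goal)
        # Idealized actuator: the command moves the robot by exactly that amount.
        pos += control(estimate, waypoint)
    return pos


print(round(run_loop(), 2))  # close to the goal of 3.0
```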

The entire history of research breakthroughs in robotics falls into innovations in these key areas.

Though we might expect planning to be the most difficult of these problems since it requires complex high-level reasoning, it turns out that this is actually the easiest of the problems and is largely solved.

Meanwhile, robotic control is by far the hardest of these problems, due to the complexity of the real world and the difficulty of predicting physical interactions.

In fact, developing generally-capable robotic control systems is currently the largest barrier to robotics progress.

With this in mind, I focused on understanding the phases of progress in the robotic control problem.

1. Classical Control

Initial classical approaches to control used manually programmed physics models.

These models consistently fell short of general capability because they could not account for the massive number of unmodeled effects (like variable object textures and friction, external forces, and other variance).
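A toy illustration (not from the thread) of how a single unmodeled effect breaks a hand-written physics plan: the planner computes the constant force that moves a frictionless 1 kg block 1 m in 1 s, but the "real" block also has Coulomb friction. The friction coefficient `mu=0.1` is an assumed value.

```python
def planned_force(mass, distance, duration):
    # Hand-written model: frictionless block from rest, so distance = 0.5 * (F / m) * T^2.
    return 2.0 * mass * distance / duration ** 2


def distance_travelled(force, mass, duration, mu=0.0, g=9.81):
    # "Real" block: same push, but with kinetic Coulomb friction the model left out.
    accel = force / mass - mu * g
    if accel <= 0.0:
        return 0.0  # simplification: friction cancels the push and the block stays put
    return 0.5 * accel * duration ** 2


force = planned_force(mass=1.0, distance=1.0, duration=1.0)  # 2.0 N
print(distance_travelled(force, 1.0, 1.0))          # 1.0 m, as planned
print(distance_travelled(force, 1.0, 1.0, mu=0.1))  # about 0.51 m
```

With `mu=0.1`, the block covers only about half of the planned distance. Real scenes contain many such effects at once, which is why hand-modeling each one does not scale.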

This failure of classical control systems resembled the failure of early manual feature engineering approaches in machine learning, which were later replaced by deep learning.

In general, most classical approaches to robotics have long since become obsolete, with the exception of algorithmic approaches to SLAM and locomotion (like ZMP), which are still heavily used in modern state-of-the-art robots.
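For reference, here is a minimal ZMP check under the common cart-table (linear inverted pendulum) simplification, which assumes the robot's center of mass (CoM) stays at a constant height `com_height`. Along one axis the ZMP is then `com_x - (com_height / g) * com_acc_x`, and the robot stays balanced as long as the ZMP remains inside the foot's support region. The foot bounds in the example are made-up values.

```python
def zmp_cart_table(com_x, com_acc_x, com_height, g=9.81):
    """ZMP along one horizontal axis under the cart-table model.

    Assumes the center of mass moves at a constant height com_height (m).
    """
    return com_x - (com_height / g) * com_acc_x


def is_balanced(zmp_x, heel_x, toe_x):
    # The robot does not start tipping while the ZMP stays inside the support region.
    return heel_x <= zmp_x <= toe_x


# Hypothetical foot extending 10 cm behind and 15 cm ahead of the CoM.
for acc in (0.0, 1.0, 2.0):
    z = zmp_cart_table(com_x=0.0, com_acc_x=acc, com_height=0.8)
    print(acc, round(z, 3), is_balanced(z, heel_x=-0.10, toe_x=0.15))
```

For example, with the CoM 0.8 m high and accelerating forward at 1 m/s², the ZMP sits about 8 cm behind the CoM; at 2 m/s² it moves about 16 cm back, past the heel of this hypothetical foot, so the robot would start to tip.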

2. Deep Reinforcement Learning

With deep reinforcement learning models surpassing human capabilities in games like Atari, Go, and Dota 2 in the 2010s, robotics researchers hoped to apply these advancements to robotics.

This progress was particularly promising for robotics because robotic control is essentially a reinforcement learning problem: the robot (agent) needs to learn to take actions in an environment (to control its actuators) to maximize reward (effectively executing planned actions).

The development of modern reinforcement learning algorithms like Proximal Policy Optimization (PPO) and Soft Actor-Critic (SAC) provided optimization methods with sufficient speed and sample efficiency to effectively train robots (often in simulation).
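The "step-sizing" idea behind PPO shows up in its clipped surrogate objective (Schulman et al., 2017), sketched below for plain Python lists. Each ratio compares the new policy's probability of a sampled action with the old policy's, and `eps=0.2` is the clipping range used in the PPO paper; a real implementation would compute this over tensors and maximize it with gradient ascent.

```python
def ppo_clip_objective(ratios, advantages, eps=0.2):
    """Mean PPO-Clip surrogate objective (to be maximized) over a batch of samples.

    ratios[i]     = pi_new(a_i | s_i) / pi_old(a_i | s_i)
    advantages[i] = advantage estimate A_i for that sample (e.g., from GAE)
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped_r = max(1.0 - eps, min(1.0 + eps, r))
        # The min keeps the pessimistic term, so moving r outside
        # [1 - eps, 1 + eps] in the advantage's favored direction earns nothing extra.
        total += min(r * adv, clipped_r * adv)
    return total / len(ratios)


print(ppo_clip_objective([1.5, 0.5], [1.0, -1.0]))  # about 0.2: both terms are clipped
```

Once a ratio leaves `[1 - eps, 1 + eps]` in the direction the advantage favors, the objective stops improving, so a single update gains nothing from moving the policy further.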

This brought a new wave of deep-learning-based robotic control that demonstrated generalization capabilities far beyond classical control methods, especially in problems like locomotion and dexterous manipulation.

3. Simulation & Imitation Learning

At the same time as these innovations in deep RL algorithms, progress in simulation & imitation learning also enabled better robotic control policies.

Specifically, the MuJoCo simulator (built for robotics, with more accurate physics computations) and methods like domain/dynamics randomization helped overcome the simulation-to-reality transfer problem: control policies would learn to do well in simulation by exploiting its inaccuracies, and then fail when used in the real world.
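Domain and dynamics randomization can be sketched as sampling a fresh set of physics parameters for every training episode. The parameter names and ranges below are hypothetical; in practice they are tuned per robot (the "Simulation Optimization" item earlier in the thread), since ranges that are too narrow may not transfer and ranges that are too wide can make the task hard to learn.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    friction: float        # contact friction coefficient
    mass_scale: float      # multiplier on every link mass
    motor_strength: float  # multiplier on actuator torque limits
    latency_steps: int     # control delay, in simulator steps


def sample_physics(rng):
    # Hypothetical ranges around nominal values; real projects tune these per robot.
    return PhysicsParams(
        friction=rng.uniform(0.5, 1.5),
        mass_scale=rng.uniform(0.8, 1.2),
        motor_strength=rng.uniform(0.8, 1.2),
        latency_steps=rng.randint(0, 3),
    )


def randomized_episode_configs(n, seed=0):
    # Each training episode would reset the simulator with a different "world".
    rng = random.Random(seed)
    return [sample_physics(rng) for _ in range(n)]


for cfg in randomized_episode_configs(3):
    print(cfg)
```

A policy trained across all of these worlds has to work in each of them, which is what pushes it to treat the real robot as one more sample.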

Additionally, imitation learning methods like behavior cloning and inverse reinforcement learning enabled robotic control learned from expert demonstrations.

4. Generalization & Robotic Transformers

Most recently, we have applied lessons from the success of training LLMs to robotics, yielding a new wave of frontier robotics models.

Specifically, we have started to train large transformers on internet-scale data and tune them specifically to the robotics problem. This has led to state-of-the-art results.

For example, the vision-language-action (VLA) model proposed by RT2 took a large vision-language model (a multimodal LLM) pre-trained on internet data and fine-tuned it to output robot actions.

This system was able to apply the high-level reasoning and visual understanding capabilities of VLMs to robotics, effectively solving the robotic planning problem and adding impressive generalization capabilities to robotic control.
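One detail that makes this possible: RT-1 and RT-2 discretize each continuous action dimension into 256 bins, so an action becomes a short sequence of integers that a transformer can emit like text. The sketch below assumes uniform bins over a known range per dimension; how the integers are mapped onto the language model's vocabulary is omitted, and this is not the papers' code.

```python
def tokenize_action(action, low, high, n_bins=256):
    """Map each continuous action dimension to an integer bin in [0, n_bins - 1]."""
    tokens = []
    for a, lo, hi in zip(action, low, high):
        a = min(max(a, lo), hi)  # clip to the valid range
        idx = int((a - lo) / (hi - lo) * n_bins)
        tokens.append(min(idx, n_bins - 1))  # a == hi would otherwise land in bin n_bins
    return tokens


def detokenize_action(tokens, low, high, n_bins=256):
    """Map bin indices back to the centre of each bin."""
    return [lo + (t + 0.5) * (hi - lo) / n_bins for t, lo, hi in zip(tokens, low, high)]


low, high = [-1.0, -1.0], [1.0, 1.0]
tokens = tokenize_action([0.0, 1.0], low, high)
print(tokens)                                # [128, 255]
print(detokenize_action(tokens, low, high))  # [0.00390625, 0.99609375]
```

Round-tripping through the tokens costs at most half a bin width of precision per dimension (about 0.004 for a range of [-1, 1]).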

It's hard to overestimate how much value VLMs have brought to robotic planning and reasoning capabilities; this has been a major unlock on the path toward general-purpose robotics.

At this point, all frontier robotics models are using a combination of the VLA + ACT architecture with their own combination of internet and manually collected data, with pi0 representing the most impressive publicly released model today with frontier generalization capabilities.

Step #3 ✅: Reframing the future of humanoid robotics (the most interesting part)

With all this context, it became much clearer to me what we need to accomplish in order to reach the goal of fully autonomous general-purpose robotics.

Robotic perception and planning are largely solved problems - though there is plenty of room for improvement, modern robots demonstrate capabilities and generalization sufficient for many real-world tasks.

Meanwhile, achieving generalization in robotic control is currently the largest barrier to progress.

Current state-of-the-art robotic control systems show generalization to new objects, environments, and instructions, but they show very little ability to generalize to new manipulation skills.

This is no small problem: most real-world tasks require complex multi-step motor routines (often under-appreciated by humans because motor tasks are second-nature to us), so being unable to generalize to new manipulation skills means being unable to perform most tasks that aren't explicitly in the dataset.

Luckily, we know from recent progress in deep learning that scaling up models (along with the data and compute they are trained on) tends to improve generalization.

However, applying scaling laws to robotics looks very different from LLMs:

> In LLMs, we had an entire internet's worth of data to train on.

> Once we realized scaling laws work, we already had sufficient data and compute necessary to scale up parameters and get better models.

> In robotics, we have plenty of room to scale up compute and parameters.

> However, we are lacking the data to train on.

In fact, the current scale of data being used to train robotics models is likely orders of magnitude too small to achieve full generalization (covered more in depth in the full repository).
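One way to see why data, not parameters, is the bottleneck is the parametric loss fit used in LLM scaling-law work (e.g., the Chinchilla paper), where `N` is the parameter count, `D` is the amount of training data, and `E`, `A`, `B`, `alpha`, `beta` are fitted constants. Whether robot learning follows this same form is an assumption here, not an established result:

```latex
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}},
\qquad
\lim_{N \to \infty} L(N, D) = E + \frac{B}{D^{\beta}}
```

With `D` held fixed, adding parameters and compute can only shrink the `A / N^alpha` term; the `B / D^beta` term sets a floor that only more data can lower.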

So how do we generate the necessary amount of data?

There are 3 approaches that are currently viable:

1. Internet Data - Repurpose existing internet data for robotics training. This is hard because robots usually need training data captured from their own camera viewpoints, joints, and actuators, which internet data rarely provides.

2. Simulation - Training in simulation offers massive parallelization and access to an unbounded amount of data. However, simulations currently lack the complexity that real-world data affords.

3. Real-World Data - The best current approach to collect data with sufficient complexity is to collect it directly from the real world, with tele-operation. This is exactly why most companies have opted to take this approach.

Figuring out a strategy to collect enough tele-operation data to achieve general-purpose robotics is no easy task.

It will involve addressing the following challenges (each addressed more in the full repository):

1. Economic Self-Sufficiency - In order to sustainably collect enough data to achieve generalization through tele-operation, current robotics companies will need to find a way to make data collection economically self-sufficient (using some combination of labor arbitrage + doing jobs that humans can't/won't do).

2. Data Signal - Many robotics companies are opting to collect this data through deployments in industrial settings. However, another important consideration is that these robot deployments need to be collecting data with sufficient signal about the real world, including data about a variety of environments/scenarios. This means that robots must be deployed in contexts with sufficient variance.

3. Capital - Given the realities of current datasets and the scale of data necessary for true generalization, this approach will likely be highly capital-intensive, requiring many years of spending to reach data scales necessary for truly autonomous general-purpose humanoids. This has many implications for how robotics companies must approach data collection (will these companies be able to sustain their burn for 5-10 years on venture capital alone?)

So in order to get to the promised state of humanoid robotics, it will require:

1. Constructing entire hardware supply chains and manufacturing processes

2. Collecting large amounts of data

3. Likely burning through capital for a long time (maybe more than 5-10 years) before really good autonomous robots are ready for the world

It is also worth noting that the internet-scale datasets we used to create frontier generative models were created by network effects that played out over decades and created trillions of dollars of value for the world. This made it economically feasible to generate a dataset at such a scale.

If we tried today to directly spend capital to create a similar dataset for training LLMs, it seems far-fetched that we could replicate something of comparable quality to the internet.

But this is similar to what we're trying to do for robotics today, and the robotic manipulation problem appears to be orders of magnitude more complex than learning human language.

With all this context, we now have a more grounded perspective of current robotics capabilities, the challenges in the way of reaching humanoid robotics, and a more realistic timeline for when we might achieve this technology.

This is not meant to provide a pessimistic perspective on robotics, but rather is meant to provide a perspective grounded in what current research frontiers suggest.

There's still much to cover about this topic (this tweet is already way too long), but I just tried to cover the high-level details here.

For those curious to learn about this topic in-depth, I would highly recommend reading through the full post below!

In there, I go into far more depth about:

> The specific details of the research breakthroughs that have gotten us to modern robotics

> How much data do we need to achieve general-purpose robotics?

> What is the correct strategy to collect this data?

> How long will it take to collect this data?

> Who is most likely to win the humanoid arms race?

> What does the robotics problem teach us about the human brain and our biology?

> etc.

With all this context, it became much more clear to me what we need to accomplish in order to get to the goal of fully-autonomous general-purpose robotics.

Robotic perception and planning are largely solved problems - though there is plenty of room for improvement, modern robots demonstrate capabilities and generalization sufficient for many real-world tasks.

Meanwhile, achieving generalization in robotic control is currently the largest barrier to progress.

Current state-of-the-art robotic control systems show generalization to new objects, environments, and instructions, but they show very little ability to generalize to new manipulation skills.

This is no small problem: most real-world tasks require complex multi-step motor routines (often under-appreciated by humans because motor tasks are second-nature to us), so being unable to generalize to new manipulation skills means being unable to perform most tasks that aren't explicitly in the dataset.

Luckily, we know from recent progress in deep learning that we can just scale up our models to improve generalization.

However, applying scaling laws to robotics looks very different than with LLMs:

> In LLMs, we had the entire internet worth of data to train on.

> Once we realized scaling laws work, we already had sufficient data and compute necessary to scale up parameters and get better models.

> In robotics, we have plenty of room to scale up compute and parameters.

> However, we are lacking the data to train on.

In fact, the current scale of data being used to train robotics models is likely orders of magnitude too small to achieve full generalization (covered more in depth in the full repository).

So how do we generate the necessary amount of data?

There are 3 approaches that are currently viable:

1. Internet Data - Repurpose current internet data for robotics training. This is hard because robots usually require data specifically from the same camera angles, joints, and actuators on the robot for training.

2. Simulation - Training in simulation offers massive parallelization and access to an unbounded amount of data. However, simulations currently lack the complexity that real-world data affords.

3. Real-World Data - The best current approach to collect data with sufficient complexity is to collect it directly from the real world, with tele-operation. This is exactly why most companies have opted to take this approach.

Figuring out a strategy to collect enough tele-operation data to achieve general-purpose robotics is no easy task.

It will involve addressing the following challenges (each addressed more in the full repository):

1. Economic Self-Sufficiency - In order to sustainably collect enough data to achieve generalization through tele-operation, current robotics companies will need to find a way to make data collection economically self-sufficient (using some combination of labor arbitrage + doing jobs that humans can't/won't do).

2. Data Signal - Many robotics companies are opting to collect this data through deployments in industrial settings. However, another important consideration is that these robot deployments need to be collecting data with sufficient signal about the real world, including data about a variety of environments/scenarios. This means that robots must be deployed in contexts with sufficient variance.

3. Capital - Given the realities of current datasets and the scale of data necessary for true generalization, this approach will likely be highly capital intensive, requiring many years of spending to reach the data scales needed for truly autonomous general-purpose humanoids. This has many implications for how robotics companies must approach data collection (will these companies be able to sustain their burn for 5-10 years on venture capital alone?)
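The self-sufficiency and capital points reduce to simple arithmetic. Below is a back-of-envelope sketch in which every input is a hypothetical placeholder, not an estimate from this thread (the thread's own data-scale estimates live in the full repository).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FleetAssumptions:
    """HYPOTHETICAL inputs for a tele-operation data-collection fleet."""
    target_hours: float             # total tele-op hours assumed to be needed
    num_robots: int                 # robots collecting in parallel
    hours_per_robot_per_day: float  # operating hours per robot per day
    cost_per_hour: float            # operator + hardware cost, $/hour
    revenue_per_hour: float         # value of useful work done while collecting, $/hour


def years_to_target(a: FleetAssumptions) -> float:
    """Calendar years of collection at a constant fleet size."""
    hours_per_year = a.num_robots * a.hours_per_robot_per_day * 365
    return a.target_hours / hours_per_year


def net_spend(a: FleetAssumptions) -> float:
    """Total cost minus revenue over the whole collection effort, in $."""
    return a.target_hours * (a.cost_per_hour - a.revenue_per_hour)


def is_self_sufficient(a: FleetAssumptions) -> bool:
    """True when the work done pays for the data collection."""
    return net_spend(a) <= 0


if __name__ == "__main__":
    example = FleetAssumptions(
        target_hours=2_920_000,
        num_robots=1_000,
        hours_per_robot_per_day=8,
        cost_per_hour=40,
        revenue_per_hour=25,
    )
    print(f"years: {years_to_target(example):.1f}")
    print(f"net spend: ${net_spend(example):,.0f}")
    print(f"self-sufficient: {is_self_sufficient(example)}")
```

Both levers from the list show up directly: `revenue_per_hour` (labor arbitrage, jobs humans can't or won't do) decides whether `net_spend` goes negative, and `num_robots` trades up-front capital for calendar time.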

So reaching the promised state of humanoid robotics will require:

1. Constructing entire hardware supply chains and manufacturing processes

2. Collecting large amounts of data

3. Likely burning through capital for a long time (possibly 5-10 years or longer) before really good autonomous robots are ready for the world

It is also worth noting that the internet-scale datasets we used to create frontier generative models were all produced by network effects that played out over decades and generated trillions of dollars of value for the world. This made it economically feasible to generate a dataset at such a scale.

If we tried today to spend capital directly on creating a comparable dataset to train LLMs, it seems far-fetched that we could replicate anything close to the quality of the internet.

But this is similar to what we're trying to do for robotics today, and the robotic manipulation problem appears to be orders of magnitude more complex than learning human language.

With all this context, we now have a more grounded perspective on current robotics capabilities, the challenges standing between us and humanoid robotics, and a more realistic timeline for when we might achieve this technology.

This is not meant to be a pessimistic take on robotics, but rather a perspective grounded in what the current research frontier suggests.

There's still much to cover about this topic (this tweet is already way too long), but I've tried to stick to the high-level details here.

For those curious to learn about this topic in depth, I'd highly recommend reading through the full post below!

In there, I go into far more depth about:

> The specific details of the research breakthroughs that have gotten us to modern robotics

> How much data do we need to achieve general-purpose robotics?

> What is the correct strategy to collect this data?

> How long will it take to collect this data?

> Who is most likely to win the humanoid arms race?

> What does the robotics problem teach us about the human brain and our biology?

> etc.

For those curious, here's the complete list of papers/links I used to learn about the history of robotics progress:

Perception

> SLAM - ieeexplore.ieee.org/stamp/stamp.js…

> SIFT - cs.ubc.ca/~lowe/papers/i…

> ORB-SLAM - arxiv.org/pdf/1502.00956

> DROID-SLAM - arxiv.org/pdf/2108.10869

Planning

> A-star - ai.stanford.edu/~nilsson/Onlin…

> PRM - cs.cmu.edu/~motionplannin…

> RRT - msl.cs.illinois.edu/~lavalle/paper…

> CHOMP - ri.cmu.edu/pub_files/2009…

> TrajOpt - roboticsproceedings.org/rss09/p31.pdf

> STRIPS - ai.stanford.edu/~nilsson/Onlin…

> Max-Q - arxiv.org/pdf/cs/9905014

> PDDL - arxiv.org/pdf/1106.4561

> ASP - cs.utexas.edu/~vl/papers/wia…

> Clingo - arxiv.org/pdf/1405.3694

Reinforcement Learning

> MDP - arxiv.org/pdf/cs/9605103

> Atari - arxiv.org/pdf/1312.5602

> A3C - arxiv.org/pdf/1602.01783

> TRPO - arxiv.org/pdf/1502.05477

> GAE - arxiv.org/pdf/1506.02438

> PPO - arxiv.org/abs/1707.06347

> DDPG - arxiv.org/pdf/1509.02971

> SAC - arxiv.org/pdf/1801.01290

> Curiosity - arxiv.org/pdf/1808.04355

Simulation

> MuJoCo - homes.cs.washington.edu/~todorov/paper…

> Domain Randomization - arxiv.org/pdf/1703.06907

> Dynamics Randomization - arxiv.org/pdf/1710.06537

> OpenAI Dexterous Manipulation - arxiv.org/pdf/1808.00177

> Simulation Optimization - arxiv.org/pdf/1810.05687

Imitation Learning

> ALVINN - proceedings.neurips.cc/paper/1988/fil…

> DAgger - arxiv.org/pdf/1011.0686

> IRL - ai.stanford.edu/~ang/papers/ic…

> GAIL - arxiv.org/pdf/1606.03476

> MAML - arxiv.org/pdf/1703.03400

> One-Shot - arxiv.org/pdf/1703.07326

Locomotion

> ZMP - researchgate.net/publication/22…

> Preview Control - researchgate.net/publication/40…

> Biped - arxiv.org/pdf/2401.16889

> Quadruped - arxiv.org/pdf/2010.11251

Generalization

> E2E - arxiv.org/pdf/1504.00702

> BC-Z - arxiv.org/pdf/2202.02005

> SayCan - arxiv.org/pdf/2204.01691

> RT1 - arxiv.org/pdf/2212.06817

> ACT - arxiv.org/pdf/2304.13705

> VLA - arxiv.org/pdf/2307.15818

> Pi0 - physicalintelligence.company/download/pi0.p…

My final output (complete synthesis + predictions)

Here's the repository of my full effort with:

> a complete in-depth synthesis of the research breakthroughs in robotics

> my perspective on the future of humanoids

github.com/adam-maj/robot…

Finally, I want to thank @BainCapVC (especially @kevinzhang, @RonMiasnik) for supporting me on my deep dives!
