Step #1 ✅: Learning the fundamentals of robotics from papers

Part 1 ⚛: Understanding the fundamentals of energy

Step #1 ✅: Learning the fundamentals of deep learning from papers

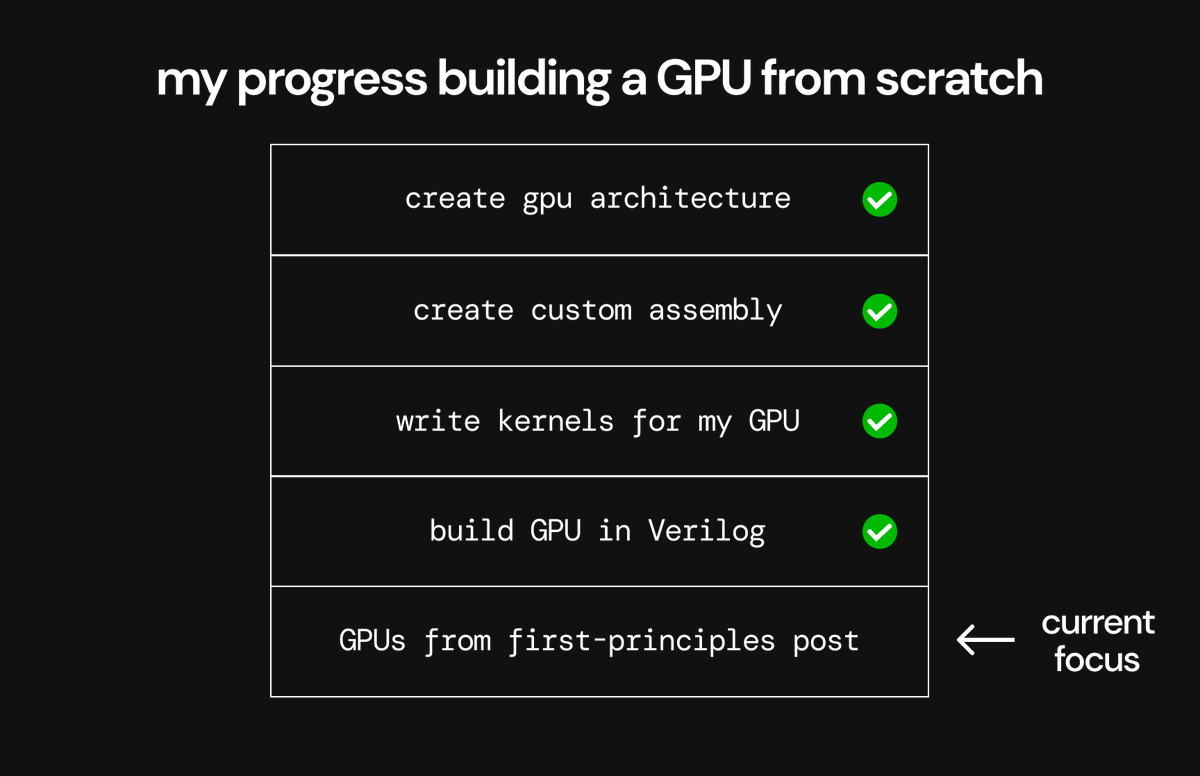

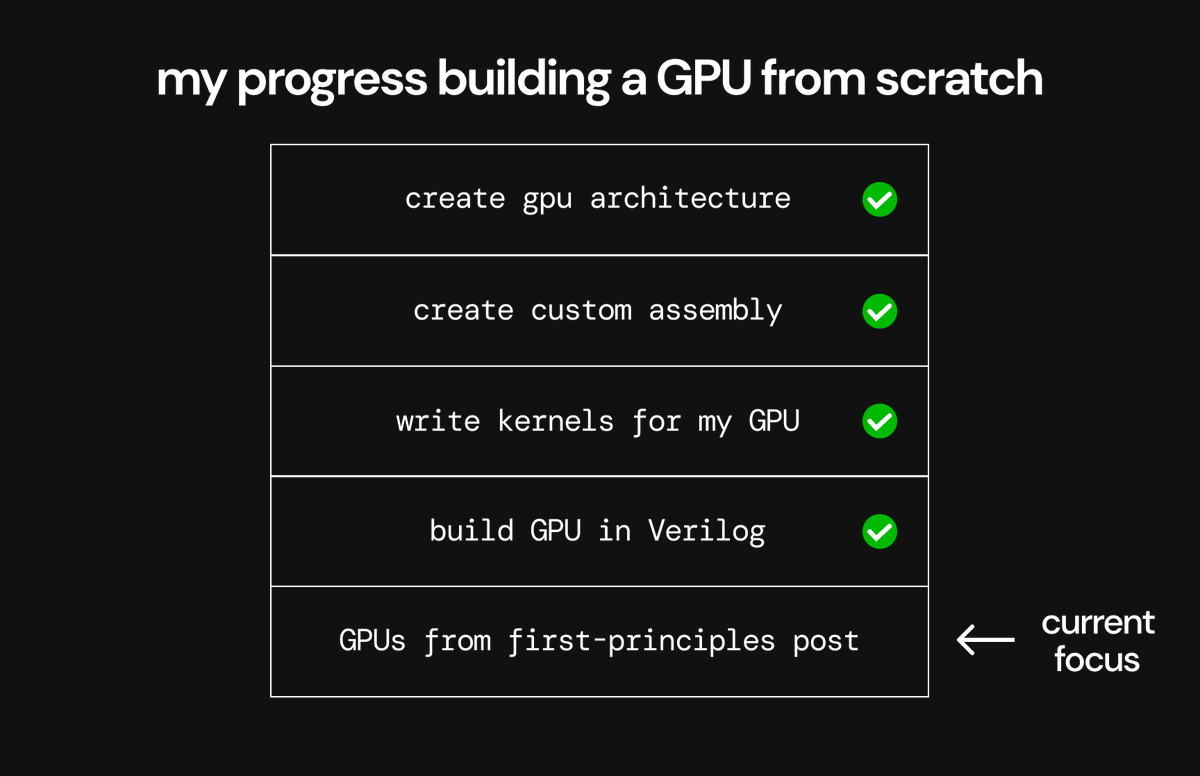

Step 1 ✅: Learning the fundamentals of GPU architectures

Step 1 ✅: Learning the fundamentals of chip architecture