Epoch AI are going to publish more details, but on the OpenAI side for those interested: we did not use FrontierMath data to guide the development of o1 or o3, at all. (1/n)

We didn't train on any FrontierMath-derived data, any FrontierMath-inspired data, or any data targeting FrontierMath in particular (3/n)

I'm extremely confident, because we only downloaded FrontierMath for our evals *long* after the training data was frozen, and only looked at o3 FrontierMath results after the final announcement checkpoint was already picked 😅 (4/n)

We did partner with Epoch AI to build FrontierMath. Hard, uncontaminated benchmarks are incredibly valuable and we build them somewhat often, though we don't usually share results on them. (5/n)

Our agreement with Epoch means that they can evaluate other frontier models and we can evaluate models internally pre-release, as we do on many other datasets (6/n)

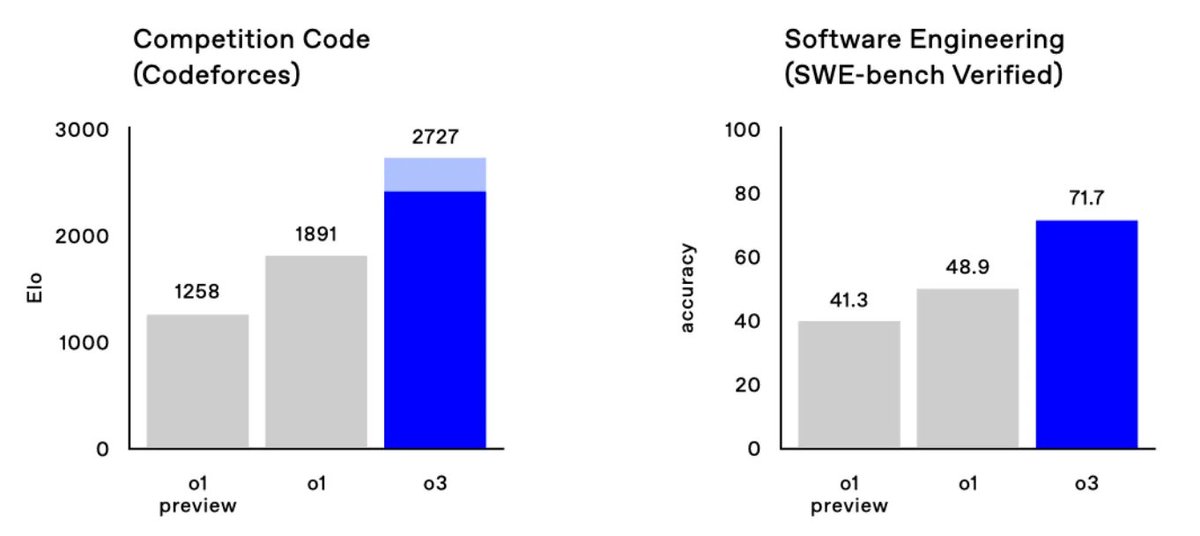

I'm sad there was confusion about this, as o3 is an incredible achievement and FrontierMath is a great eval. We're hard at work on a release-ready o3, and hopefully the release will settle any concerns about the quality of the model! (7/7)
