Complete hardware + software setup for running Deepseek-R1 locally. The actual model, no distillations, and Q8 quantization for full quality. Total cost, $6,000. All download and part links below:

Motherboard: Gigabyte MZ73-LM0 or MZ73-LM1. We want 2 EPYC sockets to get a massive 24 channels of DDR5 RAM to max out that memory size and bandwidth.

gigabyte.com/Enterprise/Ser…

gigabyte.com/Enterprise/Ser…

CPU: 2x any AMD EPYC 9004 or 9005 CPU. LLM generation is bottlenecked by memory bandwidth, so you don't need a top-end one.

Get the 9115 or even the 9015 if you really want to cut costs newegg.com/p/N82E16819113…

Get the 9115 or even the 9015 if you really want to cut costs newegg.com/p/N82E16819113…

RAM: This is the big one. We are going to need 768GB (to fit the model) across 24 RAM channels (to get the bandwidth to run it fast enough). That means 24 x 32GB DDR5-RDIMM modules. Example kits:

v-color.net/products/ddr5-…

newegg.com/nemix-ram-384g…

v-color.net/products/ddr5-…

newegg.com/nemix-ram-384g…

Case: You can fit this in a standard tower case, but make sure it has screw mounts for a full server motherboard, which most consumer cases won't. The Enthoo Pro 2 Server will take this motherboard:

newegg.com/black-phanteks…

newegg.com/black-phanteks…

PSU: The power use of this system is surprisingly low! (<400W) However, you will need lots of CPU power cables for 2 EPYC CPUs. The Corsair HX1000i has enough, but you might be able to find a cheaper option: corsair.com/us/en/p/psu/cp…

Heatsink: This is a tricky bit. AMD EPYC is socket SP5, and most heatsinks for SP5 assume you have a 2U/4U server blade, which we don't for this build. You probably have to go to Ebay/Aliexpress for this. I can vouch for this one: ebay.com/itm/2264992802…

And if you find the fans that come with that heatsink noisy, replacing with 1 or 2 of these per heatsink instead will be efficient and whisper-quiet: newegg.com/noctua-nf-a12x…

And finally, the SSD: Any 1TB or larger SSD that can fit R1 is fine. I recommend NVMe, just because you'll have to copy 700GB into RAM when you start the model, lol. No link here, if you got this far I assume you can find one yourself!

And that's your system! Put it all together and throw Linux on it. Also, an important tip: Go into the BIOS and set the number of NUMA groups to 0. This will ensure that every layer of the model is interleaved across all RAM chips, doubling our throughput. Don't forget!

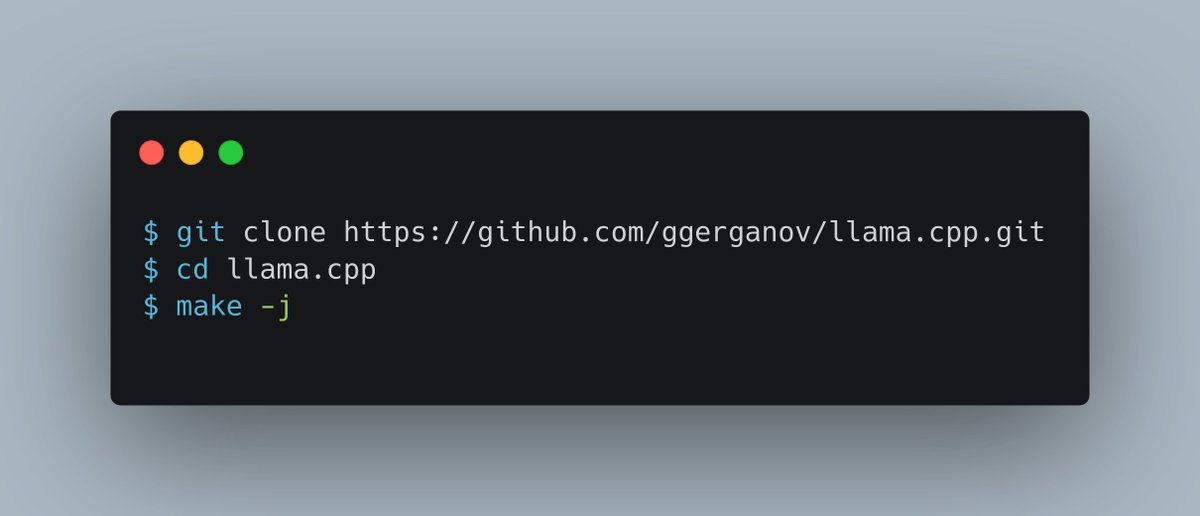

Now, software. Follow the instructions here to install llama.cpp github.com/ggerganov/llam…

Next, the model. Time to download 700 gigabytes of weights from @huggingface! Grab every file in the Q8_0 folder here: huggingface.co/unsloth/DeepSe…

Believe it or not, you're almost done. There are more elegant ways to set it up, but for a quick demo, just do this.

llama-cli -m ./DeepSeek-R1.Q8_0-00001-of-00015.gguf --temp 0.6 -no-cnv -c 16384 -p "<|User|>How many Rs are there in strawberry?<|Assistant|>"

llama-cli -m ./DeepSeek-R1.Q8_0-00001-of-00015.gguf --temp 0.6 -no-cnv -c 16384 -p "<|User|>How many Rs are there in strawberry?<|Assistant|>"

If all goes well, you should witness a short load period followed by the stream of consciousness as a state-of-the-art local LLM begins to ponder your question:

And once it passes that test, just use llama-server to host the model and pass requests in from your other software. You now have frontier-level intelligence hosted entirely on your local machine, all open-source and free to use!

And if you got this far: Yes, there's no GPU in this build! If you want to host on GPU for faster generation speed, you can! You'll just lose a lot of quality from quantization, or if you want Q8 you'll need >700GB of GPU memory, which will probably cost $100k+

Since a lot of people are asking, the generation speed on this build is 6 to 8 tokens per second, depending on the specific CPU and RAM speed you get, or slightly less if you have a long chat history. The clip above is near-realtime, sped up slightly to fit video length limits

Another update: Someone pointed out this cooler, which I wasn't aware of. Seems like another good option if you can find a seller!

https://x.com/Massimo_Italia/status/1884314971691233578

@danbri The clip above is a near-realtime recording, sped up by 1.5X just to make it fit inside Twitter's video length limit

@bobbyroastb33f As long as you don't pipe its code output into a shell, then it can't affect your machine or call home without your permission, no matter how it's been trained

(it can definitely refuse to talk about sensitive topics, though)

(it can definitely refuse to talk about sensitive topics, though)

@hmartinez82 Either way, I'll definitely try it!

@GamaGraphs Other than that it's just patiently shoving a bag of RAM sticks into slots over and over

• • •

Missing some Tweet in this thread? You can try to

force a refresh