OpenAI just dropped a paper that reveals the blueprint for creating the best AI coder in the world.

But here’s the kicker: this strategy isn’t just for coding—it’s the clearest path to AGI and beyond.

Let’s break it down 🧵👇

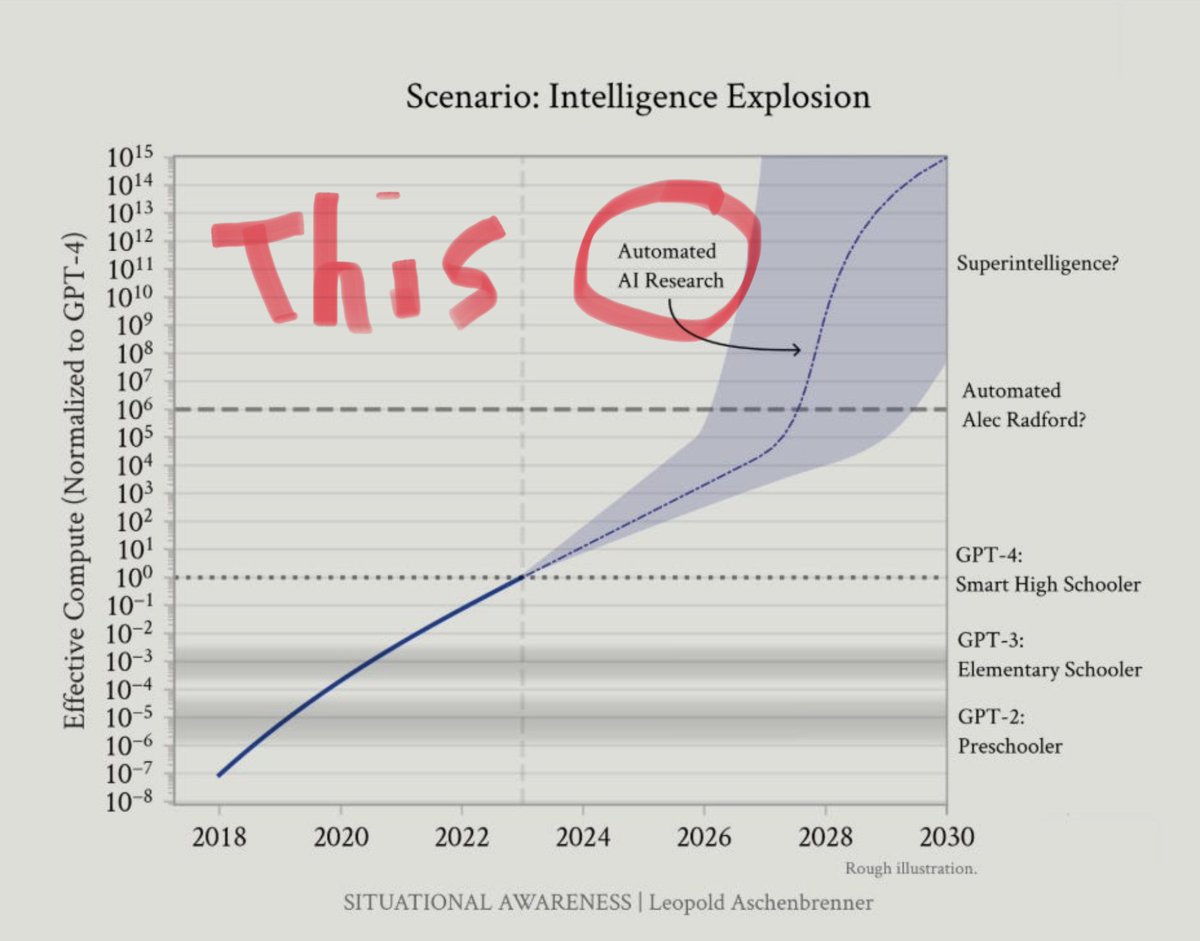

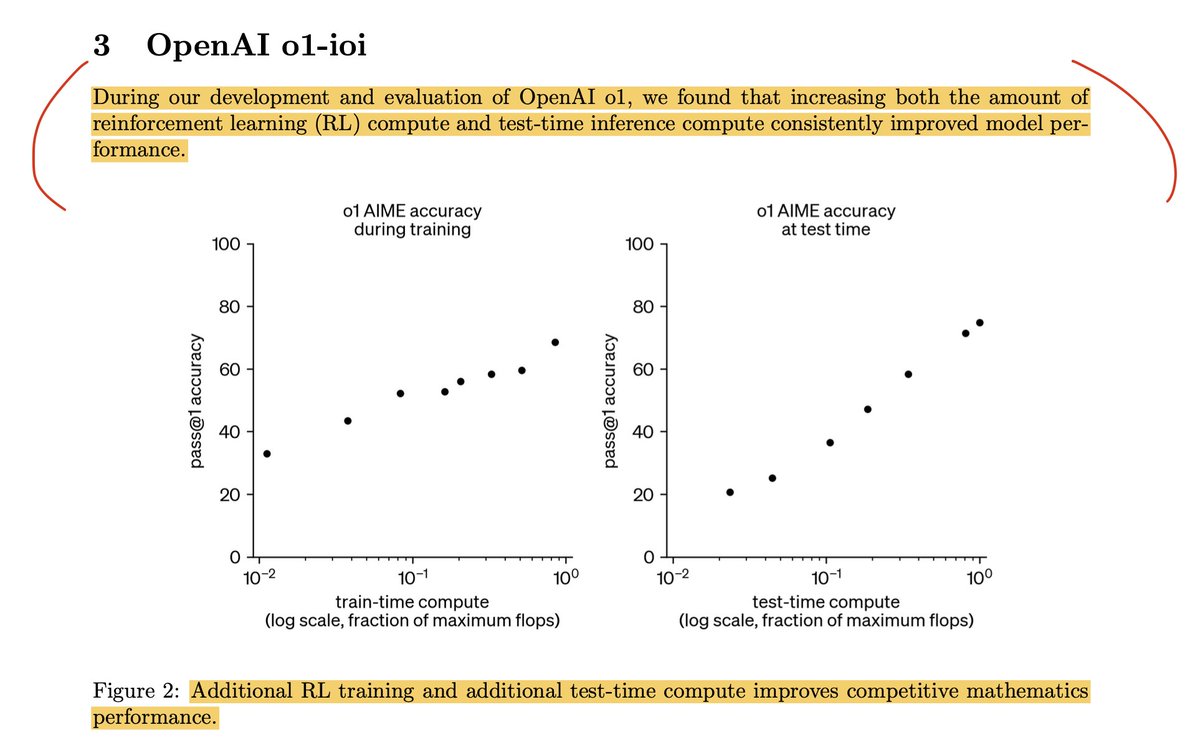

1/ OpenAI’s latest research shows that reinforcement learning + test-time compute is the key to building superintelligent AI.

Sam Altman himself said OpenAI’s model went from ranking 175th to 50th in competitive coding—and expects #1 by year-end.
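One simple way to picture "test-time compute" is repeated sampling plus verification: the more candidates you draw at inference time, the more likely one passes the checks. The sketch below is a generic best-of-n loop under that assumption. It is not the pipeline from the paper. `best_of_n`, `passes_all`, `toy_model` and the candidate functions are hypothetical names used only for illustration.

```python
import random
from typing import Callable, Optional, Sequence, Tuple

# A test case is (positional args, expected return value).
TestCase = Tuple[tuple, object]


def passes_all(candidate: Callable, tests: Sequence[TestCase]) -> bool:
    """Run the candidate on every test; a wrong answer or a crash counts as failure."""
    for args, expected in tests:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            return False
    return True


def best_of_n(
    sample: Callable[[random.Random], Callable],
    tests: Sequence[TestCase],
    n: int,
    seed: int = 0,
) -> Optional[Callable]:
    """Spend more inference compute: draw up to n samples, return the first that verifies."""
    rng = random.Random(seed)
    for _ in range(n):
        candidate = sample(rng)
        if passes_all(candidate, tests):
            return candidate
    return None  # budget exhausted without a verified answer


# Hypothetical "model": samples one of three attempts at absolute value, two of them buggy.
ATTEMPTS = [
    lambda x: x,                    # wrong for negative inputs
    lambda x: -x,                   # wrong for positive inputs
    lambda x: x if x >= 0 else -x,  # correct
]


def toy_model(rng: random.Random) -> Callable:
    return rng.choice(ATTEMPTS)


TESTS = [((3,), 3), ((-4,), 4), ((0,), 0)]

solution = best_of_n(toy_model, TESTS, n=64)
print(solution is not None and solution(-7) == 7)
```

Raising `n` is the "more compute" knob here: each extra sample costs inference time but raises the chance that some attempt verifies.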

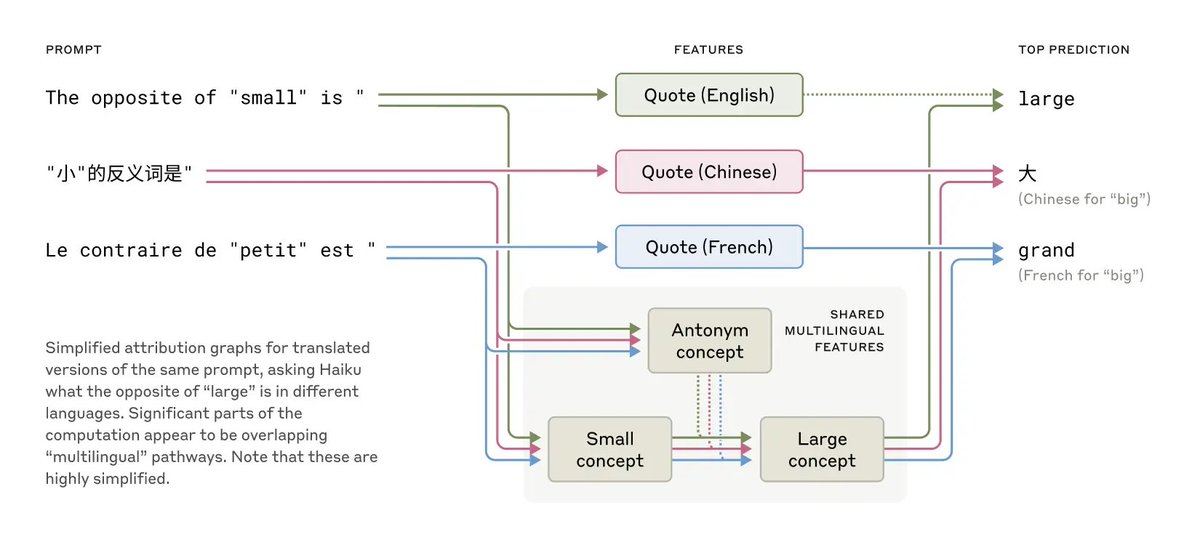

2/ The paper, “Competitive Programming with Large Reasoning Models,” compares different AI coding strategies.

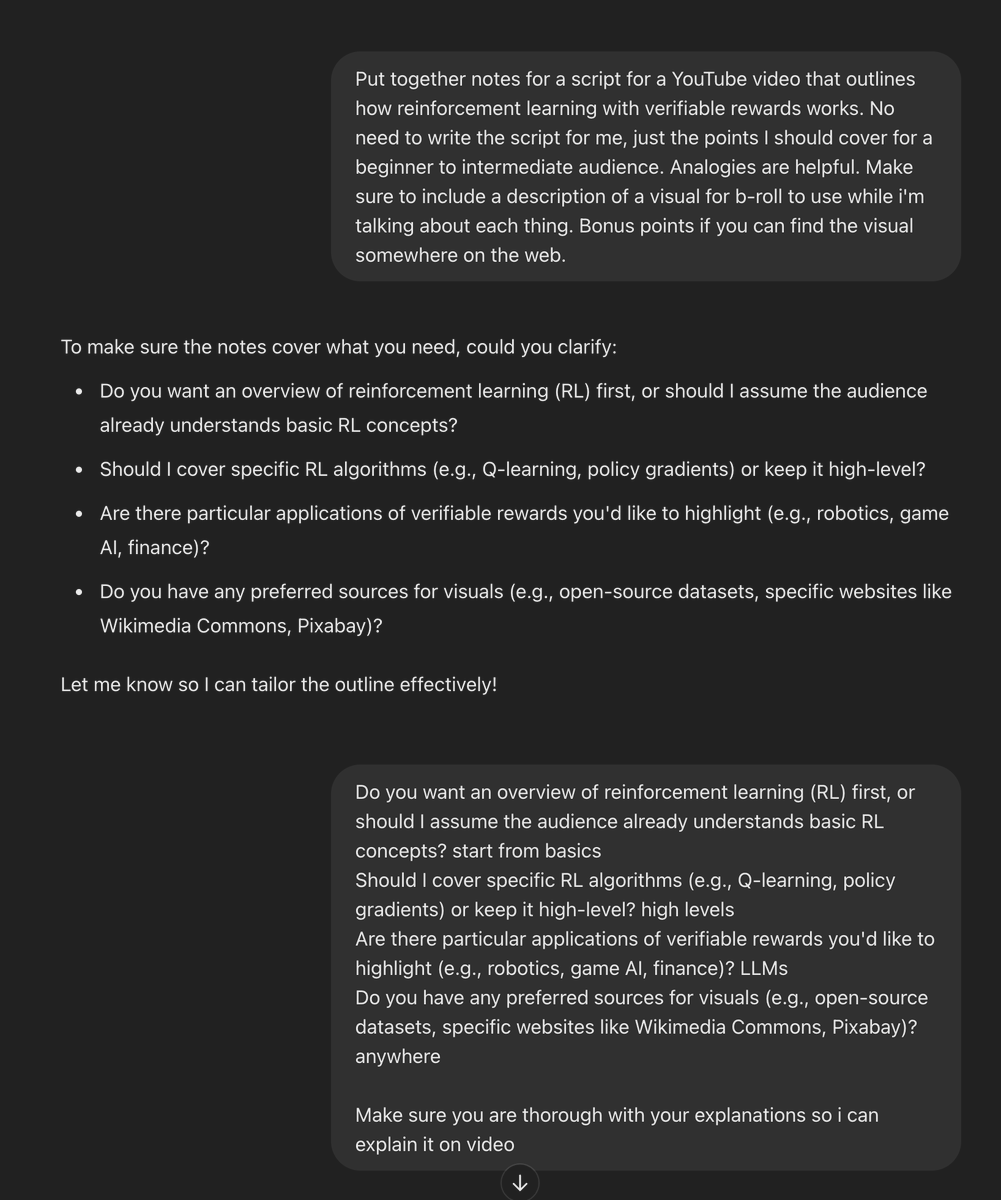

At first, models relied on human-engineered inference strategies—but the biggest leap came when humans were removed from the loop entirely.

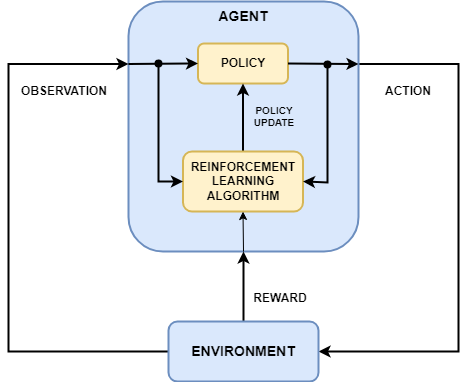

3/ Enter DeepSeek-R1, built on a base model (DeepSeek-V3) whose final training run reportedly cost only ~$5.6M.

Its breakthrough? Reinforcement learning with verifiable rewards.

This method, also used in AlphaGo, lets the model learn through trial and error and keep scaling its intelligence.
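To make "learning from trial and error with verifiable rewards" concrete, here is a toy sketch. The reward is computed automatically by running tests, and a policy over a few fixed candidate programs is nudged toward whatever earns reward. The update rule is the textbook gradient-bandit (REINFORCE-style) algorithm from Sutton and Barto, swapped in for illustration. It is not how DeepSeek-R1 or OpenAI's models are actually trained. `verifiable_reward`, `train_by_trial_and_error` and the candidates are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

TestCase = Tuple[tuple, object]


def verifiable_reward(candidate: Callable, tests: Sequence[TestCase]) -> float:
    """Binary, automatically checkable reward: 1.0 iff every test passes."""
    for args, expected in tests:
        try:
            if candidate(*args) != expected:
                return 0.0
        except Exception:
            return 0.0  # crashing code earns nothing
    return 1.0


def softmax(prefs: List[float]) -> List[float]:
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_by_trial_and_error(
    candidates: Sequence[Callable],
    tests: Sequence[TestCase],
    steps: int = 1000,
    lr: float = 0.2,
    seed: int = 0,
) -> List[float]:
    """Gradient-bandit updates driven only by the verifiable reward; returns final probabilities."""
    rng = random.Random(seed)
    prefs = [0.0] * len(candidates)
    baseline = 0.0  # running mean of past rewards, reduces update variance
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        i = rng.choices(range(len(candidates)), weights=probs)[0]  # try something
        reward = verifiable_reward(candidates[i], tests)           # check it
        advantage = reward - baseline
        for j in range(len(prefs)):
            # The chosen action moves with the sign of the advantage; the others move the opposite way.
            prefs[j] += lr * advantage * ((1.0 if j == i else 0.0) - probs[j])
        baseline += (reward - baseline) / t
    return softmax(prefs)


# Hypothetical candidate "programs" for: return the largest element of a list.
CANDIDATES = [
    lambda xs: xs[0],          # wrong unless the max comes first
    lambda xs: max(xs),        # correct
    lambda xs: sorted(xs)[0],  # returns the minimum: wrong
]
TESTS = [(([3, 9, 2],), 9), (([5],), 5), (([-1, -7],), -1)]

probs = train_by_trial_and_error(CANDIDATES, TESTS)
print([round(p, 3) for p in probs])
```

No human labels which candidate is right; the only signal is whether the tests pass, and probability mass drifts toward the correct program.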

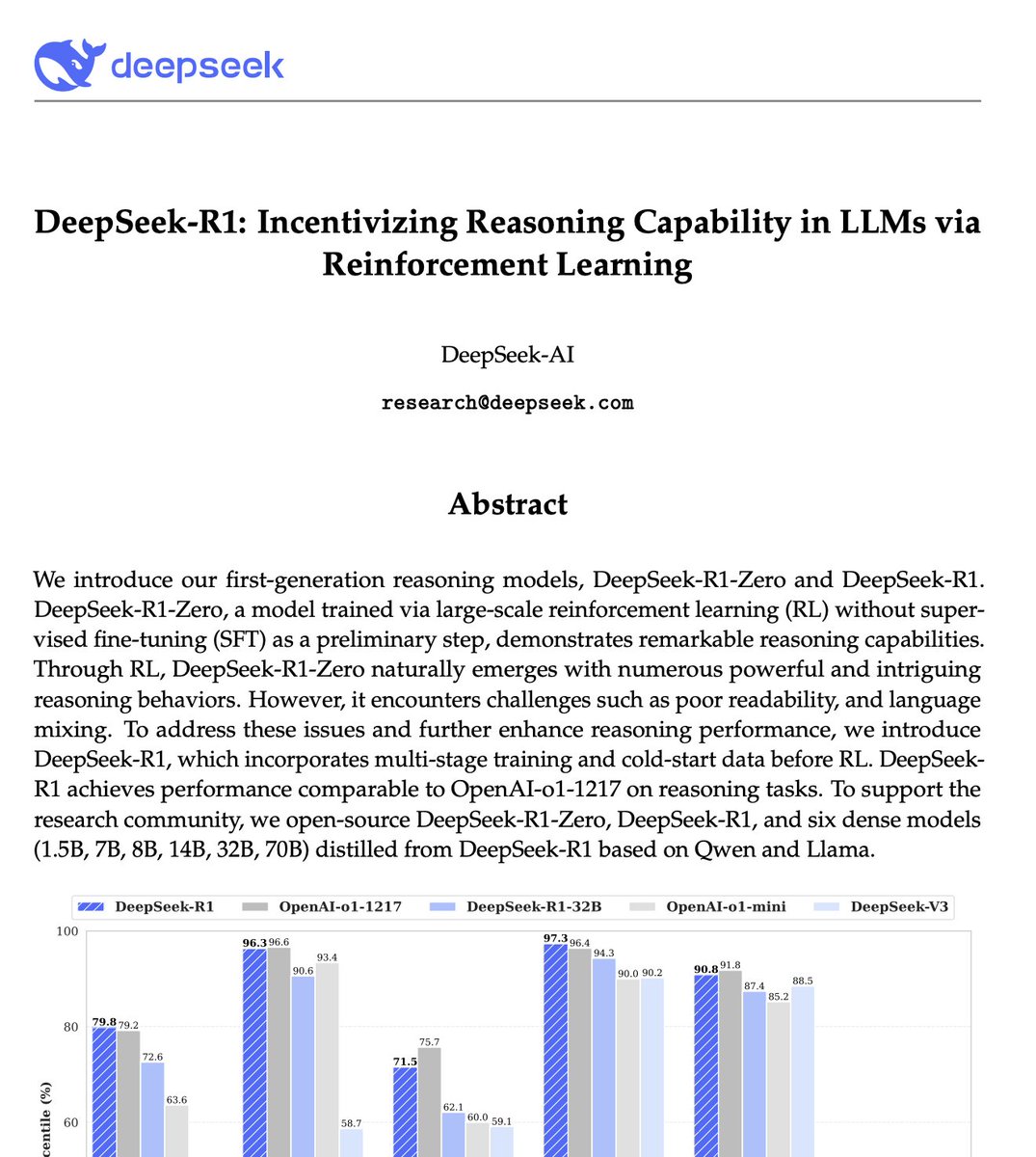

4/ Think about it this way:

AlphaGo Zero became the strongest Go player in the world without learning from any human games.

It just kept playing itself until it mastered the game.

Now, OpenAI is applying the same principle to coding—and soon, to all STEM fields.

5/ What does this mean?

Every domain with verifiable rewards (math, coding, science) can be mastered by AI just by letting it play against itself.

AI is removing human limitations—and that’s how we get to AGI.
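What makes a domain "verifiable" is that a proposed answer can be checked mechanically, often far more cheaply than it can be found. A minimal sketch for math, using integer factorization as a hypothetical task: the reward function below only checks an answer; it does not learn anything. `factorization_reward` is an illustrative name, not part of any training setup described in the paper.

```python
from math import prod
from typing import Sequence


def factorization_reward(n: int, proposed: Sequence[int]) -> float:
    """Reward 1.0 iff `proposed` is a non-trivial factorization of n (at least two factors, each >= 2)."""
    if len(proposed) < 2 or any(f < 2 for f in proposed):
        return 0.0
    # Checking is a single product, even though finding the factors of a large n can be hard.
    return 1.0 if prod(proposed) == n else 0.0


print(factorization_reward(91, [7, 13]))  # 1.0
print(factorization_reward(91, [91]))     # 0.0 (trivial "factorization")
print(factorization_reward(91, [7, 12]))  # 0.0 (wrong product)
```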

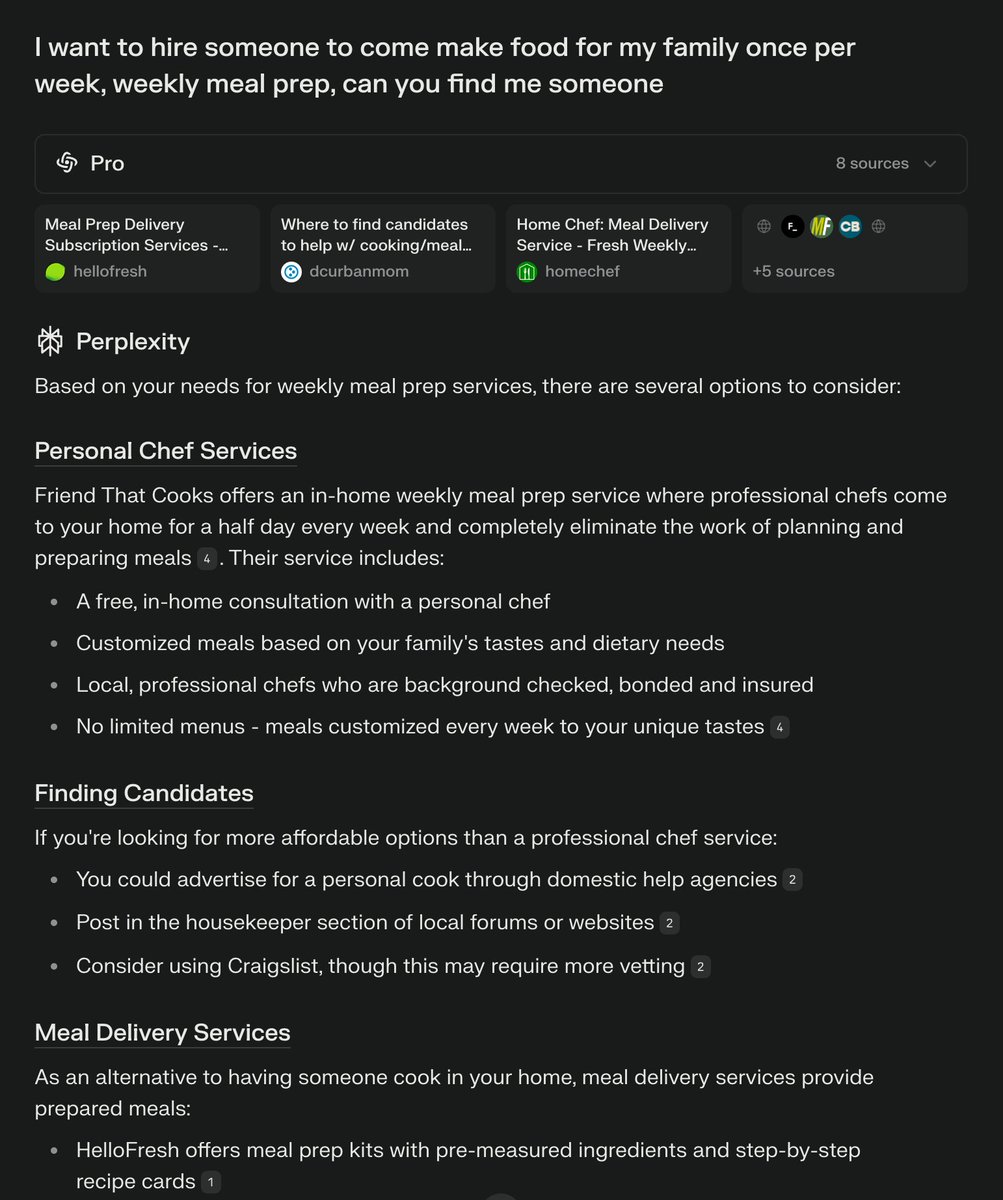

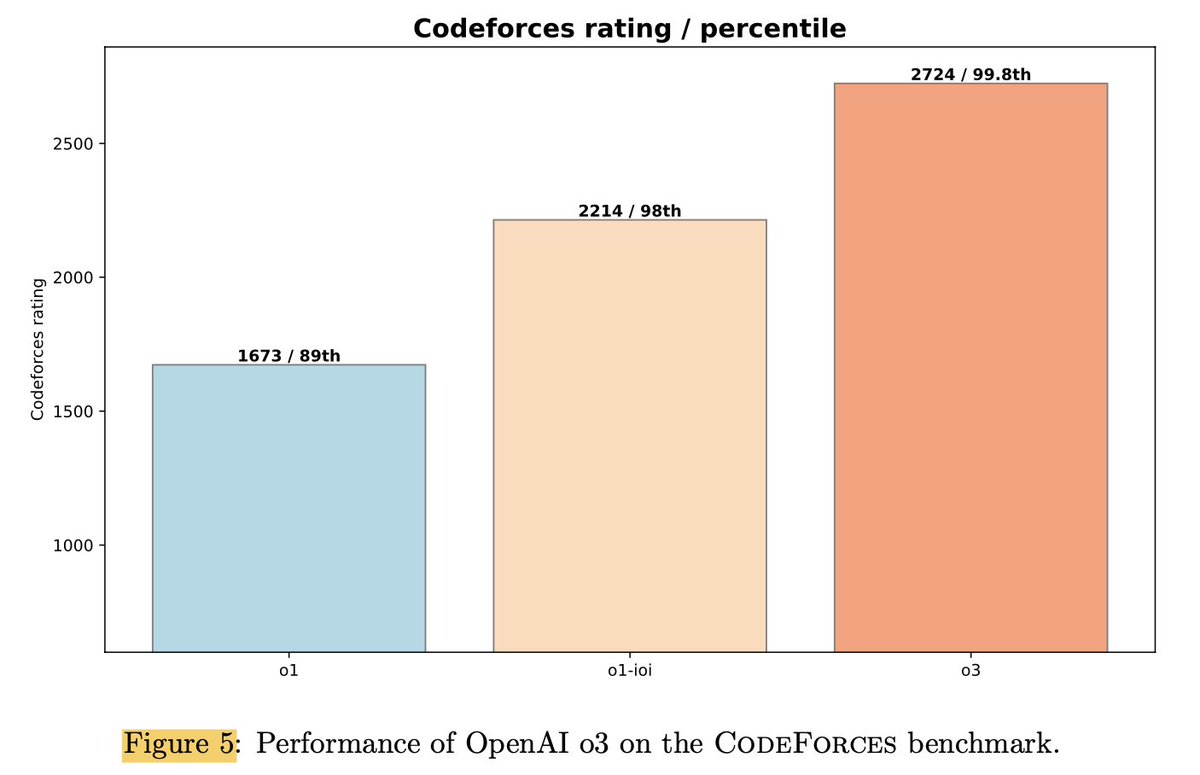

6/ Here are the CodeForces ratings reported in the paper (an Elo-style scale):

• GPT-4o: 808 (decent)

• o1: 1,673 (better)

• o3: 2,724 (elite human level) 🏆

That puts o3 in the 99.8th percentile of competitive programmers, with no human-crafted inference strategies.
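To get a feel for what these rating gaps mean, the standard Elo formula turns a rating difference into an expected score (win = 1, draw = 0.5). Treating CodeForces ratings as plain Elo with a 400-point scale is an assumption here; the site's own system is Elo-like but not identical. `elo_expected_score` is a hypothetical helper.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of player A against player B on a 400-point scale."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Ratings quoted above: 808, 1,673 and 2,724.
for stronger, weaker in [(1673, 808), (2724, 1673)]:
    e = elo_expected_score(stronger, weaker)
    print(f"{stronger} vs {weaker}: expected score {e:.4f}")
```

Under this model, the roughly 1,050-point gap between 2,724 and 1,673 gives the higher-rated side an expected score above 0.99 per head-to-head contest.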

7/ Tesla did this with Full Self-Driving.

They used to rely on a hybrid model (human rules + AI).

But when they switched to end-to-end AI, performance skyrocketed.

AI just needs more compute—not more human intervention.

8/ The takeaway?

Sam Altman was right when he said AGI is just a matter of scaling up.

Reinforcement learning + test-time compute is the formula for intelligence—and OpenAI is already proving it.

9/ We’re witnessing the birth of AI superintelligence in real time.

It won’t stop at coding. The same techniques will make AI the best mathematician, scientist, and engineer in history.

The race to AGI is on.
