Building Forward Future. YouTuber, Angel Investor, Developer, AI Enthusiast.

https://t.co/9rk7dmIboR

5 subscribers

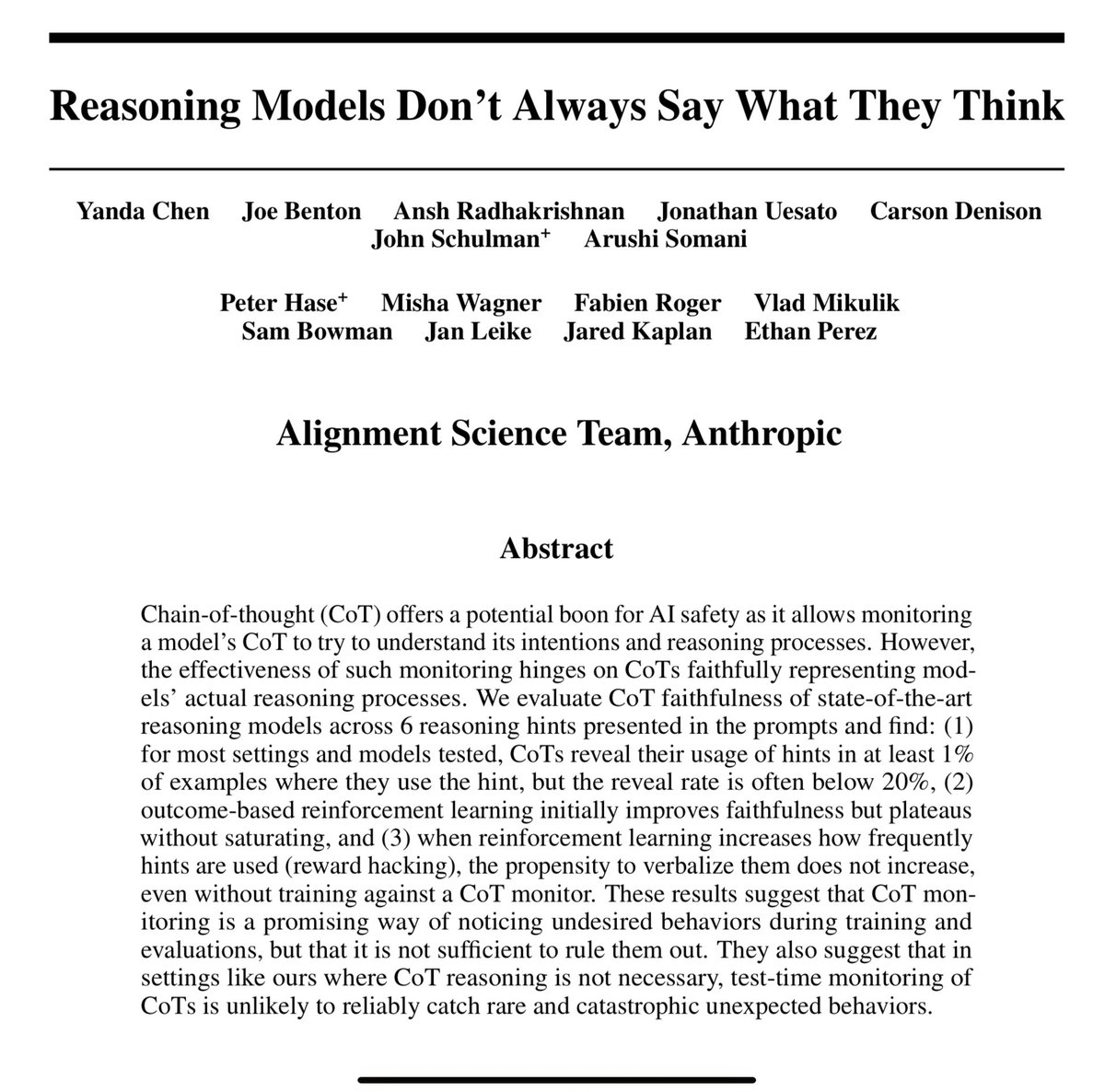

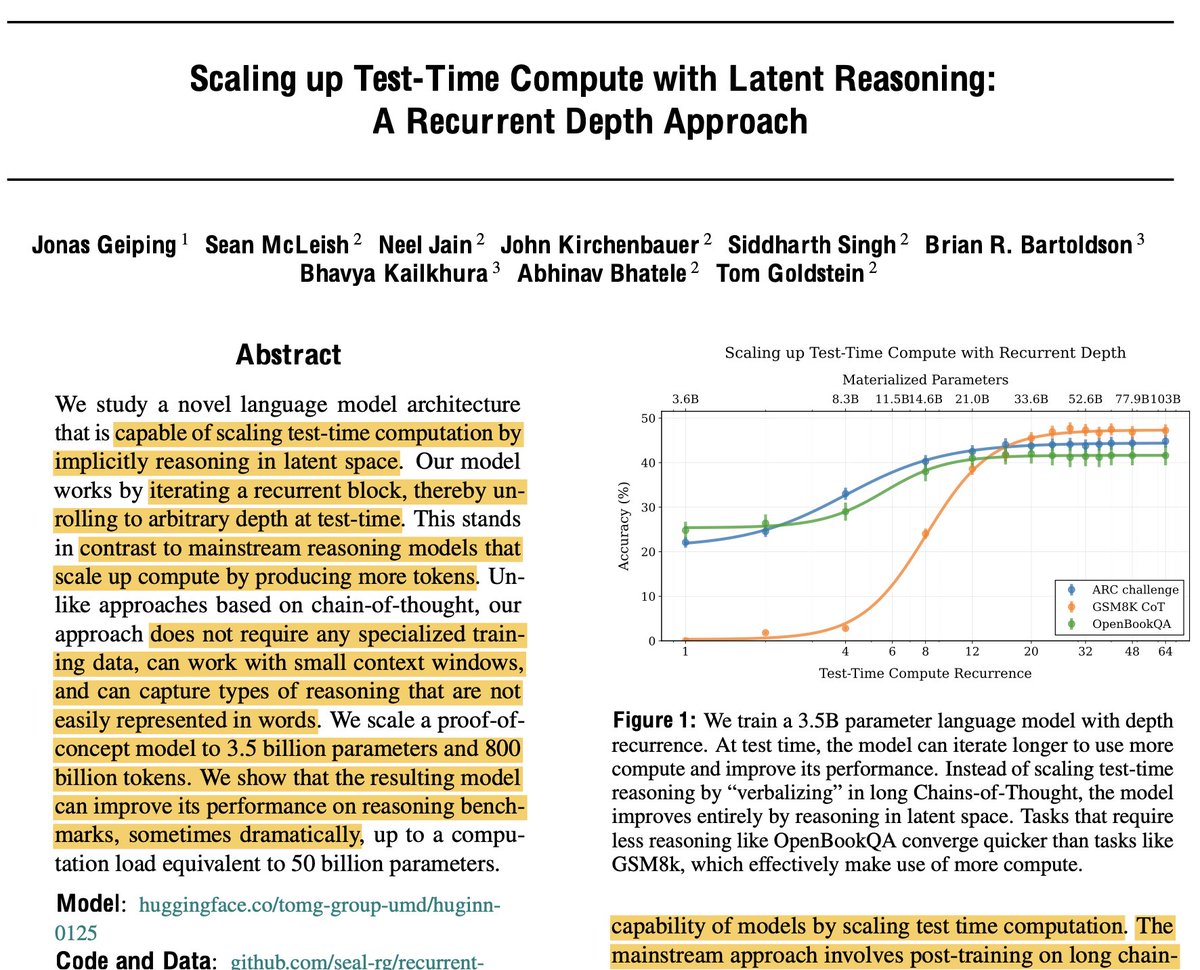

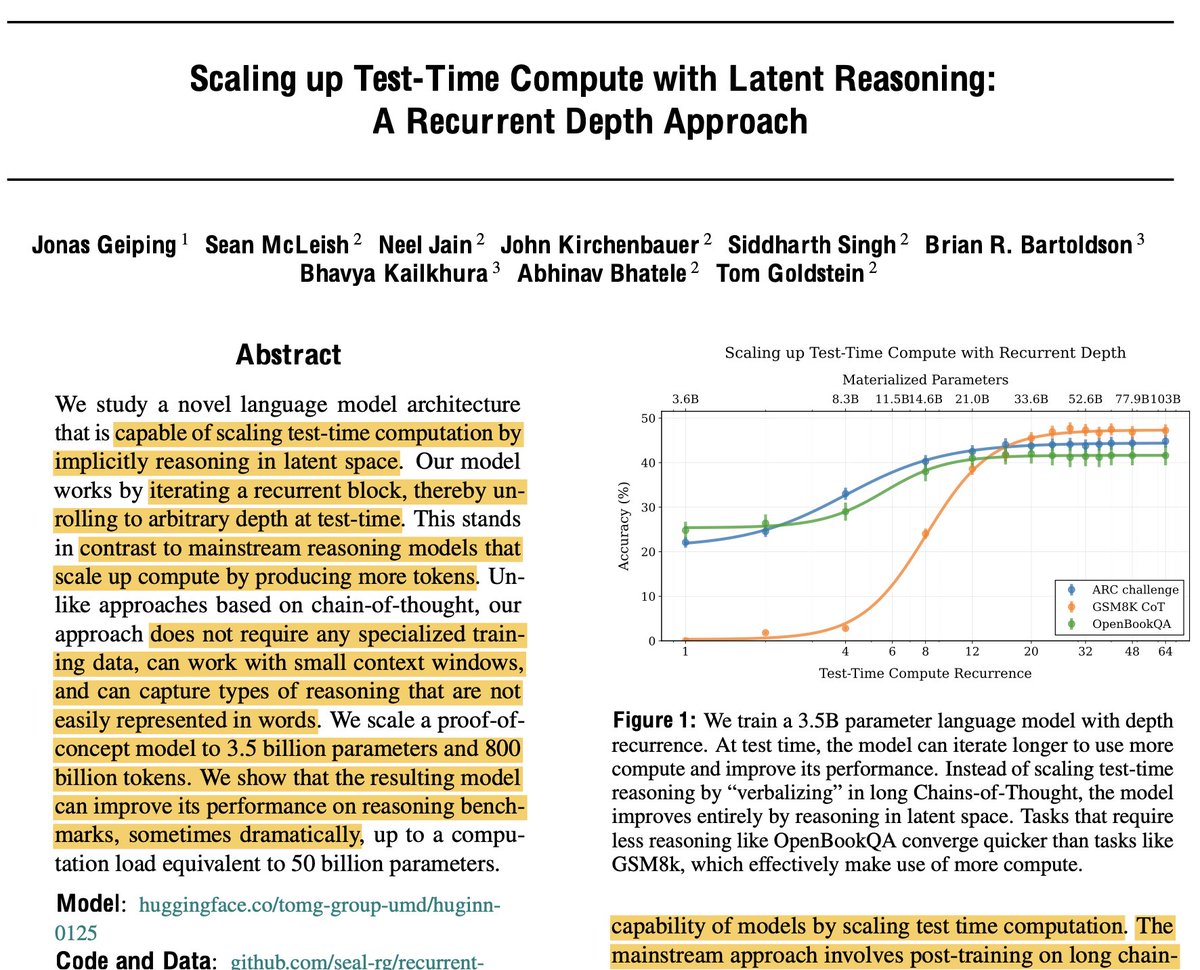

“Thinking models” use CoT to explore and reason about solutions before outputting their answer.

Introducing PaperBench.

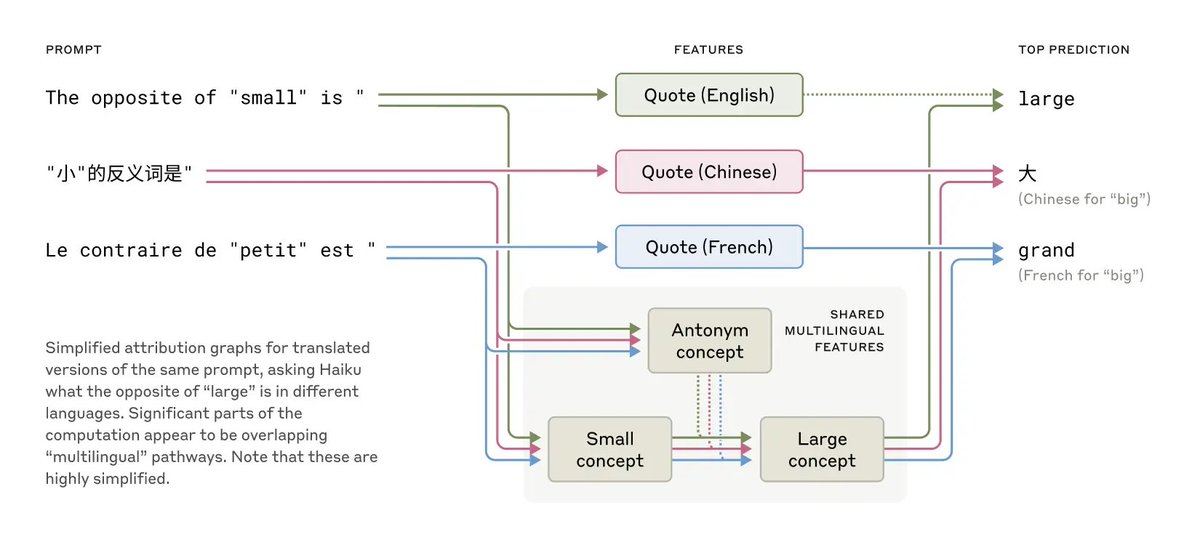

Finding 1: Universal Language of Thought?

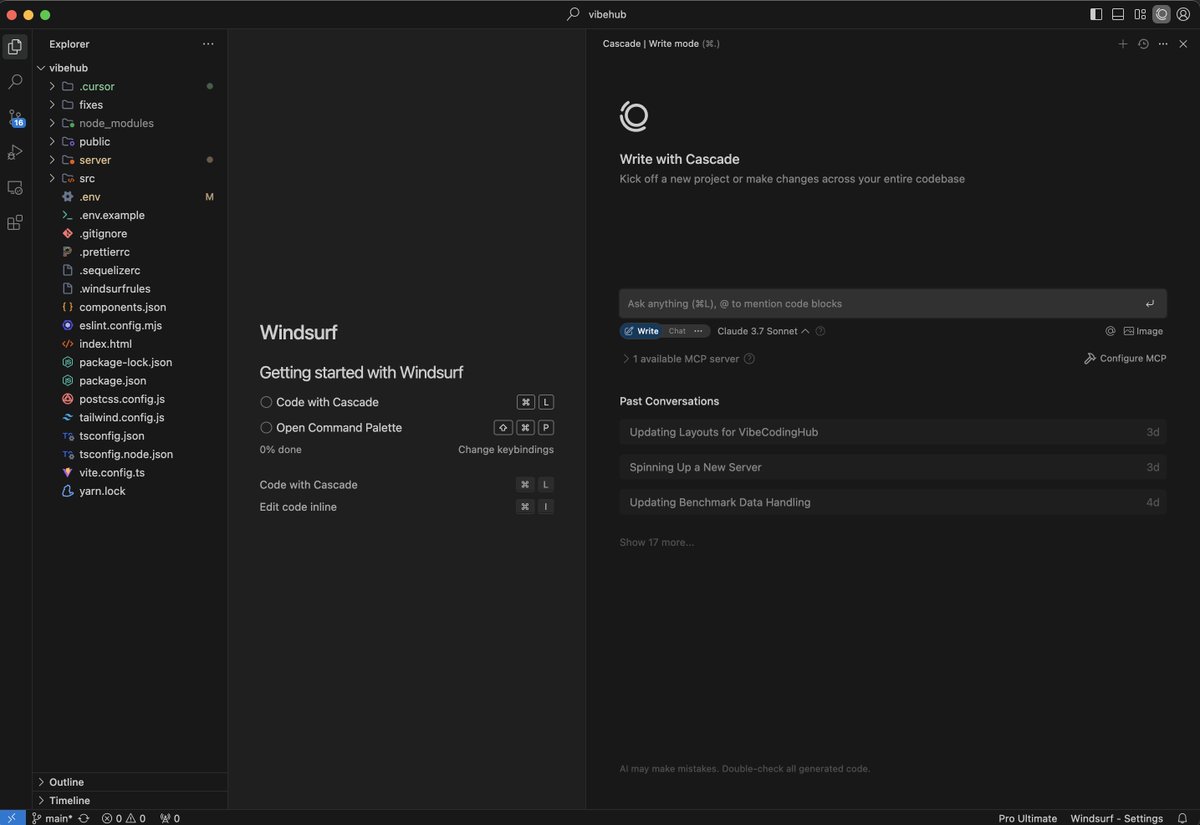

Which tool for vibe coding? 🤔

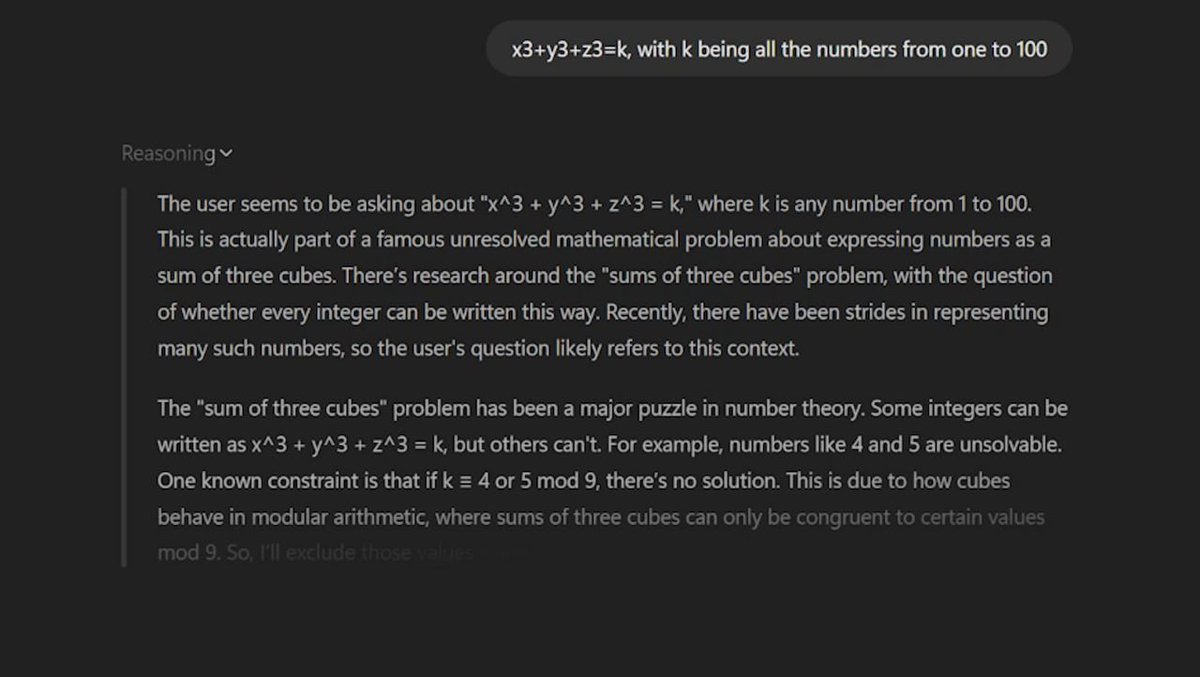

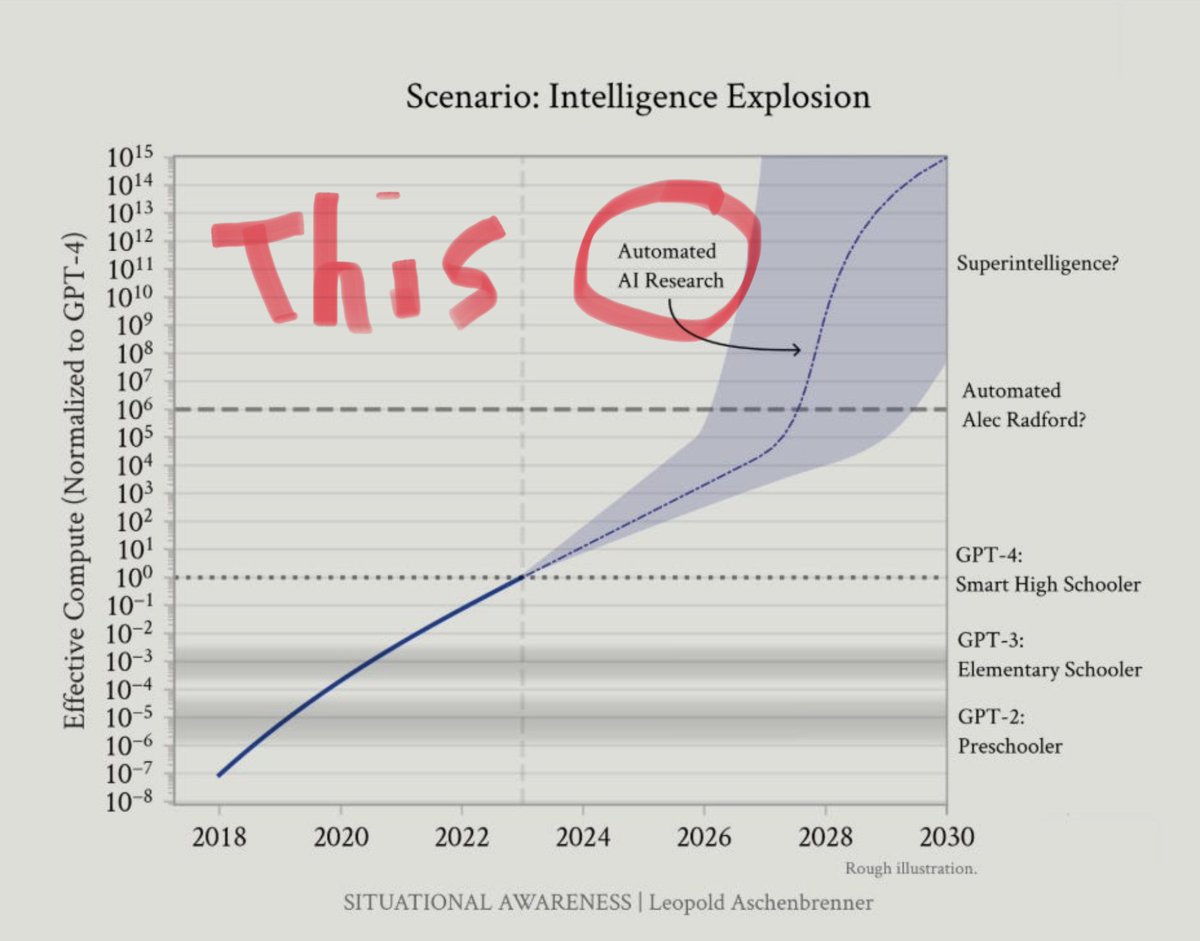

1/ OpenAI’s latest research shows that reinforcement learning + test-time compute is the key to building superintelligent AI.

The key insight:

Dr. Jim Fan, Sr. Research Manager at NVIDIA, points out how odd it is that a non-US company is leading the Open Source AI charge, given that was the original mission of OpenAI. https://x.com/DrJimFan/status/1881353126210687089

2/ Traditional Transformers are static post-training.

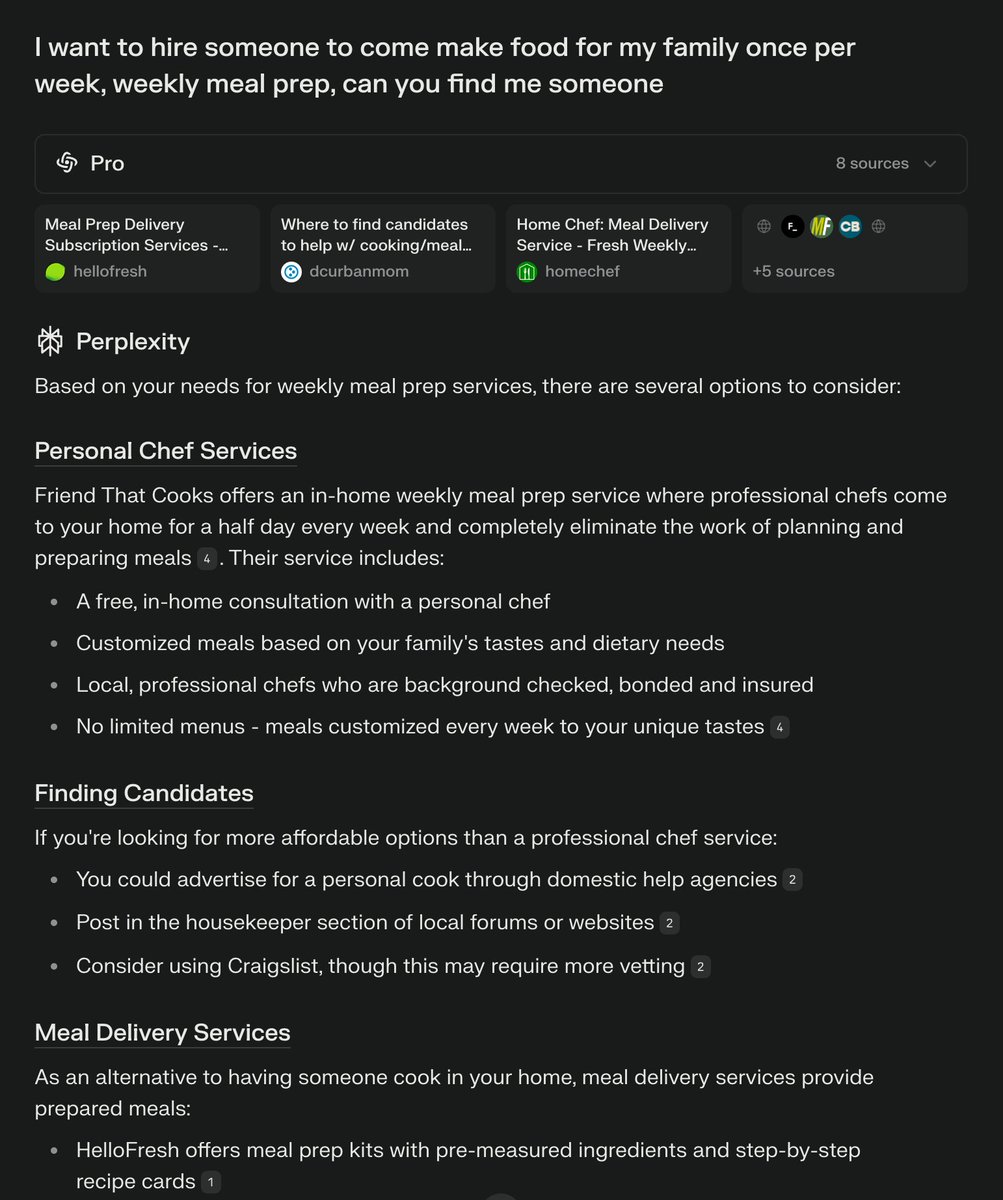

2/ The Problem:

2/9 THE ALLIES LIST

1/ The paper analyzes four key components needed to achieve o1/o3-level performance:

Simple > Complex

2/ 🚀 Mission: AGI for All

Balaji on how incredible the 25% score on FrontierMath really is. https://x.com/balajis/status/1870206318872801766?s=46

o3 is OpenAI's most advanced model yet.