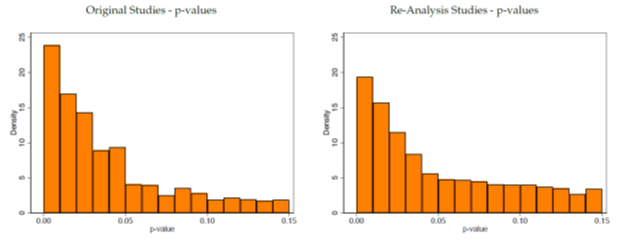

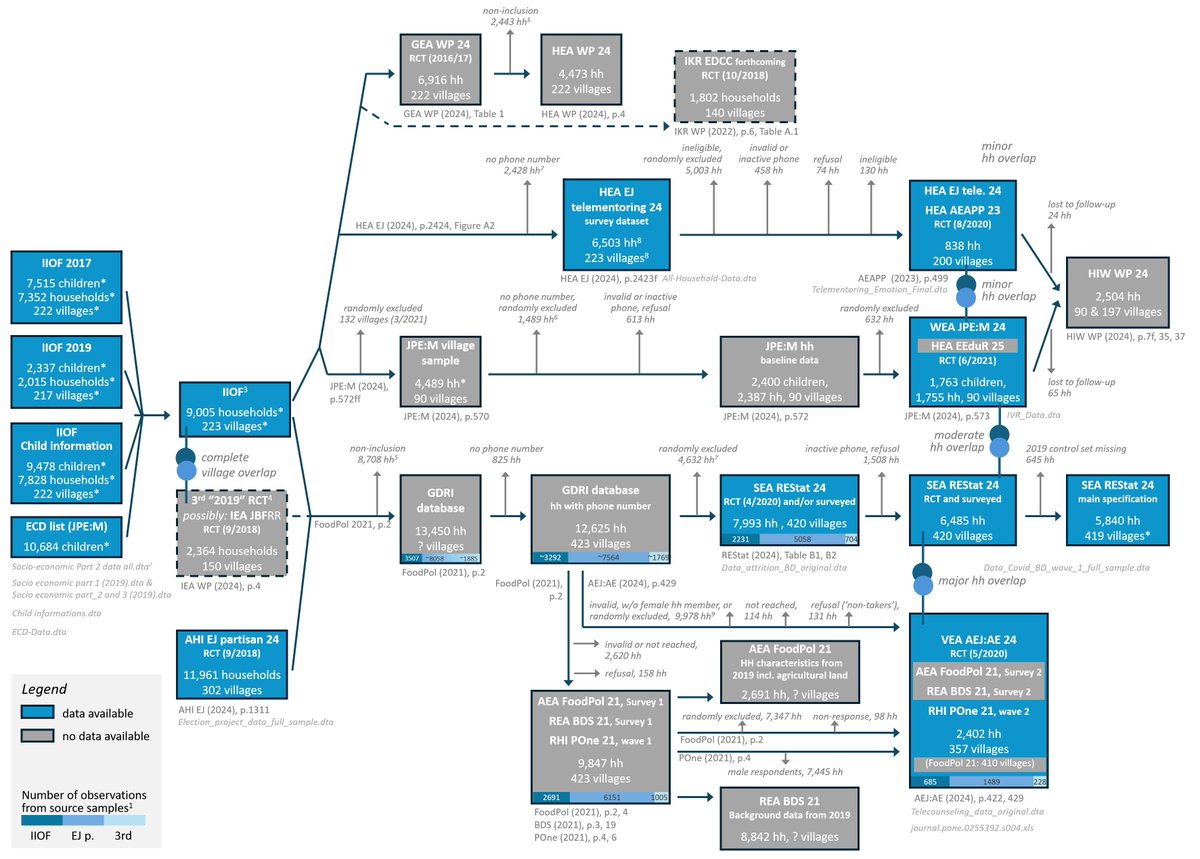

After being alerted about possible misconduct, the I4R is reproducing published papers that use data from a specific NGO (GDRI). This thread releases the first 2 reports and provides more information about the work and responses/statements from authors and journals. 🧵

This effort was only possible because these journals require data and code repositories. This allowed us to compare data across papers and identify undisclosed connections between 15 studies (e.g., overlapping samples, overlapping interventions, reused treatment assignment).

The replication effort has also identified multiple other potential issues in these papers, which are detailed in the individual reports. Author responses are mixed, with some asking to withdraw their names while others are working on responses.

We are working with the editors at multiple journals - some of which have opened their own independent investigations. So far, we only have positive things to report about how journals have handled our reports. Well done across the board!

1st report reproduces “Improving Women's Mental Health During a Pandemic” by Vlassopoulos et al. @AEAjournals. Our full report is available here: osf.io/pvkhy/

Authors received this report on Jan 15. They did not agree on a response. Some released statements, while others provided a short response. Full report, response/statements are here: osf.io/pvkhy/

We have not received a full response so far. As requested, we note that “the other authors are currently working on a detailed response to each of the two reports.”

2nd report reproduces “Raising Health Awareness in Rural Communities: A Randomized Experiment in Bangladesh and India” by Siddique et al. @restatjournal. Authors received our report on Feb 6. See the same statements from some of the authors and the full report here: osf.io/c3k6f/

Let’s take a moment to thank all our amazing replicators: Jörg Ankel-Peters, Juan Pablo Aparicio, Gunther Bensch, Carl Bonander, Nikolai Cook, Lenka Fiala, Jack Fitzgerald, Olle Hammar, Felix Holzmeister, Niklas Jakobsson, Anders Kjelsrud, Andreas Kotsadam, Essi Kujansuu, ...

Derek Mikola, Florian Neubauer, Ole Rogeberg, Julian Rose, David Valenta, Matt Webb, and Michael Wiebe, and Bangladeshi colleagues who wish to remain anonymous.

We have 2 additional completed reports, which have been shared with some of the same authors. We will make those publicly available shortly and keep you posted.

(1) “Parent-Teacher Meetings and Student Outcomes: Evidence from a Developing Country” by Islam (2019) Euro Econ Review

(2) “Partisan Effects of Information Campaigns in Competitive Authoritarian Elections: Evidence from Bangladesh” by Ahmed et al. (2024) Economic Journal @EJ_RES

We are currently reproducing: “Delivering Remote Learning Using a Low-Tech Solution: Evidence from a Randomized Controlled Trial in Bangladesh” by Wang et al. JPE: Micro @JPolEcon.

Our report should be completed by Wednesday. It will then be shared with the authors/editors.

The editors at JPE M have requested additional materials which have been shared with us. Again, only positive things to report on how editors have handled our reports.

The following paper was accepted at JEEA (@JEEA_News) on Feb 5th: “Centrality-Based Spillover Effects: Evidence from a Randomized Experiment in Primary Schools in Bangladesh”. Two of the authors also coauthored the paper covered in the AEJ report (and one of them the paper covered in the Restat report).

The editor in chief at JEEA told us that the lead author contacted the journal on February 17th to formally withdraw their paper.

We are now trying to reproduce all GDRI studies. We started with those that have a replication package. This explains why we are releasing reports for studies in AEJ AE, Restat, EJ and EER first. Those are amazing journals which enforce their data and code availability policy.

Again - we stress that our reports were only possible because these journals required the authors to make underlying study data available. These policies are essential to ensure that empirical results are reproducible.

One study often reused and discussed in our AEJ AE report is “Early childhood education, parental social networks, and child development” by Guo et al. This paper is not published yet. The authors reported to us that they withdrew their paper from publication consideration.

Another study discussed in our AEJ AE report is “Food insecurity and mental health of women during COVID-19: Evidence from a developing country” at PLOS One @PLOSONE. We shared our AEJ AE and Restat reports with the authors this Friday.

The lead author (TR) and Md GH reached out to PLOS One on Saturday to withdraw their authorship from the article. We will provide a new report to PLOS One and the authors tomorrow.

We will provide regular updates about our work and editors’ decisions. We are pretty certain we will have an update for one article very shortly as the editor in charge is moving fast and already confirmed all our findings.

HUGE thanks to our 15+ replicators who have been working on this project for 3 months now. They are not paid, and this is all pro bono. Their work should get published as comments! It has been a pleasure working with you all. And somehow this feels like the beginning!

More soon!

#I4R_R4Science

#EconTwitter #EconSky
