The Institute for Replication (I4R) works to improve the credibility of science by promoting and conducting reproductions and replications.

As a reminder, our comment published here () showed that (i) randomization never occurred; (ii) irregularities in baseline scores, which for the same students vary systematically in ways that are unique to either the treatment or control group; ...sciencedirect.com/science/articl…

https://twitter.com/I4Replication/status/1920816454003257417

We were contacted by the original author in mid-June. He asked to release a response as an I4R discussion paper. (He had initially declined our invitation to respond, pointing to his re-analysis published by JPopE as a response.)

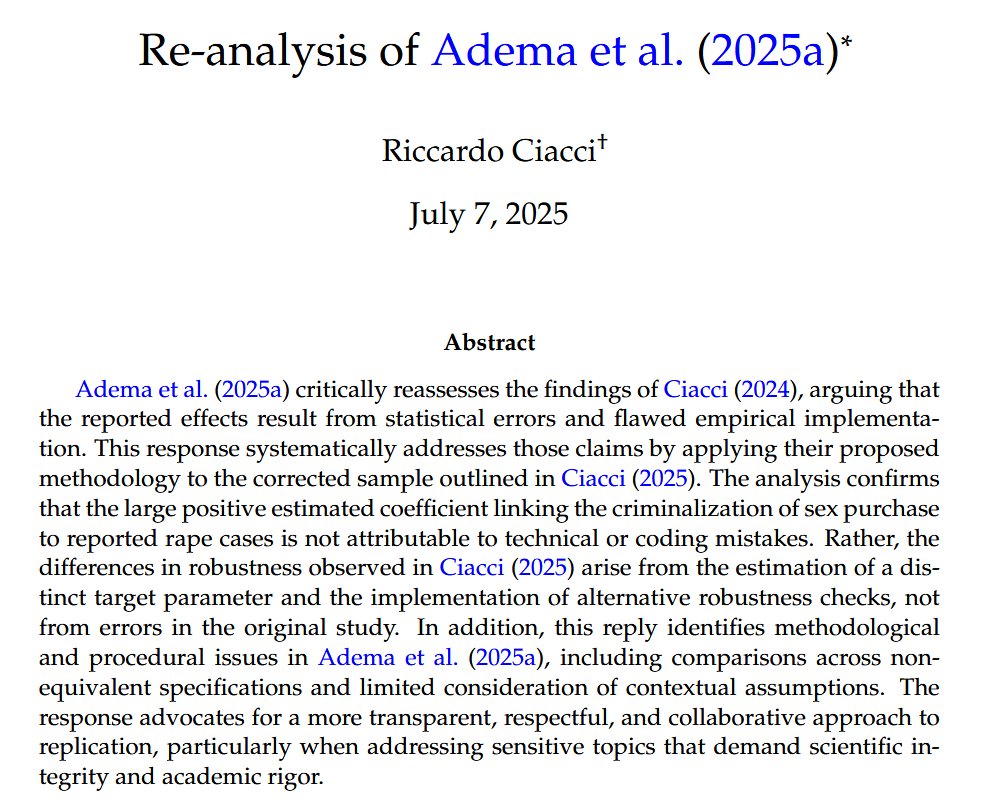

Let’s start with the original study. The author writes (abstract): “This paper leverages the timing of a ban on the purchase of sex to assess its impact on rape offenses. ...

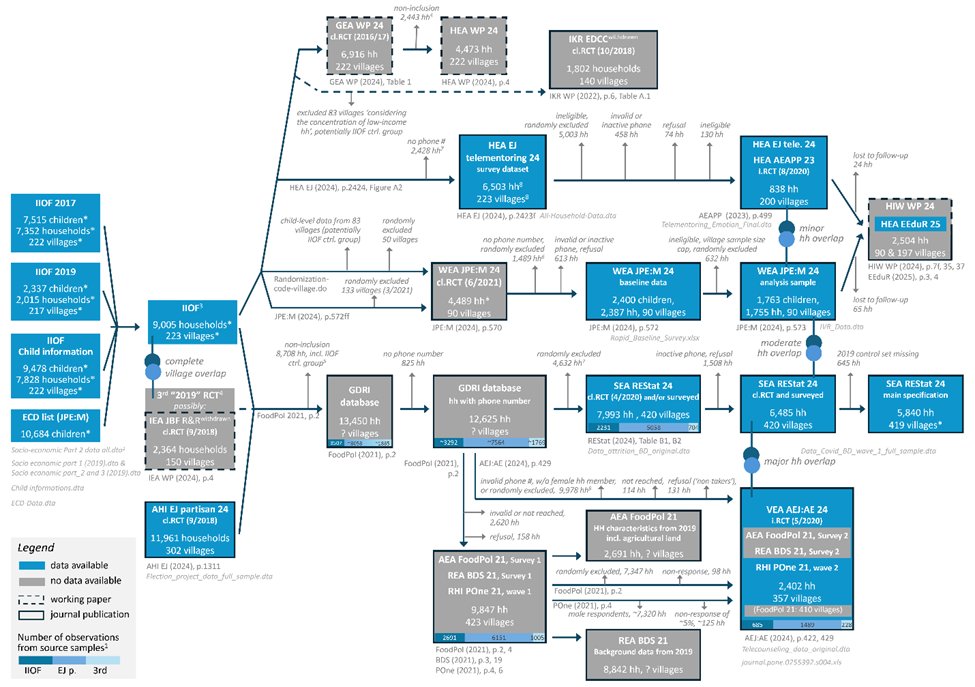

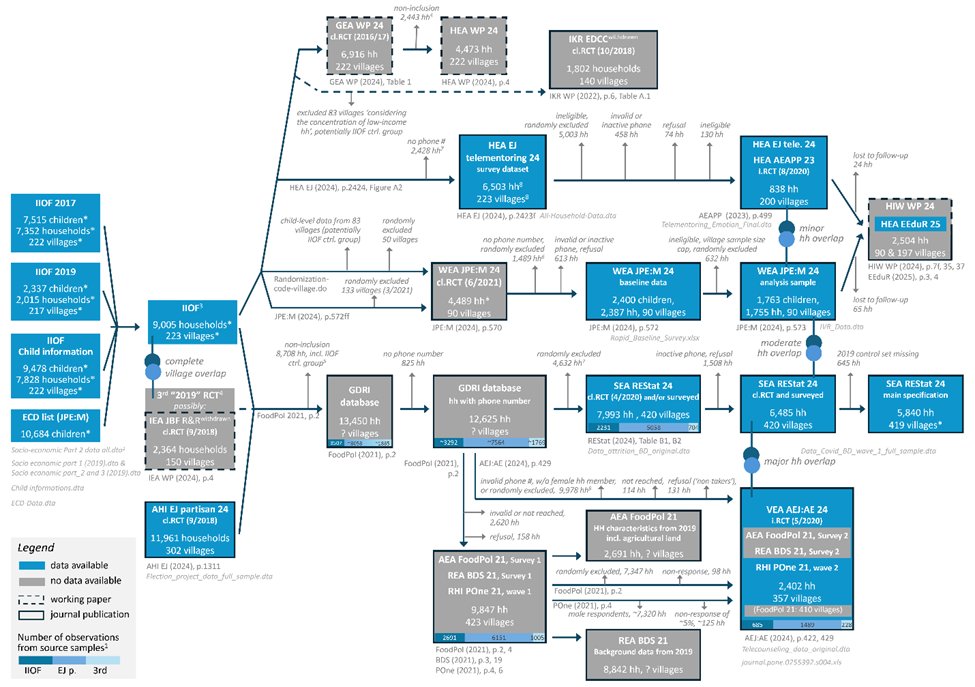

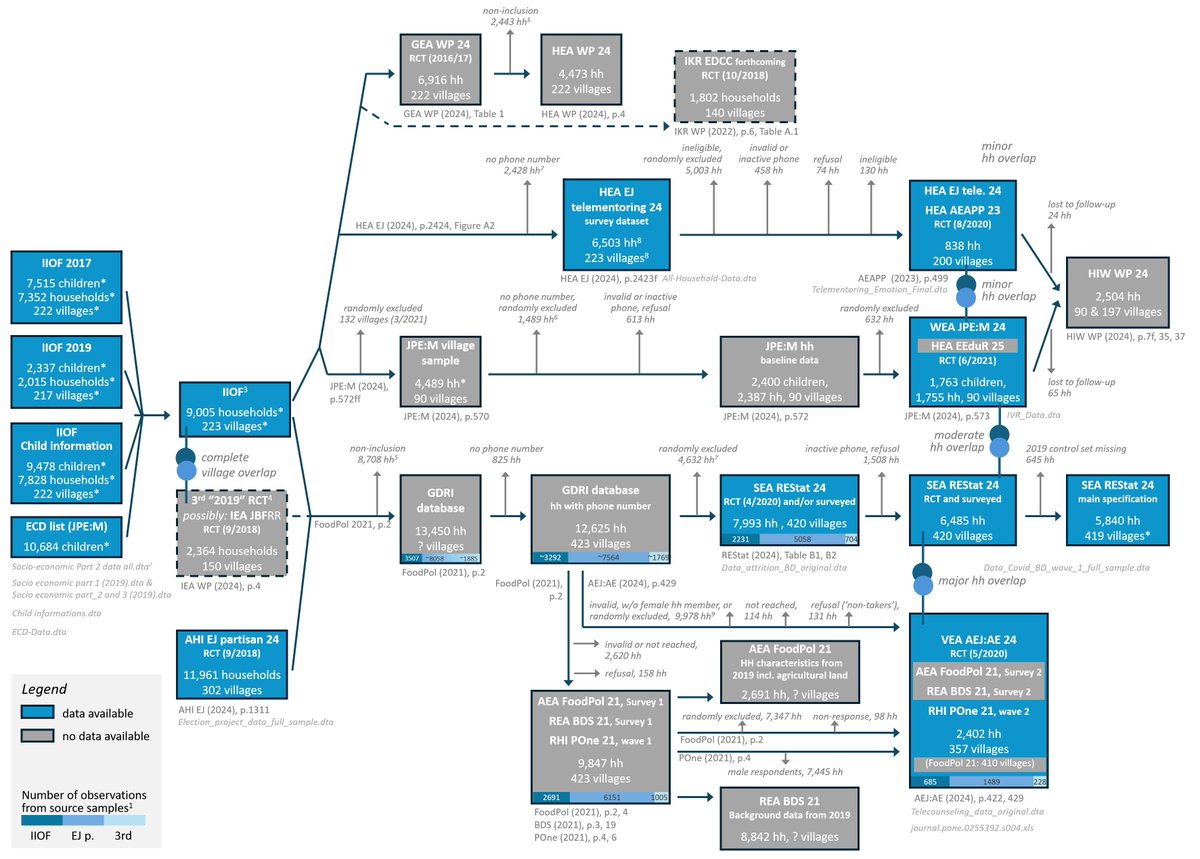

This effort was only possible because these journals require data and code repositories. This allowed us to compare data across papers and identify undisclosed connections between 15 studies (e.g., overlapping samples, overlapping interventions, reused treatment assignment).

288 researchers (profs and graduate students) were randomly assigned into 103 teams across three groups: human-only, AI-assisted, and AI-led. The task? To reproduce results from published articles in the social sciences. How did each fare?

1- Let's start with the obvious. Psychologists' views on reproducibility are VERY different from those of economists and political scientists. The latter quickly run the code, check for coding errors, and spend hours thinking about robustness checks....

2/ Data: They analyzed 604 Job Market Papers (JMPs) from economics PhD candidates across 12 top-ranked universities from 2018 to 2021. The goal? To uncover the factors that influence who lands those coveted academic positions.

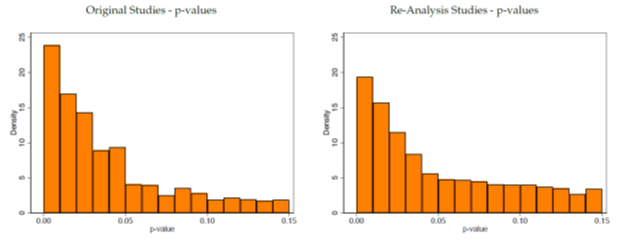

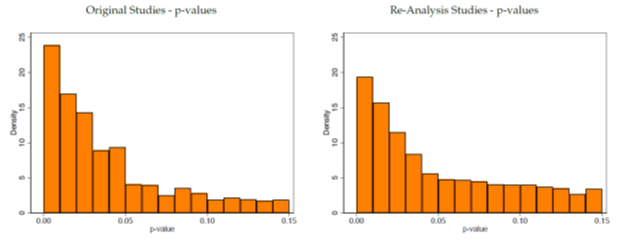

Our focus is on articles published in leading economics and political science journals (2022 onwards). These journals all have a data and code availability policy, and most have a data editor. Keep this in mind when reading this thread.
