Apple's accumulating public failures to deliver on promised features & full potential of its products are the direct result of Tim Cook's beancounter mismanagement, which boils down to selling the worst product they can get away with. This is an effortpost thread on Apple AI. /1

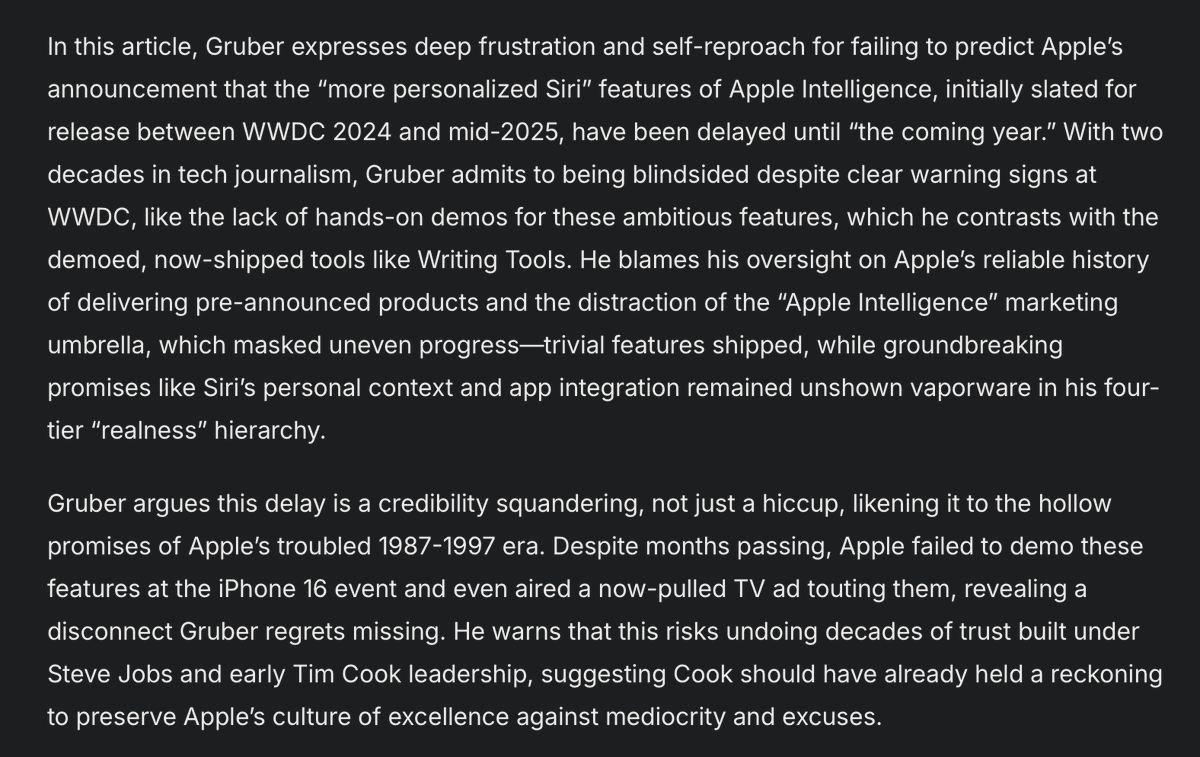

This week John Gruber on Daringfireball wrote the most scathing piece I can ever remember from him against Apple. His criticisms are entirely correct and worth a read, but they are incomplete. Here's a solid Grok tl;dr. /2 daringfireball.net/2025/03/someth…

What Gruber misses is when & why these catastrophic failures began. We're going to have to jump back in time more than once, and jump around to seemingly disconnected aspects of the business to tie this all together, which is why people miss it. /3

This is a story about development cycles of both HW & SW. The June 2024 Apple developer conference announcement of Apple Intelligence was plainly a rushed grab-bag of half-baked ideas. Whatever gets announced in June was already planned & locked in by the previous December. /4

Immediately after any major OS release ships around the end of calendar Q3, engineering & marketing get together to define the tentpole features for the following year's release. Those late 2023 conversations clearly amounted to "AI all of the things!" /5

This was an absurd unforced error on Apple's part. Rather than the mature approach of "this powerful new AI stuff can enhance our customers' experience," they clearly went instead with "how many places can we add AI quickly?" That creates problems instead of solving them. /6

But if we plug these events into the broader timeline, it all starts to make sense. Apple hasn't been sleeping on AI in the slightest. It notoriously poached Google's head of AI & Search John Giannandrea in 2018 to head Siri. His urgent task was to recruit more top AI talent. /7

That necessitated a radical cultural change for Apple engineering. AI guys are primarily academics, which means they publish. And the last thing anyone at Apple does is talk about what they do at Apple. John made sure hires would be able to publish & speak on some work. /8

But because AI at Apple was essentially sequestered in Siri's silo, what his team was likely to work on would be a) voice-only, & b) cloud-only. Add to the problem the profound technical debt of Siri's ancient architecture, and his team wasn't able to deliver new features. /9

Fast-forward to early 2023, we see a company-wide focus on the promise of AI. But this timing also shows what they didn't see coming. GPT-3.5 had just come out three months earlier. Meta's LLaMA-1 was about to ship. Nothing else that probably comes to mind today existed yet. /10

What this means is that a mere two years ago, there were no serious, commercially viable examples of on-device LLMs doing high-value work. Apple's engineers were certainly thinking about it as a hypothetical, but other than slivers of features buried deep, nothing yet existed /11

Here's what shipped to the public between Apple's internal AI conclave & the feature review for the 2024 OSes. While many of these were either cloud-commercial or too big to run on most PCs, we finally saw proof of concepts of real, viable local AI. This stuff became reality. /12

This is precisely why the Fall '23 feature review was in a panic over delivering AI any way possible. Apple had yet to invest remotely to the same degree as Meta, Google, Amazon, and half a dozen other highly credible competitors each spending billions on the problem. /13

So how is the current mess Tim's fault? Doing something well doesn't require being first, and Apple seldom has been in the past. Two reasons: profit margins vs. product headroom, and HW vs. SW lead times to pivot. Tim screwed Apple on both counts by being incredibly greedy. /14

Before we get into either, it is necessary to understand the gating factors for viable on-device AI. The first is compute. Apart from bleeding-edge custom silicon for LLMs, pretty much all useful AI runs on GPUs, due to the nature of the math & architectures adopted to date. /15

Not only is a bigger, wider, & faster GPU better, it can easily decide whether the AI is usable at all. And once you're overwhelmingly compute-rich, the experience becomes a delight that almost seems like magic. Not enough, and you have no feature at all. /16

The other gating factor is RAM, and this one is currently a binary check. If a given model won't fit entirely in available memory, it flat out cannot be used, period. Unlike other programs, you can't currently page these on & off disk, trading performance for access. /17

Since everyone is up against this hard memory limit (yes I'm ignoring FlashAttention for consumer here), there are improvements being made at break-neck speed to reduce model sizes more & more without sacrificing accuracy. That's the other crazy thing about AI code. /18

Normal apps either work or don't, but the results are deterministic. With AI, you can play lots of tricks to reduce the size of the model to fit into that hard RAM limit, but it makes the output worse & worse, at some point unusably so. /19
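To put rough numbers on that size-versus-quality trade: one common size-reduction trick is quantization, storing each weight in fewer bits. The thread doesn't name it, so treat it as an illustrative assumption. Below is a back-of-envelope sketch, where `weights_gb` is a hypothetical helper and the 7-billion-parameter model is just an example size. Real footprints run larger once context cache and runtime overhead are added.

```python
def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal GB.

    Counts the weights only; ignores context cache, activations,
    and runtime overhead, so treat the result as a lower bound.
    """
    bytes_per_weight = bits_per_weight / 8
    return params_billions * bytes_per_weight  # billions of bytes == GB


# Illustrative 7B-parameter model at two precisions.
full_precision = weights_gb(7, 16)  # 14.0 GB: far too big for a 6GB or 8GB device
four_bit = weights_gb(7, 4)         # 3.5 GB: fits, at some cost in output quality
```

Fewer bits per weight shrinks the footprint linearly, which is why these tricks are so tempting against a hard RAM ceiling. The cost is the degradation in output described above.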

So the real world calculus for which model to use under these constraints comes down to this heuristic: for a given AI model, once you reach your personal minimum good-enough to delightful performance speed, you want the largest version that will fit in your RAM for accuracy. /20
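That heuristic can be sketched in a few lines of Python. This is a minimal illustration, not anyone's actual implementation: `Variant` and `pick_model` are hypothetical names, and every size and speed figure below is an invented placeholder.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Variant:
    name: str              # hypothetical label for one size of a model
    size_gb: float         # memory needed to hold the model
    tokens_per_sec: float  # speed measured on this particular device


def pick_model(
    variants: Sequence[Variant],
    free_ram_gb: float,
    min_tokens_per_sec: float,
) -> Optional[Variant]:
    """Return the largest variant that fits in RAM and is fast enough."""
    usable = [
        v for v in variants
        if v.size_gb <= free_ram_gb                 # hard limit: must fit entirely
        and v.tokens_per_sec >= min_tokens_per_sec  # personal good-enough speed
    ]
    # Among the usable ones, bigger means more accurate.
    return max(usable, key=lambda v: v.size_gb, default=None)


# Placeholder numbers, for illustration only.
VARIANTS = [
    Variant("small", 2.0, 60.0),
    Variant("medium", 4.5, 30.0),
    Variant("large", 9.0, 12.0),
    Variant("huge", 40.0, 3.0),
]
```

With 6GB free and a 10 tokens/sec floor this picks "medium". With 16GB free it picks "large". With too little RAM for any variant it returns `None`, which is the "you get nothing" case.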

More GPU=better experience. More RAM=either it works or it doesn't. And this is where Tim's unforgivably miserly bean-counting struck the fatal blow many years ago. Apple is a platform vendor. Your iPhone or Mac will serve you for many years, with constant SW improvements. /21

As a platform vendor with over a billion devices in use today, Apple can't just ship new tentpole features that only work on the newly released HW. Features have to work on a significant fraction of the entire fleet, or they're non-starters. /22

There was lots of confusion & speculation about the baby Apple Intelligence features shipped last fall, due to the confusing HW requirements. Some people eventually figured out what I outlined above: the one thing they all have in common? 8GB RAM. Nothing else matters. /23

That $800 iPhone 15 you bought nine months ago will never be able to use AI because it only has 6GB of RAM. Its GPU is plenty fast – far faster than the M1. It could scream through those operations, but there's not enough memory to load the model, so you get nothing. /24

"Well that's to keep prices low. You can always buy the $1,000 phone if you want these premium features." How much did Tim Cook profit by holding back 2GB from your $800 phone? $3-5 tops. That is one half of one percent profit margin, a rounding error. /25

This is exactly why Tim is always talking about "customer sat[isfaction]." As long as people say they're alright with whatever they have, he can convince the gullible board the products are good. But that's not how any of this works, and we all know it. /26

Consider the famous Henry Ford quote. What would the customer sat numbers have looked like for horses before the Model T? And what would they have looked like after a horse user got to use Ford's newfangled car for a month? That is how Tim lies to the board, and all of us. /27

Tim put a rounding error's worth of dollars in his pocket when he sold you a crippled phone that lacked the headroom to be future-proof. You might be satisfied with what it can do, but the customer sat metric is simply blank for all the things you can never do at all. /28
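For anyone who wants to check the "rounding error" arithmetic, here is a quick sketch using only the thread's own figures: an estimated $3-5 for the extra 2GB on an $800 phone. `percent_of_price` is a hypothetical helper, and it measures the part cost against the retail price, since Apple's actual per-unit margin isn't given here.

```python
def percent_of_price(part_cost_usd: float, retail_price_usd: float) -> float:
    """Part cost expressed as a percentage of the retail price."""
    return 100.0 * part_cost_usd / retail_price_usd


# Thread's estimate: $3-5 for 2GB more RAM on an $800 iPhone.
low = percent_of_price(3, 800)   # 0.375 percent
high = percent_of_price(5, 800)  # 0.625 percent
```

Either end of the estimate lands around half a percent of the sticker price.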

The entire premise of future-proofing is not to get caught unable to meet new demands with existing tools. The minimum RAM shipped in Macs has only been 8GB since 2018, seven years after Steve Jobs died. They actually shipped 4GB Macs in 2018! /29

It wasn't until after this Apple Intelligence fiasco began to unfold that they finally increased the minimum Mac RAM to 16GB last October. Know what the 8->16GB bump cost them? Between $10-20, or a maximum of 2% of the profit margin on the worst Mac. /30

Here are the long-term effects of this unmitigated greed & stupidity. It will be SEVEN YEARS before Apple can ship any AI models that are larger than 2GB which will work on all supported iPhones. And 2GB models are a joke, no matter how clever they get implementing them (MoE) /31

The other huge problem Tim caused is the HW vs. SW lead times to pivot once one of these cock-ups is found. The Siri & OS guys are hard at work trying to do the best they can on tiny local models that won't be embarrassing given the bankrupt hardware minimums Tim gave them. /32

But pivoting Johny Srouji's silicon roadmap takes years. And we saw the first clear signs of the fallout there last week with the announcement of an M3 Ultra in the Mac Studio, despite the M4 being current. And this is where Apple marketing begins compounding its lies. /33

When challenged on why on earth Apple is shipping an M3 Ultra in 2025 when the M4 has been out for ten months, they spun, "not every chip generation will have an Ultra config." While technically true due to Tim's greed, it is conscious deception on their part. /34

Here's what really happened. Jumping back to late 2020, Apple shipped the very first M1 silicon in Macs alongside the A14 Bionic from the iPhone 12 upon which it is heavily based. Chip design is an incredibly difficult task, and reuse of elements is key to making variants. /35

CPU designs take 2-3 years from conception to shipping. Apple was able to significantly reduce the overhead of making its own Mac chips by reusing, rearranging, & multiplying elements from its co-development of iPhone silicon. /36

The M1 took A14 Bionic elements, duplicated two more cores, boosted the clock speed, doubled the L2 cache & GPU cores. The Pro & Max added more CPU & GPU cores, more cache, and a much faster memory bus. Significant engineering work, but not starting from scratch. /37

The game-changer was the M1 Ultra in the brand new Mac Studio 16 months after the M1 shipped. Johny's team developed the UltraFusion silicon interposer that let them glue two M1 Maxes together, doubling everything, including RAM to 128GB. /38

Nobody knew what game they'd changed yet, but look at what just happened vis-à-vis AI models. Apple stuffed a whole bunch of GPU cores into a small box along with 5x more RAM than you could get on the best PC GPU at the time. This was an AI machine waiting to happen. /39

They then repeated a very similar process between June '22-'23 with the M2 through M2 Ultra, which shipped in both the Studio and much-neglected Mac Pro. They also pushed the RAM to an eye-watering 192GB. And this is where Tim's beancounter trouble started. /40

Apple conceals sales figures, but there can be no doubt that these halo configs sold very poorly. The market for that much compute at the time was simply tiny – because no one was running massive LLMs locally two years ago. /41

Just four months after the M2 Ultra shipped, Apple delivered all three lower-tier M3s at once in Oct '23. This was tremendously impressive at the time, because the dies for each had less commonality than previous generations, which took more work to design & qualify. /42

And an incredible seven months after that, the M4 shipped first in the iPad Pro, and all lower configs shipped one year after the M3s in Oct '24.

Now overlay all of this action with the software pivot late '23. Right after the M3s shipped, Apple collectively lurched on AI. /43

One factor was undoubtedly that by summer '23, people were discovering they could use tools like llama.cpp to run large models on those very high memory configs of the M1 & M2 Ultra (which had just come out). A lot of people bought maxed out Minis & Studios solely for LLMs. /44

Between mid-'23 and this winter, there's been an explosion of interest & adoption in Mac local LLMs thanks to amazing tools like @lmstudio & @exolabs. And that has driven people to stuff as much memory as they could into whatever Mac they've bought recently. /45

Great news for Apple, right? In one sense, absolutely. The unusual unified memory architecture and good-enough GPUs let Apple stumble into being the only game in town for large models at home. And a few years from now, things are going to be amazing on that front. /46

The problem is these Ultras and marketing's claim about how long it takes to make them. Let's put all of this together, bearing in mind that at a bare minimum, you're looking at 12-18 months between a silicon design decision, and what does (or doesn't) ship to customers. /47

- June '22-'23 M2 through Ultra shipped, with Mac Pro ending the transition from Intel.

- Oct '23 all M3s ship.

- May-Oct '24 all M4s ship

- March '25 we find out there won't be an M4 Ultra

When that decision was made is the cock-up here, and it's all about that UltraFusion. /48
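The gaps in that timeline are easy to sanity-check. This is a small sketch at month granularity only: the day of the month is a placeholder, `months_between` is a hypothetical helper, and the dates are the public ship months for each chip's first appearance.

```python
from datetime import date


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from one ship month to another."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


# First-ship months; the day is a placeholder and is never used.
SHIPPED = {
    "M1": date(2020, 11, 1),
    "M1 Ultra": date(2022, 3, 1),
    "M2 Ultra": date(2023, 6, 1),
    "M3": date(2023, 10, 1),
    "M4": date(2024, 5, 1),
    "M3 Ultra": date(2025, 3, 1),
}

m1_to_ultra = months_between(SHIPPED["M1"], SHIPPED["M1 Ultra"])  # 16
m2u_to_m3 = months_between(SHIPPED["M2 Ultra"], SHIPPED["M3"])    # 4
m3_to_m4 = months_between(SHIPPED["M3"], SHIPPED["M4"])           # 7
m4_to_m3u = months_between(SHIPPED["M4"], SHIPPED["M3 Ultra"])    # 10
```

Set those gaps against a 12-18 month minimum from silicon decision to shipping product and it's clear how early each of these calls had to be locked in.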

The incredible complexity of that interposer isn't something you can just bolt onto a chip design later on because you feel like it. The only way there can be an M3 Ultra is if the M3 family was designed from the outset to have an Ultra config, design that happened in 2022. /49

When the Studios and Mac Pro sold poorly, Apple made the call in the last quarter of '23 to cancel revising the Studio or Mac Pro with an M3 Ultra – too low volume to be worth it. Tragically this was the precise moment when Apple was realizing local compute was vital. /50

By mid '24, when all those high-memory configs were selling like crazy (relatively) due to LLMs, Apple had already made two terrible mistakes. They had killed the M3 Ultra that could've sold right into that demand. And worse, they had deleted the UltraFusion from the M4 entirely. /51

There will never be an M4 Ultra not due to some policy, but due to one of the worst near-misses in computing history. And given how long it took them to emergency ship the M3 Ultra (which was probably initially for Private Cloud Compute), the M5 might not be interposed either /52
