AGI by 2027?

I spent weeks writing this new in-depth primer on the best arguments for and against.

Starting with the case for...🧵

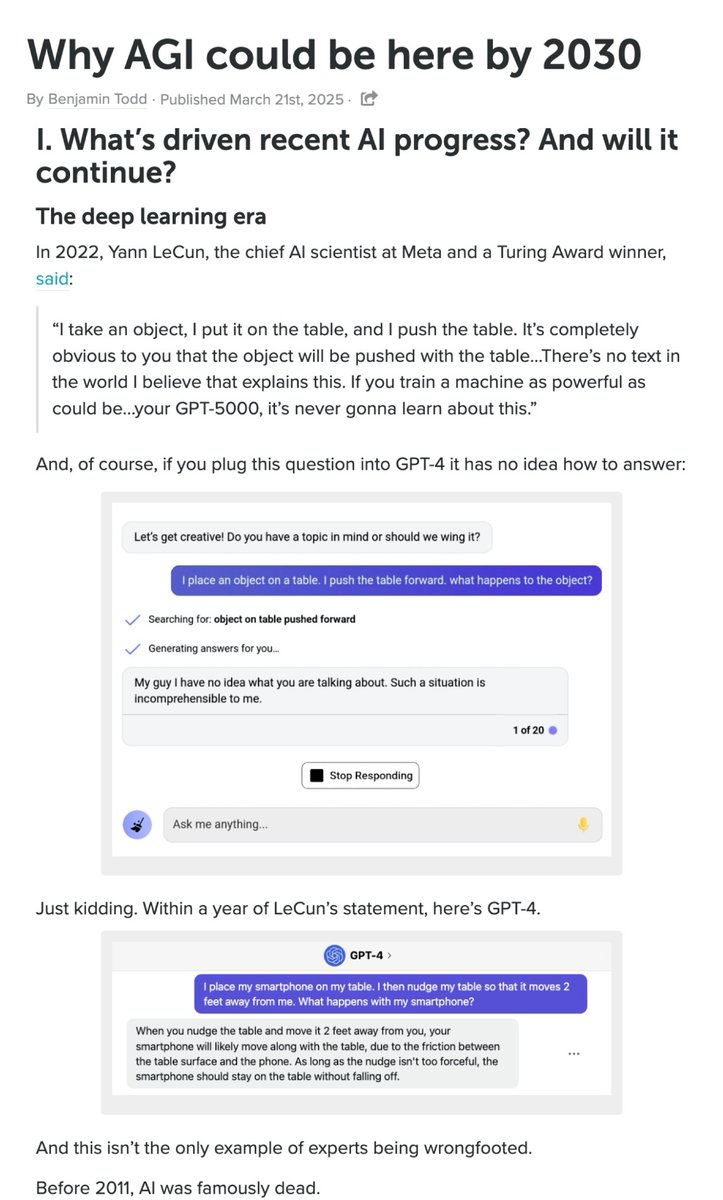

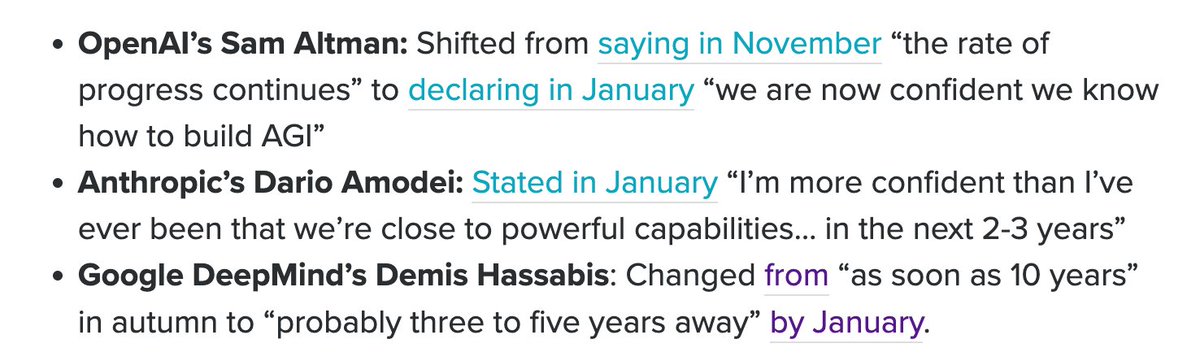

1. Company leaders think AGI is 2-5 years away.

They’re probably too optimistic, but shouldn't be totally ignored – they have the most visibility into the next generation of models.

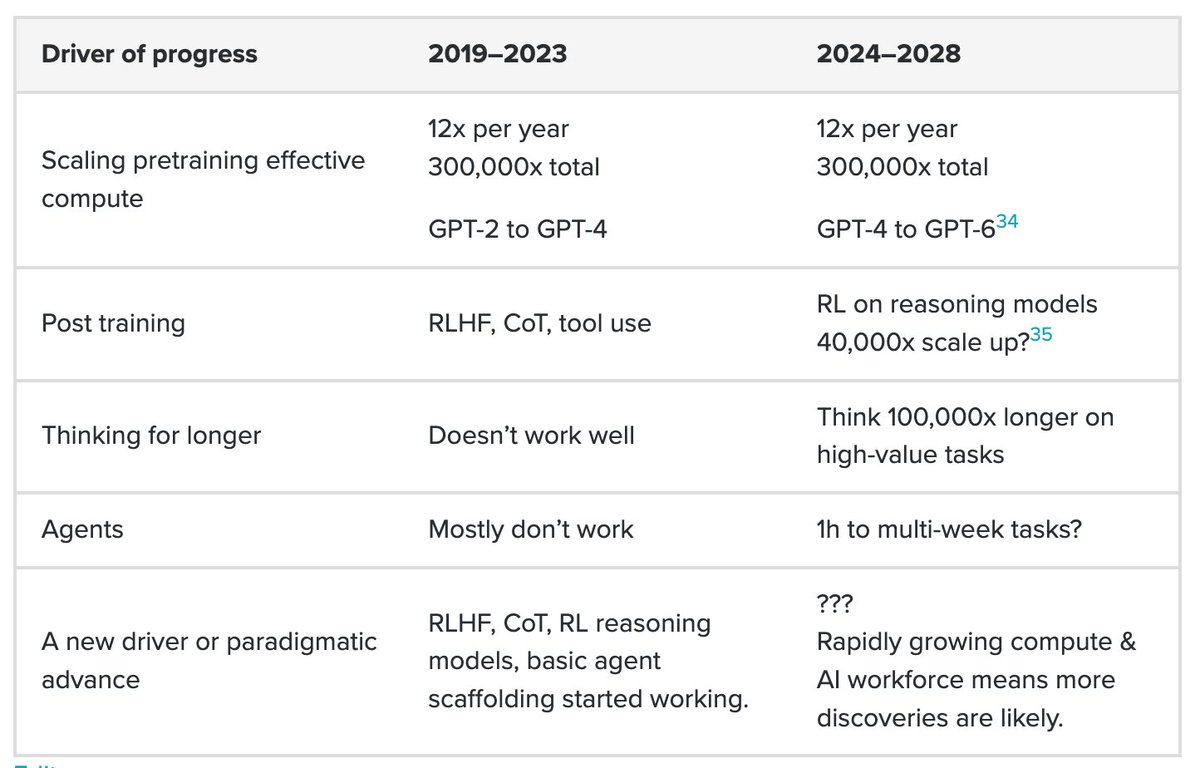

2. The four recent drivers of progress don't run into bottlenecks until at least 2028.

And with investment in compute and algorithms continuing to increase, new drivers are likely to be discovered.

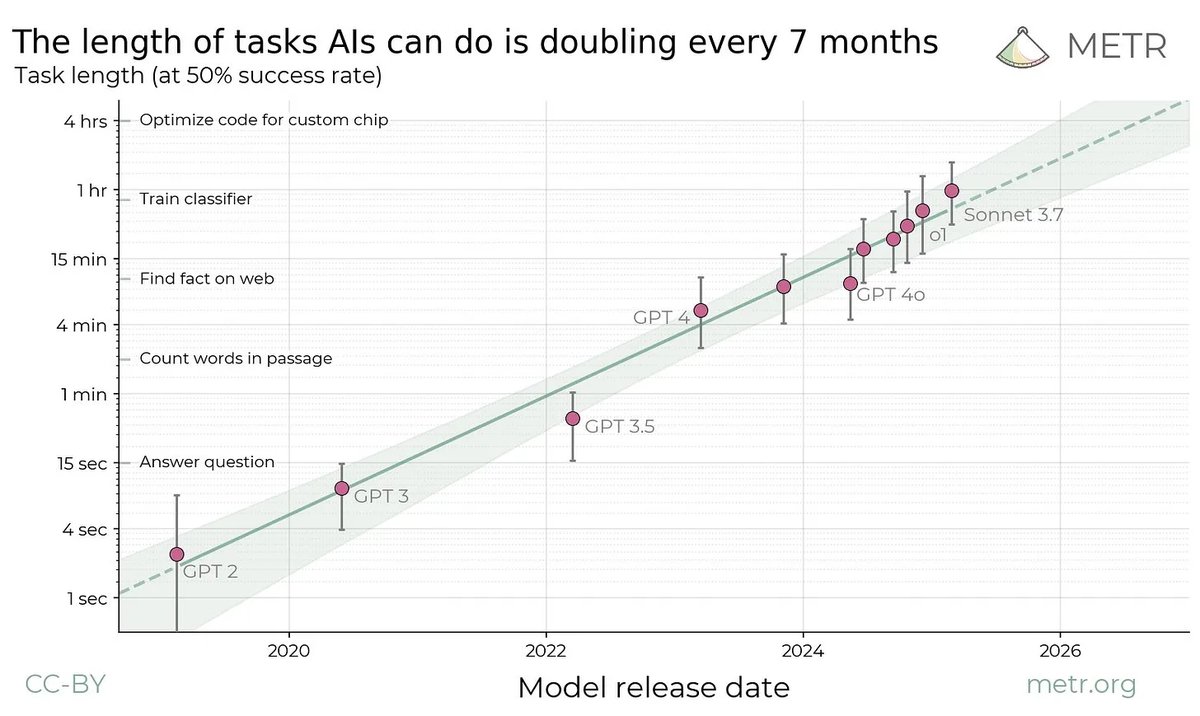

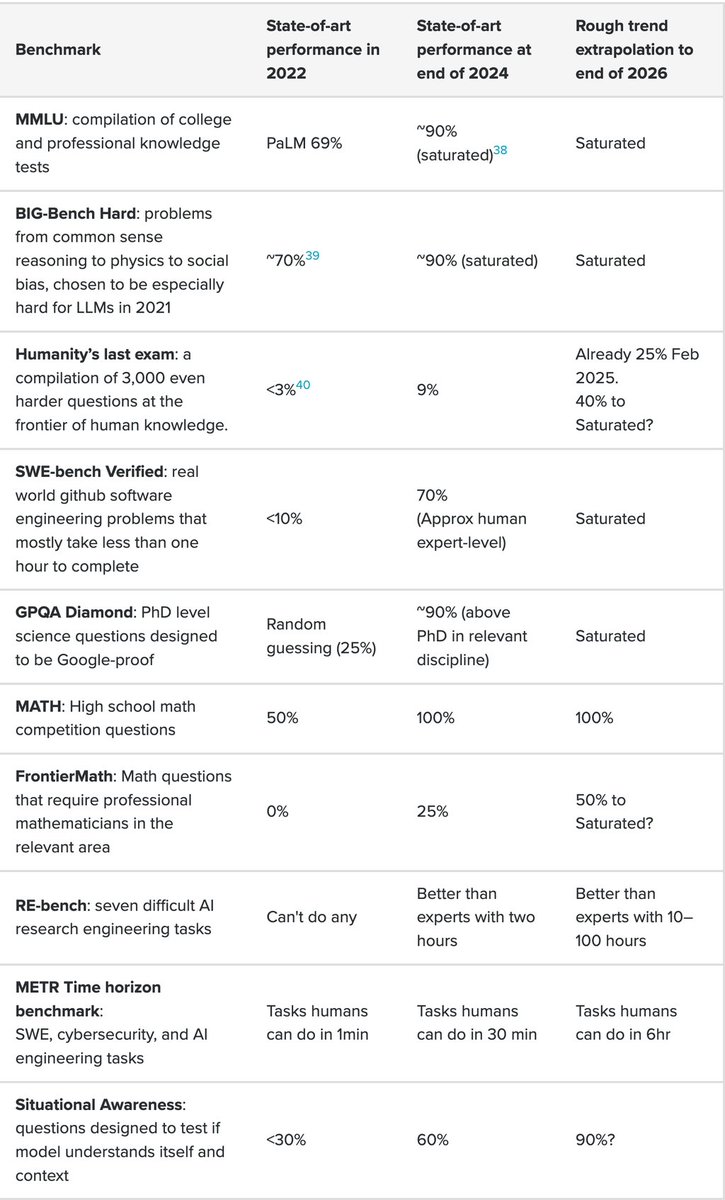

3. Benchmark extrapolation suggests in 2028 we'll see systems with superhuman coding and reasoning that can autonomously complete multi-week tasks.

All the major benchmarks ⬇️

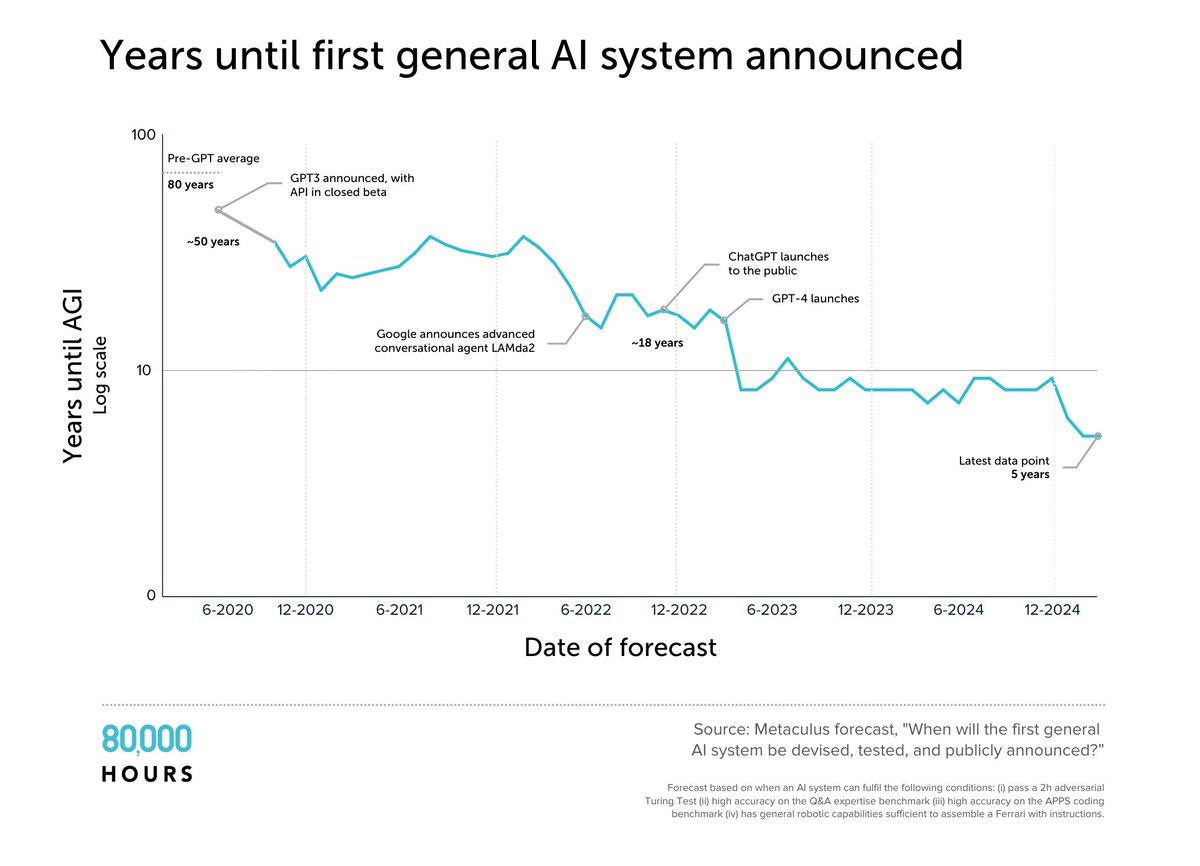

4. Expert forecasts have consistently moved earlier.

AI and forecasting experts now place significant probability on AGI-level capabilities pre-2030.

I remember when 2045 was considered optimistic.

80000hours.org/2025/03/when-d…

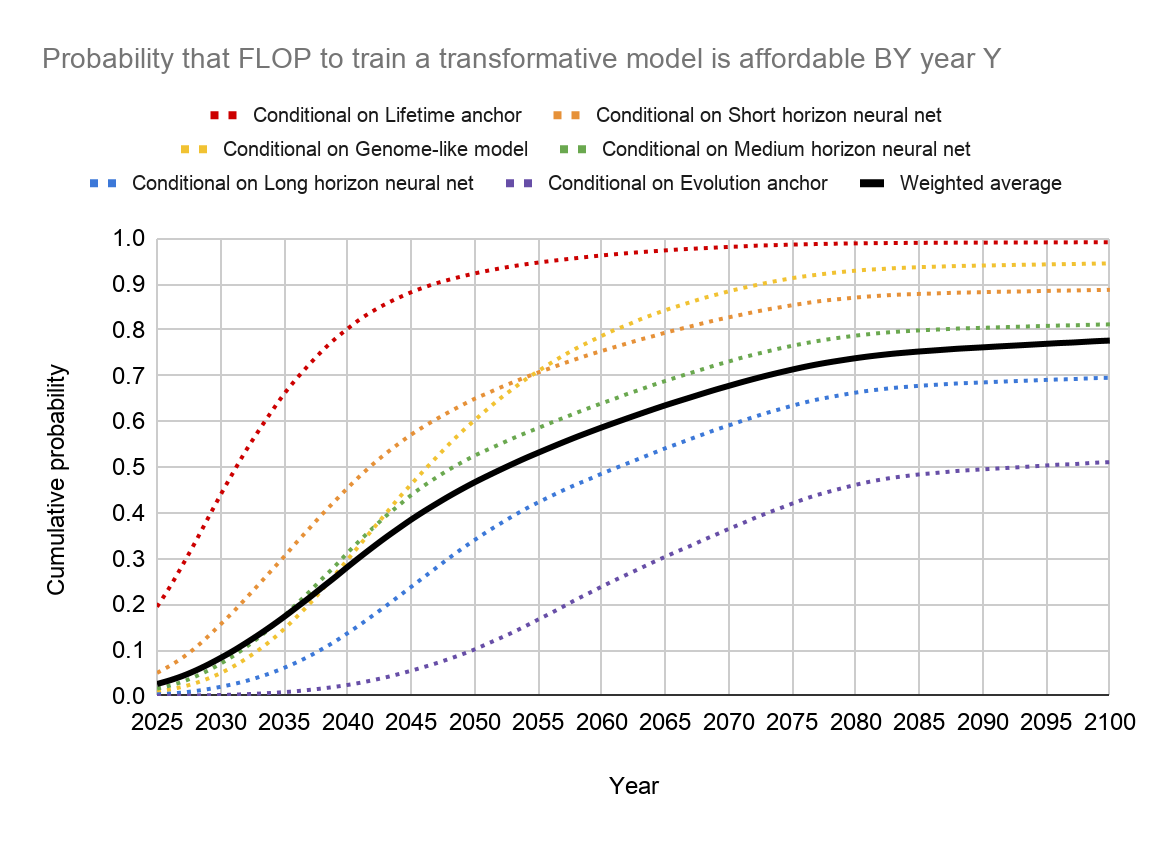

5. By 2030, AI training compute will far surpass estimates for the human brain.

If algorithms approach even a fraction of human learning efficiency, we'd expect human-level capabilities in at least some domains.

cold-takes.com/forecasting-tr…

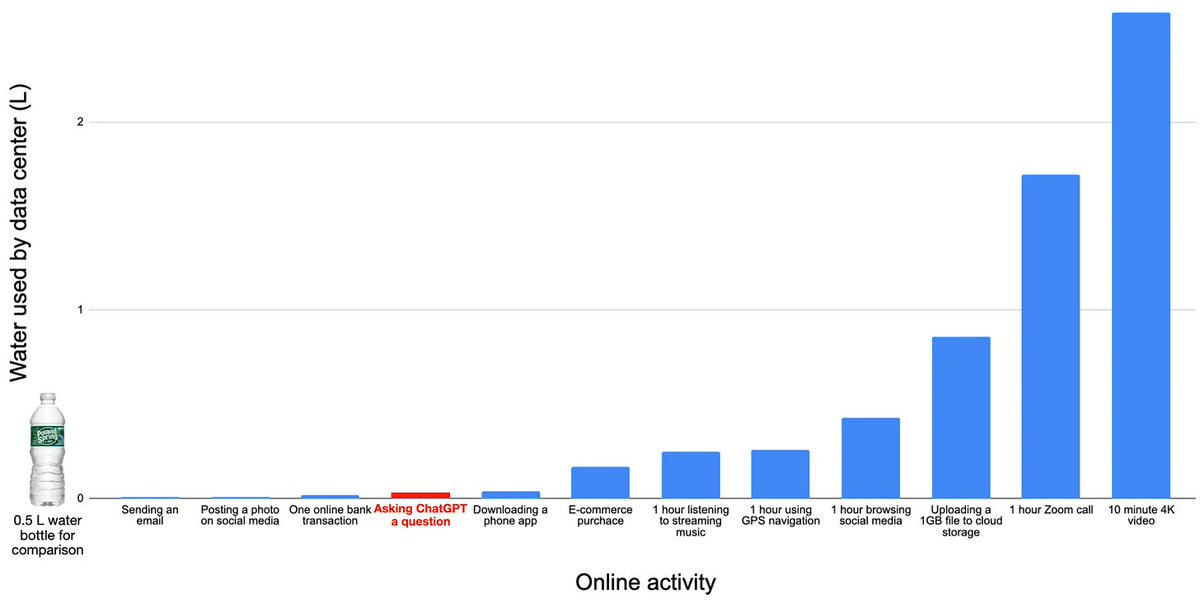

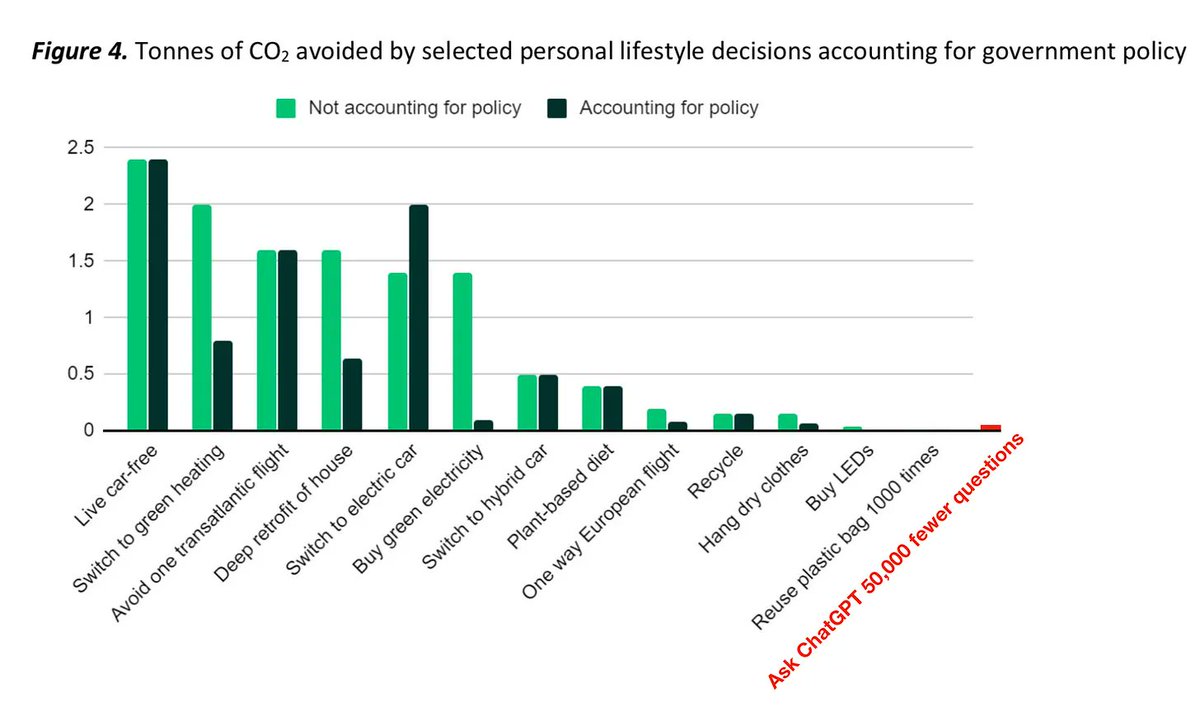

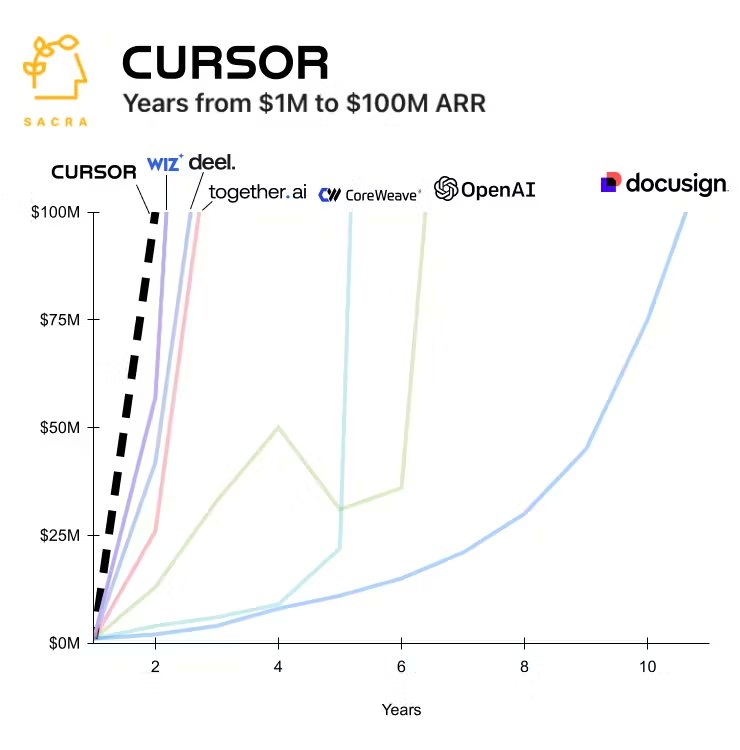

6. While real-world deployment faces many hurdles, AI is already very useful in virtual and verifiable domains:

• Software engineering & startups

• Scientific research

• AI development itself

These alone could drive massive economic impact and accelerate AI progress.

https://x.com/ben_j_todd/status/1906384281011687839

The strongest counterargument?

Current AI methods might plateau on ill-defined, contextual, long-horizon tasks—which happens to be most knowledge work.

Without continuous breakthroughs, profit margins fall and investment dries up.

https://x.com/ben_j_todd/status/1903443830172774579

Other meaningful arguments against:

• GPT-5/6 disappoint due to diminishing data quality

• Moving from answering questions to generating novel insights could be a huge gap

• Persistent perceptual limitations hold back computer use (Moravec's paradox)

• Benchmarks mislead due to data contamination & difficulty capturing real-world tasks

• Economic crisis, Taiwan conflict, or regulatory crackdowns delay progress

• Unknown bottlenecks (planning fallacy)

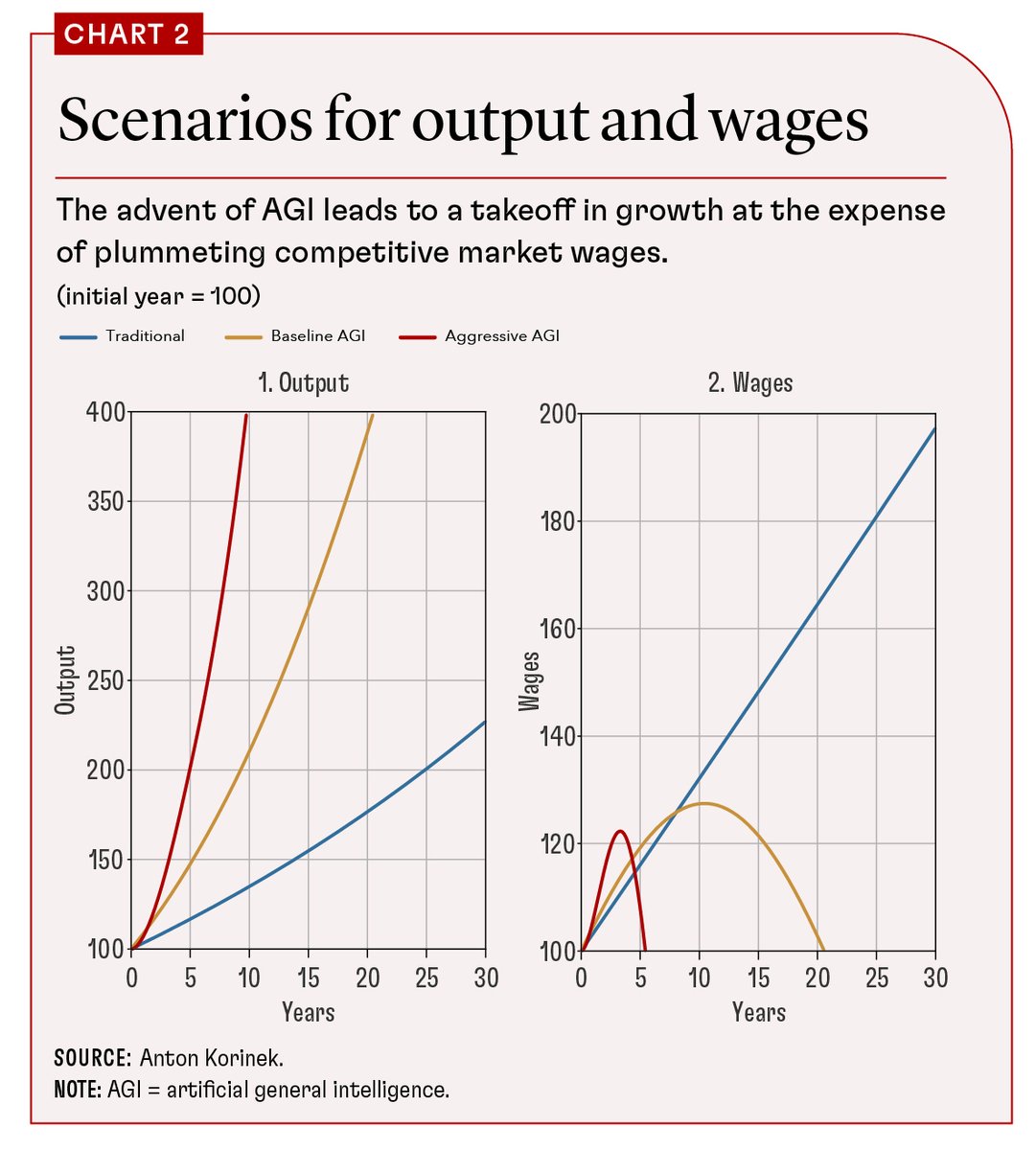

My take: It's remarkably difficult to rule out AGI before 2030.

Not saying it's certain—just that it could happen with only an extension of current trends.

Full analysis here:

80000hours.org/agi/guide/when…
