/2 These skills increase in value (for now) because they fall into 4 categories:

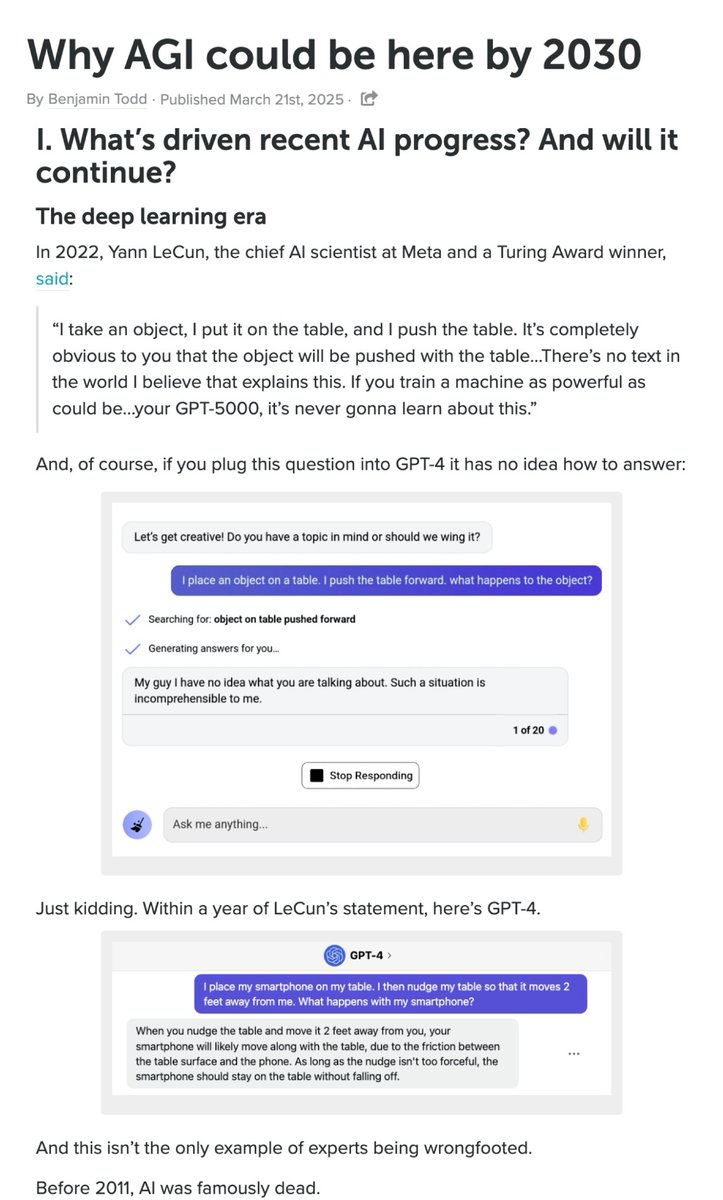

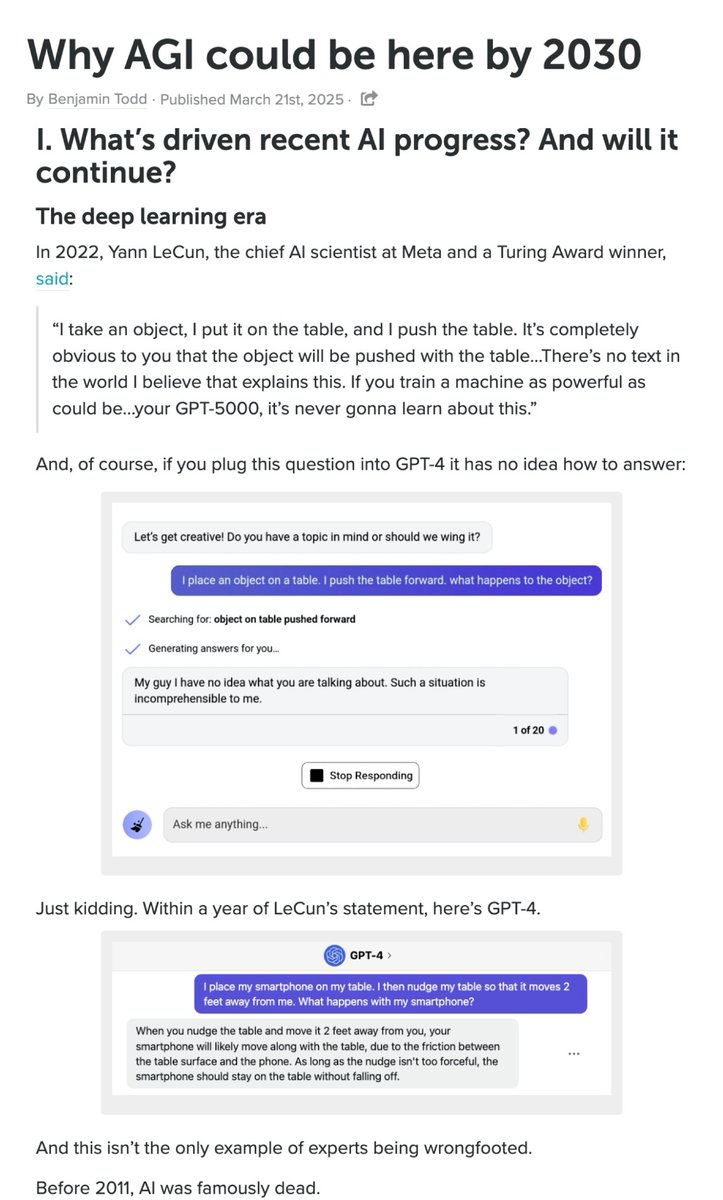

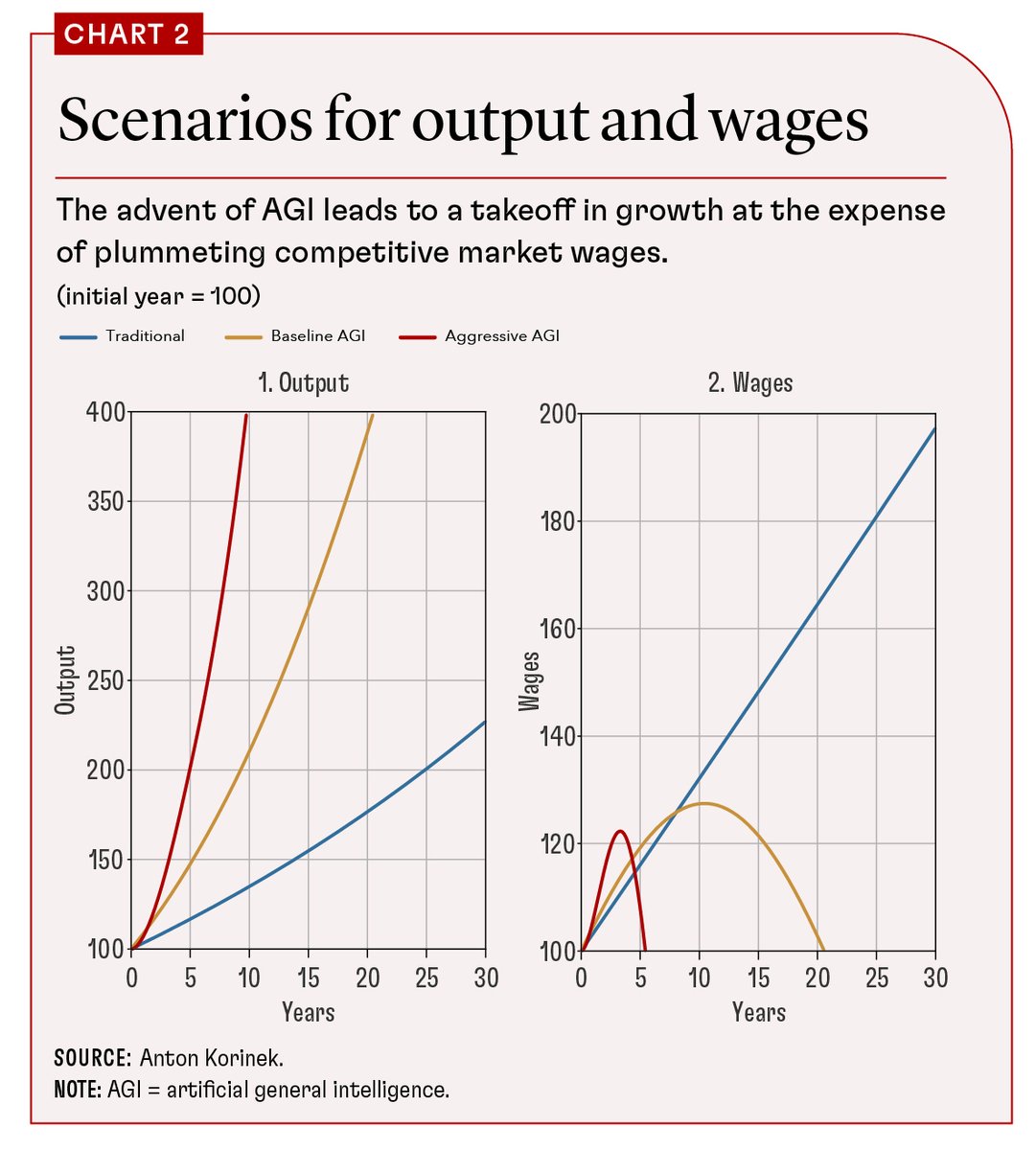

1/ There's a significant chance of AI systems that could accelerate science and automate many skilled jobs in five years.

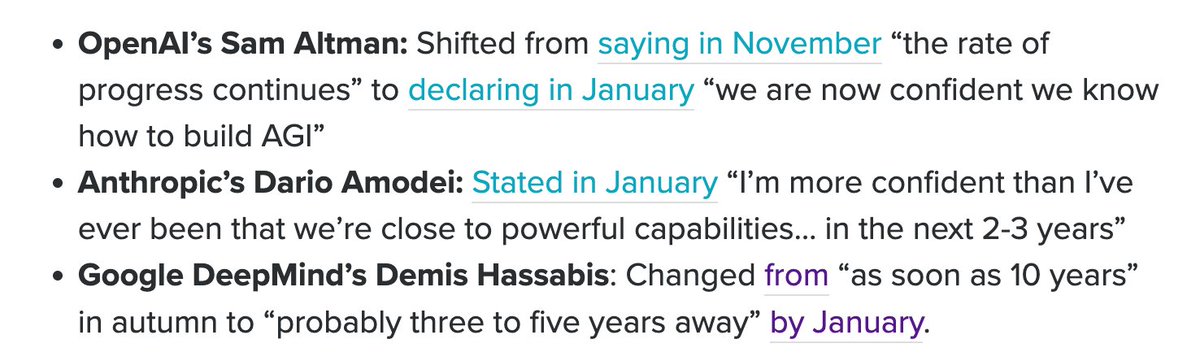

1. Company leaders think AGI is 2-5 years away.

But acting on a narrow view of what's right can easily be dangerous, since you're most likely wrong, and it has led to some of the worst atrocities in history.

Don't make the same mistake again. Imagine a far more advanced GPT-10 is here now. How worried *then* would you be about AI risk?

https://twitter.com/xuenay/status/1511246753592393731

https://twitter.com/sama/status/1511715302265942024

https://twitter.com/andyzengtweets/status/1512089759497269251

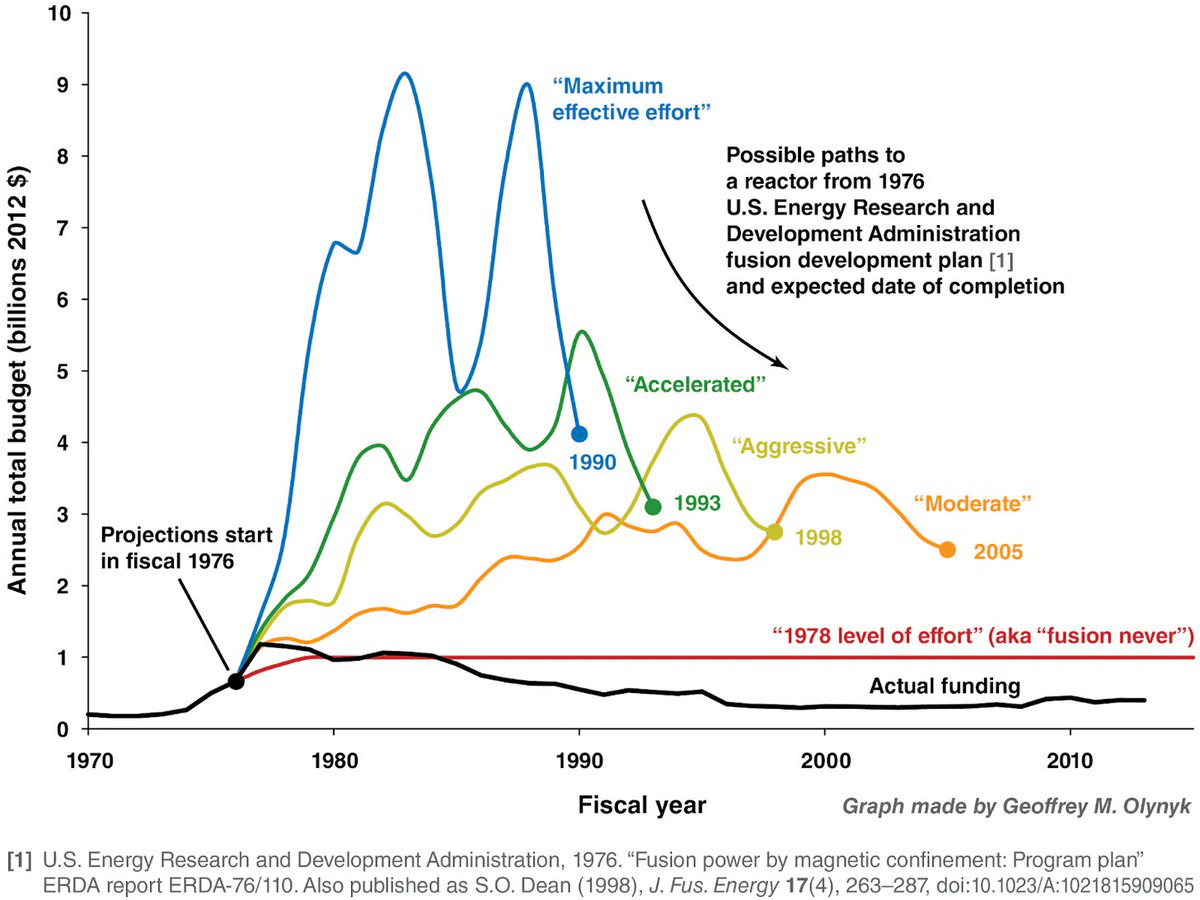

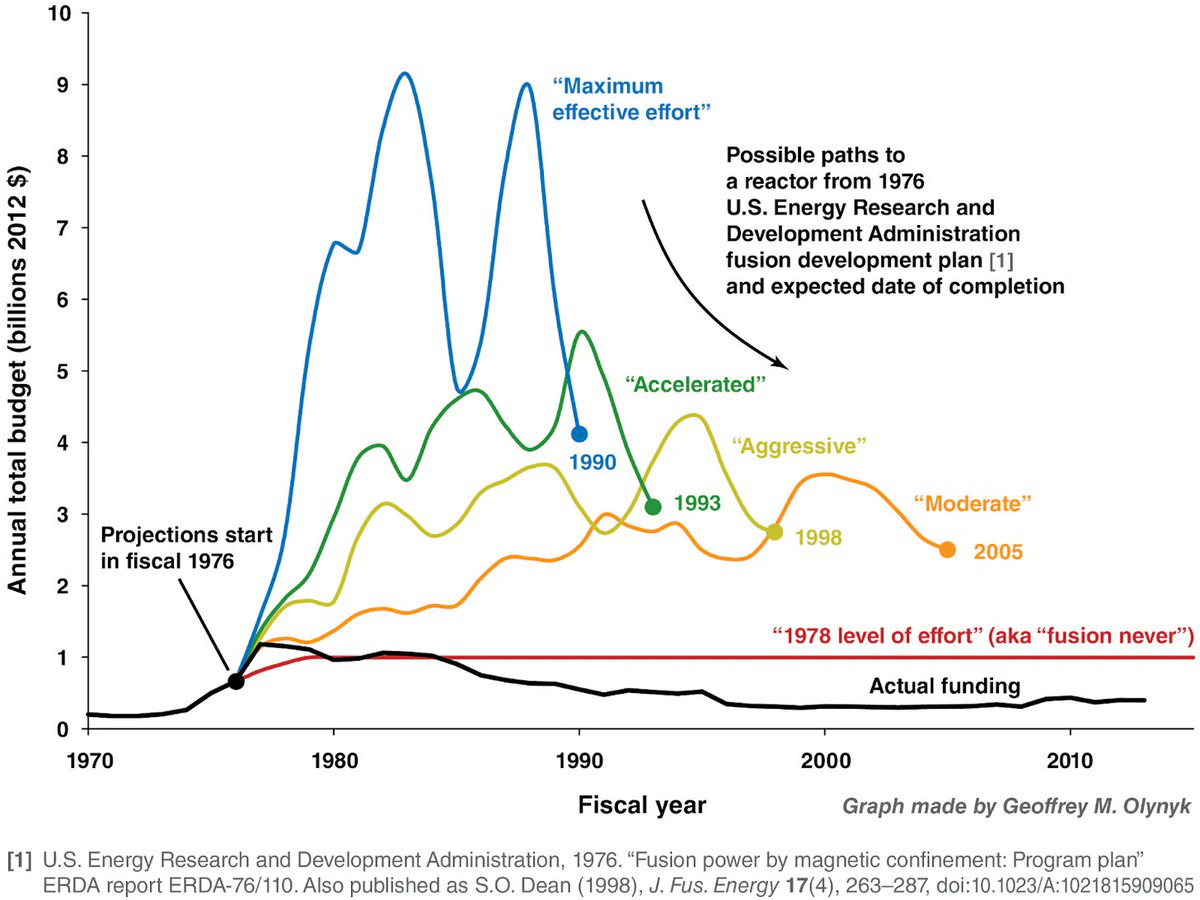

Despite little funding, progress toward fusion from 1970 to 2000 was good.

https://twitter.com/ben_j_todd/status/1497203287501639689