What if humanity forgot how to make CPUs?

Imagine Zero Tape-out Day (Z-Day): the moment after which no further silicon designs are ever manufactured. Advanced core designs fare very badly.

Assuming we keep our existing supply, here’s how it would play out:

Z-Day + 1 Year:

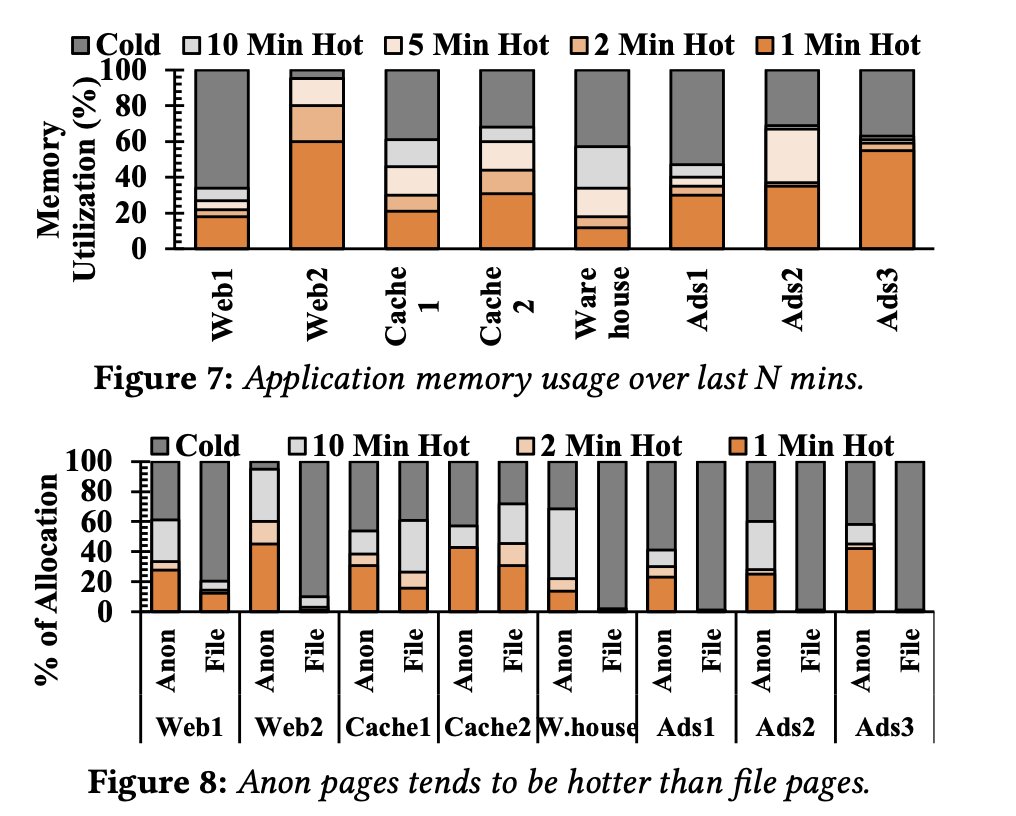

Cloud providers freeze capacity. Compute prices skyrocket.

Black’s equation is brutal: the smaller the node, the faster electromigration kills the chip.
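For reference, Black’s equation gives the median time to failure (MTTF) of an interconnect under electromigration:

$$
\mathrm{MTTF} = A \, J^{-n} \exp\!\left(\frac{E_a}{k T}\right)
$$

Here $A$ is a constant that depends on the conductor's cross-section, $J$ is the current density, $n$ is an empirical exponent (often taken as about 2), $E_a$ is the activation energy, $k$ is Boltzmann's constant, and $T$ is the conductor temperature in kelvin. Shrinking the node narrows the wires, so carrying a given current means a higher $J$ and therefore a shorter MTTF.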

Savvy consumers immediately undervolt and aggressively cool their CPUs, buying precious extra years.
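Why do undervolting and cooling help? In Black’s equation, MTTF scales as the current density to the power $-n$ times $\exp(E_a / kT)$, and for the same chip the geometry-dependent prefactor cancels when you compare two operating points. The sketch below computes that lifetime ratio. The activation energy (0.9 eV), the exponent (2), the 90 °C to 60 °C temperatures, and the assumption that an undervolt trims current density by 10% are all illustrative values chosen for this example, not measurements of any real CPU.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant, eV/K


def c_to_k(celsius):
    """Convert degrees Celsius to kelvin."""
    return celsius + 273.15


def mttf_ratio(j_ratio, t_old_k, t_new_k, ea_ev=0.9, n=2.0):
    """Return MTTF_new / MTTF_old for the same chip under Black's equation.

    j_ratio -- J_new / J_old, the relative current density (assumed input)
    t_old_k -- interconnect temperature before the change, in kelvin
    t_new_k -- interconnect temperature after the change, in kelvin
    ea_ev   -- activation energy in eV (assumed, illustrative value)
    n       -- current-density exponent (assumed, illustrative value)
    """
    # The prefactor A cancels because the wire geometry is unchanged.
    current_term = j_ratio ** (-n)
    # Temperature enters exponentially: a cooler wire lives much longer.
    thermal_term = math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_new_k - 1.0 / t_old_k))
    return current_term * thermal_term


if __name__ == "__main__":
    # Hypothetical scenario: cool the die from 90 C to 60 C ...
    cooled = mttf_ratio(1.0, c_to_k(90), c_to_k(60))
    # ... and additionally assume an undervolt lowers current density by 10%.
    both = mttf_ratio(0.9, c_to_k(90), c_to_k(60))
    print(f"cooling only:        {cooled:.1f}x expected lifetime")
    print(f"cooling + undervolt: {both:.1f}x expected lifetime")
```

With these assumed parameters, cooling alone stretches expected interconnect lifetime by roughly 13x, because temperature sits inside the exponential while current density only enters as a power law.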

Z-Day + 3 Years:

The black market booms; Xeons are worth more than gold. Governments prioritize power, communications, and finance. Military supply remains stable, leaning on stockpiled spares.

Datacenters desperately strip hardware from donor boards: the first "shrink" of cloud compute.

Z-Day + 7 Years:

Portable computing regresses as phone SoCs fail faster from solder fatigue. Internet switches hit end of life (EOL); nothing catastrophic yet, but the risk keeps climbing.

The market for used “dumb” cars skyrockets as the lead-free solder in ECUs suffers its first failures from thermal cycling.

Z-Day + 15 Years:

The “Internet” no longer exists as a single fabric. The privileged fall back to private peering or satellite links.

Sneakernet via SSDs is popular; with careful use, SSDs outlast the network switches. For those lucky enough not to have had their desktop computers confiscated, boot-to-RAM distros and PXE images are the norm, minimizing day-to-day writes.

HDDs are *well* past the bathtub curve; most are completely dead. Careful salvaging of spindle motors and actuator arms, combined with precision repairs, keeps the most critical high-capacity arrays online.
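The bathtub curve describes a failure rate that starts high (early defects), flattens out (random failures), then climbs steeply as parts wear out. One common way to sketch it is to add three Weibull hazard functions: shape below 1 for the falling edge, shape 1 for the flat floor, and shape above 1 for the wear-out wall. The function names and every shape and scale value below are hypothetical, picked only to make the curve's shape visible, and are not fitted to real drive data.

```python
def weibull_hazard(t, shape, scale):
    """Instantaneous failure rate of a Weibull distribution at time t > 0."""
    return (shape / scale) * (t / scale) ** (shape - 1)


def bathtub_hazard(t_years):
    """Hypothetical HDD hazard: sum of three Weibull components (t_years > 0)."""
    # All parameters are illustrative, not fitted to any real drive population.
    infant = weibull_hazard(t_years, shape=0.5, scale=50.0)  # falling: early defects
    random_ = weibull_hazard(t_years, shape=1.0, scale=20.0)  # flat: random failures
    wearout = weibull_hazard(t_years, shape=5.0, scale=8.0)   # rising: mechanical wear
    return infant + random_ + wearout


if __name__ == "__main__":
    # Hazard falls, bottoms out, then rises sharply once wear-out dominates.
    for year in (0.1, 1, 3, 5, 10, 15):
        print(f"year {year:>4}: hazard {bathtub_hazard(year):.3f} failures/drive-year")
```

Fifteen years after Z-Day, a drive model like this sits far up the wear-out wall, which is why salvaging mechanical parts becomes the only way to keep arrays running.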

Z-Day + 30 Years:

Long-term storage has shifted completely to optical media. Only vintage compute survives at the consumer level.

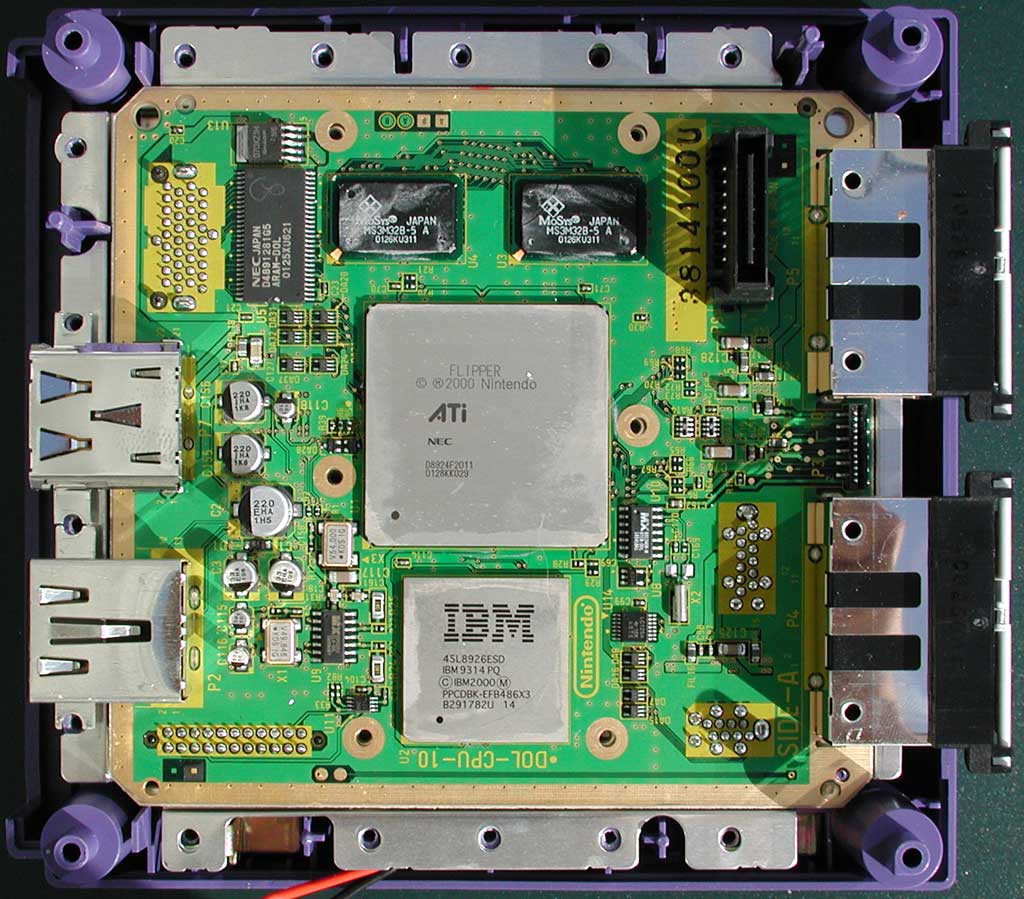

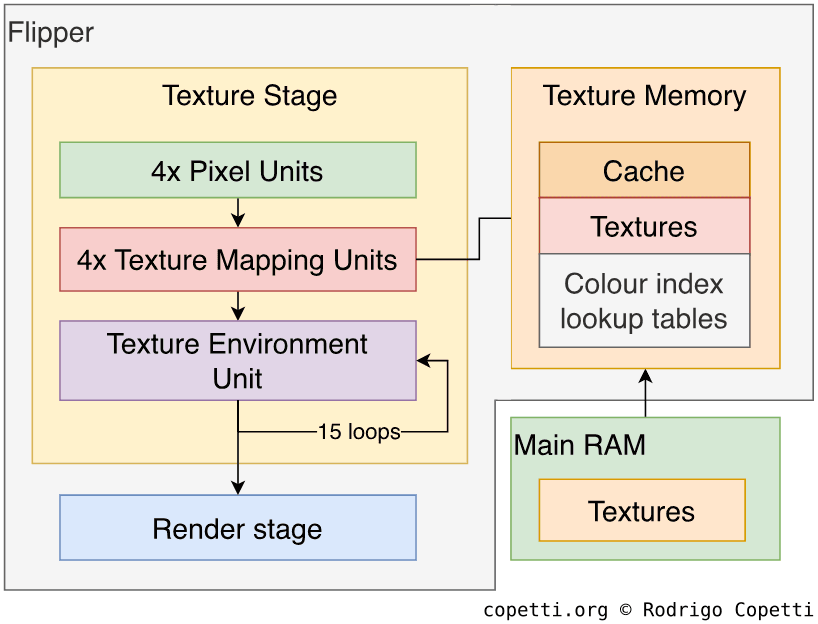

The large node sizes of old hardware make it extremely resistant to electromigration; Motorola 68000s have modeled gate wear beyond 10k years! Game Boys, Macintosh SEs, and Commodore 64s weather the no-new-silicon future best.

Fancier (but still wide-node) hardware like the iMac G3 becomes the prized workstation of the elite. Computing as a whole looks much more like it did in the 1970s and '80s.
