The proposed moratorium to slow the avalanche of state AI bills (1000+ in 2025) has really spun up folks, but they aren't making great arguments.

Take a new letter by @demandprogress (irony!) and other progressive orgs. It gets basic facts wrong and misrepresents research. 🧵

1/ This characterization of the moratorium is wrong: it isn't a total immunity because states can still enforce any general purpose law against AI system providers, including civil rights laws and consumer protection laws. In fact, the moratorium specifically says that.

2/ False. While it's not quite clear what "unaccountable to lawmakers and the public" means, it is 100% clear that traditional tort liability as well as general consumer protections and other laws would continue to apply. Deliberately designing an algorithm to cause foreseeable harm likely triggers civil and potentially criminal liability under most states' laws.

3/ So much wrong. State regulators don't enforce tort law; nothing in the moratorium changes rules of discovery in lawsuits; why is "transparency" uniquely necessary in AI to hold companies accountable for actual harm? We can see the harms! Discovery is for determining the underlying causes. (If no one can tell if they were harmed or not, is there really harm?)

4/ Ok now it gets bad; the letter moves from misinterpreting the law to basically just making things up. The cited reference refers to one instance of a reporter telling a chatbot that they were underage. It contains no evidence that any, let alone "many," cases of children having such conversations exist.

5/ The lawsuit against character.ai (which is the cite here) is ongoing and would not be affected at all by the moratorium.

6/ This is very close to a lie, and at the very least is completely unsupported by the citation. The cited study, which did not involve real patients or actual medical decisions, showed that unaltered AI tools improved diagnosis, while AI tools that were intentionally biased by the researchers made diagnosis less accurate.

This is a stupid study. If I intentionally falsified parts of a medical reference handbook or surreptitiously manipulated a blood pressure cuff, I could probably get doctors to misdiagnose more often, too.

But the bigger point is that, contra the letter's claims, there were no actual "healthcare decisions that have led to adverse and biased outcomes."

7/ As the cited article notes, revenge porn long pre-exists generative AI.

BUT ALSO Congress (accused by the coalition of being totally inactive) literally just passed and the President signed the TAKE IT DOWN Act, which criminalizes the publication of nonconsensual intimate imagery, including that created with AI.

The moratorium doesn't affect the TAKE IT DOWN Act. So this point is moot.

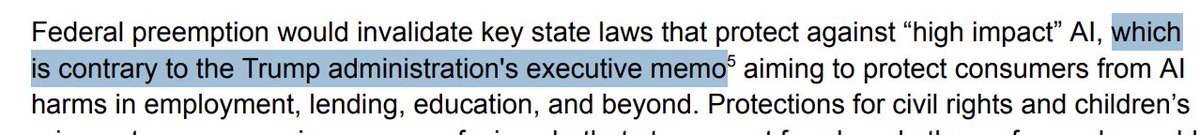

8/ To the contrary! The Trump EO 1) applies to federal agencies and 2) seeks to establish a federal framework. A moratorium on conflicting state laws is completely consistent with Trump's approach.

9/ This is more of the same. AGAIN, civil rights, privacy laws, and many other safeguards are completely unaffected by the moratorium. SOME requirements to tell customers they are speaking to an AI may be affected, but even those could be easily tweaked to survive the moratorium. Just change the law to require all similar systems, AI or not, to disclose key characteristics.

10/ To finish up, I'll just flag a particular pet peeve of mine. Is there ANY evidence that regulation increases consumer trust in a technology? This logical tic is so common among supporters of certain kinds of regulation, but it seems completely false to me. Every technology mentioned was widely adopted well before there was regulatory action. Adoption happens first and then regulation. Are there ANY examples of it going the other way around?

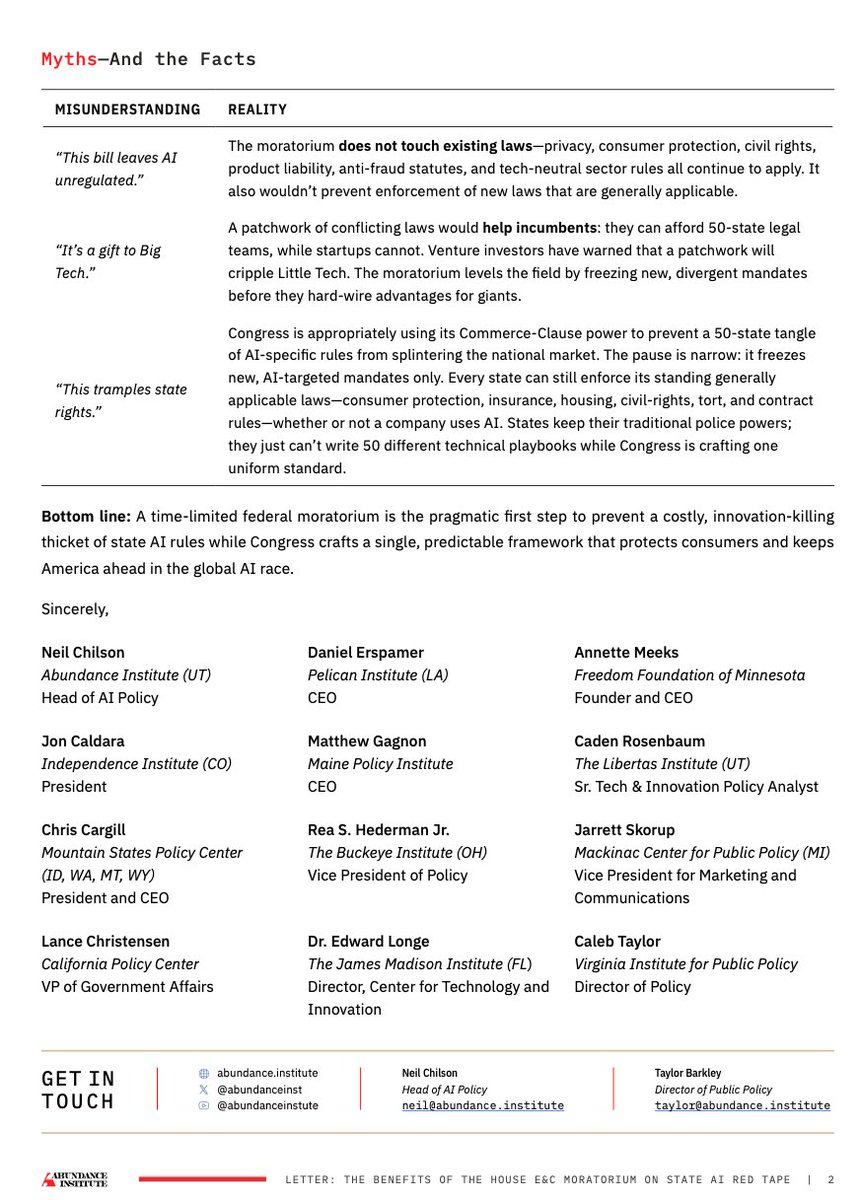

END/ If you want to read a contrasting view that doesn't make things up, check out the letter we led:

https://x.com/neil_chilson/status/1922484215137927451