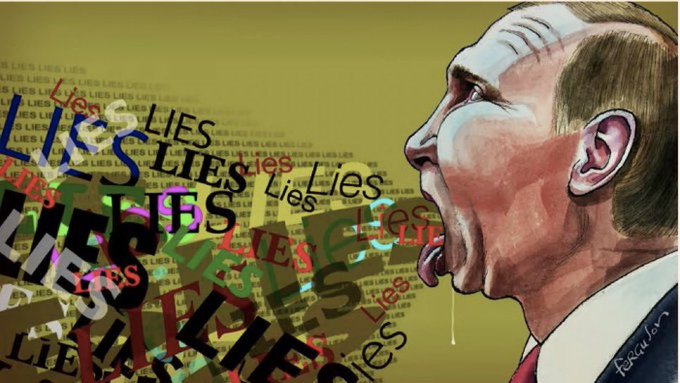

What did Russia’s digital propaganda look like during the invasion of Ukraine?

This isn’t a guess.

We now have data. A lot of it.

A 2023 study analyzed 368,000 pro-Russian tweets posted during the first 6 months of the war.

What they found is both familiar — and alarming.

#UkraineDisinfo7

The study: “Russian propaganda on social media during the 2022 invasion of Ukraine”

🔹 Timeframe: Feb–July 2022

🔹 Platform: Twitter

🔹 Accounts: 140,000+

🔹 Messages: ~368,000 pro-Russian tweets

arxiv.org/abs/2211.04154

Researchers used specific hashtags to collect pro-Russian content:

Examples:

🔸 #IStandWithRussia

🔸 #StandWithPutin

🔸 #DonbassWar

🔸 #Biolabs

🔸 #ZelenskyWarCriminal

These were often pushed to trend — even outside of Russia.

Scope of activity? Massive.

➡️ 368,000 tweets

➡️ ~251,000 retweets

➡️ Estimated reach: 14.4 million users

That’s not fringe. That’s engineered visibility.

The game-changer? Bots.

The study found that 20.3% of the accounts were likely bots.

That’s roughly 28,000+ likely inauthentic accounts (20.3% of 140,000+), created to amplify, distort, and deceive.

And they were fast.

Many of these bot accounts were created right at the start of the invasion — February 2022.

That’s not organic.

That’s preparation.

A digital surge aligned with a physical invasion.

These bots weren’t designed to debate.

They had one job: amplify.

🔹 Retweet each other

🔹 Boost visibility of hashtags

🔹 Drive traffic to pro-Russian narratives

🔹 Make fringe ideas look “popular”

That’s influence laundering.

The bots created the illusion of consensus.

A trick that works on:

✔ Platform algorithms

✔ Casual users

✔ Journalists scanning trends

✔ Public sentiment in countries on the fence

Perception shapes reality.

One of the most revealing findings?

Bots were heavily active in India, South Africa, and Pakistan: countries that abstained from condemning Russia at the UN in March 2022.

That’s targeted influence.

Activity surged around the UN General Assembly vote.

→ March 2–4, 2022

This wasn’t random spam.

It was geopolitical manipulation in real time, using Twitter to shape how countries saw the war.

Bots also pushed themes meant to appeal to the Global South:

🔸 “Western hypocrisy”

🔸 NATO provocation

🔸 Biolabs conspiracies

🔸 Civilian casualties (blamed on Ukraine)

🔸 Anti-colonial rhetoric

Strategic narrative tailoring.

Pro-Russian accounts had fewer followers, fewer replies, and lower engagement than pro-Ukraine ones.

But with bot armies?

You don’t need organic support.

You just need to look loud enough to trend.

This mirrors what Russia did in the 2016, 2018, and 2020 elections in the West.

But this time it wasn’t just about elections.

It was about justifying invasion.

And doing it in front of a global audience.

So what does this tell us?

Russia uses Twitter not to win arguments — but to:

✔ Drown out critics

✔ Seed doubt

✔ Confuse fence-sitters

✔ Make their position look mainstream

It’s disinfo by saturation.

This is also a lesson in how platforms become proxies.

When states go to war, so do their narratives.

And platforms like Twitter — with weak moderation and amplification mechanics — become weapons.

And while this study ends in July 2022…

These tactics haven’t stopped.

They’ve just evolved.

With AI-generated content, deeper mimicry, and cross-platform seeding now in play.

This was the proof-of-concept phase.

Why this matters:

Because the lie doesn’t need to be believed.

It just needs to trend.

To seed enough confusion that truth becomes “opinion.”

And aggression becomes “debate.”

The data tells the story:

➡️ Bots surged with the tanks

➡️ Hashtags were weaponized

➡️ Influence targeted countries on the fence

➡️ Twitter was terrain in the invasion

This is not just propaganda.

It’s strategy.

We’ll never win this fight with facts alone.

We need:

🔹 Faster detection

🔹 Transparent algorithms

🔹 Public education

🔹 Narrative fluency

🔹 Civic resistance

Because perception is battlefield space now.

This thread was based on Geissler et al. (2023):

One of the best forensic snapshots of how Russia uses bots and hashtags to support war.

And a preview of what’s coming next.

#UkraineDisinfo7 #UkraineDisinfo

arxiv.org/abs/2211.04154

Want the human story behind the data?

We broke it down on the Forum — how this bot-fueled war played out on your feed, and why it matters for the future of democracy and conflict.

Read more:

#UkraineDisinfo7 #UkraineDisinfo

nafoforum.org/magazine/postm…
