born by the KGB raised by the CIA mindreader digital ventriloquist #fella #WeAreNAFO Heavy Bonker Award 🏅⚡Every coffee helps #Edumacation and the @NAFOforum👇

They were expensive, slow, and limited by time, coordination, and manpower.

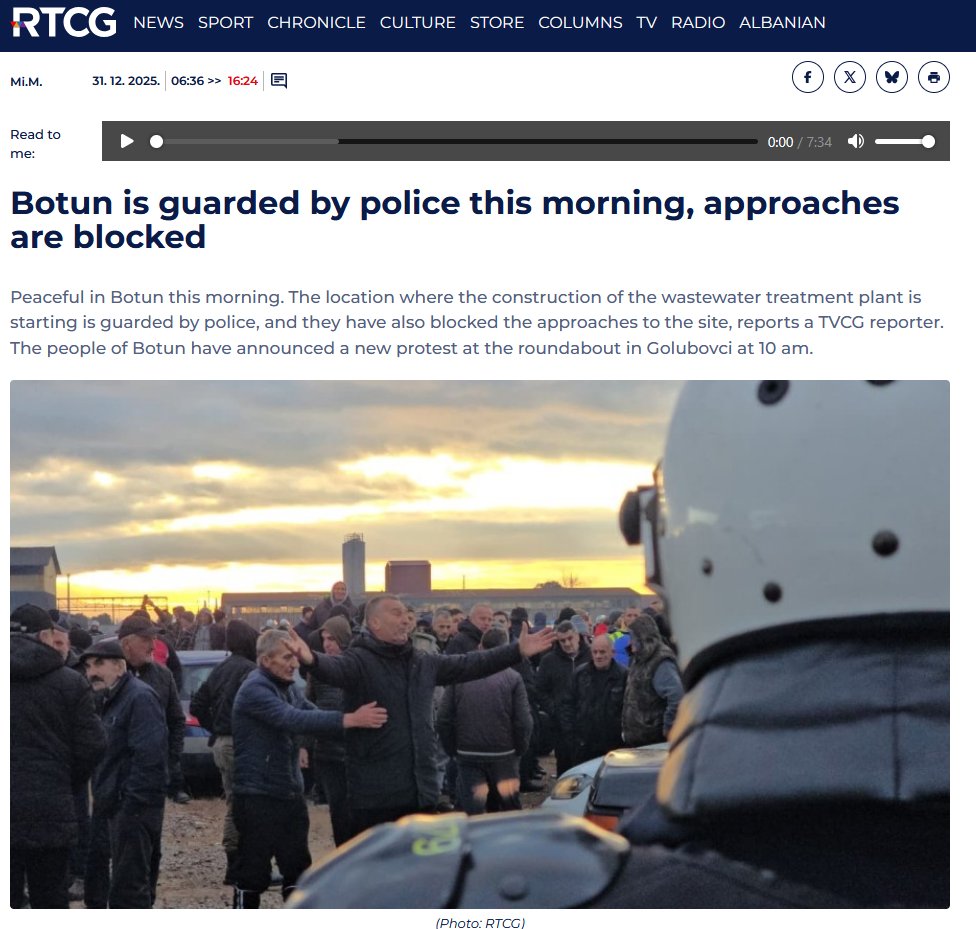

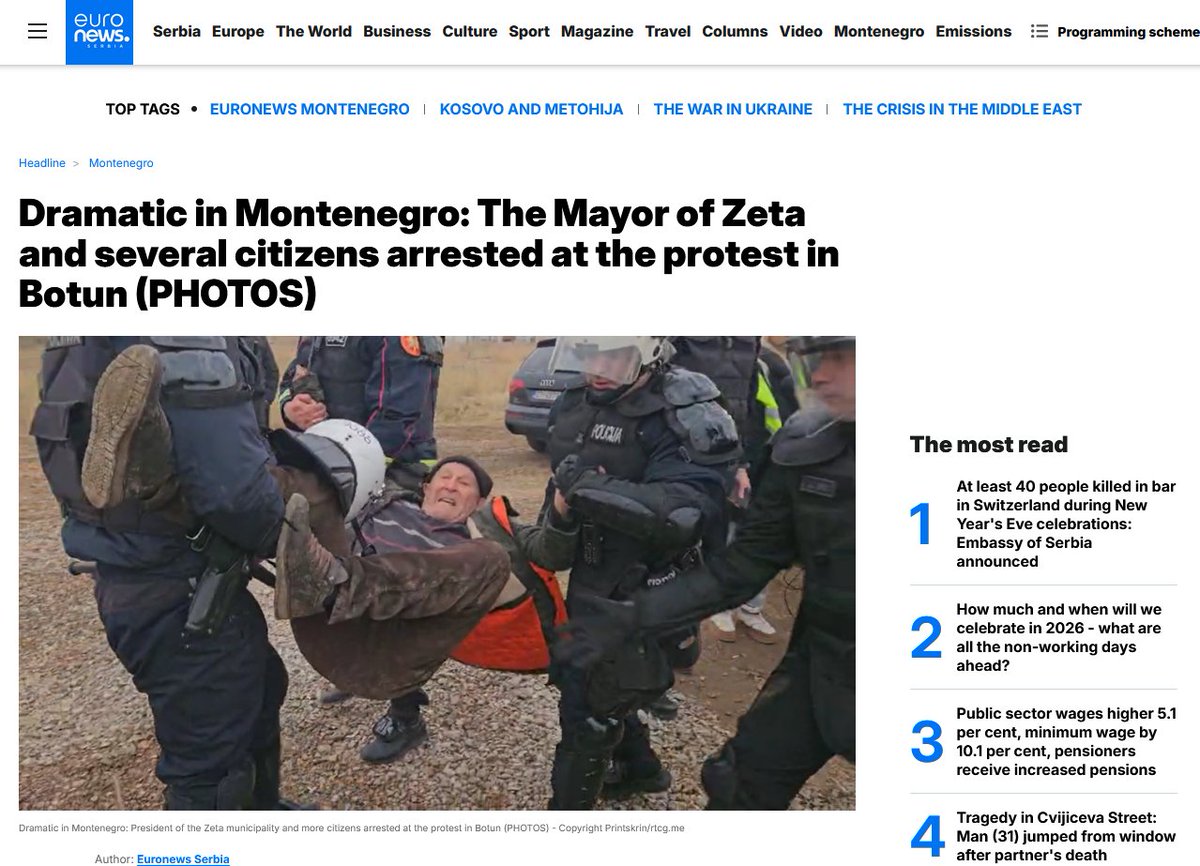

Near Podgorica, residents blocked the construction of a wastewater treatment plant in Botun.

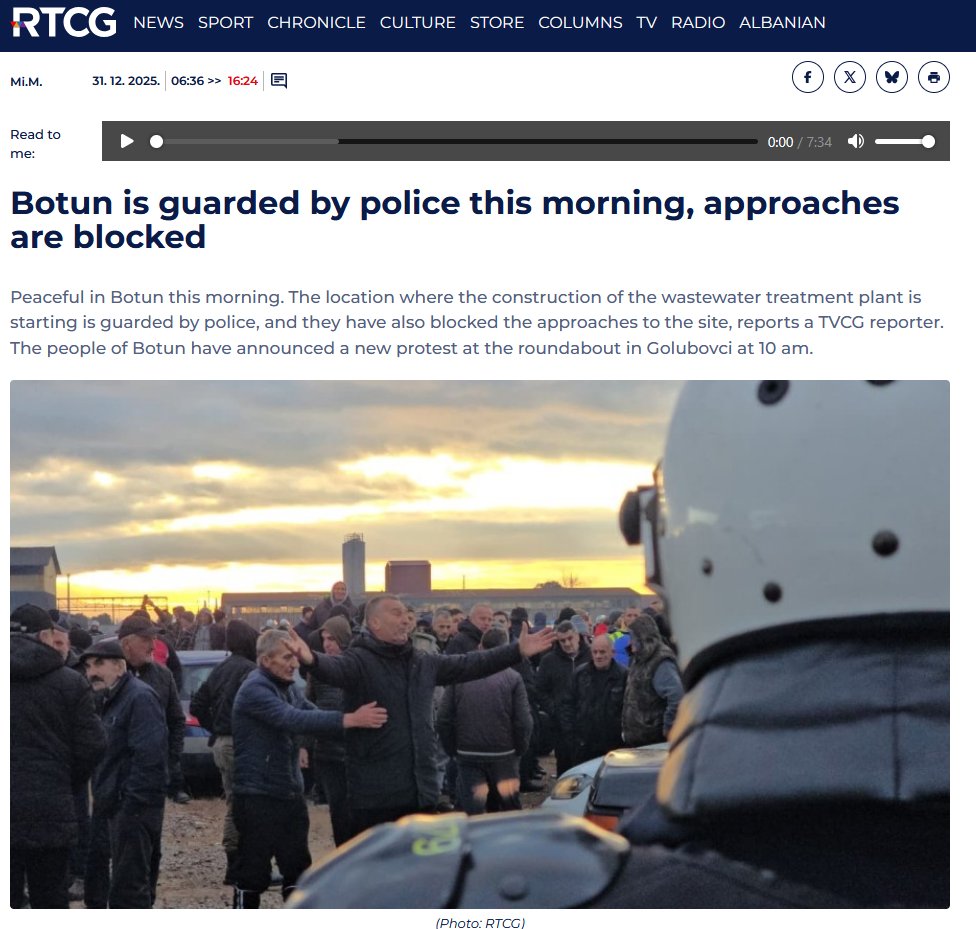

So what does propaganda actually do?

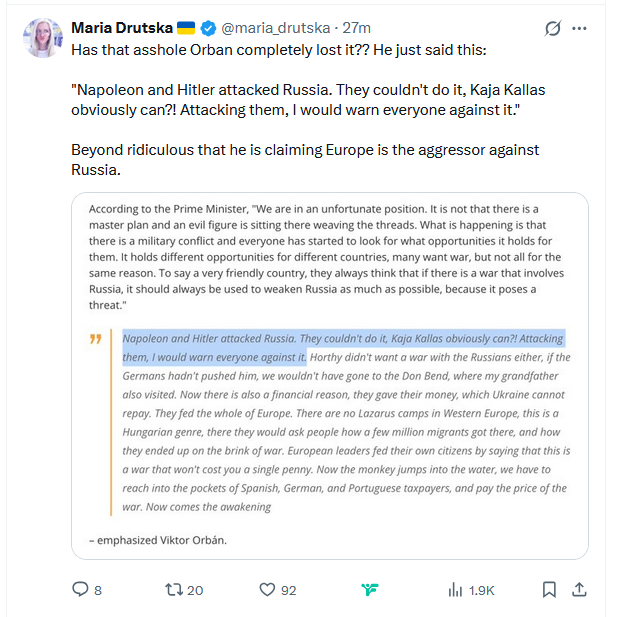

Q: Is this just Orbán being provocative for attention?

Measuring susceptibility isn’t about intelligence.

She documents how Oleh Voloshyn, a sanctioned Russian operative, and his wife Nadia Sass, a pro-Kremlin influencer, targeted politicians in the UK and across Europe.

Then here it is:

What is kompromat?

Among them is Mamuka Mamulashvili, commander of the Georgian Legion — the largest foreign military unit fighting alongside Ukrainian forces in Russia's invasion of Ukraine.

At the center: “Evrazia” and shadow structures linked to Ilan Șor.

1/ People ask: “Why doesn’t the EU counter Kremlin propaganda with the same weapons?”

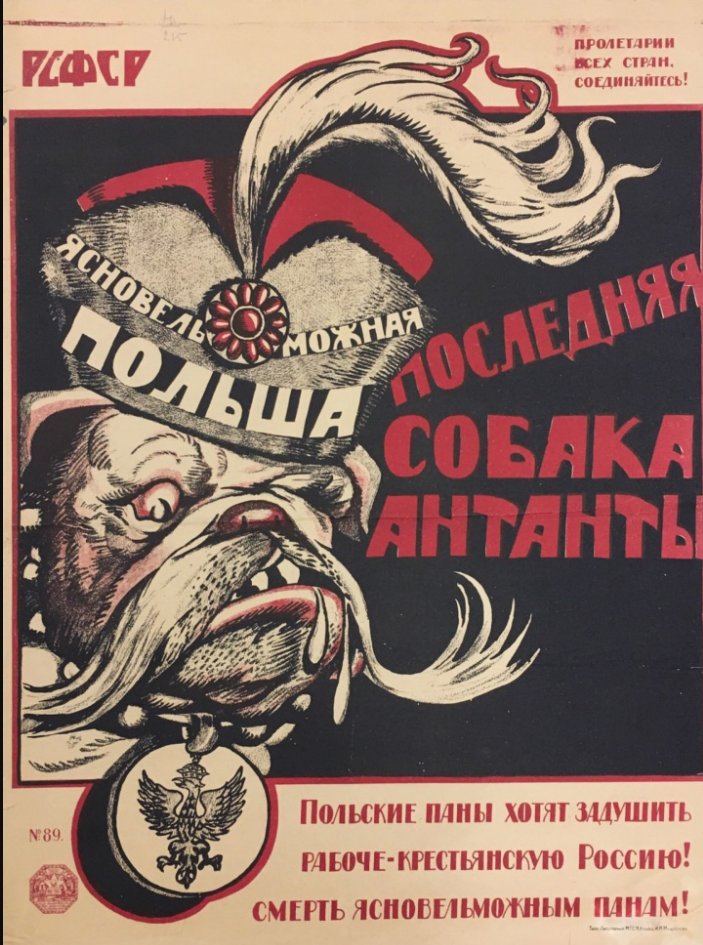

1918–20, Russia’s in civil war.

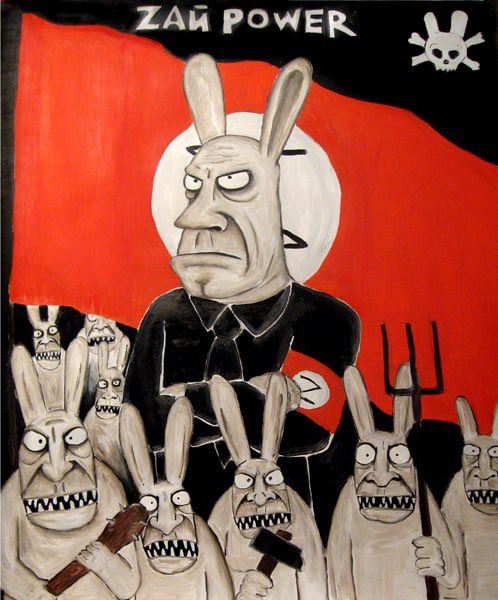

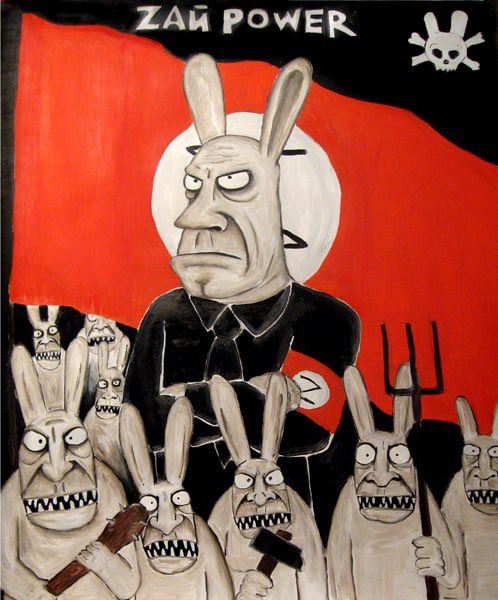

The russian empire in all its iterations was a Frankenstein made of pieces and always tried hard to find its reason to exist.

Russia’s war machine runs on three engines: force, finance, and cognition.

Source: The Deception Game (1972) — archive.org/details/decept…

NATO on cognitive warfare:

Here’s what people still ask:

Since the early 1900s, Russian regimes have used the same method:

Percepticide is the collapse of shared perception.

Today, democracies aren’t falling through coups. They’re eroding quietly.

In 1947, the UN proposed a two-state solution: one Jewish, one Arab.
