What if an LLM could update its own weights?

Meet SEAL🦭: a framework where LLMs generate their own training data (self-edits) to update their weights in response to new inputs.

Self-editing is learned via RL, using the updated model’s downstream performance as reward.

Self-edits (SE) are generated in token space and consist of training data and optionally optimization parameters. This is trained with RL, where the actions are the self-edit generations, and the reward is the updated model's performance on a task relevant to the input context.

Here is the pseudocode:

C is the input context, i.e. a passage, and τ is a downstream evaluation task, i.e. a set of questions about the passage to be answered without the passage in context.
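
To make the loop concrete, here is a rough Python sketch, not the released code. The four callables (`generate`, `finetune`, `evaluate`, `reinforce`) are hypothetical placeholders, and keeping only the self-edits that beat the un-updated model is an assumed, simplified stand-in for the RL update.

```python
from typing import Any, Callable, List, Tuple


def seal_outer_loop(
    policy: Any,
    data: List[Tuple[str, Any]],             # (C, tau) pairs
    generate: Callable[[Any, str], str],     # policy, C -> self-edit (hypothetical)
    finetune: Callable[[Any, str], Any],     # policy, self-edit -> updated copy (hypothetical)
    evaluate: Callable[[Any, Any], float],   # model, tau -> score (hypothetical)
    reinforce: Callable[[Any, List[Tuple[str, str]]], Any],  # hypothetical RL step
    n_samples: int = 4,
    n_rounds: int = 2,
) -> Any:
    """Sketch of the self-edit RL loop under the assumptions stated above."""
    for _ in range(n_rounds):
        kept: List[Tuple[str, str]] = []
        for context, task in data:
            # Score of the current model on tau, answered without C in context.
            baseline = evaluate(policy, task)
            for _ in range(n_samples):
                self_edit = generate(policy, context)   # action: a self-edit
                updated = finetune(policy, self_edit)   # inner-loop weight update
                reward = evaluate(updated, task)        # reward: updated model on tau
                # Assumption: keep a self-edit only if it improved on the baseline.
                if reward > baseline:
                    kept.append((context, self_edit))
        # Train the policy to produce more of the self-edits that helped.
        policy = reinforce(policy, kept)
    return policy
```

The inner update is applied to a temporary copy: `finetune` returns a new model, so each sampled self-edit is scored independently against the same starting weights.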

We explore two settings: (1) incorporating knowledge from a passage, where self-edits are generated text in the form of "implications" of the passage, and (2) adapting to few-shot examples on ARC, where self-edits are tool-calls for data augmentation and optimization params, as in arxiv.org/abs/2411.07279
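
One way to picture the two self-edit formats is as two small data structures. This is an illustrative sketch only: the class names, field names, and the one-implication-per-line parsing are assumptions, not the format used in the paper or code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class KnowledgeSelfEdit:
    """Setting (1): generated 'implications' of a passage, used as training text."""
    implications: List[str]


@dataclass
class FewShotSelfEdit:
    """Setting (2): tool-call style choices (all field names are hypothetical)."""
    augmentations: List[str] = field(default_factory=list)  # data augmentation tools to call
    learning_rate: Optional[float] = None                   # optional optimization param
    epochs: Optional[int] = None                            # optional optimization param


def parse_implications(generation: str) -> KnowledgeSelfEdit:
    """Assumed parsing: one implication per non-empty line, bullet markers stripped."""
    lines = [line.lstrip("-• ").strip() for line in generation.splitlines()]
    return KnowledgeSelfEdit([line for line in lines if line])
```

For example, `parse_implications("- A is B.\n- C follows.")` yields a self-edit with two training sentences, while a `FewShotSelfEdit` carries configuration rather than text.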

In the few-shot domain, we outperform both ICL and self-edits from the base model, though we still don't reach the optimal human-crafted test-time training (TTT) configuration. Note: these results are from a curated subset that is easier for small LMs.

For incorporating knowledge from a passage into weights, we find that after 2 rounds of RL training, each on a batch of 50 passages, self-editing even matches using synthetic data generated by GPT-4.1.

While RL training is done in the single passage regime, where we can easily quantify the contribution of each self-edit generation, the SEAL model's self-edits are still useful in a continued pretraining setting, where we incorporate many passages in a single update.

Here is an example passage (Input Context) along with SEAL's self-edit generations (Rewrite) and subsequent responses to downstream questions after each round of RL.

You may have noticed that generation length kept increasing after each round of RL. This is expected since we get more diverse content containing relevant information. Could we just prompt the base model to generate longer sequences instead? We find that prompting for longer generations (along with prompting for other self-edit formats) does increase performance, but that RL starting from these prompts increases performance even further, by similar margins.

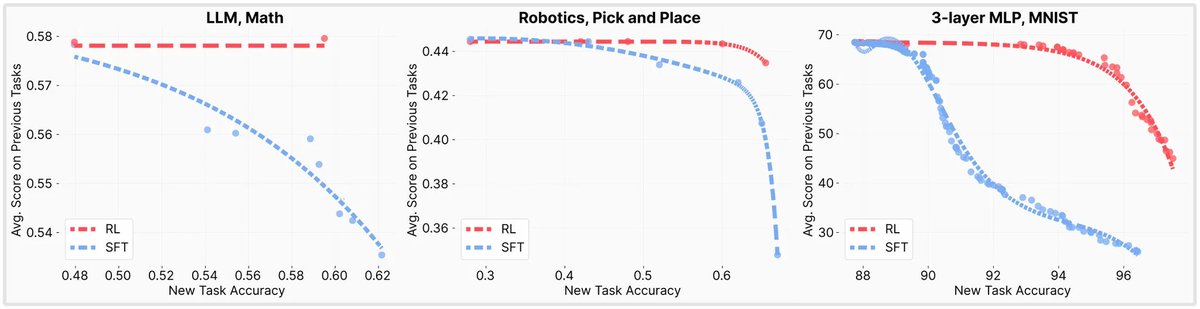

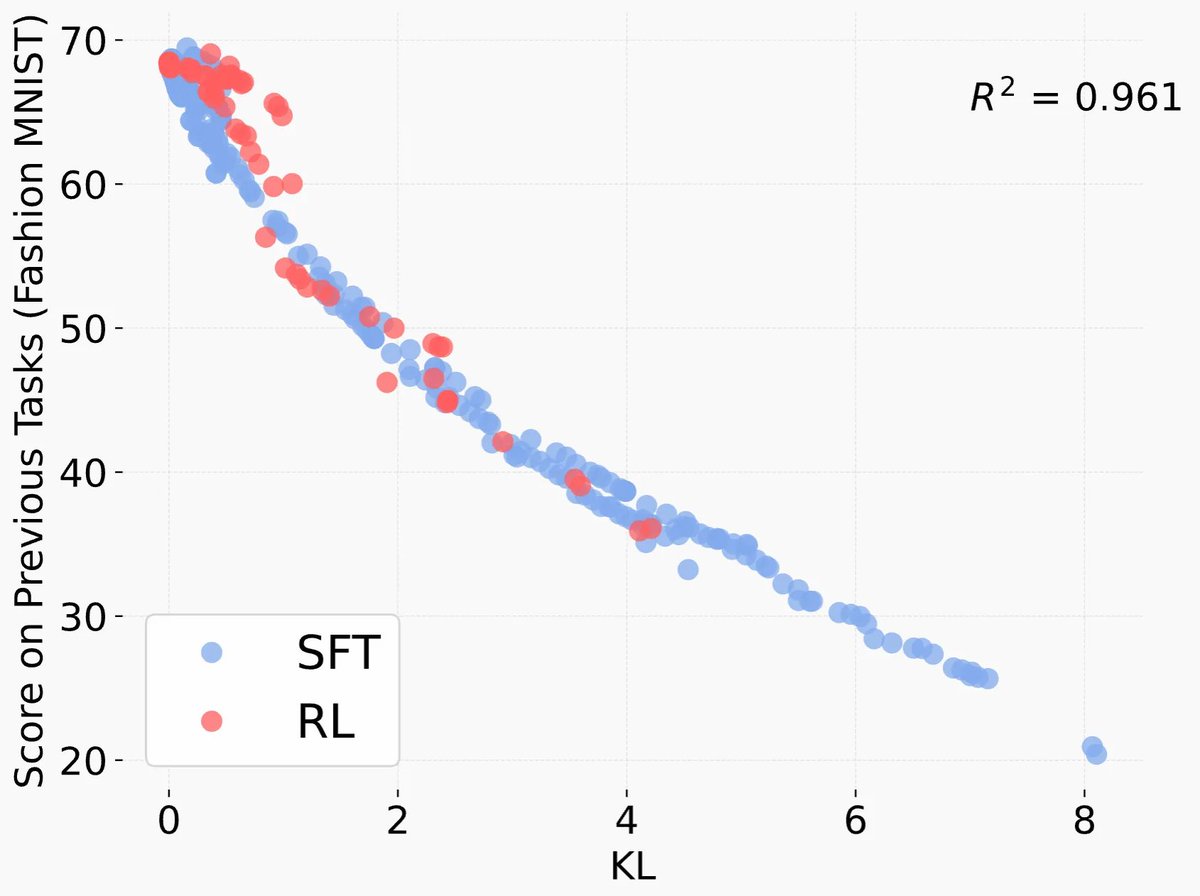

Limitations / Future Work: One of our original motivations was to work towards the ultimate goal of continual learning: think about agents continually self-adapting based on their interactions in an environment. While SEAL doesn't explicitly train for this, we were still curious to see how much performance degrades on previous tasks over sequential self-editing. Here, a column is performance on a particular passage over the course of sequential self-edits. We find performance does decrease on prior tasks, warranting future work.
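
A sequential self-editing evaluation of this kind could be sketched as below. This is a guess at the protocol's shape, not the actual evaluation code: `apply_self_edit` and `evaluate` are hypothetical callables, and scoring every passage after every update (including ones not yet seen) is an assumption.

```python
from typing import Any, Callable, List


def forgetting_matrix(
    model: Any,
    passages: List[str],
    apply_self_edit: Callable[[Any, str], Any],  # model, passage -> updated model (hypothetical)
    evaluate: Callable[[Any, str], float],       # model, passage -> score on its questions (hypothetical)
) -> List[List[float]]:
    """Row i: scores after the i-th sequential self-edit. Column j: passage j."""
    rows: List[List[float]] = []
    for passage in passages:
        # Updates are applied one after another, with no reset in between.
        model = apply_self_edit(model, passage)
        rows.append([evaluate(model, p) for p in passages])
    return rows
```

Reading down a column shows how performance on one passage changes as later self-edits are applied; a drop below the value on the diagonal indicates forgetting.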

@AdamZweiger and I had an amazing group to help us. Huge thanks to @HanGuo97 and @akyurekekin for the invaluable guidance throughout this project, and to @yoonrkim and @pulkitology for being incredible advisors.

Paper: arxiv.org/abs/2506.10943

Website: jyopari.github.io/posts/seal

Code: github.com/Continual-Inte…
