METR’s analysis of this experiment is wildly misleading. The results indicate that people who have ~never used AI tools before are less productive while learning to use the tools, and say ~nothing about experienced AI tool users. Let's take a look at why.

https://x.com/METR_Evals/status/1943360406124114187

I immediately found the claim suspect because it didn't jibe with my own experience working with people using coding assistants. Sometimes there are surprising results, though, so I dug in. The first question: who were the developers in this study getting such poor results?

“We recruited 16 experienced open-source developers to work on 246 real tasks in their own repositories (avg 22k+ stars, 1M+ lines of code).” So they sound like reasonably experienced software devs.

"Developers have a range of experience using AI tools: 93% have prior experience with tools like ChatGPT, but only 44% have experience using Cursor." Uh oh. So most of them haven't actually used AI coding tools; they've just tried prompting an LLM to write code for them. That's an entirely different kind of experience, as anyone who has used these tools can tell you.

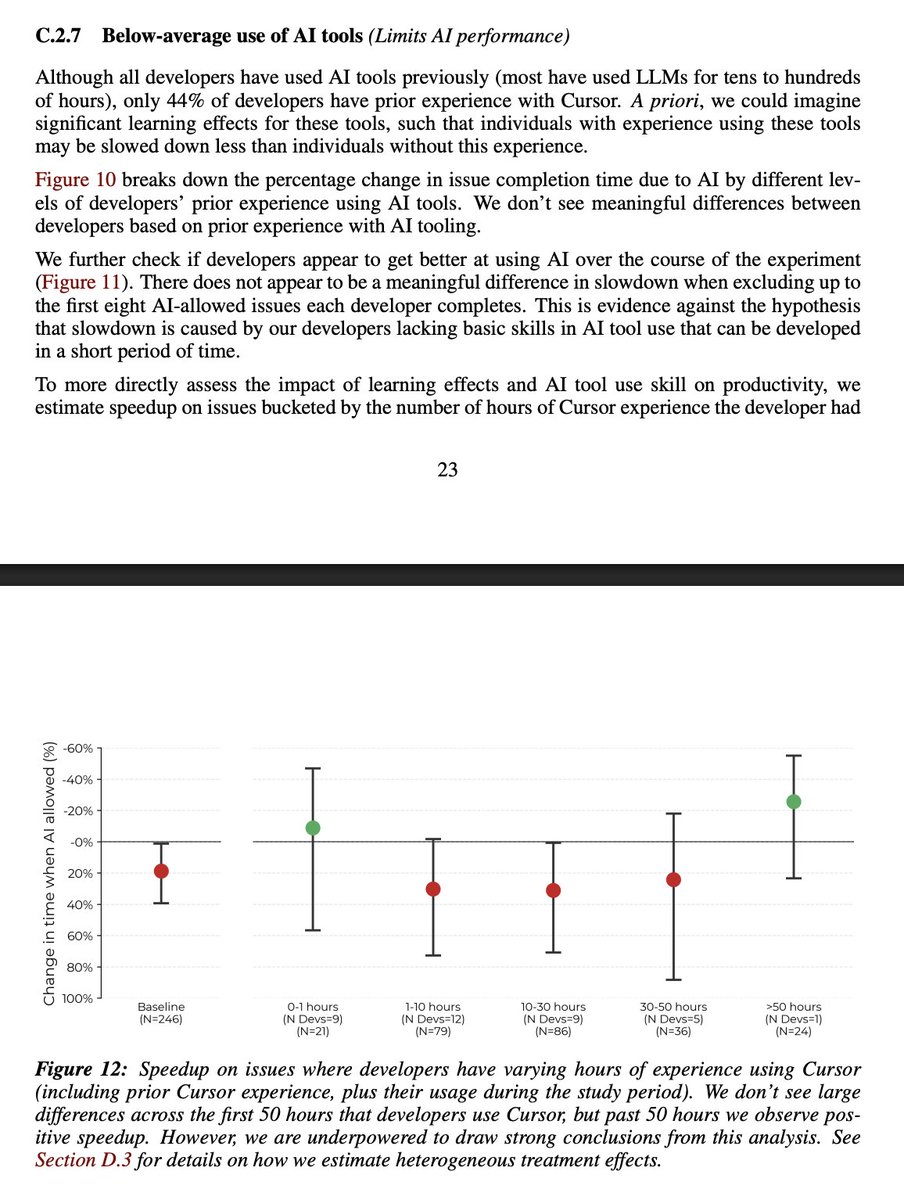

They claim "a range of experience using AI tools", yet only one of their sixteen developers had more than a week of experience using Cursor. They make it look like a range by splitting "less than a week" into <1 hr, 1-10 hrs, 10-30 hrs, and 30-50 hrs of experience. Given the long, steep learning curve for using these new AI tools effectively, this division betrays what I hope is just grossly negligent ignorance of that reality, rather than intentional deception.

Of course, the one developer who did have more than a week of experience was 20% faster instead of 20% slower. The authors note this fact, but then say “We are underpowered to draw strong conclusions from this analysis” and bury it in a figure’s description in an appendix.

If the authors had claimed, "We tested experienced developers using AI tools for the first time and found that, at least during the first week, they were slower rather than faster," that would have been a true and modestly interesting finding. Alas, that is not the claim they made.

METR published and promoted this paper with a provocative, misleading headline, summary, and body. They buried the fact that the single experienced developer DID see significant gains, contradicting the headline. I hope the authors withdraw this paper or, at the very least, update it to limit the claims.

The study does appear mostly well designed in other respects, although I would want to audit it carefully before accepting anything at face value. It does seem that developers and experts overestimate how much value a developer will get from using an AI tool during the first week they use it.

A more interesting study would include inexperienced devs, devs who already use the tools, and devs who have invested work into optimizing their use of the tools. Perhaps someone with experience using the tools could design such a future study?

It's now clear that the source of disagreement is this: I think using Cursor effectively is a distinct skill from talking to ChatGPT while you program and expect fairly low transfer between the two, while the authors think it's a similar skill and expect much higher transfer.

https://x.com/idavidrein/status/1944892081769734559

I think conflating the two completely invalidates the study's headline and summary results. I suppose the future will tell if this is the case. I'm glad to have found the underlying disagreement.

Apparently many of the developers the paper lists as having less than 50 hours of experience actually had more? If true, this basically invalidates all findings in the paper, since it indicates flaws in the data collection process.

https://x.com/joel_bkr/status/1945173623284617370
