A new class action copyright lawsuit against Anthropic exposes it to a billion-dollar legal risk.

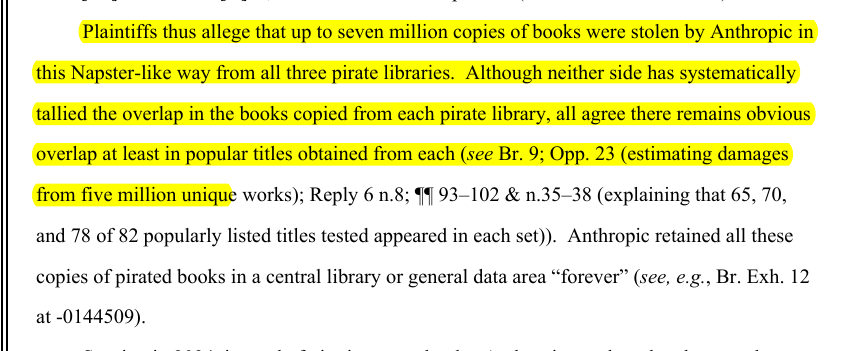

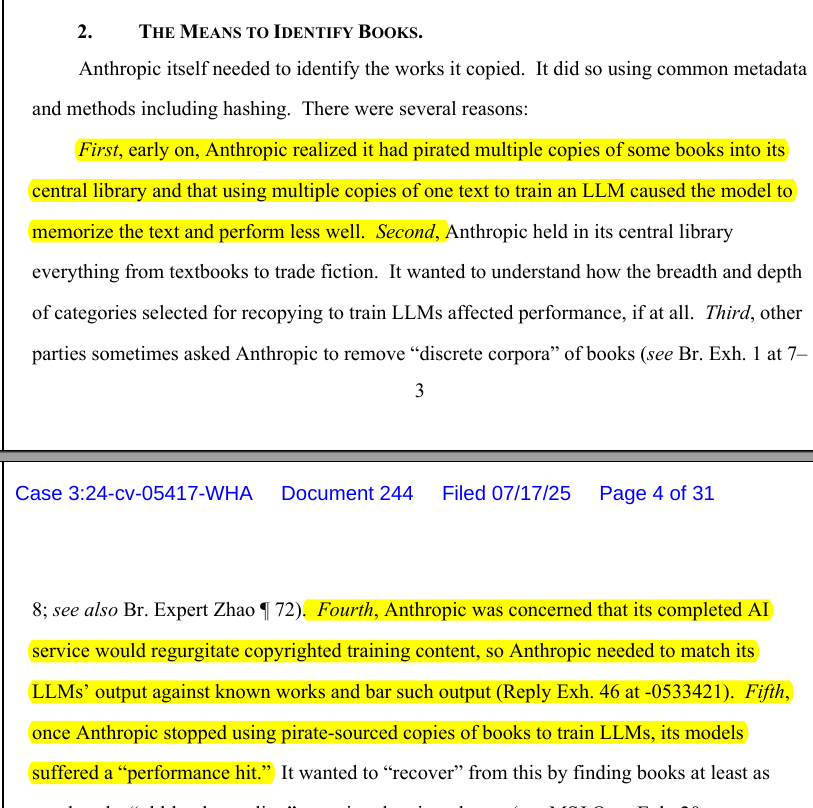

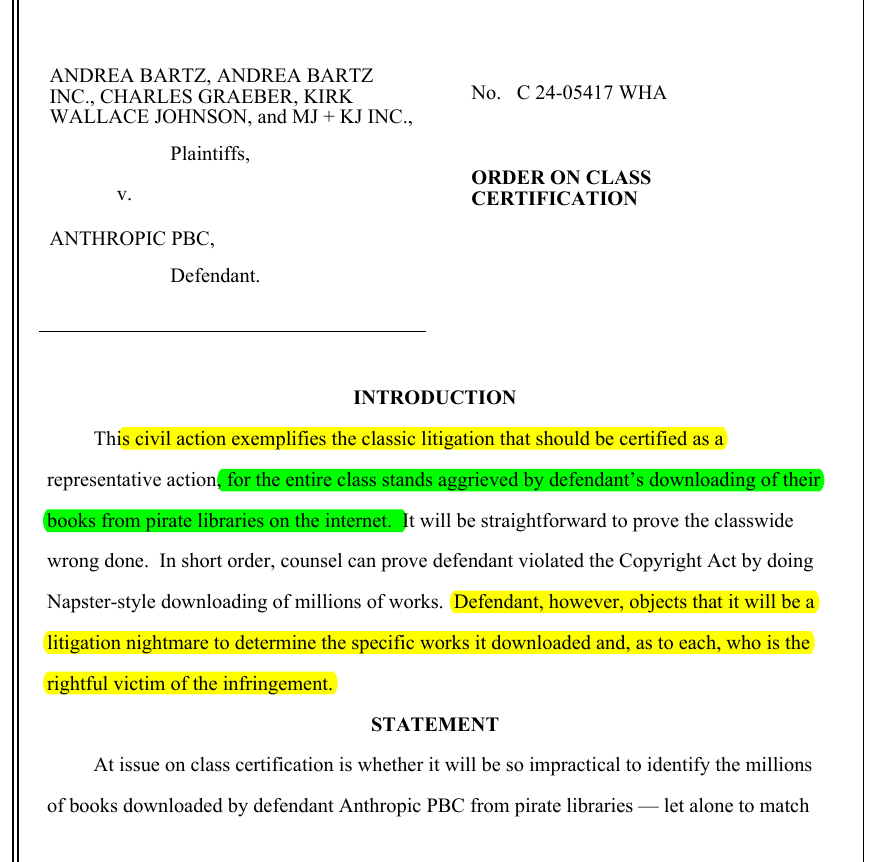

Judge William Alsup called the haul “Napster-style”. He certified a class for rights-holders whose books sat in LibGen and PiLiMi, because Anthropic’s own logs list the exact titles.

The order says storing pirated files is not fair use, even if an AI later transforms them. Since the law allows statutory damages of up to $150,000 per willfully infringed work, copying this many books could cost Anthropic more than $1bn.
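As a rough illustration of that arithmetic, here is a minimal sketch that works out how many works would have to draw the maximum willful award before the total passes $1bn. The $150,000 ceiling comes from the thread. The function names and the 7,000-work figure in the usage note are hypothetical, chosen only for illustration. Actual awards are set per work by the court or jury and can be far below the ceiling.

```python
# Sketch only: upper-bound statutory damages arithmetic, not a prediction.
MAX_WILLFUL_PER_WORK = 150_000      # ceiling per willfully infringed work (from the thread)
TARGET_EXPOSURE = 1_000_000_000     # the "$1bn" figure from the thread


def works_needed(target: int, per_work: int) -> int:
    """Smallest number of works whose combined award reaches `target`."""
    return -(-target // per_work)   # integer ceiling division


def max_exposure(num_works: int, per_work: int = MAX_WILLFUL_PER_WORK) -> int:
    """Upper bound on damages if every work drew the same per-work award."""
    return num_works * per_work


if __name__ == "__main__":
    print(works_needed(TARGET_EXPOSURE, MAX_WILLFUL_PER_WORK))
    print(max_exposure(7_000))
```

At the ceiling, about 6,667 works are enough to cross $1bn, and a hypothetical 7,000 works would cap out at $1.05bn.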

Anthropic must hand over a full metadata list by 8/1/2025. Plaintiffs must then file their matching copyright registrations by 9/1. Those deadlines will drive discovery and push the case toward a single jury showdown.

Other AI labs, which also face lawsuits for training on copyrighted books, can no longer point to the usual “fair use” excuse if any of their data came from pirate libraries. Judge Alsup spelled out that keeping pirated files inside an internal archive is outright infringement, even if the company later transforms the text for model training.
