Compiling in real-time, the race towards AGI.

The Largest Show on X for AI.

🗞️ Get my daily AI analysis newsletter to your email 👉 https://t.co/6LBxO8215l

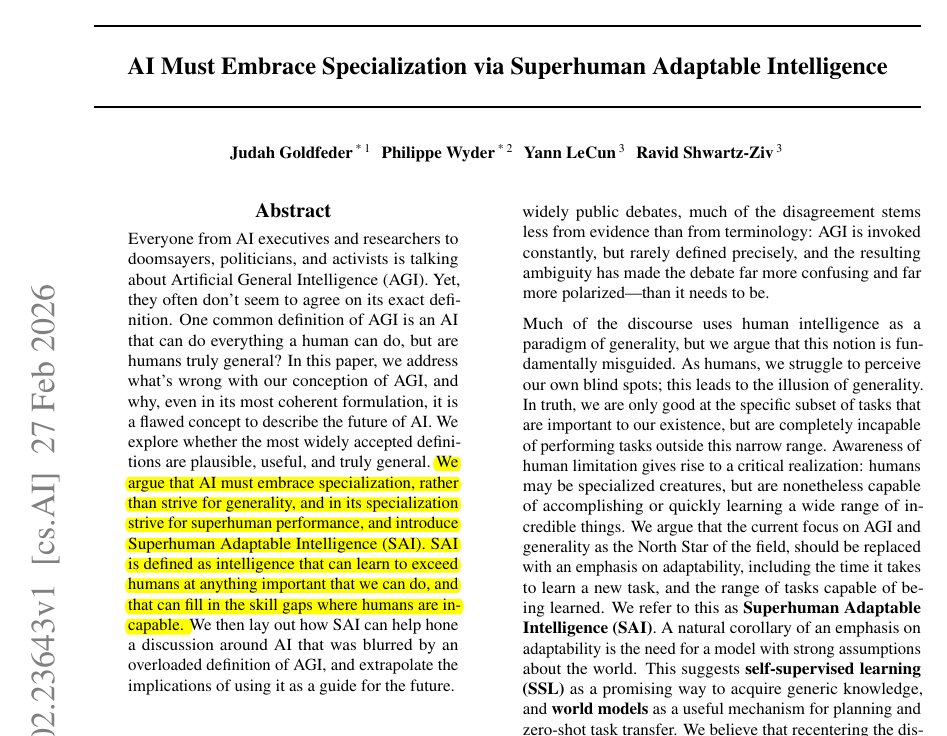

This visual maps different AI goals to show how adaptable intelligence completely beats older performance ideas.

https://x.com/rohanpaul_ai/status/2027531960214786110

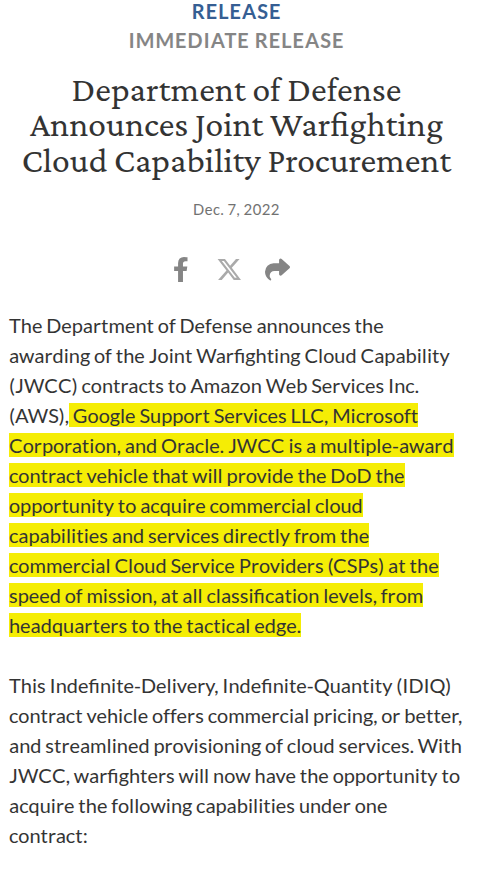

war.gov/News/Releases/…

github.com/qwibitai/nanoc…

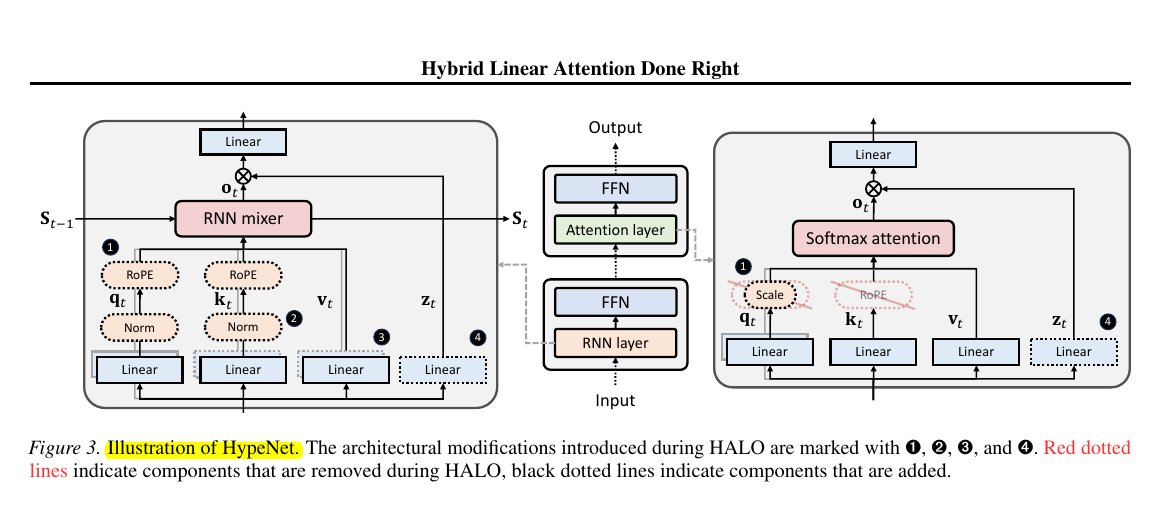

🧵 2. The diagram compares a standard Transformer attention block on the right with the “hybrid” replacement block on the left.

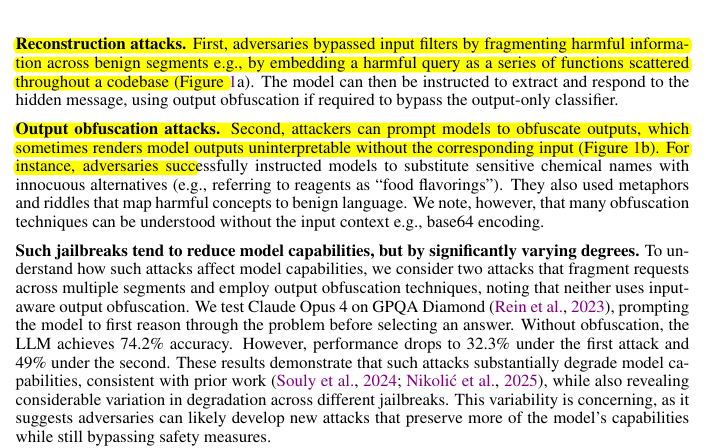

🧩 The core problem

🧠 The idea

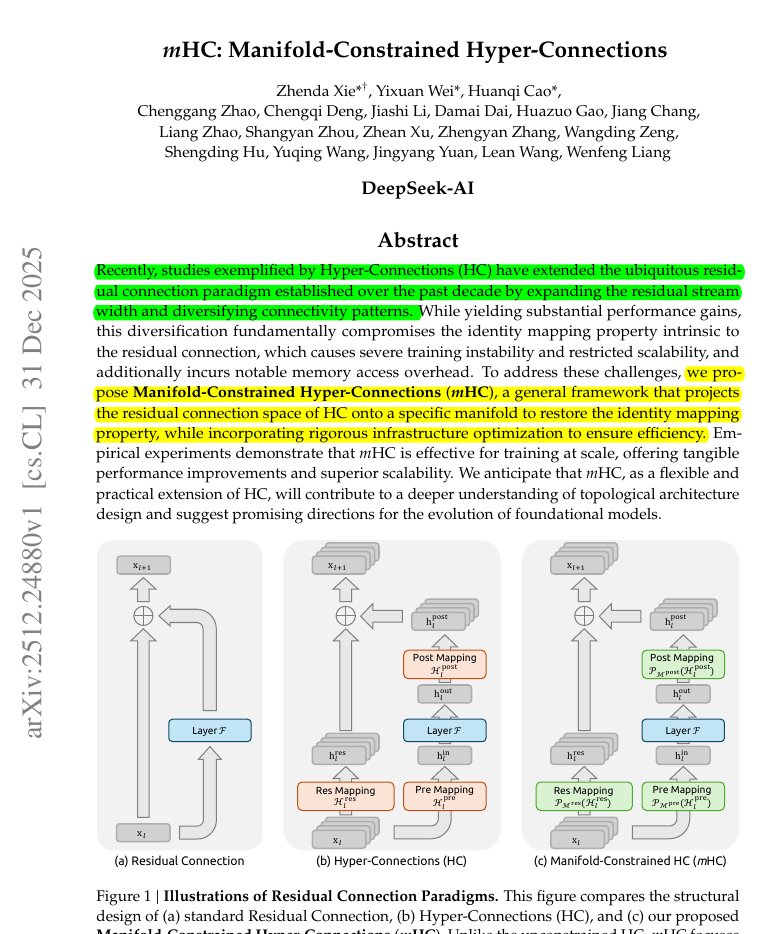

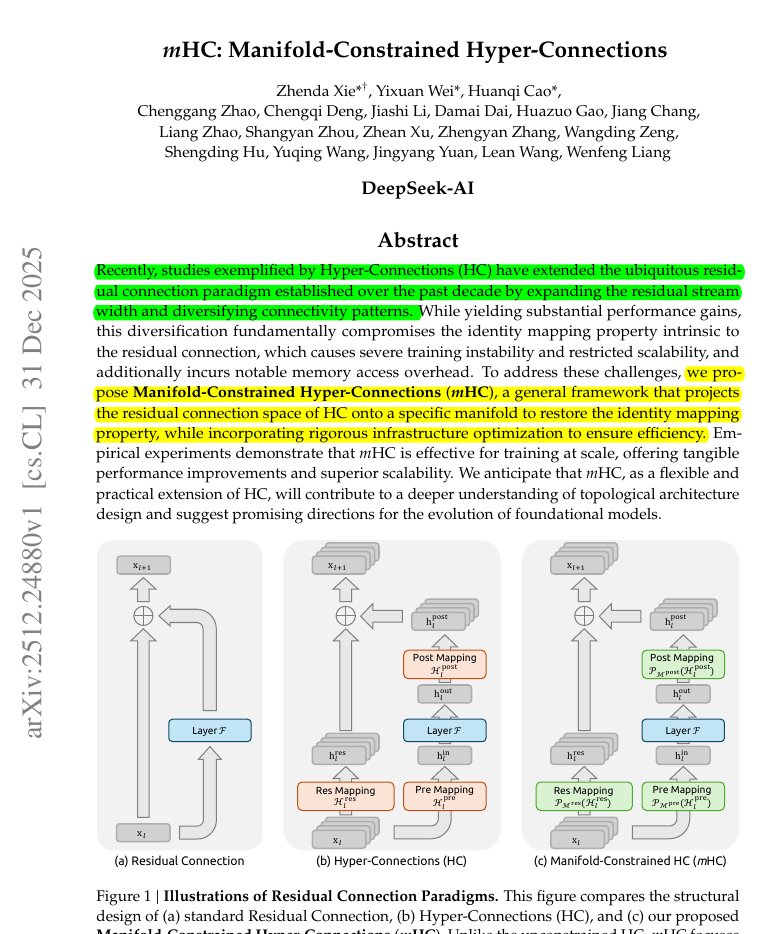

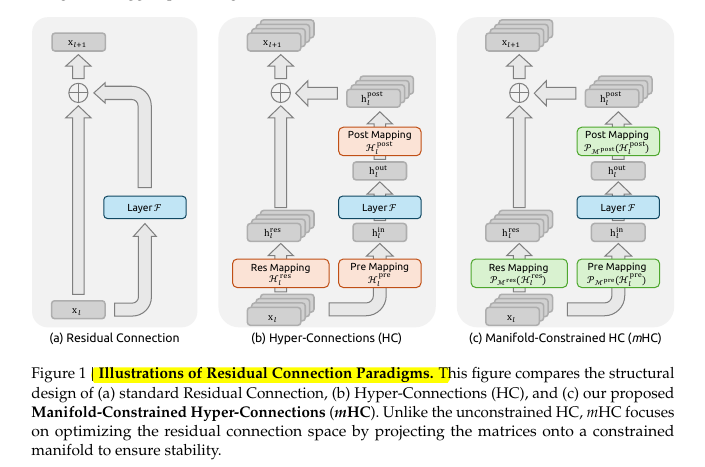

This image compares 3 ways to build the shortcut path that carries information around a layer in a transformer.

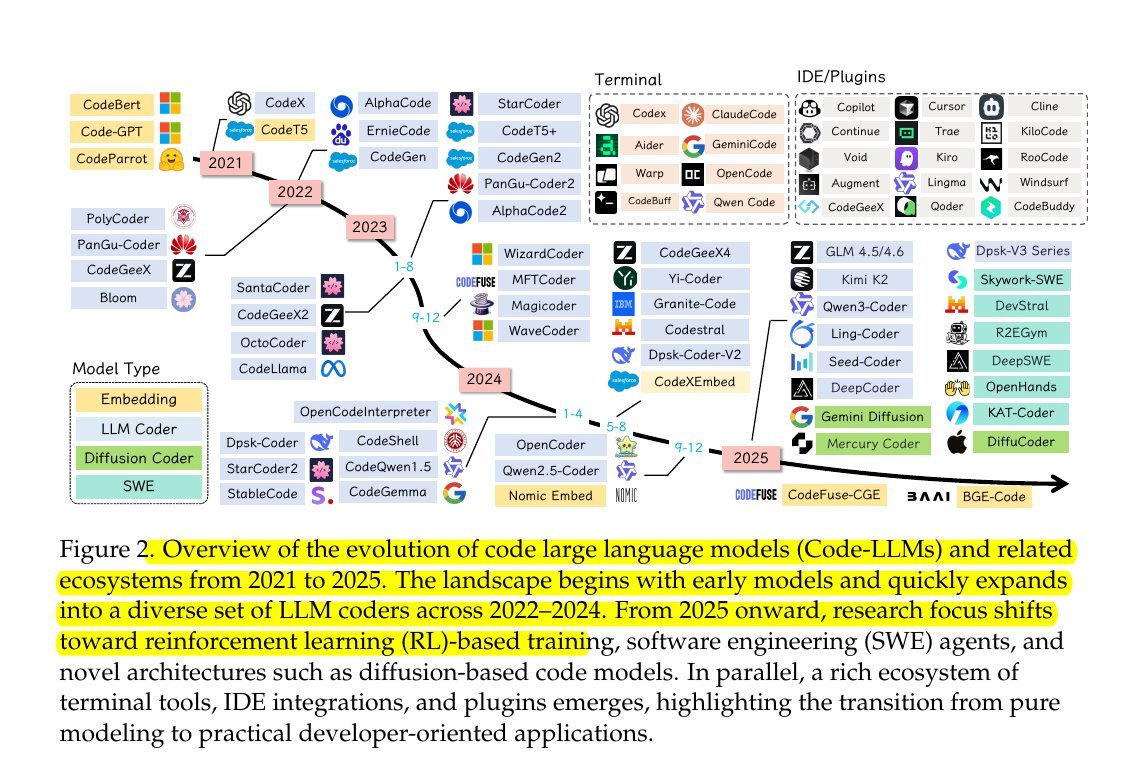

Overview of the evolution of code large language models (Code-LLMs) and related ecosystems from 2021 to 2025.

🧵2/n. ⚙️ The Core Concepts

🧵2/n. The 3 steps used to train Trading-R1.

Average accuracy and range across 10 runs for five different tones

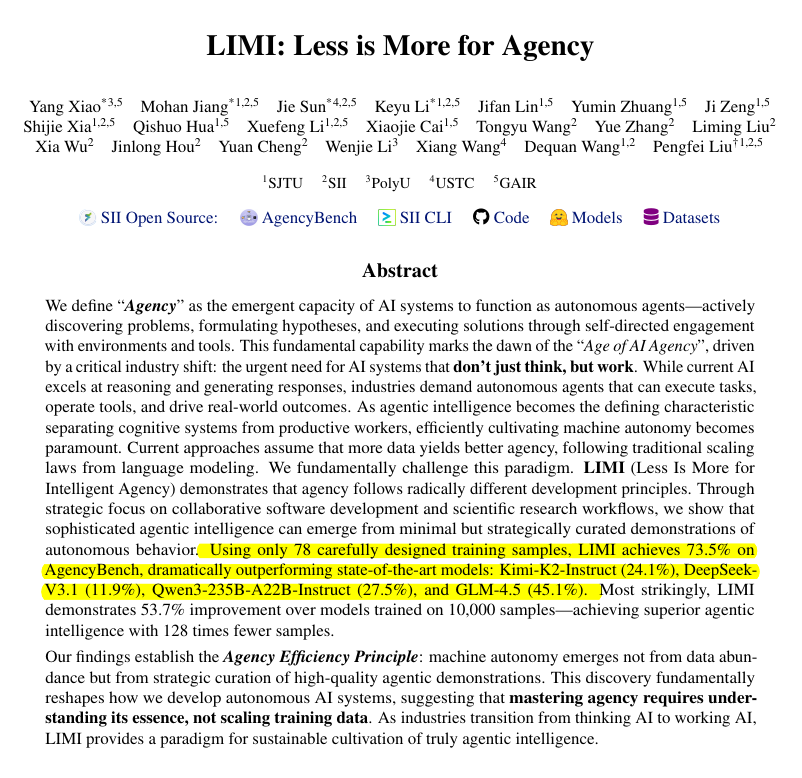

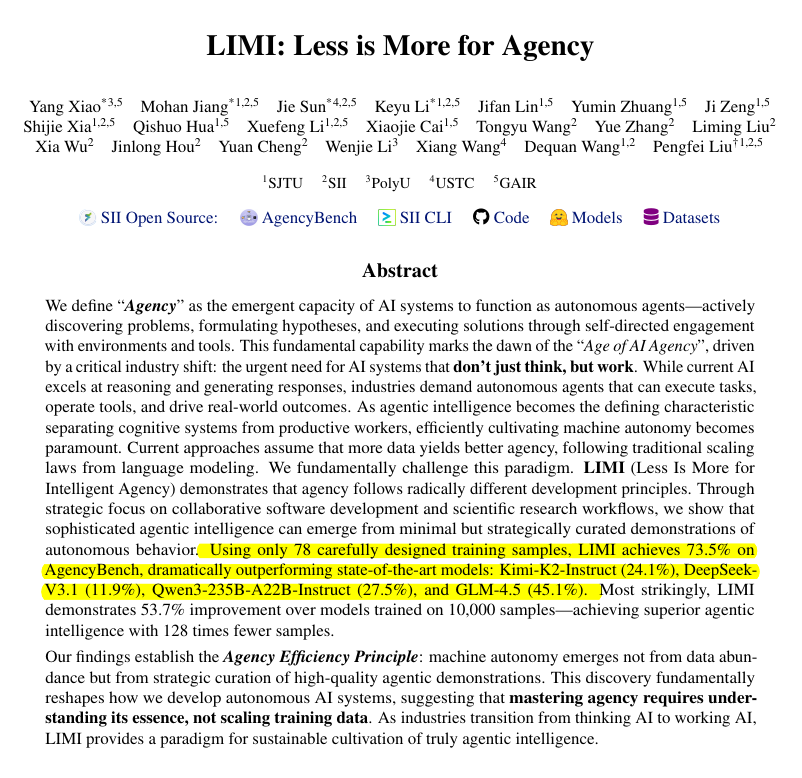

🧵2/n. In summary, how LIMI (Less Is More for Intelligent Agency) scores so high with just 78 examples.

🧵2/n. ⚙️ The Core Idea

🧵2/n. The figure below shows that high scores on medical benchmarks can mislead: stress tests reveal that current models often rely on shallow tricks and cannot be trusted for reliable medical reasoning.
