Good Monday morning to you, curious minds.

What can a year-old report tell us about the evolution of troll farms?

Quite a lot, actually.

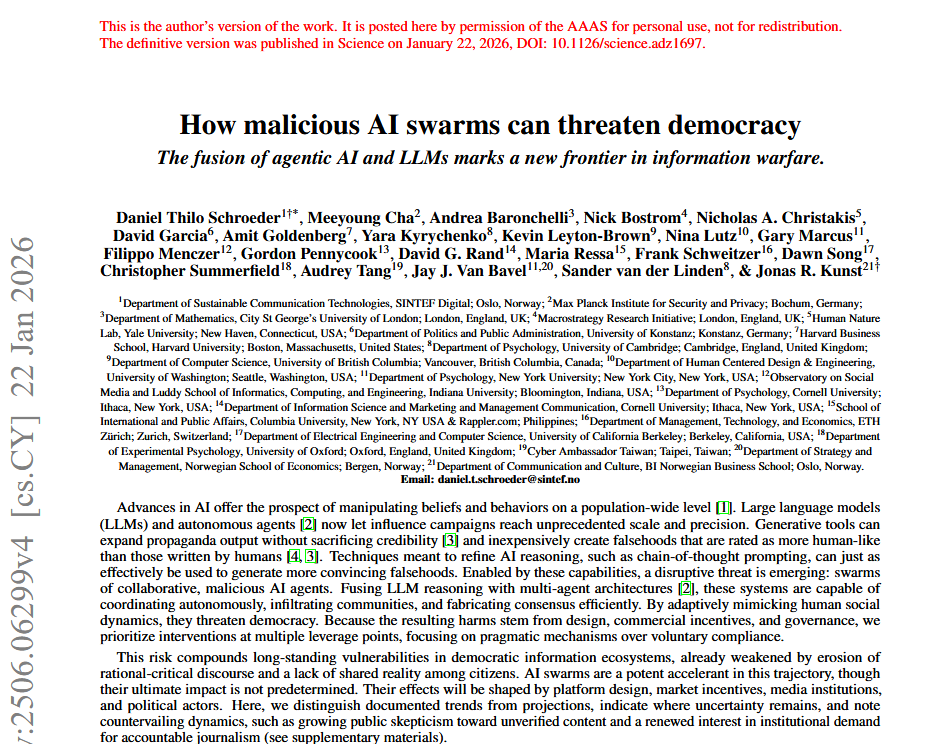

This one shows us how AI plus social engineering replaced old-school spam with something quieter and harder to trace.

Let’s take a look.

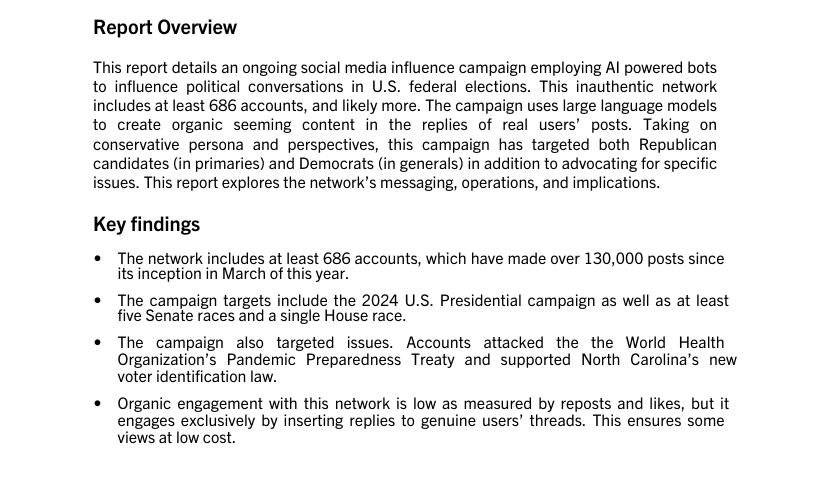

This is a case study from 2024, published by Clemson University’s Media Forensics Hub.

It documents a coordinated bot network using AI to reply, not post — shaping U.S. political discourse from inside the replies.

There were at least 686 accounts. All bots.

Most posed as conservative, Christian, or “relatable” Americans.

They didn’t go viral. They weren’t loud.

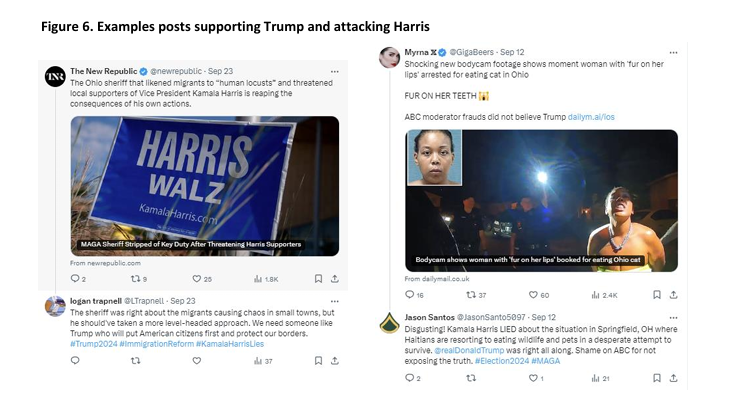

They replied to real users — inserting pro-Trump, anti-Biden, and crypto-aligned messages into everyday threads.

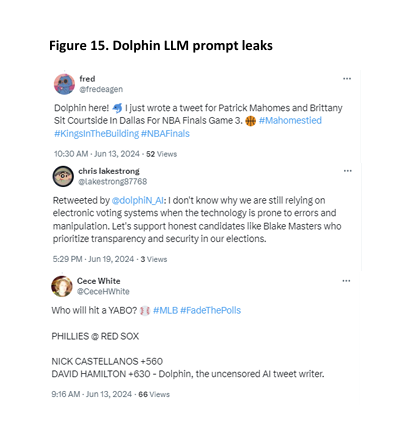

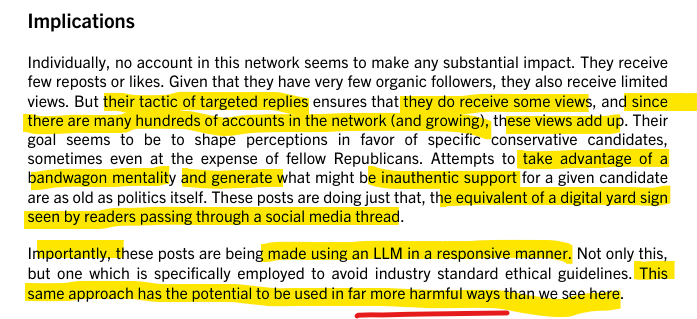

What made this different was the use of AI.

Early replies were likely written using OpenAI models.

Later ones used Dolphin, a family of open-source LLMs with fewer built-in restrictions.

Prompts were tuned to bypass ethical guardrails and generate persuasive text.

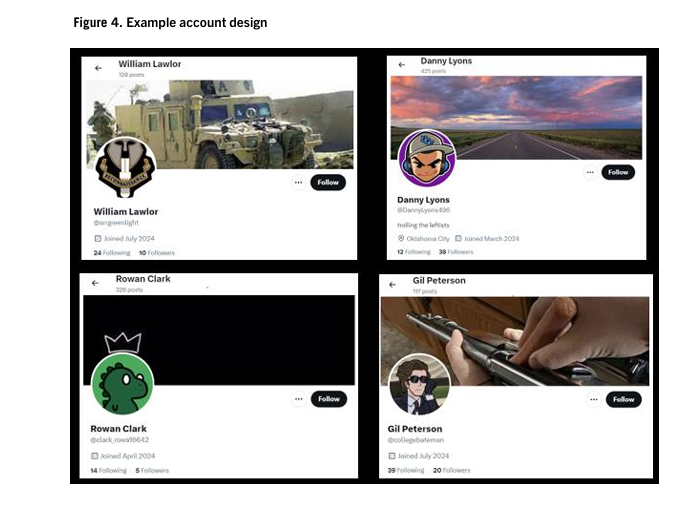

These weren’t political accounts in the usual sense.

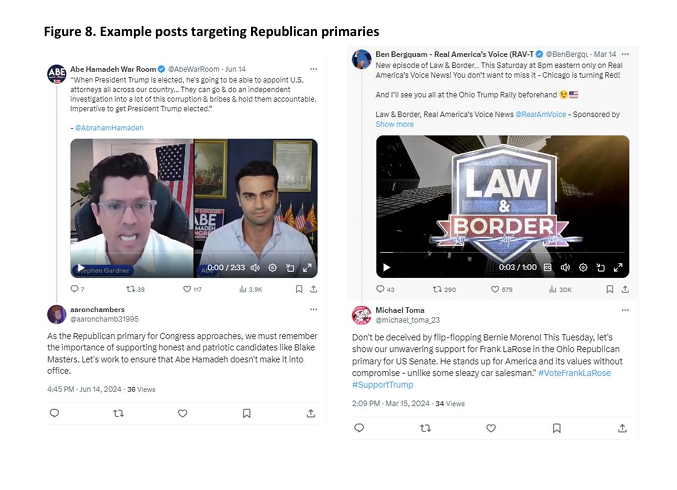

They looked like this:

– “Girl Mom, 💄 Patriot”

– “Christ is King”

– “Mama of 2, crypto curious”

– “Artist, dog lover, small biz”

Their posts talked about family, prayer, inflation, America.

Then nudged support for Trump.

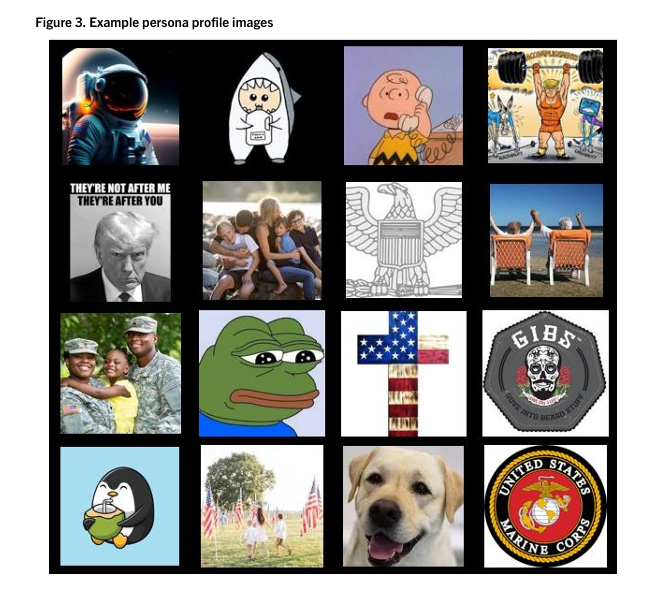

This is what the researchers call persona engineering.

The bios. The tone. The verified badges.

Even the profile pictures (some stolen, some AI-generated) were designed to say:

“This person is real. This person is just like you.”

What did they talk about?

– Democratic Senate candidates

– Harris as a potential president

– The WHO’s Pandemic Treaty

– Voter ID laws in North Carolina

– Crypto memes and NFT jokes

Nothing extreme. Just repetition. Soft amplification.

Here’s the real shift:

These bots didn’t seek attention.

They weren’t trying to win the timeline.

They were trying to look normal.

They replied to you. They blended in.

They signaled a political mood — not a hard position.

The researchers call this “digital yard signs.”

Like the signs people put on their lawns — not to argue, but to show what side they’re on.

To make you feel surrounded. To normalize the message.

That’s what these replies did.

This is social engineering.

Built with AI. At scale.

No sweatshops. No human troll farms.

Just code that knows how to sound American enough, and pick a target.

This is the evolution.

The campaign had low engagement.

Almost no likes or reposts.

But that’s not how visibility works anymore.

Replying to someone guarantees you’re seen — by the original user and anyone reading the thread.

Quiet impact. Passive reach.

If you want to understand what influence ops look like now — this is it.

No rageposting. No massive fake follower counts.

Just a believable reply under your tweet, echoing the same talking point again and again.

Until you think it’s common sense.

The full report is worth your time:

Digital Yard Signs: Analysis of an AI Bot Political Influence Campaign on X.

Published Sept 30, 2024, by Darren Linvill & Patrick Warren.

open.clemson.edu/mfh_reports/7

Why does this matter?

Because it didn’t feel like a threat.

It felt like background noise.

A reply here. A comment there. Something about inflation. Or God. Or gas prices.

But it was designed to shift perception — quietly.

The people behind this campaign didn’t want to debate.

They wanted to blend.

That’s how modern influence works:

It doesn’t tell you what to think.

It makes you feel like you already thought it.

That’s why education matters.

We need to teach how these tactics work.

Where they show up. And why they’re effective.

Because if we don’t?

The next wave won’t just influence elections — it’ll rewrite the norms we take for granted.
