"How do you make money?"

"Why?"

We've been asked this question for months. It highlights our contrarian business model: aligning our incentives with those of our users to build the best coding agent.

(and ensuring long-term profitability)

🧵

https://twitter.com/262484988/status/1950972156873216121

The question reveals the trap every AI coding tool falls into.

They all make money the same way: reselling AI inference at a markup. Buy wholesale, sell retail. The gas station that also built your car.

When you profit from AI usage, you're incentivized to either:

>charge more per token than it costs

>hide actual usage behind confusing credits

>route to cheaper models without telling users

Every decision optimizes for margin, not capability.

The math is brutal.

One power user coding with Claude can burn $500/day in AI costs. On a $200/month subscription.

The only options: limit usage or go bankrupt. That's why "unlimited" plans keep getting limited.
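To make that math concrete, here is a rough back-of-the-envelope sketch. The $500/day and $200/month figures are the ones quoted above; the 30-day month and the function names are assumptions for illustration only.

```python
# Figures quoted in the thread; the 30-day month is an assumption.
DAILY_INFERENCE_COST = 500   # USD per day for one heavy user
SUBSCRIPTION_PRICE = 200     # USD per month, flat plan
DAYS_PER_MONTH = 30          # assumed: the user codes every day


def monthly_loss(daily_cost, price, days=DAYS_PER_MONTH):
    """Provider's monthly loss on one subscriber (positive means a loss)."""
    return daily_cost * days - price


def break_even_days(daily_cost, price):
    """Days of usage the subscription fee covers at this burn rate."""
    return price / daily_cost


print(monthly_loss(DAILY_INFERENCE_COST, SUBSCRIPTION_PRICE))     # 14800
print(break_even_days(DAILY_INFERENCE_COST, SUBSCRIPTION_PRICE))  # 0.4
```

Under those assumptions, the monthly fee is used up in under half a day, and the provider absorbs roughly $14,800 for that one user.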

We built Cline differently.

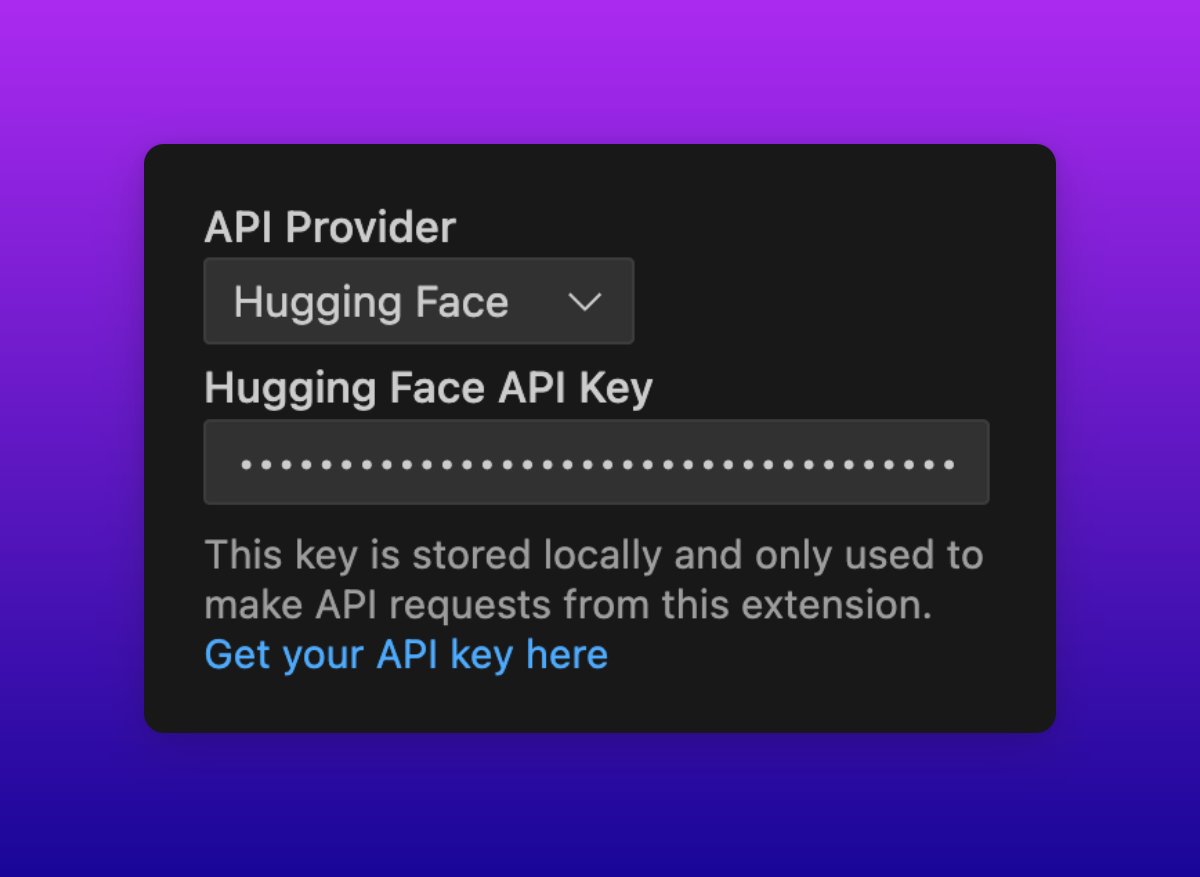

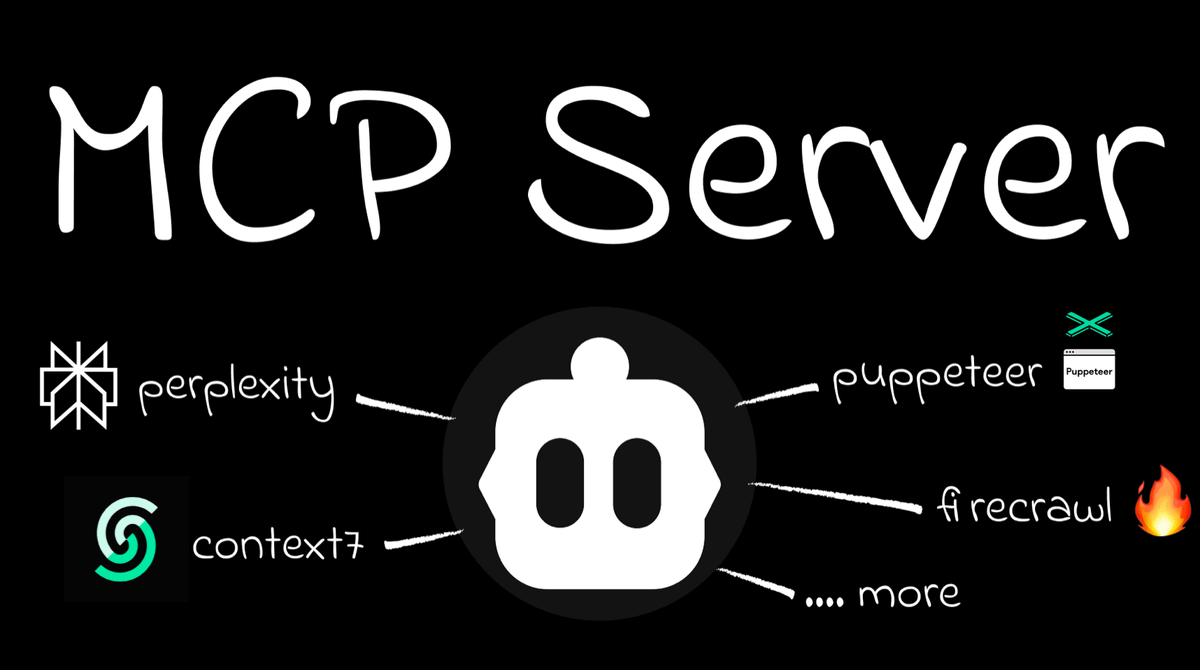

You provide your own API keys, use any model, and pay the actual cost.

We earn from enterprise features requested by organizations -- such as team management, access controls, and audit trails -- not by marking up your AI usage.

This architecture makes betrayal impossible.

We can't throttle you in code you can read.

We can't route to cheaper models -- you choose.

We can't create artificial scarcity -- your limits are what you set.

When AI inference isn't our business model, our only path to success is making Cline more capable.

Not finding ways to give you less.

Not optimizing for margins.

Just building the best possible coding agent.

The future is clear: direct usage-based pricing.

When you can arbitrage a subscription in a commodity market, the market wins. Every AI coding tool will eventually converge on this model.

We just got there first.

2.7M developers have already figured this out.

Fortune 100 enterprises choose us because we're the only option that passes Zero Trust compliance. Your code never touches our servers.

Read why investors bet $32M on this approach and how we see the future unfolding:
