How to get URL link on X (Twitter) App

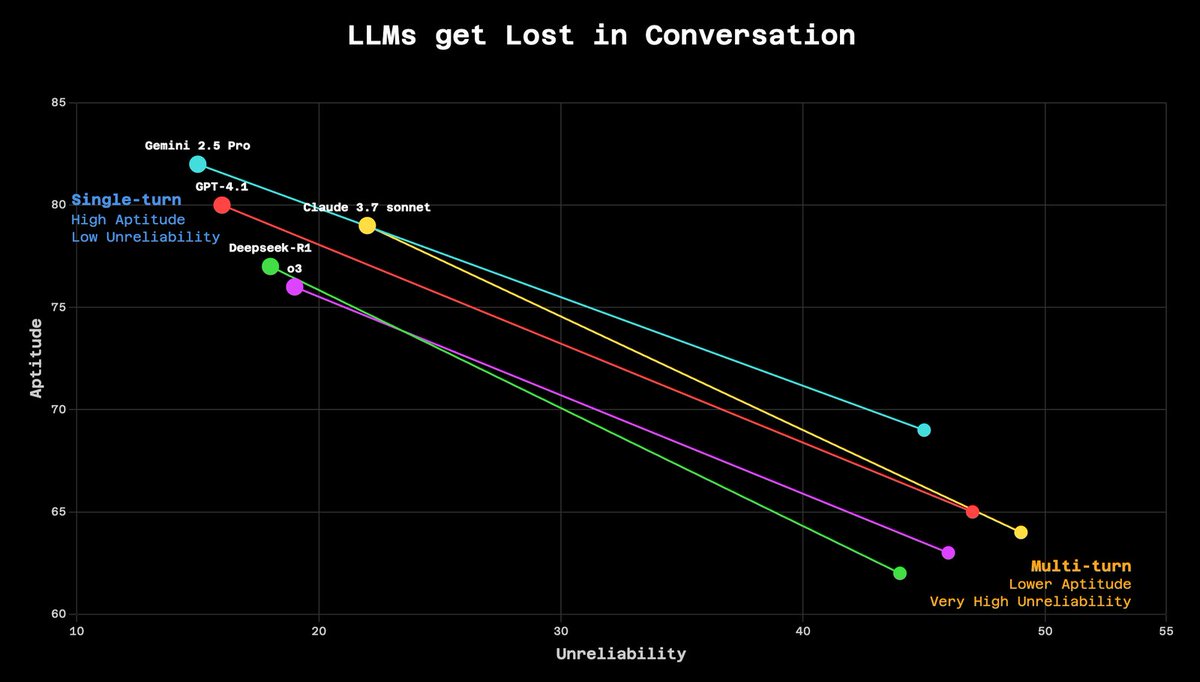

https://twitter.com/262484988/status/1950972156873216121

The question reveals the trap every AI coding tool falls into.

https://twitter.com/1353836358901501952/status/1949898502688903593

The pattern is predictable:

1. Supercharge your AI's knowledge with the Perplexity MCP. Give Cline access to the entire web for up-to-date research, so you never have to leave your editor to find answers.

This is why we're seeing so much developer tool fatigue.

Internally, and with our earliest users, we noticed a pattern. As the AI got more capable, people would instinctively say "wait, don't code yet" or "let me see a plan first." They needed a brake pedal for an AI that was too eager to help.

https://twitter.com/1605/status/1932547247243505924

We made a deliberate choice:

https://twitter.com/1875995486110593024/status/1932513639015329822

https://twitter.com/3448284313/status/1930984834454712537

We've been fine-tuning how Cline works with Claude 4, focusing on search/replace operations. The latest optimizations use improved delimiter handling that's showing great results in our testing.
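
To make the idea of a delimited search/replace edit concrete, here is a minimal sketch in Python. It is not Cline's implementation: the delimiter strings and the `parse_block` and `apply_block` helpers are hypothetical, chosen only to illustrate why precise delimiter handling matters when a model emits an edit as text.

```python
# Hypothetical delimiters for illustration only; not Cline's actual format.
SEARCH = "<<<<<<< SEARCH"
DIVIDER = "======="
REPLACE = ">>>>>>> REPLACE"


def parse_block(block: str) -> tuple[str, str]:
    """Split an edit block into (search_text, replace_text)."""
    lines = block.splitlines()
    try:
        start = lines.index(SEARCH)
        mid = lines.index(DIVIDER, start + 1)
        end = lines.index(REPLACE, mid + 1)
    except ValueError as exc:
        # A missing or misordered delimiter makes the edit ambiguous.
        raise ValueError("malformed search/replace block") from exc
    search = "\n".join(lines[start + 1:mid])
    replace = "\n".join(lines[mid + 1:end])
    return search, replace


def apply_block(original: str, block: str) -> str:
    """Apply one edit, refusing to guess when the match is not unique."""
    search, replace = parse_block(block)
    if original.count(search) != 1:
        raise ValueError("search text must match exactly once")
    return original.replace(search, replace, 1)
```

Requiring exactly one match is a design choice in this sketch: an edit that matches zero or several locations is rejected rather than applied to the wrong place.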

The industry default: chunk your codebase, create embeddings, store in vector databases, retrieve "relevant" pieces.
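
The four steps of that default pipeline can be sketched in a few lines of Python. This is a toy under stated assumptions: `embed` uses bag-of-words counts as a stand-in for a real embedding model, the "vector database" is an in-memory list, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_lines(source: str, size: int = 3) -> list[str]:
    """Step 1: split the source into fixed-size chunks of `size` lines."""
    lines = source.splitlines()
    return ["\n".join(lines[i:i + size]) for i in range(0, len(lines), size)]


def embed(text: str) -> Counter:
    """Step 2: toy embedding (word counts), standing in for a real model."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(source: str, size: int = 3) -> list[tuple[str, Counter]]:
    """Step 3: store (chunk, vector) pairs; a list stands in for a vector DB."""
    return [(chunk, embed(chunk)) for chunk in chunk_lines(source, size)]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 1) -> list[str]:
    """Step 4: return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]
```

Note that fixed-size chunking cuts wherever the line count says, not where a function or class ends, so a retrieved chunk can be similar to the query without being complete. That is one reason the word "relevant" carries scare quotes above.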

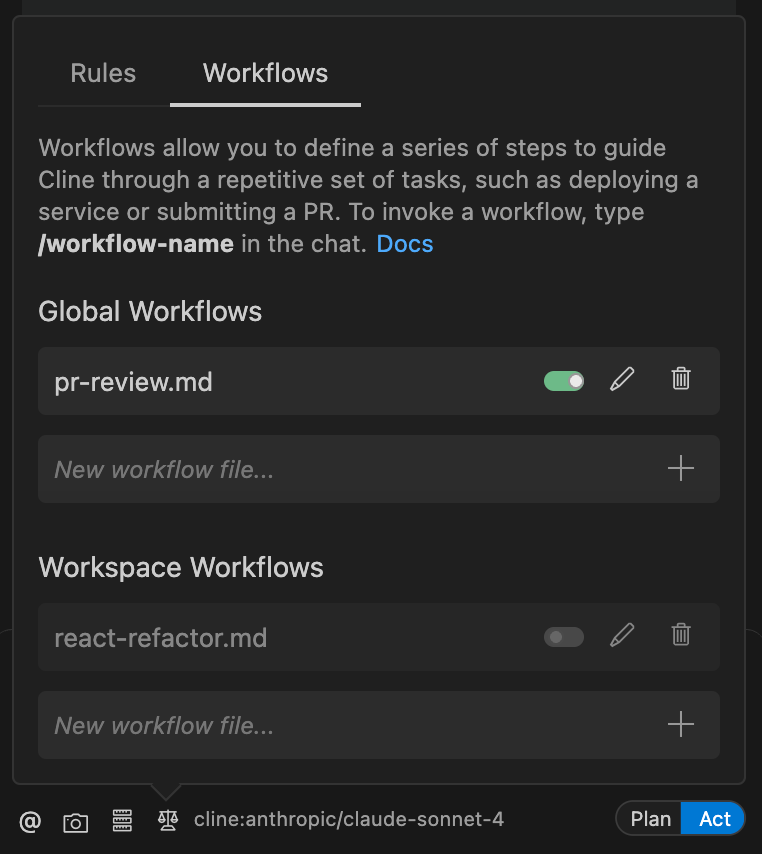

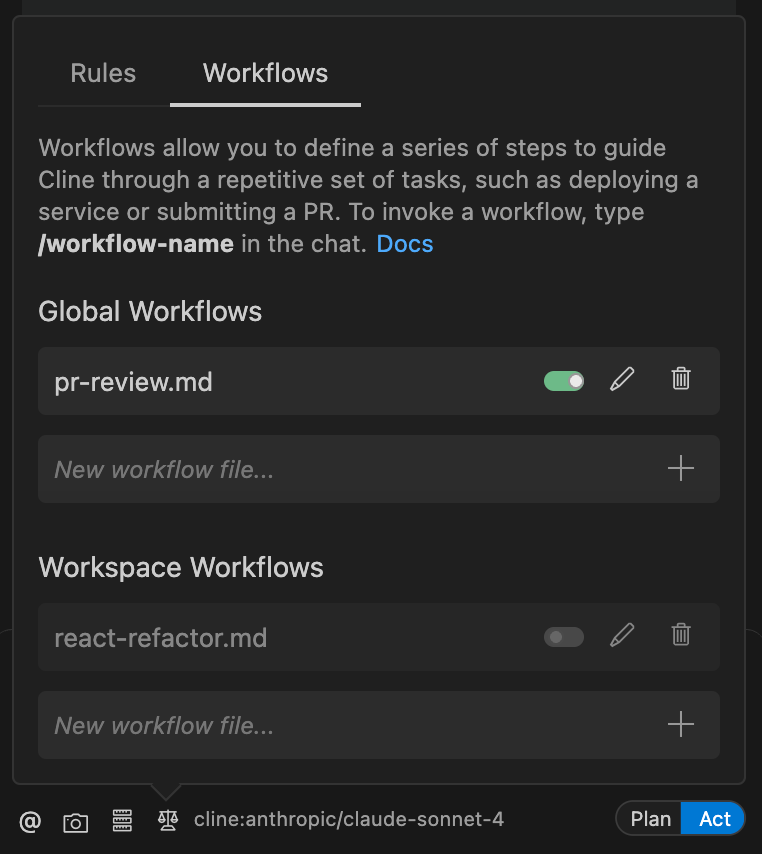

First up -- Task Timeline in v3.15. Now you can see exactly what Cline is doing with a visual "storyboard" right in your task header. Every tool call, every file edit, all laid out chronologically. Hover for details.
